Top Regulatory Compliance/KYC/AML Providers (2025)

Why Crypto Compliance, KYC/AML & Blockchain Analytics Vendors Matter in September 2025

If you operate an exchange, wallet, OTC desk, or DeFi on-ramp, choosing the right KYC/AML providers can be the difference between smooth growth and painful remediation. In 2025, regulators continue to tighten enforcement (Travel Rule, sanctions screening, transaction monitoring), while criminals get more sophisticated across bridges, mixers, and multi-chain hops. This guide shortlists ten credible vendors that help crypto businesses verify users, monitor wallets and transactions, and comply with global rules.

Definition: KYC/AML providers are companies that deliver identity verification, sanctions/PEP screening, blockchain analytics, transaction monitoring, and Travel Rule tooling so crypto businesses can meet regulatory obligations and reduce financial crime risk.


How We Picked (Methodology & Scoring)

- What we scored (weights, totaling 100): market adoption & scale 30 (a proxy for coverage and volume handled), security posture 25 (audits, data protection, regulatory alignment), coverage 15 (chains, assets, jurisdictions), costs 15 (pricing transparency, efficiency), UX 10 (API, case management, automation), support 5 (docs, SLAs).

- Data sources: Only official product pages, security/trust centers, and documentation; widely cited market datasets were used only to cross-check asset/chain coverage. Last updated September 2025. (Sources: Chainalysis, TRM Labs.)
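
To make the weighting concrete, here is a minimal sketch of how the six weights above could be combined into a single 0-100 score. The criterion keys, the 0-to-1 rating scale, and the sample ratings are assumptions made for illustration; they are not the actual ratings behind this shortlist.

```python
# Weights from the methodology above (they sum to 100).
WEIGHTS = {
    "adoption": 30,
    "security": 25,
    "coverage": 15,
    "costs": 15,
    "ux": 10,
    "support": 5,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine per-criterion ratings (each 0.0 to 1.0) into a 0-100 score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= ratings[name] <= 1.0:
            raise ValueError(f"rating for {name} must be between 0 and 1")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical ratings for an imaginary vendor, purely for illustration.
example = {
    "adoption": 0.8,
    "security": 0.9,
    "coverage": 0.7,
    "costs": 0.5,
    "ux": 0.6,
    "support": 1.0,
}
print(round(weighted_score(example), 1))
```

Keeping the weights in one table makes it easy to re-rank vendors if your own priorities differ, for example if cost matters more to you than adoption.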

Top 10 Crypto Compliance, KYC/AML & Blockchain Analytics Vendors in September 2025

1. Chainalysis — Best for cross-chain transaction risk & investigations

Why Use It: Chainalysis KYT and Reactor pair broad chain/token coverage with real-time risk scoring and deep investigative tooling. If you need automated alerts on deposits/withdrawals and the ability to trace through bridges/mixers/DEXs, it’s a proven, regulator-recognized stack.

Best For: Centralized exchanges, custodians, banks with crypto exposure, law enforcement teams.

Notable Features: Real-time KYT alerts • Cross-chain tracing • Case management & APIs • Attribution datasets.

Consider If: You want an enterprise-grade standard and investigator workflows under one roof.

Alternatives: TRM Labs, Elliptic. (Source: Chainalysis.)

Regions: Global • Fees/Notes: Quote-based, volume/seat tiers.

2. TRM Labs — Best for fast-moving threat intel & sanctions coverage

Why Use It: TRM’s transaction monitoring taps a large, fast-growing database of illicit activity and extends screening beyond official lists to include threat actor footprints on-chain. Strong coverage and practical APIs make it easy to plug into existing case systems.

Best For: Exchanges, payment processors, fintechs expanding into web3, risk teams that need flexible rules.

Notable Features: Real-time monitoring • Sanctions & threat actor intelligence • Case mgmt. integrations • Multi-chain coverage.

Consider If: You prioritize dynamic risk models and frequent list updates.

Alternatives: Chainalysis, Elliptic. (Source: TRM Labs.)

Regions: Global • Fees/Notes: Enterprise contracts; volume-based.

3. Elliptic — Best for scalable wallet screening at exchange scale

Why Use It: Elliptic’s Lens and Screening solutions streamline wallet/transaction checks with chain-agnostic coverage and audit-ready workflows. It’s built for high-volume screening with clean APIs and strong reporting for regulators and internal audit.

Best For: CEXs, payment companies, institutional custody, risk ops needing bulk screening.

Notable Features: Wallet & TX screening • Cross-chain risk detection • Audit trails • Customer analytics.

Consider If: You need mature address screening and large-scale throughput.

Alternatives: Chainalysis, TRM Labs. (Source: Elliptic.)

Regions: Global • Fees/Notes: Quote-based; discounts by volume.

4. ComplyAdvantage — Best for sanctions/PEP/adverse media screening in crypto

Why Use It: An AML data powerhouse for KYC and ongoing monitoring that many crypto companies use to meet screening obligations and reduce false positives. Strong watchlist coverage, adverse media, and continuous monitoring help you satisfy banking partners and auditors.

Best For: Exchanges and fintechs that want robust sanctions/PEP data plus transaction monitoring.

Notable Features: Real-time sanctions & watchlists • Ongoing monitoring • Payment screening • Graph analysis.

Consider If: You want a single vendor for screening + monitoring alongside your analytics stack.

Alternatives: Jumio (Screening), Sumsub. (Source: ComplyAdvantage.)

Regions: Global • Fees/Notes: Tiered enterprise pricing.

5. Sumsub — Best all-in-one KYC/KYB + crypto monitoring

Why Use It: Crypto-focused onboarding with liveness, documents, KYB, Travel Rule support, and transaction monitoring—plus in-house legal experts to interpret changing rules. Good for teams that need to orchestrate identity checks and AML controls in one flow.

Best For: Global exchanges, NFT/DeFi ramps, high-growth startups entering new markets.

Notable Features: KYC/KYB • Watchlists/PEPs • Device intelligence • Crypto TX monitoring • Case management.

Consider If: You want one vendor for identity + AML + Travel Rule workflow.

Alternatives: Jumio, ComplyAdvantage. (Source: Sumsub.)

Regions: Global • Fees/Notes: Per-verification & volume tiers.

6. Jumio — Best for enterprise-grade identity + AML screening

Why Use It: Jumio combines biometric KYC with automated AML screening (PEPs/sanctions) and ongoing monitoring. Its “KYX” approach provides identity insights across the customer lifecycle, helping reduce fraud while keeping onboarding friction reasonable.

Best For: Regulated exchanges, banks, brokerages with strict KYC/AML controls.

Notable Features: Biometric verification • PEPs/sanctions screening • Ongoing monitoring • Single-API platform.

Consider If: You need global coverage and battle-tested uptime/SLA.

Alternatives: Sumsub, Onfido (not listed). (Source: Jumio.)

Regions: Global • Fees/Notes: Custom enterprise pricing.

7. Notabene — Best end-to-end Travel Rule platform

Why Use It: Notabene focuses on pre-transaction decisioning, counterparty VASP due diligence, and sanctions screening across multiple Travel Rule protocols. It’s purpose-built for crypto compliance teams facing enforcement of FATF Recommendation 16.

Best For: Exchanges, custodians, and B2B payment platforms needing Travel Rule at scale.

Notable Features: Pre-TX checks • Counterparty VASP verification • Multi-protocol messaging • Jurisdictional rules engine.

Consider If: Your regulators or banking partners expect full Travel Rule compliance today.

Alternatives: Shyft Veriscope, 21 Analytics. (Source: Notabene.)

Regions: Global • Fees/Notes: Annual + usage components.

8. Shyft Network Veriscope — Best decentralized, interoperable Travel Rule messaging

Why Use It: Veriscope provides decentralized VASP discovery, secure VASP-to-VASP PII exchange, and “sunrise issue” lookback to help during uneven global rollouts. Pay-as-you-go pricing can be attractive for newer programs.

Best For: Global VASPs that want decentralized discovery and interoperability.

Notable Features: Auto VASP discovery • Secure PII transfer (no central PII storage) • Lookback support • Interoperability.

Consider If: You prefer decentralized architecture and usage-based pricing.

Alternatives: Notabene, 21 Analytics. (Source: shyft.network.)

Regions: Global • Fees/Notes: Pay-as-you-go; no setup fees. (Source: shyft.network.)

9. Merkle Science — Best for predictive blockchain risk analytics

Why Use It: Merkle Science’s platform emphasizes predictive risk modeling and DeFi/smart contract forensics, helping compliance teams see beyond static address tags. Good complement when you monitor emerging chains and token types.

Best For: Exchanges and protocols active in DeFi, new L1/L2 ecosystems, or smart-contract risk.

Notable Features: Predictive risk scores • DeFi & contract forensics • Case tooling • API integrations.

Consider If: You need analytics tuned for newer protocols and token standards.

Alternatives: Chainalysis, TRM Labs. (Source: merklescience.com.)

Regions: Global • Fees/Notes: Quote-based enterprise pricing.

10. Scorechain — Best EU-born analytics with audit-ready reporting

Why Use It: Based in Luxembourg, Scorechain offers risk scoring, transaction monitoring, and reporting designed to fit EU frameworks—useful for MiCA/TFR-aligned programs. Teams like the straightforward reporting exports for audits and regulators.

Best For: EU-focused exchanges, neobanks, and tokenization platforms.

Notable Features: Risk scoring • Transaction monitoring • Audit-ready reports • Tools for Travel Rule workflows.

Consider If: Your footprint is primarily EU and you want EU-centric vendor DNA.

Alternatives: Crystal (EU), Elliptic. (Source: Scorechain.)

Regions: EU/Global • Fees/Notes: Enterprise licenses; fixed and usage options.

Decision Guide: Best By Use Case

- Regulated U.S. exchange: Chainalysis, TRM Labs

- Global wallet screening at scale: Elliptic

- Enterprise KYC + AML screening combo: Jumio, Sumsub

- Travel Rule (end-to-end ops): Notabene

- Travel Rule (decentralized, pay-as-you-go): Shyft Veriscope

- DeFi/smart-contract forensics: Merkle Science

- EU-centric programs / audit exports: Scorechain

- Sanctions/PEP data depth: ComplyAdvantage

How to Choose the Right Crypto Compliance, KYC/AML & Blockchain Analytics Vendor (Checklist)

- Jurisdiction & licensing: Confirm the vendor supports your countries and regulator expectations (e.g., FATF R.16 Travel Rule).

- Coverage: Chains/tokens you touch today and plan to touch in 12–18 months.

- Identity depth: Liveness, device checks, KYB for entities, ongoing monitoring.

- Analytics & monitoring: Risk models, false-positive rate, sanctions coverage cadence.

- APIs & workflow: Case management, alert triage, audit trails, BI exports.

- Costs: Pricing model (per-verification, per-alert, or pay-as-you-go).

- Security: Data handling, PII minimization, breach history, regional data residency.

- Red flags: “Black box” risk scores without documentation; no audit logs.

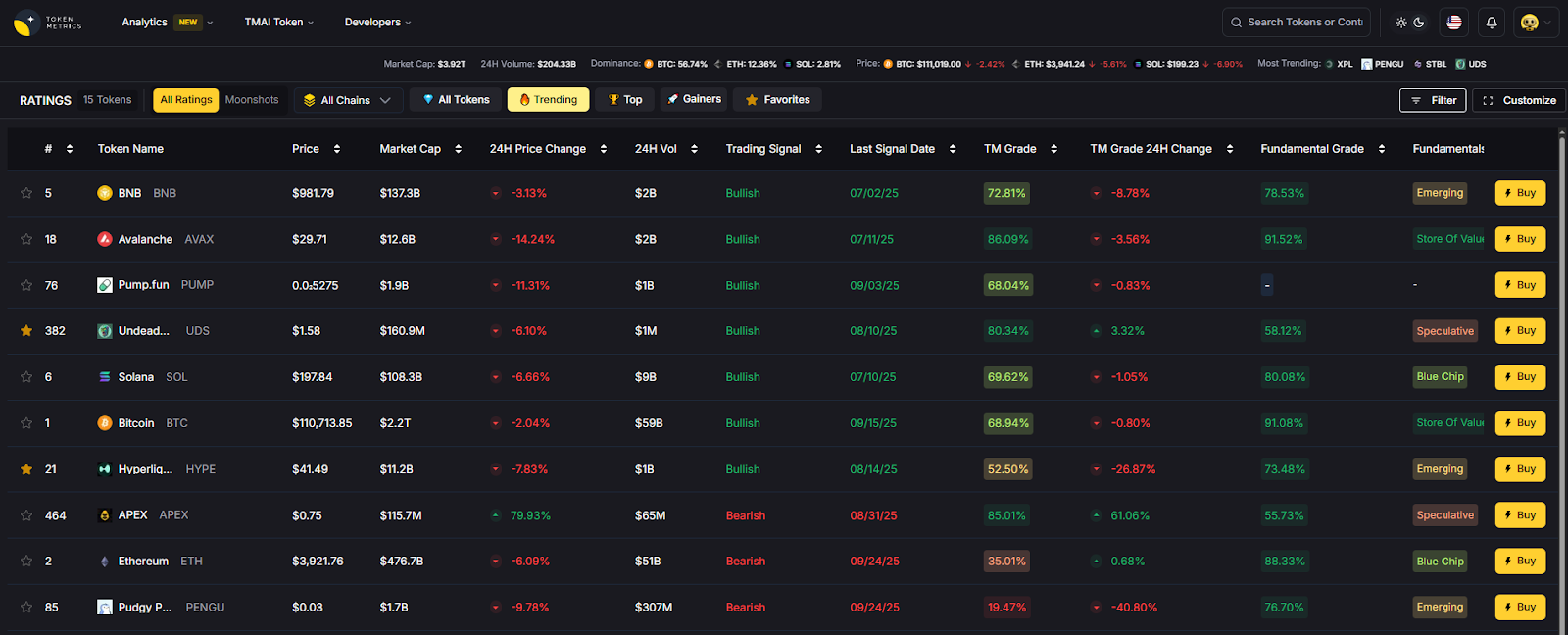

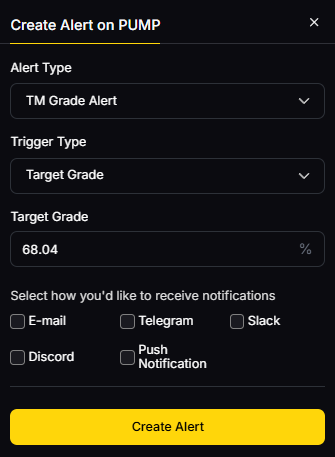

Use Token Metrics With Any Crypto Compliance, KYC/AML & Blockchain Analytics Vendor

- AI Ratings: Screen assets and spot structural risks before you list.

- Narrative Detection: Track shifts that correlate with on-chain risk trends.

- Portfolio Optimization: Balance exposure as assets pass compliance checks.

- Alerts & Signals: Monitor entries/exits once assets are approved.

Workflow: Research vendors → Select/implement → List/enable assets → Monitor with Token Metrics alerts.

Start a free trial of Token Metrics.

Security & Compliance Tips

- Enforce 2FA and role-based access for compliance consoles.

- Separate PII from blockchain telemetry; minimize retention.

- Implement Travel Rule pre-transaction checks where required. (Source: FATF.)

- Test sanctions list update cadences and backfill behavior.

- Document SAR/STR processes and case handoffs.
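
One way to read the "separate PII from blockchain telemetry" tip is to key on-chain activity by a pseudonym rather than by the real user ID. The sketch below assumes a keyed HMAC-SHA256 token and two in-memory dicts standing in for separate databases. The key, store names, and helper functions are all hypothetical, and a real deployment would need proper key management and access controls.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would live in a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonym(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Derive a stable keyed token for a user ID using HMAC-SHA256."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Two separate stores (plain dicts here, standing in for separate databases).
pii_store: dict[str, dict] = {}        # keyed by real user ID, tightly restricted
telemetry_store: dict[str, list] = {}  # keyed by pseudonym, holds no PII fields


def record_user(user_id: str, full_name: str, country: str) -> None:
    """Store identity data only in the PII store."""
    pii_store[user_id] = {"full_name": full_name, "country": country}


def record_transfer(user_id: str, tx_hash: str, address: str) -> None:
    """Store on-chain activity under the pseudonym, never the real user ID."""
    token = pseudonym(user_id)
    telemetry_store.setdefault(token, []).append(
        {"tx_hash": tx_hash, "address": address}
    )


record_user("user-1", "Alice Example", "LU")
record_transfer("user-1", "0xabc", "addr-1")
```

With this split, analysts can work on the telemetry store without seeing names, and only a service that holds the key can link an alert back to a customer record.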

This article is for research/education, not financial advice.

Beginner Mistakes to Avoid

- Picking a vendor with great KYC but no Travel Rule path.

- Ignoring chain/token roadmaps—coverage gaps appear later.

- Under-investing in case management/audit trails.

- Relying solely on address tags without behavior analytics.

- Not budgeting for ongoing monitoring (alerts grow with volume).

FAQs

What’s the difference between KYC and KYT (Know Your Transaction)?

KYC verifies an individual or entity at onboarding and during refresh cycles. KYT/transaction monitoring analyzes wallets and transfers in real time (or post-event) to identify suspicious activity, sanctions exposure, and patterns of illicit finance. (Source: TRM Labs.)
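
As a rough illustration of the KYT side, the sketch below checks a single transfer against two simple rules. The flagged-address set, the USD threshold, and the rule names are made up for this example. Commercial platforms rely on far richer attribution data and behavioral models, so treat this only as a picture of the general shape.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    tx_hash: str
    counterparty: str
    amount_usd: float


# Hypothetical inputs: a real program would source these from a vendor or internal policy.
FLAGGED_ADDRESSES = {"addr_sanctioned_1", "addr_mixer_1"}
LARGE_TRANSFER_USD = 10_000.0


def evaluate(transfer: Transfer) -> list[str]:
    """Return the names of the rules this transfer triggers (empty list means no alert)."""
    alerts = []
    if transfer.counterparty in FLAGGED_ADDRESSES:
        alerts.append("flagged_counterparty")
    if transfer.amount_usd >= LARGE_TRANSFER_USD:
        alerts.append("large_transfer")
    return alerts


print(evaluate(Transfer("0x01", "addr_mixer_1", 250.0)))
print(evaluate(Transfer("0x02", "addr_clean", 50.0)))
```

KYC would sit upstream of this: the transfer is only evaluated for a customer whose identity has already been verified.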

Do I need a Travel Rule solution if I only serve retail in one country?

Possibly. Many jurisdictions apply the Travel Rule above certain thresholds and when sending to other VASPs, even domestically. If you interoperate with global exchanges or custodians, you’ll likely need it. (Source: Notabene.)
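
The threshold logic described above can be sketched as a small lookup. The jurisdiction codes and threshold values below are placeholders, not real legal thresholds, and the rule that only VASP counterparties trigger the obligation is a simplifying assumption. Actual requirements vary by country and need legal review.

```python
from typing import Optional

# Hypothetical thresholds in USD, for illustration only.
# None means no Travel Rule obligation is modeled for that jurisdiction.
THRESHOLDS_USD: dict[str, Optional[float]] = {
    "COUNTRY_A": 1_000.0,
    "COUNTRY_B": 0.0,   # applies to every transfer
    "COUNTRY_C": None,
}


def travel_rule_applies(
    jurisdiction: str, amount_usd: float, counterparty_is_vasp: bool
) -> bool:
    """Decide whether originator/beneficiary data must accompany a transfer."""
    if jurisdiction not in THRESHOLDS_USD:
        # Fail loudly rather than silently skipping an unknown jurisdiction.
        raise ValueError(f"no threshold configured for {jurisdiction}")
    if not counterparty_is_vasp:
        return False  # simplifying assumption: only VASP-to-VASP transfers count
    threshold = THRESHOLDS_USD[jurisdiction]
    if threshold is None:
        return False
    return amount_usd >= threshold


print(travel_rule_applies("COUNTRY_A", 1_500.0, True))
print(travel_rule_applies("COUNTRY_A", 500.0, True))
```

Raising an error for an unconfigured jurisdiction is a deliberate choice here: a missing entry should block a launch in that market rather than quietly disable the check.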

How do vendors differ on sanctions coverage?

Screening providers update against official lists and, in some cases, extend coverage using intelligence on known threat actors’ wallets. Look for rapid refresh cycles and retroactive screening. (Source: TRM Labs.)
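
Retroactive screening means re-checking past activity whenever a list changes. A minimal sketch, assuming you keep each customer's historical counterparties as a set of addresses (the function name and data shapes are hypothetical):

```python
def newly_exposed(
    past_counterparties: dict[str, set[str]],
    old_list: set[str],
    new_list: set[str],
) -> dict[str, set[str]]:
    """Map each customer to past counterparties that appear only in the updated list."""
    added = new_list - old_list
    hits: dict[str, set[str]] = {}
    for customer, addresses in past_counterparties.items():
        overlap = addresses & added
        if overlap:
            hits[customer] = overlap
    return hits


# Illustrative data only.
history = {
    "cust-1": {"addr-a", "addr-b"},
    "cust-2": {"addr-c"},
}
print(newly_exposed(history, old_list={"addr-x"}, new_list={"addr-x", "addr-b"}))
```

Comparing only the newly added entries keeps the re-screen cheap, which matters when lists refresh frequently.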

Can I mix-and-match KYC and blockchain analytics vendors?

Yes. Many teams use a KYC/AML screening vendor plus a blockchain analytics platform; some suites offer both, but best-of-breed mixes are common.

What’s a good starting stack for a new exchange?

A KYC/KYB vendor (Jumio or Sumsub), a sanctions/PEP screening engine (ComplyAdvantage or your KYC vendor’s module), a blockchain analytics platform (Chainalysis/TRM/Elliptic), and a Travel Rule tool (Notabene or Veriscope).

Conclusion + Related Reads

Compliance isn’t one tool; it’s a stack. If you’re U.S.-regulated and high-volume, start with Chainalysis or TRM plus Jumio or Sumsub. If you’re EU-led, Scorechain can simplify audits. For Travel Rule, choose Notabene (end-to-end) or Veriscope (decentralized/pay-as-you-go). Pair your chosen stack with Token Metrics to research, monitor, and act with confidence.

Related Reads:

- Best Cryptocurrency Exchanges 2025

- Top Derivatives Platforms 2025

- Top Institutional Custody Providers 2025

Sources & Update Notes

We independently reviewed official product pages, docs, and security/trust materials for each provider (no third-party links in body). Shortlist refreshed September 2025; we’ll revisit as regulations, features, and availability change.

- Chainalysis: KYT product; platform overview.

- TRM Labs: Transaction Monitoring; platform overview; glossary.

- Elliptic: Lens (wallet screening); Screening solution.

- ComplyAdvantage: Crypto AML; sanctions & watchlists; platform.

- Sumsub: Crypto KYC; Crypto Monitoring.

- Jumio: AML Screening; Platform; KYC & AML use case.

- Notabene: Travel Rule compliance; jurisdiction tracker.

- Shyft Veriscope: Product page; docs.

- Merkle Science: Platform overview; investigations features.

- Scorechain: Product pages & glossary resources.
