Aleo Airdrop 2024 - Eligibility, Process and Rewards

In the emergent world of blockchain technology, the Aleo platform stands out with its privacy-centric approach and innovative Layer 1 design. Anticipation is high for the Aleo airdrop, slated for early 2024, which promises to be a pivotal event for the crypto community.

With roughly $298M in funding behind the project, the upcoming airdrop offers a chance to obtain ALEO tokens, a potential game-changer for secure, decentralized transactions.

Prospective participants eager to engage with the Aleo airdrop should prepare for a process involving system requirements and strategic planning.

This article offers a meticulous guide on how to easily claim your airdrop, detailing eligibility, the step-by-step participation process, and insights on maximizing the potential value of ALEO tokens.

It also covers the key aspects of Aleo's offering, including the Leo language and the utility of Aleo Credits, and how these innovations could reshape the landscape of digital assets and privacy.

What is Aleo?

At the heart of the anticipated Aleo airdrop lies the innovative Aleo platform, a privacy-focused Layer 1 blockchain revolutionizing how developers create applications. Here's what sets Aleo apart:

Zero-Knowledge Cryptography: Aleo harnesses the power of zero-knowledge cryptography, specifically through zk-SNARKs, to ensure that transactions remain private.

This advanced cryptographic technique allows the verification of transactions without revealing any underlying data, a stark contrast to traditional blockchain networks where information is typically public.

Decentralized and Private Web Experience: The platform is designed to foster a web environment where user experiences are personalized and kept private.

By leveraging decentralized systems and the robustness of zero-knowledge cryptography, Aleo aims to protect user data on the internet. This feature is becoming increasingly important in today's digital age.

Simplified Implementation: Aleo's architecture is crafted to simplify the implementation of zero-knowledge proofs, making it accessible for developers to incorporate privacy features into their applications without needing deep technical knowledge of the underlying mechanisms.

AleoBFT Consensus Mechanism: The network employs AleoBFT. This hybrid consensus mechanism merges the security benefits of proof-of-work with the scalability advantages of proof-of-stake, offering a balanced approach to network security and performance.

snarkVM and Leo Language: At the core of Aleo's functionality is the snarkVM, which facilitates unlimited runtime for zero-knowledge execution, and Leo, a programming language developed specifically for writing private applications on the blockchain.

Leo programs compile into polynomials that operate within a proof system, ensuring computations are verifiable without revealing any knowledge about the data or the computation itself.
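To make the idea of verifying a claim without revealing the underlying data more concrete, here is a minimal Python sketch of a Schnorr-style proof of knowledge made non-interactive with a hash-derived challenge. It is not a zk-SNARK and is not how snarkVM or Leo work internally. The group parameters, function names, and hashing scheme are all assumptions chosen for illustration, and the numbers are far too small to be secure.

```python
import hashlib
import secrets

# Toy group parameters (assumed for illustration only; far too small to be secure).
P = 2039  # safe prime, P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # generator of the order-Q subgroup


def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge by hashing the public values (Fiat-Shamir heuristic)."""
    data = f"{G}:{public_key}:{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple[int, int, int]:
    """Return (public_key, commitment, response); the secret itself is never output."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)  # fresh randomness hides the secret in the response
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Accept if G^response == commitment * public_key^challenge (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P
```

A verifier who calls `verify(*prove(secret))` learns that the prover knows the secret behind `public_key`, but only ever sees the public key, the commitment, and the response. Production systems such as zk-SNARKs extend the same principle to arbitrary computations with much larger parameters.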

As the platform progresses through its third testnet phase, anticipation builds for the mainnet release, marking a significant milestone in Aleo's journey and the broader evolution of blockchain privacy.

The upcoming Aleo airdrop is not just a token distribution event but a doorway to participating in a cutting-edge ecosystem where the Aleo token will play a central role.

Eligibility Criteria for the Aleo Airdrop

To ensure a successful claim, participants must align with specific eligibility criteria before the Mainnet launch.

While the precise requirements remain under wraps, engaging actively within the Aleo ecosystem is a key determinant. Here's how potential beneficiaries can enhance their eligibility:

- Active Wallet Usage: Prospective participants should use a wallet with a solid history of token holding, staking, voting, and regular transaction activity. Such wallets likely stand a better chance of being recognized for the Aleo airdrop than empty or newly created wallets, which may be excluded.

- Engagement with Aleo Ecosystem: Taking actions like downloading and setting up a wallet, adding it to the Greenlist, claiming testnet tokens, or bridging ETH to Aleo could be part of the eligibility process. Moreover, creating an NFT, deploying a smart contract, or purchasing a domain name on Aleo's platform may also be necessary steps.

- Diverse Interactions: Engaging with multiple Aleo ecosystem projects, such as AleoSwap and Arcane Finance, or acquiring a domain name might increase eligibility. These interactions signify active participation within the Aleo ecosystem, potentially leading to greater rewards.

Aleo Airdrop Details

$ALEO Is One Of The Largest Projects Of The Year

$298M Raised - Airdrop Confirmed For Q1 2024

▪ Costs: $0

▪ Time: 20 min.

▪ Potential gain: $20,000+

Participants are advised to stay informed on the latest developments through Aleo's official channels to fully understand how to claim airdrop benefits.

Step-by-Step Guide to Participating in the Airdrop

To actively participate in the anticipated Aleo airdrop and potentially benefit from the token distribution, follow this step-by-step guide that navigates through the essential actions within the Aleo ecosystem:

1. Setting Up Your Leo Wallet

◈ Visit leo.app and download the Leo Wallet Extension.

◈ Acquire Testnet ALEO Tokens by accessing your Leo wallet's "Faucet" feature.

2. Greenlist your Leo Wallet

Go to → https://www.alchemy.com/faucets/ethereum-sepolia

◈ Copy your wallet address.

◈ Paste the wallet address in the white search field.

◈ Pass the captcha.

◈ Click on Paint it green.

3. Aleo Test Tokens

Go to → faucet.aleo.org

◈ Follow the instructions on the website.

◈ Enter your wallet address.

Note: It can take a few hours for tokens to arrive.

4. Sepolia faucet

Go to → https://sepoliafaucet.com

◈ Enter your MetaMask wallet address.

◈ Pass the captcha.

◈ Click on Send Me ETH.

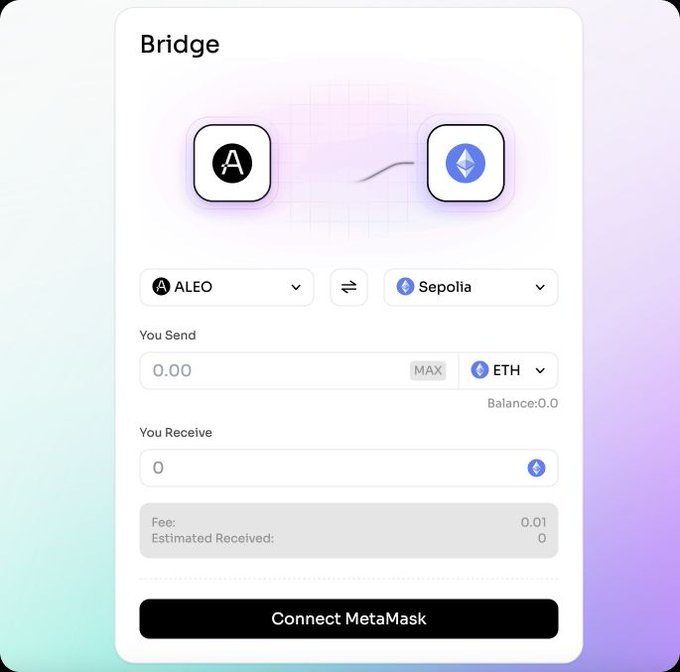

5. Aleo Bridge

Go to → https://testnet-bridge.izar.xyz/bridge

◈ Connect your Leo Wallet and MetaMask wallet.

◈ When tokens arrive in your MetaMask wallet, bridge $ETH from Sepolia to Aleo.

6. Make swaps

Go to → https://aleoswap.org

◈ Connect the wallet.

◈ Swap $ETH for $USDT and back.

7. Add Liquidity

Go to → https://app.aleoswap.org/pool/public

◈ Select a token.

◈ Enter the amount of liquidity you want to add.

8. Aleo Domains

Go to → https://testnet.aleonames.id/

◈ Connect your wallet.

◈ Register the domain.

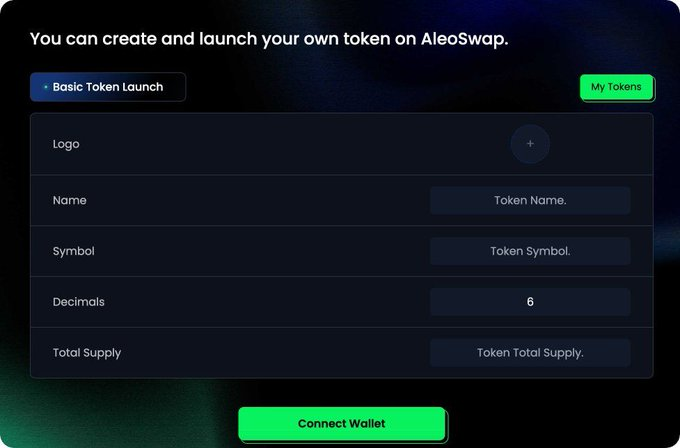

9. Launch a Token on Aleo

Go to → https://app.aleoswap.org/list

◈ Connect your wallet.

◈ Fill in the required fields.

10. Trade

Go to → https://alpha.privx.exchange

◈ Connect your wallet.

◈ Check the tutorial.

◈ Grab tokens in the "Claim" section.

11. Zealy quests

Go to → https://zealy.io/c/aleoofficial/questboard

◈ Complete social tasks.

By meticulously following these steps and engaging with the Aleo platform, participants can enhance their chances of claiming the Aleo airdrop. It is crucial to stay informed on the latest updates from Aleo's official channels to fully understand the nuances of how to claim airdrop rewards and maximize the potential of your ALEO token holdings.
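Because the guide spans eleven separate steps across several sites, it can help to track progress explicitly. The following is a small Python sketch of a checklist. The `Step` class and helper functions are hypothetical names introduced here, and the step titles simply paraphrase the headings above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    number: int
    title: str
    done: bool = False


# Titles paraphrase the eleven steps of the guide; the tracker itself is hypothetical.
TITLES = [
    "Set up your Leo Wallet",
    "Greenlist your Leo Wallet",
    "Request Aleo test tokens",
    "Request Sepolia ETH",
    "Bridge ETH from Sepolia to Aleo",
    "Make swaps",
    "Add liquidity",
    "Register an Aleo domain",
    "Launch a token on Aleo",
    "Trade",
    "Complete Zealy quests",
]


def new_checklist() -> List[Step]:
    """Build a fresh checklist with every step marked as not done."""
    return [Step(number, title) for number, title in enumerate(TITLES, start=1)]


def mark_done(steps: List[Step], number: int) -> None:
    """Mark the step with the given number as completed."""
    for step in steps:
        if step.number == number:
            step.done = True
            return
    raise ValueError(f"no step numbered {number}")


def next_step(steps: List[Step]) -> Optional[Step]:
    """Return the first step that is still open, or None when all are done."""
    return next((step for step in steps if not step.done), None)
```

For example, after calling `mark_done(steps, 1)` and `mark_done(steps, 2)`, `next_step(steps)` returns the faucet step, which is a useful reminder since test tokens can take a few hours to arrive.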

Maximizing Your Rewards: Tips and Strategies

To optimize the benefits of the Aleo Airdrop 2024, participants can adopt several strategies to enhance their potential rewards:

- Active Wallet Management: Leveraging a wallet with a solid track record of token holding, staking, and consistent transaction activities can significantly increase the likelihood of a successful airdrop claim. This demonstrates proactive engagement in the crypto space and can be a favorable factor during the airdrop selection process.

- Community Engagement and Awareness: Staying actively involved in crypto is crucial. By keeping abreast of new projects and opportunities, you can identify various airdrop events, including the Aleo airdrop, and understand the specific actions required to participate, such as staking or holding certain tokens like TIA (Celestia) and INJ (Injective).

- Adherence to Regulations: Ensuring compliance with local laws and regulations is paramount when participating in token distributions. This not only guarantees the legality of your actions but also safeguards against potential legal complications.

- Diversification Strategy: Creating multiple wallets for diversified staking can spread risk and potentially increase the chances of receiving airdrops. This approach can also apply to the ALEO token distribution, enhancing your overall airdrop portfolio.

- Claiming Process: It's essential to follow the project's instructions meticulously to claim your tokens. This may involve smart contract interactions or other specific procedures the platform provides.

- Scam Vigilance: Always verify information through Aleo's official channels to avoid falling for scams. This practice ensures you engage with legitimate airdrop opportunities and safeguard your digital assets.

By implementing these tips and strategies, you can maximize your Aleo airdrop rewards and make the most of the token distribution event. Remember to stay updated with Aleo's official announcements for any changes or additional steps in the airdrop process.
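One practical way to apply the scam-vigilance tip is to check a link's exact hostname before connecting a wallet. The sketch below uses Python's standard `urllib.parse` for this. The allowlist is an assumption built from hostnames that appear in this guide, not an official list published by Aleo, and `is_trusted` is a hypothetical helper name.

```python
from urllib.parse import urlparse

# Hostnames taken from links in this guide. This is an assumed, illustrative
# allowlist, not an official list; confirm every entry through official channels.
TRUSTED_HOSTS = {
    "leo.app",
    "faucet.aleo.org",
    "aleoswap.org",
    "app.aleoswap.org",
    "zealy.io",
}


def is_trusted(url: str) -> bool:
    """Accept only HTTPS links whose exact hostname is on the allowlist."""
    parts = urlparse(url)
    return parts.scheme == "https" and parts.hostname in TRUSTED_HOSTS
```

With this check, `is_trusted("https://faucet.aleo.org")` passes, while a lookalike such as `https://faucet.aleo.org.evil.example/claim` is rejected because the full hostname must match, not just a prefix.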

Potential Value and Benefits of ALEO Tokens

Within the Aleo ecosystem, ALEO tokens are not just a cryptocurrency; they are the cornerstone of a privacy-centric web experience, providing users with control over their data while engaging with web services. The token's design is rooted in principles that ensure its longevity and utility:

- Value and Governance: ALEO tokens are integral to the Aleo platform, enabling users to access personalized web services while maintaining privacy over their personal data. This dual function as a value and governance token positions ALEO as a central player in the platform's operations.

- Tokenomics: The economics behind ALEO tokens are meticulously crafted, focusing on scarcity, incentives, and decentralization. These factors are pivotal in preserving the token's value within the blockchain ecosystem, making it a potentially lucrative asset for investors and users.

- Staking and Incentives: ALEO tokens feature a staking mechanism where traders can earn commissions on transactions. This incentivizes token holders to save and stake their tokens, playing an active role in the platform's transactional processes.

The potential of ALEO tokens extends beyond mere transactions, offering a suite of benefits that underscore the project's strong market confidence:

- Privacy and Scalability: Aleo's commitment to robust privacy protection and on-chain scalability offers a competitive edge, especially for large-scale real-time online applications. This positions ALEO tokens as an attractive asset for those looking to invest in a platform with advanced technological capabilities.

- Economic Model: Aleo's model, which combines proof-of-work on physical machines with on-chain proof-of-stake staking, mitigates mining risks and ensures cost predictability for users and investors, enhancing the appeal of ALEO tokens.

- Investment and Financing: Beyond transactions, ALEO tokens can be leveraged for financing projects like dApp development, providing businesses with innovative financial tools and opening new avenues for investment.

- Market Confidence: With a substantial funding pool of $298 million from internationally renowned institutions, ALEO tokens are backed by a level of confidence that suggests a promising future for the token's value.

By understanding the Aleo airdrop and the multifaceted role of ALEO tokens, participants can strategically position themselves to capitalize on the token distribution event and the subsequent opportunities within the Aleo ecosystem.

Aleo Airdrop - Impact on Participants and the Platform

The Aleo airdrop presents a transformative opportunity for participants and the platform alike:

- Early Supporter Benefits: Individuals who have been involved with Aleo from its early stages stand to gain significantly from the airdrop, with the possibility of receiving a profit of around $9,000 without any participation cost. This rewards early backers and incentivizes continued support and engagement with the platform as it evolves.

- Token Allocation: A substantial allocation of 150M ALEO tokens earmarked for the airdrop targets early supporters, reinforcing their commitment and potentially leading to a more robust and active community post-airdrop.

- Engagement and Growth: By requiring users to complete a series of tasks, such as downloading extensions and engaging with various platforms, the airdrop fosters deeper interaction within the Aleo ecosystem. This engagement is a crucial step in ensuring the long-term success and adoption of the platform.

- Market Outlook: The crypto market's projected growth in 2024 could mean that successful airdrop participants will see a significant appreciation in the value of their ALEO tokens. The strong market confidence supports this growth, reflected in Aleo's substantial funding of $298M from major investors, including a16z and Coinbase.

- Legal Compliance: Adherence to local laws and regulations is paramount as participants navigate the airdrop process. This ensures that the distribution of ALEO tokens is conducted in a legally compliant manner, which is essential for the legitimacy and stability of the platform.

- Community Engagement: Maximizing airdrop benefits requires active participation in the crypto community and staying informed about new projects and opportunities. This proactive involvement can lead to a greater understanding of the airdrop process and its potential benefits.

By strategically engaging with the Aleo ecosystem and understanding the potential impact of the airdrop, participants can position themselves to benefit from this pivotal event in the platform's development.

Conclusion

As the Aleo platform prepares to launch its mainnet, the Aleo airdrop's significance to prospective participants and the wider blockchain community cannot be overstated.

This guide has detailed the eligibility criteria, participation processes, and action strategies essential to optimize potential rewards from this much-anticipated event.

The unique qualities of the ALEO tokens, particularly in privacy and scalability, alongside the robust economic model of the platform, set the stage for a transformative impact on decentralized web services.

The Aleo airdrop is poised to enhance user engagement within the ecosystem and reward early adopters, bolstering the platform's growth and reinforcing its market position.

With the potential value appreciation and the supportive backing of prominent investors, participants who align with the airdrop's objectives and guidelines stand to gain from the unfolding narrative of Aleo's privacy-centered innovations.

Such strategic participation holds the promise of personal reward and contributes to shaping the evolving landscape of secure, decentralized digital transactions.

Disclaimer

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.
