Floki Inu Coin Price Prediction 2025, 2030 - Forecast Analysis


In the dynamic space of cryptocurrencies, meme coins have garnered considerable interest. Among these, Floki Inu Coin has captivated the attention of investors and enthusiasts. With its distinctive branding and community-driven ethos, Floki Inu Coin has generated significant excitement in the market.

This article examines Floki Inu Coin's future potential, offering an in-depth analysis of price predictions for 2025 and 2030.

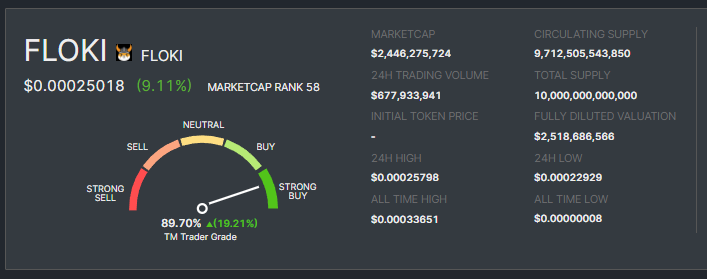

Floki Inu Coin Overview

Floki Inu, originally inspired by Elon Musk's dog and dubbed the "people's currency," has quickly gained popularity in the crypto market.

As a major player in the web3 space, Floki Inu spans decentralized finance (DeFi), non-fungible tokens (NFTs), and the Metaverse, aiming to become the premier meme-based cryptocurrency.

Historical Performance of Floki Inu Coin

Since its inception, Floki Inu has experienced significant volatility in its price. The coin has shown tremendous growth, with a staggering 162,223.13% return.

However, like many meme coins, Floki Inu faces a downtrend as the market shifts under bearish control.

Floki Inu Coin Current Fundamentals

Despite current market conditions, Floki Inu is developing three ecosystem initiatives:

- Valhalla: A gaming metaverse where users can engage, trade, and craft unique digital assets.

- FlokiPlaces: A marketplace for NFTs and exclusive goods, all powered by crypto. The NFT space remains vibrant, regardless of market trends.

- Multi-chain FLOKI token: The token is integrated to function on both the Binance Smart Chain and Ethereum.

These initiatives demonstrate Floki Inu's commitment to innovation and its potential for long-term growth.

Floki Inu Coin Price Prediction - Scenario Analysis

To provide a comprehensive price prediction for Floki Inu, we will analyze bullish and bearish market scenarios and consider industry expert opinions.

Floki Inu Coin Price Prediction - Bullish Market Scenario

In a bullish market scenario where positive market sentiment prevails, Floki Inu Coin's price has the potential to experience significant growth. Several factors could contribute to this bullish trend, including increased adoption, technological advancements, and positive regulatory developments.

If the crypto market cap hits $3 Trillion and Floki Inu Coin retains its current 0.08% dominance, its price could ascend to $0.000261.

In a more bullish scenario of the crypto market surging to $10 Trillion, Floki Inu Coin's price could soar to a staggering $0.000873, resulting in a potential 7x return for investors.
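The arithmetic behind these scenario prices can be sketched as follows. This is a minimal illustration, assuming price = total market cap × dominance ÷ circulating supply. The circulating supply is not stated in this article, so the sketch backs it out of the $3 Trillion figure instead of using real supply data, and the function names are purely illustrative.

```python
def implied_supply(total_market_cap: float, dominance: float, price: float) -> float:
    """Back out the token supply implied by a stated scenario price."""
    return total_market_cap * dominance / price


def scenario_price(total_market_cap: float, dominance: float, supply: float) -> float:
    """Price if the token holds `dominance` (a fraction) of the total market cap."""
    return total_market_cap * dominance / supply


DOMINANCE = 0.0008  # 0.08% expressed as a fraction

# Assumption: supply is inferred from the article's $3T scenario, not from market data.
supply = implied_supply(3e12, DOMINANCE, 0.000261)

price_3t = scenario_price(3e12, DOMINANCE, supply)
price_10t = scenario_price(10e12, DOMINANCE, supply)

print(f"implied supply: {supply:.3e} tokens")
print(f"$3T scenario:  ${price_3t:.6f}")
print(f"$10T scenario: ${price_10t:.6f}")
```

With supply and dominance held fixed, price scales linearly with total market cap, so the $10 Trillion case lands at about $0.00087, in line with the figure quoted above.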

It is important to note that these predictions are based on various factors and assumptions, and market conditions can change rapidly. However, bullish market sentiment and increased adoption of Floki Inu Coin's solutions could drive its price to new heights.

Floki Inu Coin Price Prediction - Bearish Market Scenario

In the event of a bearish market, Floki Inu Coin's price could experience a correction, potentially leading to a decrease in its value. In this case, the price may face downward pressure, with dominance falling to 0.042%.

In this scenario, if the crypto market cap hits $3 Trillion, Floki Inu Coin could trade around $0.000130 in the short term and struggle to surpass $0.000436 by 2030, even if the total crypto market cap surpasses the $10 Trillion mark.

These predictions highlight the potential risks of investing in Floki Inu during a bearish market. It's crucial to assess market conditions and make informed decisions.
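As a rough cross-check, the bearish prices can be turned back into the dominance they imply. This sketch assumes the same relationship as the bullish scenario (price = market cap × dominance ÷ supply) and reuses the supply implied by the bullish figures ($3 Trillion cap, 0.08% dominance, $0.000261). That supply is an inference from the article's own numbers, not real market data.

```python
def implied_dominance(price: float, supply: float, total_market_cap: float) -> float:
    """Share of the total crypto market cap implied by a given token price."""
    return price * supply / total_market_cap


# Assumption: supply inferred from the bullish scenario, not taken from market data.
SUPPLY = 3e12 * 0.0008 / 0.000261

dom_3t = implied_dominance(0.000130, SUPPLY, 3e12)
dom_10t = implied_dominance(0.000436, SUPPLY, 10e12)

print(f"implied dominance at $3T:  {dom_3t:.4%}")
print(f"implied dominance at $10T: {dom_10t:.4%}")
```

Under these assumptions both prices imply a dominance of roughly 0.04%, slightly below the 0.042% stated. The gap may come from rounding or from a different supply figure, so treat the result as a sanity check only.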

It is crucial to consider the potential risks and challenges that Floki Inu Coin may face. Factors such as regulatory developments, competition from other projects, and technological advancements can affect the growth trajectory of Floki Inu Coin.


Floki Inu Coin Price Prediction - Industry Expert Opinion

Industry experts play a crucial role in providing insights into the potential future performance of cryptocurrencies. While their opinions may vary, it's valuable to consider their perspectives. Based on the analysis of various experts, the following price predictions can be considered:

Changelly predicts that Floki Inu Coin could reach $0.00290 to $0.00350 by 2030, while other industry analysts hold differing views on its future price.

Coincodex predicts that Floki Inu Coin could reach $0.000249 to $0.00118 by 2025. By the end of 2030, the price of Floki Inu Coin could reach $0.000634 to $0.001029.

While these predictions provide insights, it's important to remember that the crypto market is highly volatile and subject to various external factors.

Note - Start Your Free Trial Today and Uncover Your Token's Price Prediction and Forecast on Token Metrics.

Floki Inu Coin's Current Developments and Future Potential

Floki Inu's success depends on its ability to adapt to market conditions, innovate, and attract a strong community. The ongoing development of the Floki ecosystem, including Valhalla and FlokiPlaces, demonstrates the team's commitment to expanding the use cases and utility of the coin.

As the crypto market evolves, Floki Inu has the potential to establish itself as a prominent player in the meme coin space. However, it's important to stay updated on the latest developments and perform thorough research before making any investment decisions.


Conclusion

In conclusion, predicting Floki Inu Coin's price involves careful analysis of historical performance, current market conditions, and future potential. While a bullish market scenario may lead to significant price growth, a bearish market scenario can present challenges.

Considering the ongoing developments and industry expert opinions, Floki Inu has the potential to achieve notable milestones in the coming years. However, conducting thorough research, assessing market conditions, and making informed investment decisions are essential.

Remember, the crypto market is highly volatile, and prices can fluctuate rapidly. Always exercise caution and consult a professional financial advisor before making investment decisions.

Disclaimer

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.


Recent Posts

A New Chapter: Token Metrics Shifts Fully to On-Chain Indices


An important update from Ian Balina, Founder and CEO

Today marks a pivotal moment in Token Metrics' journey. After careful consideration of market dynamics and community feedback, we're making a strategic decision that will shape our future: we are sunsetting the Token Metrics Analytics platform and API to focus entirely on building and operating our on-chain indices.

Why We're Making This Change

The crypto landscape has evolved significantly. Research and analytics tools have proliferated, with many platforms offering similar features and dashboards. Meanwhile, the real innovation and differentiation is shifting toward on-chain products that enable investors to own the market itself—simply, transparently, and non-custodially.

Over time, we've noticed a clear trend: the strongest and most consistent interest around Token Metrics has centered on our indices, especially TM100, rather than new features in the Analytics platform or raw data feeds. Our community has shown us where the real value lies.

In response to these market conditions and community sentiment, we've made the decision to narrow our focus dramatically. We're consolidating into a much smaller, highly focused team with a single mission: to do fewer things and do them exceptionally well.

What's Changing and When

Here's the complete timeline for this transition:

Effective Immediately

Analytics and the API enter maintenance/read-only status. Users can still log in to view historical Ratings, but no new features will be released.

November 27, 2025

This is the strict deadline to submit your choice regarding your subscription balance. Please don't delay—requests received after this date may not be processed due to the platform shutdown.

November 28, 2025 (Black Friday)

Subject to final readiness, we plan to begin onboarding select users into the indices (starting with TM100) through a controlled early-access rollout.

November 30, 2025

We will shut down both the Analytics platform and the API endpoints. After this date, all routes will redirect to our indices experience and the Help Center.

Your Compensation Options: Choose What Works Best for You

Because you've supported Token Metrics with your subscription, we want to reward your loyalty with high-value choices. We're offering three options, each designed for different goals:

Option 1: Convert to Indices Credits (3x Value)

Receive 3x the value of your unused subscription as credits toward future indices management fees. This grants you:

- Priority access to TM100

- White-glove onboarding when the exclusive index opens

- The best value for continuing your journey with Token Metrics

This is our recommended option for those who want to stay closest to our future developments and plan to utilize our indices. If you've supported Token Metrics from the very beginning and believe in where we're headed, we strongly encourage this path.

Learn more:

- Indices Demo (40 seconds)

- Indices Deep Dive Webinar (30 minutes)

Option 2: Receive TMAI Airdrop (1.5x Value)

Receive 1.5x the value of your unused subscription in TMAI tokens.

Note: To ensure market stability, these tokens will be subject to a daily vesting schedule over 30 days.

Option 3: Request a Pro-Rata Refund (1:1 Value)

Request a standard 1:1 pro-rated refund of the unused portion of your subscription back to your original payment method.

Note: Please allow up to 60 days for refund processing.
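To compare the three options side by side, the multipliers can be applied to an unused balance as in the sketch below. The plan price, dates, and function names are hypothetical, the pro-rata calculation by remaining days is an assumption, and so is the linear daily vesting. The announcement only states the 3x, 1.5x, and 1:1 multipliers and a 30-day daily vesting schedule.

```python
MULTIPLIERS = {"indices_credits": 3.0, "tmai_airdrop": 1.5, "refund": 1.0}
VESTING_DAYS = 30


def unused_value(price_paid: float, days_total: int, days_used: int) -> float:
    """Pro-rated value of the unused part of a subscription (assumed day-based)."""
    days_left = max(days_total - days_used, 0)
    return price_paid * days_left / days_total


def option_values(unused: float) -> dict[str, float]:
    """Value delivered by each compensation option for a given unused balance."""
    return {name: unused * mult for name, mult in MULTIPLIERS.items()}


def vested_airdrop(total_airdrop_value: float, days_elapsed: int) -> float:
    """Airdrop value unlocked so far, assuming equal daily unlocks over 30 days."""
    days = min(max(days_elapsed, 0), VESTING_DAYS)
    return total_airdrop_value * days / VESTING_DAYS


# Hypothetical example: a $1,200 annual plan with 146 days already used.
unused = unused_value(price_paid=1200.0, days_total=365, days_used=146)
values = option_values(unused)

print(f"unused balance: ${unused:.2f}")
for name, value in values.items():
    print(f"{name}: ${value:.2f}")
print(f"airdrop vested after 10 days: ${vested_airdrop(values['tmai_airdrop'], 10):.2f}")
```

The airdrop figure here is a dollar-equivalent value. The number of TMAI tokens received would depend on a conversion price that the announcement does not specify.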

⚠️ Action Required

Select Your Option Here

You must submit this form by November 27, 2025. Requests received after this date may not be processed due to the platform shutdown.

What This Means for Our Community

For Customers and Developers

This pivot is about clarity and focus. Instead of spreading our energy across analytics dashboards and API maintenance, we will deliver one core product: a non-custodial, rules-based index that lets you invest in the crypto market with a single token.

For Investors

This is a strategic re-alignment around the part of the business with the most long-term value. We see greater opportunity in being an index engine and distribution platform than in competing as yet another analytics surface.

For Astrobot Holders

A snapshot taken today confirms your eligibility for an airdrop of permanently staked veTMAI, which will be delivered when the Indices launch. The airdrop converts your current plan to the equivalent staking score level within the new Indices structure. You can then use the veTMAI to benefit from discounted trading fees and a share of the platform's revenue.

For TMAI Token Holders

This is not a step away from you. As we build TM100 and related indices, we intend to maintain and, where possible, enhance the ways TMAI connects to our products. Our priority is to get the index experience live, simple, and trustworthy. Once that foundation is solid, we'll share more details on how the token and indices fit together.

Impact on Our Team

Due to this change, we are becoming a smaller, leaner company. This has required difficult decisions about team structure, but we believe this focused approach will allow us to deliver exceptional value in our chosen domain.

What to Expect Next

- Confirmation: Once you submit your compensation choice, you'll receive a confirmation email

- Reminders: You'll see in-app notices about the November 30 shutdown and November 27 deadline

- Launch Updates: Information about TM100 early access and how to participate as indices onboarding begins around Black Friday

Our Commitment to You

To our customers, API users, investors, and token holders: thank you for your support through multiple market cycles. You've trusted our Ratings, engaged with our research, and participated in our community. This decision honors that history while pointing toward a simpler, more durable product that aligns with where crypto is heading.

By narrowing our focus now, we can build something that deserves your trust for many years to come.

Questions? Reply to our announcement email or contact us at support@tokenmetrics.com. We will read every message and do our best to assist you.

With appreciation,

Ian Balina

Founder and CEO

Token Metrics

Don't forget: Select your compensation option before November 27, 2025

Top Crypto Prime Brokers (2025): Best Institutional Trading & Settlement Platforms


- If you’re an institution that needs multi-venue liquidity, off-exchange settlement, and integrated custody/financing, this guide ranks the top crypto prime brokers for 2025 based on scale, security posture, and product breadth.

- Top picks: Coinbase Prime for integrated trading + qualified custody with negotiated fees; FalconX for deep liquidity and capital solutions across spot/derivatives; Anchorage Digital Prime for bank-regulated custody with prime services and derivatives access. (Coinbase)

- Caveats: Pricing is often negotiated; availability and products vary by region and legal entity. Always verify coverage on the provider’s official pages before onboarding. (docs.cdp.coinbase.com)

Introduction: Why Prime Brokerage Matters in 2025

Institutional participation accelerated in 2025, and with it the need for crypto prime brokers that unify execution, financing, custody, and settlement while minimizing counterparty risk. The leading platforms now resemble capital-markets infrastructure: single-counterparty access to multi-venue liquidity, off-exchange settlement networks that keep assets in segregated custody, and derivatives rails to hedge risk. Solutions like Coinbase Prime, FalconX, and Anchorage Digital Prime illustrate this evolution, while settlement networks such as Copper ClearLoop reduce venue risk without slowing execution. (Coinbase)

This guide compares features, fees, regions, and tradeoffs, then maps use cases to the right providers so teams can move from diligence to deployment with confidence.

Our Evaluation Methodology

Scoring weights (sum = 100):

- Scale & Liquidity (25) – depth of venues/LPs, 24/7 execution, options/futures where applicable.

- Security & Regulatory Posture (25) – qualified custody, bank licensing/registrations, segregation, insurance disclosures.

- Product Breadth (15) – spot, OTC/RFQ, derivatives, financing, settlement networks.

- Costs & Fees (10) – published or negotiated schedules, financing/borrow terms.

- Connectivity & Tooling (10) – FIX/WebSocket APIs, OEMS/SOR, reporting.

- Capital Efficiency (10) – cross-margin, off-exchange settlement, instant delegation.

- Support & Service (5) – coverage windows, onboarding SLAs.
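The weights above combine into a single 0-100 score as a weighted sum. The sketch below shows one way that could work, assuming each criterion is first rated on a 0-1 scale. The rating scale, the dictionary keys, and the example ratings are hypothetical and do not describe any provider in this guide.

```python
SCORING_WEIGHTS = {
    "scale_liquidity": 25,
    "security_regulatory": 25,
    "product_breadth": 15,
    "costs_fees": 10,
    "connectivity_tooling": 10,
    "capital_efficiency": 10,
    "support_service": 5,
}
# The weights are defined to sum to 100.
assert sum(SCORING_WEIGHTS.values()) == 100


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine per-criterion ratings (each in [0, 1]) into a 0-100 score."""
    missing = SCORING_WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in SCORING_WEIGHTS:
        if not 0.0 <= ratings[name] <= 1.0:
            raise ValueError(f"rating for {name} must be between 0 and 1")
    return sum(weight * ratings[name] for name, weight in SCORING_WEIGHTS.items())


# Hypothetical ratings for an imaginary provider, for illustration only.
example_ratings = {
    "scale_liquidity": 0.8,
    "security_regulatory": 0.9,
    "product_breadth": 0.6,
    "costs_fees": 0.5,
    "connectivity_tooling": 0.7,
    "capital_efficiency": 0.4,
    "support_service": 1.0,
}

score = weighted_score(example_ratings)
print(f"weighted score: {score:.1f} / 100")
```

Because scale and security together carry half of the total weight, a provider that rates poorly on either cannot reach a high overall score on the strength of the remaining criteria.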

Verification approach: We relied on official product, pricing, docs, security, and status pages only. If a claim wasn’t verifiable on an official page, we omitted it. Last updated: November 2025.

Crypto Prime Brokerage Comparison: At a Glance

Notes: “Pricing” reflects publicly stated models (often negotiated). “Regions/Notes” summarize official disclosures and licensing language where available on provider sites.

Detailed Provider Analysis

Coinbase Prime — Full-service prime brokerage at scale

Overview. Coinbase Prime combines trading, financing, and qualified custody under one institutional platform, with negotiated trading fees and flexible order denomination (base/quote). Execution integrates with reporting and controls suitable for asset managers and corporates. (Coinbase)

Ideal For. Asset managers, corporates/treasuries, hedge funds, RIAs.

Standout Features. Integrated custody; negotiated All-In / Cost-Plus fee models; staking fee schedules; institutional reporting. (docs.cdp.coinbase.com)

Pricing. Negotiated, fee currency = quote currency. (docs.cdp.coinbase.com)

Availability. Global, entity-dependent; product availability varies by jurisdiction.

Worth Noting. Some services require separate agreements (e.g., validators, derivatives access).

Alternatives to Consider. Kraken Prime, Anchorage Digital Prime. (Kraken)

FalconX — Deep liquidity & capital solutions across markets

Overview. FalconX offers RFQ/streaming execution across spot and FX with FIX/WebSocket/REST connectivity, plus electronic options and capital solutions. Scale and 24/7 liquidity are central, with workflows for large tickets and programmatic strategies. (falconx.io)

Ideal For. Multi-strategy funds, HFT/systematic traders, market makers.

Standout Features. RFQ/streaming with TWAP/FOK; electronic options; FX desk integrated into prime; institutional APIs. (falconx.io)

Pricing. Negotiated; bespoke based on flow and venues.

Availability. Global institutional focus (entity-dependent).

Worth Noting. Derivatives access and product scope vary by entity/region.

Alternatives to Consider. Coinbase Prime, Kraken Prime. (Coinbase)

Anchorage Digital Prime — Prime services on top of a US bank custodian

Overview. Anchorage Digital Prime delivers trading (13+ order types), derivatives, margin, and settlement built on Anchorage Digital Bank N.A. custody. A single interface and 24/7 trading desk support complex orders. (anchorage.com)

Ideal For. US-regulated custody requirements, governance-active institutions, corporates.

Standout Features. Bank-regulated custody; derivatives access; Atlas settlement; desk + API execution. (anchorage.com)

Pricing. Negotiated; service-by-entity.

Availability. US bank entity with global affiliates; services provided by specific Anchorage entities (see legal). (anchorage.com)

Worth Noting. Trading provided by separate non-bank entities; read entity-level disclosures. (anchorage.com)

Alternatives to Consider. BitGo Prime, Coinbase Prime. (The Digital Asset Infrastructure Company)

Kraken Prime — Multi-venue liquidity with institutional fee tiers

Overview. Kraken Prime aggregates 20+ liquidity providers and offers smart order routing, OTC spot and OTC derivatives (options/structures), plus custody integrations. Institutional fee perks are published for high-volume clients. (Kraken)

Ideal For. Funds seeking published fee schedules, multi-venue execution, and OTC coverage.

Standout Features. Multi-venue liquidity; FIX 4.4 and WebSockets APIs; OTC desk; institutional taker tiers. (Kraken)

Pricing. Maker/taker schedule with institutional perks at very high volumes. (Kraken)

Availability. Global, entity-dependent.

Worth Noting. Some advanced services require qualification and separate onboarding.

Alternatives to Consider. sFOX, Coinbase Prime. (sFOX)

BitGo Prime — Prime services from qualified custody

Overview. BitGo integrates trading, financing, and settlement directly from custody, with segregated, bankruptcy-remote accounts and published insurance coverage details. (The Digital Asset Infrastructure Company)

Ideal For. Institutions prioritizing segregation and custody-first workflows.

Standout Features. Segregated accounts; insurance disclosures; custody-integrated prime trading. (The Digital Asset Infrastructure Company)

Pricing. Negotiated; custody/prime fees depend on assets and activity.

Availability. Global entities with varying regulatory regimes.

Worth Noting. Derivatives access varies by entity/partner networks.

Alternatives to Consider. Anchorage Digital Prime, Copper ClearLoop. (anchorage.com)

sFOX (Prime Services) — Agency SOR across 80+ markets with institutional tooling

Overview. sFOX acts as an agnostic agent routing across 80+ markets for best execution, with net-price routing and institutional reporting. The platform positions itself as a full-service prime dealer for institutions. (sFOX)

Ideal For. Systematic strategies, RIAs/allocators, corporates seeking agency execution.

Standout Features. SOR across venues; platform-level price improvement; custody options. (sFOX)

Pricing. Tiered/negotiated; historical content points to fee optimization via routing. (sFOX)

Availability. US/global entities; enterprise onboarding.

Worth Noting. Product scope (derivatives, margin) varies; confirm coverage.

Alternatives to Consider. Kraken Prime, Coinbase Prime. (Kraken)

Ripple Prime — Multi-asset prime brokerage under Ripple

Overview. Following the acquisition and subsequent close of Hidden Road, Ripple Prime offers U.S. spot OTC execution and positions Ripple as the first crypto company to own and operate a global, multi-asset prime broker; custody capabilities expanded further in late 2025. (Ripple)

Ideal For. Institutions seeking an integrated multi-asset prime platform with digital asset rails.

Standout Features. OTC spot execution (U.S.); multi-asset coverage; financing/clearing. (Ripple)

Pricing. Negotiated.

Availability. U.S. launch and global expansion via Ripple entities. (Ripple)

Worth Noting. Product availability differs by jurisdiction and entity.

Alternatives to Consider. FalconX, Coinbase Prime. (falconx.io)

Binance VIP & Institutional — Exchange liquidity, OTC & programmatic access

Overview. Binance’s institutional suite spans exchange, OTC RFQ, algos, and programmatic connectivity with VIP tiers. Coverage and eligibility depend on jurisdiction; Binance.US operates separately for U.S. clients. (binance.com)

Ideal For. Non-US teams wanting direct exchange liquidity with OTC tools.

Standout Features. VIP tiers, proof-of-reserves, OTC block trading. (binance.com)

Pricing. Exchange/VIP schedules; negotiated OTC.

Availability. Region-dependent; check supported regions. (binance.com)

Worth Noting. U.S. availability/routes differ; compliance and onboarding vary by entity.

Alternatives to Consider. OKX Institutional, OSL. (OKX)

OKX Institutional — Scale, instruments, and U.S. presence via OKX US

Overview. OKX highlights high uptime, 900+ instruments, and institutional connectivity. U.S. availability exists through OKX US with scope/policies noted in compliance disclosures. (OKX)

Ideal For. Proprietary firms and funds trading a broad instrument set with API access.

Standout Features. Nitro Spreads, derivatives suite, institutional support. (OKX)

Pricing. Exchange schedule + VIP; negotiated for blocks.

Availability. Global; US services via OKX US (scope varies). (OKX)

Worth Noting. Product availability and licensing differ by region.

Alternatives to Consider. Binance Institutional, Kraken Prime. (binance.com)

OSL — HK SFC-licensed exchange, custody & OTC

Overview. OSL provides a licensed Hong Kong platform spanning custody, exchange, and OTC brokerage for institutions, with dedicated coverage in the region. (osl.com)

Ideal For. APAC institutions needing SFC-regulated venue/custody.

Standout Features. SFC-licensed exchange; institutional API; custody with insurance positioning. (sfc.hk)

Pricing. Schedule/negotiated.

Availability. Hong Kong and supported regions; institutional focus.

Worth Noting. Product availability differs by license and region.

Alternatives to Consider. Zodia Markets, Coinbase Prime. (Zodia Markets)

Copper ClearLoop — Off-exchange settlement network (multi-custodial)

Overview. ClearLoop enables instant delegation to connected exchanges while assets remain in segregated custody—now supporting third-party custodians like BitGo and Komainu. (Copper)

Ideal For. Institutions prioritizing venue risk reduction and capital efficiency.

Standout Features. Multi-custodial support; instant asset delegation; network of venues/prime brokers. (Copper)

Pricing. Varies by participants and volumes.

Availability. Global network; coverage depends on participating venues/custodians.

Worth Noting. Not a full prime broker by itself; pairs well with custody/venues.

Alternatives to Consider. Zodia Interchange, Komainu Connect. (zodia-custody.com)

Zodia Markets + Zodia Custody Interchange — Non-custodial brokerage + off-venue settlement

Overview. Zodia Markets offers institutional brokerage/exchange with non-custodial design, while Zodia Custody’s Interchange provides off-venue settlement (OVS)—trade while assets remain in custody. Registrations span UK, Ireland, ADGM. (Zodia Markets)

Ideal For. Institutions wanting bank-backed governance and segregated settlement flows.

Standout Features. Non-custodial brokerage; Interchange OVS network; institutional registrations. (Zodia Markets)

Pricing. Negotiated.

Availability. UK/EU/MENA institutional coverage (entity-specific). (Zodia Markets)

Worth Noting. Product scope varies by entity and venue integrations.

Alternatives to Consider. OSL, Copper ClearLoop. (osl.com)

Choosing the Right Prime Broker: A Framework

- Regulatory posture needed? If you require US bank-regulated custody, start with Anchorage Digital Prime. For Hong Kong, OSL’s SFC licensing is key. For UK/EU bank-backed governance with off-venue settlement, consider Zodia. (anchorage.com)

- Execution style. For multi-venue aggregation and published fee tiers, Kraken Prime is strong; for RFQ/streaming with electronic options, FalconX leads. sFOX suits agency SOR across many markets. (Kraken)

- Integrated custody + trading. Coinbase Prime and BitGo Prime minimize operational friction by tying trading to qualified custody. (Coinbase)

- Venue-risk reduction. If you want to trade while assets remain in custody, evaluate Copper ClearLoop or Zodia Interchange (and Komainu Connect as a related model). (Copper)

- Instrument breadth and scale. For vast exchange liquidity and institutional programs, Binance Institutional and OKX Institutional are at-scale options (region permitting). (binance.com)

Integrating Token Metrics Intelligence

- Research phase: Use Token Metrics screeners and on-chain/technical indicators to identify pairs suitable for your mandate (beta targets, breadth, liquidity screens).

- Execution phase: Route orders via your prime broker’s APIs (e.g., FIX/WebSocket) selected above, while TM signals inform aggression/passivity and time-of-day tactics. (Kraken)

- Monitoring: Feed fills, slippage, and borrow/financing into TM analytics to assess venue performance and adjust routing.

- Optimization: Combine TM factor views with prime-broker borrow/financing and settlement options (e.g., off-exchange) to lower VaR and operational risk.

Start a Token Metrics trial to bring quantitative screening and scenario analysis into your institutional workflow.

Security & Compliance Guidelines

- Prefer segregated, bankruptcy-remote custody and confirm insurance disclosures where published. (The Digital Asset Infrastructure Company)

- If you need a US bank custodian, validate services by legal entity and read the provider’s disclosures. (anchorage.com)

- Use off-exchange settlement networks to reduce exchange exposure during execution. (Copper)

- Confirm derivatives eligibility, margin terms, and cross-margin/netting treatment by entity/venue. (Kraken)

- Require API keys/roles with least-privilege, HSM custody, and mandatory allow-listing.

- Maintain jurisdictional watchlists and restrict activity accordingly (e.g., US vs non-US entities for global exchanges). (OKX)

Compliance note: This article is for research/education, not financial advice.

Avoiding Common Mistakes

- Treating “prime” as one product—coverage and legal entities differ by service.

- Assuming off-exchange settlement is automatic—confirm network membership and supported venues. (Copper)

- Onboarding to the wrong entity—read the jurisdictional disclosures. (anchorage.com)

- Ignoring negotiated fees—request All-In vs Cost-Plus quotes. (docs.cdp.coinbase.com)

- Skipping disaster-recovery testing—simulate exchange outage + settlement failover.

- Under-documenting OMS/EMS changes—keep audit-ready change logs.

Frequently Asked Questions

What is a crypto prime broker?

A provider that consolidates institutional trading, financing, custody, and settlement, often with multi-venue access and risk controls. Modern variants add off-exchange settlement so assets remain in custody during execution. (zodia-custody.com)

Do prime brokers publish fees?

Often no—fees are negotiated based on volumes, products, and relationship tier. Some venues publish maker/taker schedules with institutional perks. (docs.cdp.coinbase.com)

How does off-exchange settlement work?

Networks like ClearLoop and Interchange let you delegate assets to venues for trading while keeping them in segregated custody, reducing counterparty risk. (Copper)

Which providers are strongest for regulated custody?

Anchorage Digital Prime (US bank custody), BitGo (qualified custody), OSL (HK SFC-licensed custody/exchange), and Zodia (bank-backed with EU/UK registrations). (anchorage.com)

Can U.S. institutions use OKX or Binance?

Access is region-dependent and varies by entity (e.g., OKX US scope). U.S. clients typically cannot use Binance.com and instead must evaluate Binance.US or other U.S.-eligible routes. Always check official disclosures. (OKX)

Final Recommendations

For most global institutions, a two-stack works best: (1) an integrated prime broker with qualified custody (Coinbase Prime, Anchorage Digital Prime, or BitGo Prime) and (2) a venue-risk mitigator like Copper ClearLoop or Zodia Interchange. Execution-heavy funds should add FalconX or Kraken Prime for multi-venue and OTC derivatives coverage, while OSL anchors APAC/HK mandates and OKX/Binance expand exchange reach where eligible. (Coinbase)


Best Yield Marketplaces for Real-World Assets (RWAs) in 2025


- What this guide covers: A comprehensive evaluation of 10 leading platforms that enable tokenized real-world asset trading and yield generation across private credit, treasuries, real estate, and structured finance

- Quick verdict: Ondo Finance for institutional-grade treasury exposure at ~5% APY, Maple Finance for crypto-collateralized credit facilities delivering 9-12% net yields, Goldfinch Prime for diversified access to private credit funds from Apollo and Ares

- One key limitation to know: Many RWA platforms enforce strict accreditation requirements (minimum $50K-200K) and geographic restrictions, particularly excluding US investors from certain products due to securities regulations

Why Yield Marketplaces for RWAs Matter in January 2025

The real-world asset tokenization market has experienced explosive growth, surging from $85 million in April 2020 to over $25 billion by mid-2025, a more than 290-fold increase driven primarily by institutional demand for yield, transparency, and regulatory clarity. Private credit dominates at approximately 61% of total tokenized assets, followed by treasuries at 30%, with major asset managers like BlackRock, Apollo, and Franklin Templeton now actively tokenizing institutional-grade products.

The convergence of traditional finance and decentralized infrastructure has created unprecedented opportunities for investors seeking stable, real-world yields without exposure to cryptocurrency volatility. The tokenized RWA market is projected to reach between $9.43 trillion and $18.9 trillion by 2030, with regulatory frameworks in Singapore, Hong Kong, and Dubai providing clear pathways for compliant innovation.

For crypto-native investors, RWA yield marketplaces offer a critical bridge to sustainable income streams backed by productive assets rather than purely speculative token emissions. Traditional allocators gain 24/7 access to fractional ownership, instant settlement, and programmable compliance—advantages impossible in legacy financial infrastructure.

How We Evaluated These Providers

We assessed platforms across six weighted criteria totaling 100 points:

- Yield Quality & Sustainability (30%): Consistency of returns, asset backing, historical performance, and correlation to traditional markets

- Security & Risk Management (25%): Custody solutions, audit history, legal recourse, collateralization ratios, and default handling protocols

- Asset Coverage & Diversity (15%): Range of underlying assets, tranching options, and exposure across credit curves

- Accessibility & Costs (15%): Minimum investments, fee structures, geographic availability, and KYC requirements

- Liquidity & Redemption (10%): Secondary market depth, withdrawal timelines, and tokenization standards

- Infrastructure & Compliance (5%): Regulatory licenses, blockchain integrations, and institutional partnerships
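The six weights above can be combined into a single 0-100 score. The following is a minimal sketch of one way to do that, not the scoring tool used for this guide: the criterion keys, the 0-1 rating scale, and the sample ratings are all assumptions made for illustration.

```python
# Weights copied from the six criteria listed above; they sum to 100.
# The dictionary keys are hypothetical names chosen for this sketch.
WEIGHTS = {
    "yield_quality": 30,
    "security_risk": 25,
    "asset_coverage": 15,
    "accessibility_costs": 15,
    "liquidity_redemption": 10,
    "infrastructure_compliance": 5,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine per-criterion ratings (each between 0.0 and 1.0) into a 0-100 score."""
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must cover exactly the six criteria")
    for name, value in ratings.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"rating for {name} must be between 0 and 1")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical ratings for an imaginary platform, for illustration only.
example_ratings = {
    "yield_quality": 0.8,
    "security_risk": 0.9,
    "asset_coverage": 0.6,
    "accessibility_costs": 0.5,
    "liquidity_redemption": 0.7,
    "infrastructure_compliance": 1.0,
}
print(round(weighted_score(example_ratings), 1))
```

Because the weights sum to 100, a platform rated 1.0 on every criterion scores exactly 100, which makes scores directly comparable across providers.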

Data sources: Official platform documentation, on-chain analytics from RWA.xyz and DeFiLlama, third-party audits, and regulatory filings. Research conducted October 2024-January 2025.

Verification approach: Every fee structure, yield figure, and regional restriction cited was verified on official platform pages or regulatory disclosures. Unverifiable claims were excluded.

The Contenders: Quick Comparison

Provider Reviews by Use Case

For Treasury-Backed Stability (4-5% Yield)

Top Pick: Ondo Finance — Institutional Treasury Tokenization Pioneer

Overview

Ondo Finance manages over $1.6 billion in tokenized assets, offering institutional-grade access to US Treasury exposure through OUSG (backed by BlackRock's BUIDL fund) and USDY (a yield-bearing stablecoin). The platform bridges TradFi stability with DeFi composability through multi-chain deployment across Ethereum, Solana, Base, and XRP Ledger.

Ideal For

- Institutional allocators seeking low-volatility dollar exposure

- DeFi protocols requiring yield-bearing collateral

- Treasuries managing idle stablecoin holdings

- Investors prioritizing regulatory compliance and brand-name backing

Standout Features

- Approximately 5% APY from short-term US Treasuries with daily interest payouts via rebasing mechanism

- Integration with Ripple's RLUSD stablecoin for instant minting and redemption 24/7

- Comprehensive SEC-registered infrastructure through acquisition of Oasis Pro, including broker-dealer, ATS, and transfer agent licenses

- Strategic partnerships including anchor position in Fidelity's FDIT tokenized money market fund and launch of Ondo Global Markets for tokenized equities

Pricing

Management fees embedded in net yield; typical 0.15-0.30% annual fee depending on product. OUSG requires $100K minimum; USDY accessible at lower thresholds with 4.29% APY.

Availability

Global except US persons for certain products. Ethereum, Solana, Polygon, Aptos, Sei, Base, XRP Ledger, and Sui supported.

Worth Noting

OUSG designed for qualified purchasers with extended lockup periods; less suitable for retail or high-frequency traders. Platform prioritizes institutional relationships over retail accessibility.

Alternatives to Consider: Franklin Templeton BENJI, OpenEden TBILL

Runner-Up: Franklin Templeton BENJI — Legacy Asset Manager Innovation

Overview

Franklin Templeton's $775 million Franklin OnChain U.S. Government Money Fund (BENJI) pioneered tokenized mutual funds in 2021, offering exposure to US government securities across eight blockchains with patent-pending intraday yield calculation.

Ideal For

- Investors seeking a regulated '40 Act (Investment Company Act of 1940) fund structure

- Collateral managers requiring second-by-second yield accrual

- Multi-chain strategies needing broad blockchain compatibility

- Users prioritizing traditional asset manager credibility

Standout Features

- Intraday yield tracking down to the second, enabling proportional earnings even for partial-day holdings

- Direct stablecoin purchases and redemptions with wallet-to-wallet transfer capability

- 63% of AUM deployed on Stellar Network for optimal cost efficiency

- Integrated with DeFi protocols for collateral and leverage applications
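To make the idea of per-second accrual concrete, here is a rough sketch of how proportional earnings for a partial-day holding could be computed. It assumes simple (non-compounding) interest and a 365-day year. It is not Franklin Templeton's patent-pending calculation, and the function name and parameters are hypothetical.

```python
# Assumption for this sketch: a 365-day year with no leap-day adjustment.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def accrued_yield(principal: float, annual_rate: float, seconds_held: int) -> float:
    """Simple (non-compounding) interest earned over a holding period in seconds."""
    if seconds_held < 0:
        raise ValueError("seconds_held must be non-negative")
    return principal * annual_rate * seconds_held / SECONDS_PER_YEAR


# Illustrative figures only: $1,000,000 held for six hours at a 5% annual rate.
six_hours = 6 * 60 * 60
print(round(accrued_yield(1_000_000, 0.05, six_hours), 2))
```

Under these assumptions, a holder who exits after six hours earns one quarter of a full day's yield rather than nothing, which is the practical benefit of second-level tracking for collateral managers.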

Pricing

7-day current yield approximately 4.5-5.5% gross; 0.25% management fee. No minimum investment restrictions beyond standard mutual fund requirements.

Availability

Global availability with specific KYC requirements. Active on Stellar, Ethereum, Arbitrum, Base, Avalanche, Polygon, Aptos, Solana, BNB Chain.

Worth Noting

Traditional mutual fund constraints apply including daily NAV calculations and potential redemption delays during market stress.

Alternatives to Consider: Backed bIB01, Superstate Short Duration Government Securities

For Institutional Credit Exposure (8-12% Yield)

Top Pick: Maple Finance — Premier Crypto-Collateralized Lending

Overview

Maple Finance manages over $4 billion in assets, specializing in institutional over-collateralized lending backed by Bitcoin, Ethereum, Solana, and XRP, targeting $100 billion in annual loan volume by 2030. The platform introduced SyrupUSDC for permissionless retail access alongside KYC-gated institutional pools.

Ideal For

- Institutional lenders seeking exposure to cryptocurrency-backed credit

- Liquidity providers comfortable with smart contract risk

- Allocators targeting floating-rate, senior-secured structures

- Investors seeking alternatives to traditional stablecoin farming

Standout Features

- Consistent 9-12% net yields through over-collateralized institutional facilities and structured credit products

- Strategic integration with Aave's $40 billion protocol bringing syrupUSDT to Plasma instance and syrupUSDC to core markets

- Partnerships with Bitwise, Lido Finance (stETH-backed credit lines), and EtherFi (weETH collateral) expanding institutional adoption

- Robust risk management combining decades of TradFi expertise with real-time on-chain monitoring

Pricing

Variable by pool; typical effective yields 9-12% after protocol fees. Minimum investments pool-dependent, generally $10K-$50K for retail syrup products.

Availability

Global with KYC requirements for lending. Institutional Maple requires accreditation; Syrup.fi open to broader participants.

Worth Noting

Platform transitioned from uncollateralized to over-collateralized model after 2022 market turmoil. Historical defaults impacted certain pools; robust recovery mechanisms now in place.

Alternatives to Consider: Credix (emerging markets), TrueFi (uncollateralized DeFi)

Runner-Up: Goldfinch Prime — Institutional Private Credit Access

Overview

Goldfinch Prime aggregates exposure to multi-billion dollar private credit funds from Ares, Apollo, Golub Capital, and KKR, managers with over $1 trillion in collective AUM, providing on-chain access to institutional-grade senior secured loans.

Ideal For

- Non-US investors seeking diversified private credit exposure

- Allocators targeting institutional fund performance without direct fund access

- Participants seeking uncorrelated yield to crypto markets

- Investors comfortable with longer lockup periods

Standout Features

- Exposure to 1000+ senior secured loans across industries through vetted fund managers with 10+ years experience

- Target returns of 9-12% net of fees with strict eligibility criteria including >90% senior secured portfolios and <0.75% target non-accrual rates

- Heron Finance manages fund selection and vetting while fund managers handle all underwriting and default resolution

- USDC-based deposits converted to proportional GPRIME tokens for continuous offering structure

Pricing

Net yields 9-12% after embedded management fees and profit sharing. Minimum investments vary by pool structure; typically institutional minimums apply.

Availability

Non-US persons only due to regulatory structure. KYC and accreditation verification required.

Worth Noting

Exposure is indirect through fund shares rather than direct loan origination; fund managers absorb individual borrower default risk into aggregate yields. Liquidity limited compared to Treasury products.

Alternatives to Consider: Centrifuge private credit pools, Credix fintech lending

For Real Estate & Diversified Assets (6-10% Yield)

Top Pick: Centrifuge — Multi-Asset Tokenization Infrastructure

Overview

Centrifuge operates as a multichain infrastructure platform that lets asset managers tokenize real estate, trade finance, carbon credits, and structured credit. It holds $1.2 billion in TVL and has recently grown 10x, driven by the first on-chain CLO and a tokenized S&P 500 index fund.

Ideal For

- Investors seeking exposure to asset-backed securities beyond treasuries

- Allocators comfortable evaluating tranched credit structures

- Participants prioritizing transparency and on-chain asset verification

- Portfolio managers wanting diversification across real-world credit types

Standout Features

- Asset-agnostic architecture supporting structured credit, real estate mortgages, US treasuries, carbon credits, and consumer finance with full collateralization and legal recourse for investors

- V3 platform enables cross-chain interoperability across Ethereum, Base, Arbitrum, Avalanche, BNB Chain, and Plume with integrated KYC and compliance-as-a-feature

- Senior/junior tranche structures allowing risk-return customization

- Partnership with Chronicle Labs for real-time price feeds and integration with Circle for USDC on/off-ramping

Pricing

Yields vary by pool and tranche: typically 6-8% for senior tranches, 10-14% for junior tranches. Management fees embedded; pool-specific minimums apply.
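The yield gap between senior and junior tranches reflects the order in which losses are absorbed. The sketch below illustrates the general junior-first waterfall used in tranched credit. It is not Centrifuge's contract logic, and the function name and figures are hypothetical.

```python
def allocate_loss(senior: float, junior: float, loss: float) -> tuple[float, float]:
    """Return (senior_remaining, junior_remaining) after a pool loss.

    The junior tranche absorbs losses first; the senior tranche is only
    touched once the junior tranche is fully wiped out.
    """
    if min(senior, junior, loss) < 0:
        raise ValueError("balances and loss must be non-negative")
    junior_loss = min(loss, junior)
    senior_loss = min(loss - junior_loss, senior)
    return senior - senior_loss, junior - junior_loss


# Illustrative pool: 80 senior, 20 junior (units are arbitrary).
print(allocate_loss(80.0, 20.0, 10.0))  # loss stays within the junior tranche
print(allocate_loss(80.0, 20.0, 30.0))  # loss exceeds the junior tranche
```

In this simplified model the junior tranche acts as a first-loss buffer, which is why junior holders are typically paid a higher yield than senior holders.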

Availability

Global with KYC requirements. Pools may have additional jurisdictional restrictions based on underlying asset location.

Worth Noting

Platform complexity requires understanding of tranched structures and asset-specific risks. Less liquid credit instruments offer less frequent pricing updates than treasury products.

Alternatives to Consider: RealT (US residential real estate focus), Republic (equity crowdfunding)

For Emerging Market Credit (12-18% Yield)

Top Pick: Credix — Latin American Fintech Lending

Overview

Credix specializes in providing credit facilities to fintech lenders in Latin America, offering high-yield exposure to underserved credit markets with institutional-grade underwriting and local market expertise.

Ideal For

- Sophisticated investors seeking higher risk-adjusted returns

- Allocators comfortable with emerging market credit risk

- Participants with extended investment horizons (12-24 months typical)

- Investors seeking geographic diversification from US/EU markets

Standout Features

- Target yields 12-18% reflecting emerging market risk premiums

- Focus on fintech infrastructure enabling financial inclusion

- Partnerships with established Latin American credit originators

- Robust due diligence and monitoring of borrower networks

Pricing

Pool-dependent yields typically 12-18% gross with management fees of 1-2%. Minimum investments generally $50K+ for accredited investors.

Availability

Non-US persons primarily; requires accreditation verification and enhanced KYC given emerging market exposure.

Worth Noting

Higher yields reflect higher credit and currency risk. Platform experienced challenges during regional economic volatility; enhanced risk controls now implemented.

Alternatives to Consider: Goldfinch V1 pools (emerging market focus), TrueFi uncollateralized pools

For Tokenization Infrastructure (Platform Providers)

Top Pick: Securitize — Industry-Leading Issuance Platform

Overview

Securitize has tokenized over $4.5 billion in assets for 1.2 million investors across 3,000+ clients, operating as an SEC-registered transfer agent with integrated ATS for secondary trading. The platform powers major offerings including BlackRock's BUIDL fund.

Ideal For

- Asset managers seeking turnkey tokenization solutions

- Issuers requiring SEC-compliant digital security infrastructure

- Institutional clients needing integrated custody and compliance

- Projects prioritizing regulatory alignment and brand credibility

Standout Features

- DS Protocol automates token issuance, transfer agent functions, and regulated secondary trading; the company is backed by $47 million in funding led by BlackRock

- Recent launch of STAC tokenized AAA CLO fund with BNY custody demonstrating institutional adoption trajectory

- Comprehensive investor onboarding, KYC/AML, and reporting infrastructure

- Partnerships with major asset managers and integration with Zero Hash for fiat conversion

Pricing

Platform fees vary by issuance size and complexity; typically 0.5-2% of AUM plus basis point fees on transactions. White-label solutions available for enterprise clients.

Availability

Global operations with jurisdiction-specific compliance modules. Primary focus on US, EU, and APAC institutional markets.

Worth Noting

Securitize is an issuance platform rather than direct investment product; investors access opportunities through partner funds and offerings launched on the infrastructure.

Alternatives to Consider: Tokeny (EU-focused), Polymath/Polymesh (security token specialists)

Complete Provider Directory

- Backed Finance: European MiCA-compliant tokenization platform offering bIB01 (short-term US Treasury ETF exposure) and bC3M (short-term euro government bond ETF exposure). Strong focus on regulatory alignment with modest 3-5% yields and low entry barriers.

- Swarm Markets: Decentralized platform enabling fractional ownership of real estate and private credit with $1K-$50K minimums. Emphasizes accessibility while maintaining compliance through distributed infrastructure.

- TrueFi: Uncollateralized lending protocol using on-chain credit scoring and portfolio diversification. Higher risk-return profile (8-15% targets) with full transparency of borrower pools and performance metrics.

- Archax: UK FCA-regulated platform for institutional tokenization of bonds, funds, and money markets. Focus on traditional finance compatibility with stringent accreditation requirements.

Making Your Selection: Key Questions

- What yield stability do you require?

Treasury-backed platforms (Ondo, Franklin Templeton) offer predictable 4-5% returns with minimal volatility. Credit-focused platforms (Maple, Goldfinch) target 9-12% but introduce credit risk and performance variability.

- What is your risk tolerance for underlying collateral?

Government securities provide lowest risk; over-collateralized crypto loans add smart contract and liquidation risks; uncollateralized emerging market credit carries highest default potential.

- Do you meet accreditation and minimum investment thresholds?

Many institutional products require $50K-$200K minimums and qualified purchaser status. Retail-accessible options like USDY and syrupUSDC lower barriers but may sacrifice yield.

- What liquidity do you need?

Treasury tokens generally offer daily liquidity; private credit pools may have quarterly redemption windows; real estate tokenization can involve 12-24 month lockups.

- How important is regulatory compliance?

Platforms like Ondo, Franklin Templeton, and Securitize prioritize SEC registration and traditional fund structures. Purely DeFi-native protocols offer less regulatory clarity but greater composability.

- What blockchain ecosystems do you operate in?

Multi-chain deployment (Ondo, Centrifuge) provides flexibility; single-chain specialization (some Maple pools) may offer optimization but limits interoperability.

- Do you have geographic restrictions?

US persons often excluded from highest-yield opportunities due to securities laws. European investors benefit from MiCA framework; APAC sees growing regulatory clarity.

- What level of transparency do you require?

On-chain native protocols offer real-time asset verification; hybrid models may rely on periodic attestations and third-party audits.

- Are you seeking passive income or active yield strategies?

Treasury staking provides set-and-forget returns; DeFi integrations enable leverage, collateral strategies, and yield optimization requiring active management.

- How much operational complexity can you handle?

Single-platform solutions simplify but limit optionality; multi-protocol strategies maximize returns but require gas management, tax tracking, and security across multiple platforms.

Maximizing Your Setup with Token Metrics

Token Metrics provides critical intelligence for navigating RWA yield opportunities through data-driven analysis and market insights.

Research Phase: Leverage Token Metrics' fundamental analysis to evaluate tokenized asset protocols, comparing on-chain metrics, TVL trends, and yield sustainability indicators. Our RWA sector reports identify emerging platforms and highlight regulatory developments across jurisdictions.

Portfolio Construction: Use quantitative scoring to allocate across risk tiers—balancing stable treasury exposure with higher-yield credit products. Token Metrics' portfolio tools help optimize diversification while monitoring correlation to traditional crypto markets.

Ongoing Monitoring: Real-time alerts notify you of yield changes, protocol upgrades, and risk events. Our dashboard aggregates performance across multiple RWA platforms, tracking your yield-bearing positions alongside broader cryptocurrency holdings.

Risk Management: Token Metrics' risk analytics assess smart contract security, custody arrangements, and counterparty exposure. Our reports flag platforms with concerning yield sustainability metrics or operational red flags before they impact your capital.

Start your Token Metrics free trial to access institutional-grade RWA research and optimize your real-world asset allocation.

Essential Security Practices

- Custody Verification: Confirm that platforms use institutional-grade custodians (Coinbase Custody, BitGo, Fireblocks) for underlying assets. Verify proof-of-reserve attestations and third-party audits.

- Smart Contract Risk: Review audit reports from reputable firms (Trail of Bits, OpenZeppelin, Certora). Understand upgrade mechanisms and timelock protections on protocol contracts.

- Legal Structure Assessment: Examine SPV formation, bankruptcy remoteness, and investor recourse mechanisms. Ensure tokenization structure provides actual legal claim on underlying assets, not just economic exposure.

- Regulatory Compliance: Verify platforms maintain necessary licenses (broker-dealer, transfer agent, ATS) in relevant jurisdictions. Confirm offerings comply with securities laws in your domicile.

- Counterparty Risk: Understand who services loans, manages defaults, and handles liquidations. Evaluate track records of asset originators and credit underwriters.

- Liquidity Risk Management: Never allocate more than you can afford to lock up for stated redemption periods. Maintain buffer capital for market volatility and potential redemption delays.

- Tax Implications: Consult tax professionals on treatment of tokenized yield—may be classified as interest income, dividends, or capital gains depending on structure and jurisdiction.

- Geographic Restrictions: Verify you're eligible to participate based on residency. Using VPNs or misrepresenting location can result in frozen funds and legal liability.

- Platform Concentration: Diversify across multiple RWA providers to reduce single-point-of-failure risk. No single platform should represent majority of yield allocation.

- Documentation: Maintain records of all transactions, yield distributions, and platform communications for tax reporting and potential disputes.
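The platform-concentration guideline above can be checked mechanically. The following is a small sketch, assuming "majority" means more than 50% of total allocated capital. The function name, the threshold parameter, and the sample allocation are hypothetical.

```python
def concentration_flags(allocations: dict[str, float], limit: float = 0.5) -> dict[str, float]:
    """Return each platform whose share of total allocation exceeds `limit`."""
    total = sum(allocations.values())
    if total <= 0:
        raise ValueError("total allocation must be positive")
    shares = {name: amount / total for name, amount in allocations.items()}
    return {name: share for name, share in shares.items() if share > limit}


# Hypothetical portfolio in USD, for illustration only.
portfolio = {"Platform A": 60_000, "Platform B": 25_000, "Platform C": 15_000}
print(concentration_flags(portfolio))
```

An empty result means no single platform holds a majority of the allocation. Lowering `limit` gives a stricter check.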

This article is for research and educational purposes, not financial advice. RWA investments carry risks including loss of principal.

What to Avoid

- Ignoring minimum holding periods: Many RWA products enforce lockups or redemption windows. Treating them as liquid positions can leave you unable to access capital when needed.

- Chasing unsustainably high yields: Promised returns significantly above market rates often indicate excessive risk. Verify underlying asset performance and historical consistency before committing capital.

- Overlooking platform liquidity: Token existence on-chain doesn't guarantee exit liquidity. Check secondary market depth and historical spread between minting and redemption prices.

- Neglecting jurisdictional compliance: Accessing platforms not available in your region can result in frozen accounts and regulatory penalties. Always verify eligibility before depositing funds.

- Underestimating smart contract risk: Even well-audited protocols face exploitation risk. Size positions appropriately and monitor security incident disclosures across the ecosystem.

- Failing to verify asset backing: Don't rely on marketing claims. Demand proof-of-reserve, third-party attestations, and transparent reporting on underlying collateral.

- Concentrating in single asset class: Over-allocating to one RWA category (e.g., all private credit) eliminates diversification benefits. Balance across treasuries, credit, and real estate where possible.

- Ignoring fee structures: Management fees, performance fees, and transaction costs compound over time. Calculate net yields after all costs when comparing platforms.
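As a rough illustration of the net-yield comparison recommended above, the sketch below subtracts a management fee from gross yield and then applies a performance fee to whatever remains. This fee model is an assumption made for illustration; actual platforms may charge fees on a different basis. The inputs are figures taken from the ranges quoted in this guide, plus a hypothetical 10% performance fee.

```python
def net_yield(gross: float, management_fee: float = 0.0, performance_fee: float = 0.0) -> float:
    """Annual net yield as a decimal under a simplified fee model.

    management_fee is an annual rate deducted from gross yield;
    performance_fee is the share of the remaining positive yield kept by the manager.
    """
    after_management = gross - management_fee
    if after_management <= 0:
        return after_management  # no performance fee on a non-positive return
    return after_management * (1.0 - performance_fee)


# 5.0% gross with a 0.25% management fee (within the treasury fund range quoted above).
treasury_net = net_yield(0.05, management_fee=0.0025)
# 15% gross with a 1.5% management fee and a hypothetical 10% performance fee.
credit_net = net_yield(0.15, management_fee=0.015, performance_fee=0.10)
print(f"{treasury_net:.4%}", f"{credit_net:.4%}")
```

Comparing platforms on this net figure rather than the headline rate keeps a high-fee, high-gross product from looking better than it is.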

Reader Questions Answered

What's the difference between tokenized treasuries and yield-bearing stablecoins?

Tokenized treasuries (like OUSG) represent direct ownership of US Treasury securities with yield passed through to holders. Yield-bearing stablecoins (like USDY) maintain $1 peg while distributing treasury yields via rebasing or airdrops. Treasuries may fluctuate slightly with NAV; yield stablecoins prioritize price stability.

Are RWA yields taxable differently than crypto staking rewards?

Likely yes, though tax treatment varies by jurisdiction. RWA yields from treasuries or credit facilities often classified as interest income taxed at ordinary rates. Crypto staking may be treated as income at receipt or capital gains at sale depending on location. Consult tax professionals for specific guidance.

Can I use tokenized RWAs as collateral in DeFi protocols?

Increasingly yes. Platforms like Maple, Ondo (via Flux Finance), and Centrifuge enable using tokenized assets as DeFi collateral. However, support varies by protocol and asset—USDY and OUSG see broader integration than niche real estate tokens. Liquidation risks apply if collateral value drops.

What happens if the platform goes bankrupt?

Properly structured tokenization isolates assets in bankruptcy-remote SPVs, protecting investor claims even if platform operator fails. However, redemption processes may be delayed and require legal navigation. This is why verifying legal structure and custodial arrangements is critical.

How liquid are RWA tokens compared to stablecoins?

Significantly less liquid. While stablecoins have billions in daily DEX volume, most RWA tokens trade thinly or only through platform-controlled redemption mechanisms. Treasury tokens offer better liquidity than private credit or real estate, but all lag traditional stablecoins substantially.

Do I need to be an accredited investor?

Depends on the platform and your location. US-based offerings often require accreditation; international platforms may have lower barriers. Products like USDY, syrupUSDC, and BENJI target broader accessibility, while institutional credit pools restrict to qualified purchasers.

What are the main risks that could cause loss of principal?

(1) Underlying asset default (credit risk), (2) smart contract exploitation, (3) platform insolvency without proper asset segregation, (4) regulatory action freezing operations, (5) stablecoin de-pegging for yield products denominated in USDC/USDT, (6) liquidation cascades in collateralized structures.

How do yields compare to traditional finance alternatives?

Tokenized treasuries (4-5%) match conventional money market funds but with 24/7 access. Private credit RWAs (9-12%) offer premiums over corporate bonds due to illiquidity and platform novelty. Emerging market RWA yields (12-18%) comparable to EM debt funds with added smart contract risk.

Bottom Line

Real-world asset yield marketplaces represent the maturation of blockchain infrastructure beyond speculation into productive finance. For investors seeking stable, asset-backed returns uncorrelated to cryptocurrency volatility, platforms like Ondo Finance and Franklin Templeton deliver institutional-grade treasury exposure with DeFi composability. Those comfortable with credit risk can access superior yields through Maple Finance's collateralized institutional lending or Goldfinch Prime's diversified private credit exposure.

The key to success: match platform selection to your specific risk tolerance, liquidity needs, and regulatory constraints. Treasury products suit conservative allocators prioritizing capital preservation; credit platforms reward investors accepting higher risk for enhanced returns; multi-asset infrastructure like Centrifuge offers diversification across real-world credit types.

Start with smaller allocations to understand platform mechanics, redemption processes, and yield consistency before committing substantial capital. The RWA sector's rapid growth will continue to create opportunities but will also attract lower-quality offerings, so due diligence remains paramount.

Related Resources:

- Best Cryptocurrency Exchanges 2025

- Top Derivatives Platforms 2025

- Top Institutional Custody Providers 2025

About This Research

Methodology: This guide evaluated 20+ RWA platforms through analysis of official documentation, on-chain data, regulatory filings, and third-party audits. Platforms selected represent meaningful TVL (>$100M), regulatory compliance, and operational track record. Final selection prioritized diversity across asset classes and investor accessibility tiers.

Research Sources:

- Ondo Finance: Product documentation, tokenomics, OUSG/USDY specifications, Oasis Pro acquisition disclosure

- Maple Finance: Platform whitepaper, syrupUSDC mechanics, Aave integration announcement, CEO interviews

- Centrifuge: V3 technical documentation, pool analytics, Republic partnership details

- Goldfinch: Prime pool specifications, fund manager criteria, Heron Finance structure

- Franklin Templeton: BENJI platform technical details, intraday yield patent documentation, multi-chain deployment

- Securitize: DS Protocol documentation, STAC fund launch details, BlackRock partnership announcements

- RWA.xyz: Platform TVL data, tokenization metrics, market growth analysis

- DeFiLlama: Protocol TVL tracking, historical performance data

- BCG/Ripple: RWA market projections and industry research

- VanEck, Coinbase, Binance: Sector research reports on RWA growth trajectories

All data current as of January 2025. Platform features and yields subject to change; verify current terms on official websites before investing.

9450 SW Gemini Dr

PMB 59348

Beaverton, Oregon 97008-7105 US


Token Metrics Media LLC is a regular publication of information, analysis, and commentary focused especially on blockchain technology and business, cryptocurrency, blockchain-based tokens, market trends, and trading strategies.

Token Metrics Media LLC does not provide individually tailored investment advice and does not take a subscriber’s or anyone’s personal circumstances into consideration when discussing investments; nor is Token Metrics Advisers LLC registered as an investment adviser or broker-dealer in any jurisdiction.

Information contained herein is not an offer or solicitation to buy, hold, or sell any security. The Token Metrics team has advised and invested in many blockchain companies. A complete list of their advisory roles and current holdings can be viewed here: https://tokenmetrics.com/disclosures.html/

Token Metrics Media LLC relies on information from various sources believed to be reliable, including clients and third parties, but cannot guarantee the accuracy and completeness of that information. Additionally, Token Metrics Media LLC does not provide tax advice, and investors are encouraged to consult with their personal tax advisors.

All investing involves risk, including the possible loss of money you invest, and past performance does not guarantee future performance. Ratings and price predictions are provided for informational and illustrative purposes, and may not reflect actual future performance.
