What is Proof of Work Versus Proof of Stake: The Complete 2025 Guide to Blockchain Consensus

The blockchain industry has seen a profound evolution in how decentralized systems secure transactions and maintain consensus. As we move through 2025, understanding the difference between proof of work and proof of stake remains essential for anyone involved in the cryptocurrency industry.

At first glance, proof of work and proof of stake may appear similar, since both are consensus mechanisms, but how they operate and what they imply for a network differ significantly.

These two consensus mechanisms serve as the backbone of blockchain technology, each with unique benefits, trade-offs, and implications for network security, energy usage, and scalability. This comprehensive guide explores the fundamentals of Proof of Work (PoW) and Proof of Stake (PoS), their differences, and their impact on the future of blockchain networks.

Introduction to Blockchain Consensus

Blockchain consensus mechanisms are the foundation of decentralized systems, ensuring that all participants in a network agree on the validity of transactions without relying on a central authority. These mechanisms are responsible for validating new transactions, adding them to the blockchain, and creating new tokens in a secure and transparent manner. By eliminating the need for a single controlling entity, consensus mechanisms like proof of work and proof of stake enable trustless collaboration and robust network security.

Each consensus mechanism takes a different approach to achieving agreement and maintaining the integrity of the blockchain. Proof of work relies on energy-intensive computation, while proof of stake leverages financial incentives and staking to secure the network. Both systems are designed to prevent fraud, double-spending, and other malicious activities, ensuring that only valid transactions are recorded. As we explore these mechanisms in detail, we’ll examine their impact on energy consumption, decentralization, and the overall security of blockchain networks.

Understanding Proof of Work: The Pioneer Consensus Mechanism

Proof of Work is the original consensus mechanism that launched with the first cryptocurrency, Bitcoin, in 2009. At its core, PoW relies on miners using computational power to solve cryptographic puzzles. Miners compete by expending electricity and processing power to find a valid hash that meets the network’s difficulty criteria. The first miner to solve the puzzle earns the right to add the next block to the blockchain and receive block rewards alongside transaction fees.

This mining process requires specialized hardware such as Application-Specific Integrated Circuits (ASICs) or powerful graphics processing units (GPUs), which perform trillions of calculations per second. The network automatically adjusts the puzzle difficulty (on Bitcoin, once every 2,016 blocks, or roughly every two weeks) to maintain a steady rate of adding blocks, so that new blocks are created approximately every 10 minutes on the Bitcoin network.
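The difficulty adjustment described above can be sketched in a few lines. This is a simplified illustration rather than Bitcoin's actual consensus code: the function name `retarget` and the use of a floating-point "difficulty" are assumptions made for readability, while the 2,016-block window, the 10-minute target, and the factor-of-four clamp follow Bitcoin's published rules.

```python
TARGET_BLOCK_SECONDS = 600   # roughly 10 minutes per block
RETARGET_INTERVAL = 2016     # blocks per adjustment window on Bitcoin
MAX_ADJUSTMENT = 4           # each adjustment is clamped to 4x up or down


def retarget(old_difficulty: float, actual_seconds: float) -> float:
    """Return a new difficulty given how long the last window really took.

    Hypothetical helper: real nodes adjust an integer target, not a float.
    """
    if actual_seconds <= 0:
        raise ValueError("actual_seconds must be positive")
    expected_seconds = TARGET_BLOCK_SECONDS * RETARGET_INTERVAL
    # Blocks arrived too fast -> ratio > 1 -> difficulty rises, and vice versa.
    ratio = expected_seconds / actual_seconds
    ratio = max(1 / MAX_ADJUSTMENT, min(MAX_ADJUSTMENT, ratio))
    return old_difficulty * ratio
```

If the last window took half the expected time, the difficulty doubles; if miners leave and blocks slow down, the difficulty falls so that the 10-minute average is restored.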

Key Characteristics of Proof of Work:

- Security Through Energy and Computational Power: PoW’s security model is based on the enormous amount of computational work and electricity required to attack the network. To successfully manipulate the blockchain, a malicious actor would need to control more than 50% of the total mining power, which is prohibitively expensive and resource-intensive. This makes the Bitcoin network, for example, extremely resilient to attacks and invalid blocks.

- Decentralized System: In theory, anyone with the necessary hardware and electricity can participate in mining, promoting decentralization. As more miners join, the total hash power securing the network grows, but so does its energy consumption. In practice, mining pools and industrial-scale operations have concentrated significant computational power, raising concerns about centralization.

- High Energy Consumption: PoW’s reliance on computational power results in significant energy usage and power consumption. Critics highlight the environmental impact due to electricity consumption, sometimes comparable to that of small countries. Nevertheless, proponents argue that mining incentivizes the use of renewable energy and can utilize off-peak or otherwise wasted electricity.

- Proven Track Record: PoW’s robustness is demonstrated by Bitcoin, which has operated since 2009 without a successful 51% attack on its chain, making it the most battle-tested consensus mechanism in the cryptocurrency industry.
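To see why the 50% threshold in the first characteristic matters, the simplified random-walk model from the Bitcoin whitepaper can be written out directly. The sketch below is an illustration under that model's assumptions (a constant attacker share and no network delays); the function name `catch_up_probability` is hypothetical.

```python
def catch_up_probability(attacker_share: float, blocks_behind: int) -> float:
    """Chance that an attacker ever catches up from `blocks_behind` blocks back.

    attacker_share is the attacker's fraction q of total hash power;
    honest miners hold p = 1 - q.
    """
    if not 0 <= attacker_share <= 1:
        raise ValueError("attacker_share must be between 0 and 1")
    if blocks_behind < 0:
        raise ValueError("blocks_behind must be non-negative")
    q = attacker_share
    p = 1 - q
    if q >= p:
        # With half or more of the hash power, catching up is certain.
        return 1.0
    return (q / p) ** blocks_behind
```

Under this model, an attacker with 10% of the hash power who is six blocks behind succeeds with a probability of about two in a million, while any attacker at or above 50% eventually succeeds.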

Bitcoin’s Consensus Mechanism: The Gold Standard in Practice

Bitcoin, the first cryptocurrency, set the standard for blockchain consensus with its innovative use of proof of work. In this system, miners harness significant computing power to compete for the opportunity to add new blocks to the blockchain. Each miner gathers pending transactions into a block and works to solve a cryptographic puzzle, which involves finding a specific nonce that satisfies the network’s difficulty requirements. This process demands repeated trial and error, consuming substantial energy and processing resources.

Once a miner discovers a valid solution, the new block is broadcast to the network, where other nodes verify its accuracy before adding it to their own copy of the blockchain. The successful miner is rewarded with newly minted bitcoins and transaction fees, incentivizing continued participation and network security. Since its launch in 2009, Bitcoin’s proof of work consensus mechanism has proven remarkably resilient, maintaining a secure and decentralized network. However, the high energy consumption required to solve these cryptographic puzzles has sparked ongoing debate about the environmental impact of this approach.
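The nonce search and the verification step described above can be illustrated with a toy miner. This is a minimal sketch, not Bitcoin's real block format: the header is a plain string, the difficulty is expressed as a number of leading zero bits, and the names `block_hash`, `mine`, and `verify` are assumptions for illustration. The double SHA-256 hash mirrors what Bitcoin uses.

```python
import hashlib
from typing import Optional


def block_hash(header: str, nonce: int) -> int:
    """Double SHA-256 of a toy header plus nonce, returned as an integer."""
    first = hashlib.sha256(f"{header}{nonce}".encode()).digest()
    return int.from_bytes(hashlib.sha256(first).digest(), "big")


def mine(header: str, difficulty_bits: int, max_nonce: int = 2**32) -> Optional[int]:
    """Try nonces in order until the hash falls below the target."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        if block_hash(header, nonce) < target:
            return nonce
    return None  # no valid nonce in the search range


def verify(header: str, nonce: int, difficulty_bits: int) -> bool:
    """Other nodes need only one hash to check the miner's work."""
    return block_hash(header, nonce) < (1 << (256 - difficulty_bits))
```

The asymmetry is the point: `mine` needs on the order of 2^difficulty_bits attempts, while `verify` needs a single hash, so finding a block is expensive and checking one is cheap.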

Understanding Proof of Stake: The Energy-Efficient Alternative

Proof of Stake emerged as a more energy-efficient alternative to PoW, addressing the concerns related to energy cost and environmental impact. Instead of miners competing with computational power, PoS relies on validators, who are selected to create new blocks based on the amount of cryptocurrency they hold and lock up as a stake. This stake acts as collateral, incentivizing honest behavior because validators risk losing their stake if they attempt to validate fraudulent transactions, behave maliciously, or go offline.

Validators are chosen through a selection process that combines factors such as stake size, randomization, and sometimes the age of coins. Once selected, a validator proposes a new block, which must be accepted by other validators before being finalized: a threshold number of validator attestations is required before the block is added to the blockchain. Validators are responsible for verifying the transactions in each block before it is added. To take part, validators submit staking transactions that lock up their tokens, which makes them eligible to validate transactions and earn rewards.
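The selection and attestation steps above can be sketched as stake-weighted random sampling followed by a threshold check. This is an illustrative model only: real networks derive randomness from protocol-level sources rather than a local generator, and the two-thirds default threshold is an assumption borrowed from Ethereum-style finality. The names `select_proposer` and `is_accepted` are hypothetical.

```python
import random
from typing import Dict


def select_proposer(stakes: Dict[str, float], rng: random.Random) -> str:
    """Pick one validator with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[v] for v in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


def is_accepted(attesting_stake: float, total_stake: float,
                threshold: float = 2 / 3) -> bool:
    """A block is accepted once enough stake has attested to it."""
    return attesting_stake >= threshold * total_stake
```

With stakes of 1 and 3, the larger validator is chosen about three times out of four, which is the sense in which stake size drives selection while randomization keeps the outcome unpredictable.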

Essential Features of Proof of Stake:

- Drastic Reduction in Energy Consumption: Compared to PoW, PoS systems require dramatically less electricity because they do not rely on solving energy-intensive puzzles. Ethereum’s switch from PoW to PoS cut the network’s energy usage by an estimated 99.9% or more, setting a benchmark for sustainable blockchain technology.

- Lower Hardware Requirements: Validators do not need expensive mining rigs or massive computational power. Instead, anyone holding the predetermined amount of native cryptocurrency can participate, potentially enhancing decentralization and accessibility.

- Economic Security Through Staking: Validators have a financial incentive to act honestly because misbehavior can lead to losing their staked tokens through penalties known as slashing. This aligns the interests of validators with the network’s health and security.

- Improved Scalability and Performance: PoS networks typically support faster transaction processing and higher throughput, enabling more efficient blockchain transactions and supporting complex features like smart contracts.
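The slashing penalty mentioned in the list above can be modeled as a deduction from a validator's stake, with ejection once the stake drops below a minimum. The sketch below is a toy model: the `Validator` class, the `slash` function, and the penalty fraction and minimum stake used in the example are all illustrative assumptions, not any network's actual parameters.

```python
from dataclasses import dataclass


@dataclass
class Validator:
    name: str
    stake: float
    active: bool = True


def slash(validator: Validator, fraction: float, min_stake: float) -> float:
    """Burn a fraction of the stake; deactivate if it falls below min_stake.

    Returns the amount removed from the validator's stake.
    """
    if not 0 <= fraction <= 1:
        raise ValueError("fraction must be between 0 and 1")
    penalty = validator.stake * fraction
    validator.stake -= penalty
    if validator.stake < min_stake:
        validator.active = False
    return penalty
```

For example, slashing half of a 32-token stake with a 32-token minimum removes 16 tokens and takes the validator out of the active set, which is what makes misbehavior directly costly.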

Work and Proof in Blockchain Consensus

At the heart of blockchain technology are consensus mechanisms that guarantee the security and reliability of decentralized networks. Proof of work and proof of stake represent two distinct approaches to achieving consensus. In proof of work, network participants, known as miners, use computational power to solve complex puzzles, a process that requires significant energy and resources. This model ensures that adding new blocks to the blockchain is both challenging and costly, deterring malicious actors.

In contrast, proof of stake introduces a more energy-efficient system by selecting validators based on the amount of cryptocurrency they are willing to stake as collateral. Instead of relying on raw computational power, validators in a proof of stake system are chosen to validate transactions and create new blocks according to their staked amount, reducing the need for excessive energy consumption. The fundamental trade-off between these consensus mechanisms lies in their approach to network security: proof of work relies on the cost of computational effort, while proof of stake relies on financial incentives that reward honest behavior and penalize misbehavior. Understanding these differences is crucial for evaluating which system best fits the needs of various blockchain networks and applications.

The Great Migration: Ethereum's Historic Transition

A landmark event in the PoW vs PoS debate was Ethereum's switch from Proof of Work to Proof of Stake in September 2022, known as "The Merge." This transition transformed the Ethereum network, the second-largest blockchain platform, by eliminating its energy-intensive mining operations and adopting a PoS consensus mechanism.

Ethereum’s move to PoS not only resulted in a drastic reduction in energy consumption but also unlocked new possibilities such as liquid staking derivatives. These innovations allow users to stake their ETH while maintaining liquidity, enabling participation in DeFi applications without sacrificing staking rewards.

The transition has inspired other blockchain projects to explore PoS or hybrid consensus models, combining the security strengths of PoW with the energy efficiency and scalability of PoS. Ethereum’s successful upgrade stands as a powerful example of how major networks can evolve their consensus mechanisms to meet future demands.

Comparative Analysis: Security, Decentralization, and Performance

When comparing proof of work versus proof of stake, several critical factors emerge:

- Security Models: PoW’s security is rooted in the economic and physical costs of computational work, making attacks costly. Bitcoin’s proof of work chain has never suffered a successful 51% attack, although smaller PoW networks with far less hash power, such as Ethereum Classic and Bitcoin Gold, have. PoS secures the network economically through validators’ staked assets, where dishonest behavior results in financial penalties. Both models have proven effective but rely on different mechanisms to incentivize honest behavior.

- Environmental Impact: PoW networks consume more energy due to mining operations. Proof of work's high energy consumption is a direct result of its security model, which requires significant computational resources. PoS systems are markedly more energy efficient, appealing to sustainability-conscious users and regulators.

- Economic Incentives and Costs: PoW miners face ongoing expenses for hardware and electricity to maintain mining operations. PoS validators earn rewards by locking up their stake and risk losing it if they act maliciously. These differences create distinct economic dynamics and barriers to entry.

- Decentralization Considerations: While PoW mining pools have centralized some hash power, PoS systems can also concentrate power if large amounts of stake accumulate in a single entity or staking pool. Both systems must carefully balance decentralization with efficiency.

- Performance and Scalability: PoS generally offers faster transaction times and better scalability, supporting higher throughput and more complex blockchain applications than many PoW networks.
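The concentration concern raised under decentralization is often summarized with a single number, commonly called the Nakamoto coefficient: the smallest number of entities (mining pools or staking pools) that together exceed a control threshold. The sketch below is a minimal version, assuming the shares are already aggregated per entity; the function name and the sample figures in the usage note are illustrative, not real network data.

```python
from typing import Sequence


def nakamoto_coefficient(shares: Sequence[float], threshold: float = 0.5) -> int:
    """Smallest number of entities whose combined share exceeds the threshold."""
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must sum to a positive value")
    running = 0.0
    # Add the largest holders first until they pass the threshold.
    for count, share in enumerate(sorted(shares, reverse=True), start=1):
        running += share
        if running > threshold * total:
            return count
    return len(shares)
```

With hypothetical shares of 40, 30, 20, and 10, the two largest entities already hold more than half, so the coefficient is 2. The same function applies to hash power in PoW and to stake in PoS, which makes it a convenient way to compare the two.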

The Impact of Energy Consumption and Environmental Considerations

Energy consumption has become a defining issue in the debate over blockchain consensus mechanisms. Proof of work networks, such as Bitcoin, are known for their high energy requirements, with the total power consumption of the network often surpassing that of small countries. This significant energy usage is a direct result of the computational power needed to solve cryptographic puzzles and secure the network, leading to concerns about greenhouse gas emissions and environmental sustainability.

In response, proof of stake mechanisms have been developed to offer a more energy-efficient alternative. By eliminating the need for energy-intensive mining, proof of stake drastically reduces the carbon footprint of blockchain technology. The Ethereum network’s September 2022 transition from proof of work to proof of stake serves as a prime example, resulting in a dramatic reduction in energy consumption and setting a new standard for sustainable blockchain development. As the cryptocurrency industry continues to grow, environmental considerations are becoming increasingly important, driving innovation in consensus mechanisms that prioritize both security and sustainability.

More Energy-Intensive Consensus Mechanisms

While proof of work remains the most prominent example of an energy-intensive consensus mechanism, it is not the only one that requires participants to commit substantial physical resources. Other mechanisms, such as proof of capacity and proof of space, secure the network by having participants dedicate large amounts of disk storage rather than continuous computation. These designs are generally presented as less power-hungry than proof of work, but the hardware they tie up still carries an energy and resource cost.

As the demand for more sustainable blockchain solutions increases, the industry is actively exploring alternative consensus mechanisms that can deliver robust security without excessive energy costs. Hybrid models that combine elements of proof of work and proof of stake are emerging as promising options, aiming to balance the trade-offs between security, decentralization, and energy efficiency. The future of blockchain consensus will likely be shaped by ongoing research and development, as networks seek to create systems that are both secure and environmentally responsible, ensuring the long-term viability of decentralized technologies.

Current Market Landscape and Adoption Trends

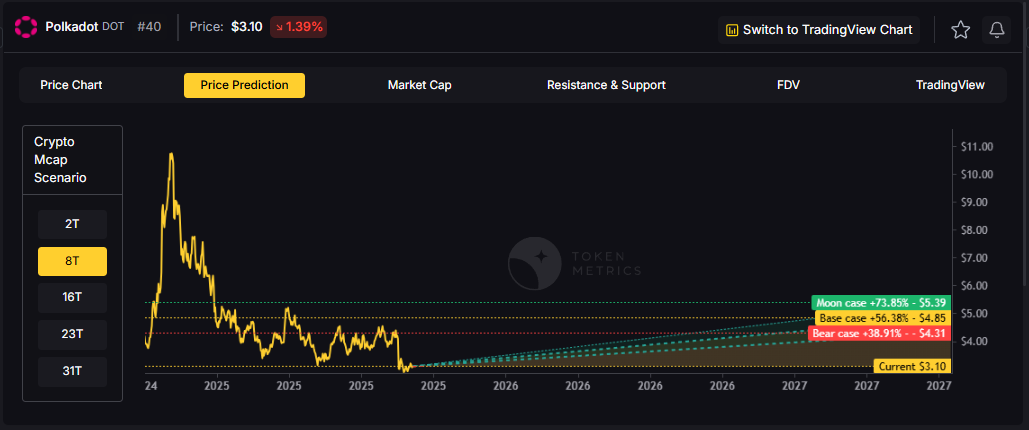

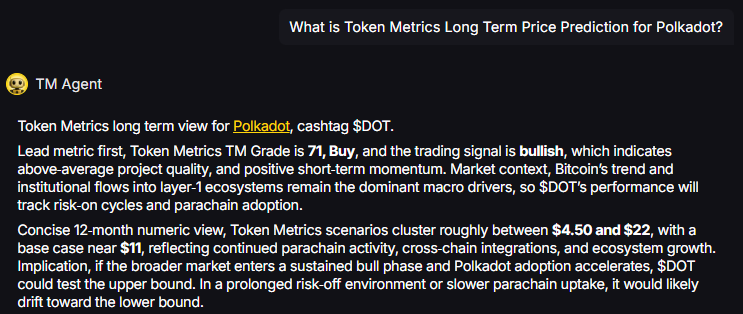

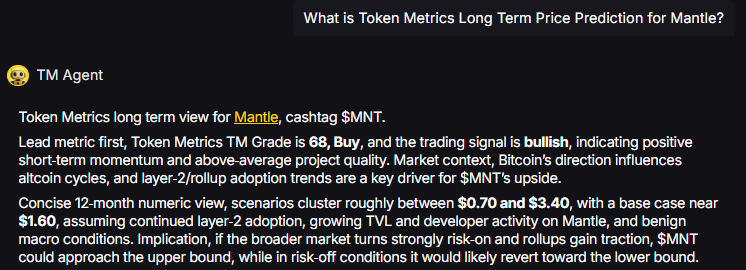

In 2025, the cryptocurrency ecosystem shows a clear trend toward adopting PoS or hybrid consensus mechanisms among new blockchain projects. The appeal of reduced energy cost, scalability, and lower hardware requirements drives this shift. Networks like Cardano, Solana, and Polkadot utilize PoS or variations thereof, emphasizing energy efficiency and performance.

Conversely, Bitcoin remains steadfast in its commitment to PoW, with its community valuing the security and decentralization benefits despite the environmental concerns. This philosophical divide between PoW and PoS communities continues to shape investment strategies and network development.

Hybrid models that integrate both PoW and PoS elements are gaining attention, aiming to combine the security of computational work systems with the efficiency of stake systems. These innovations reflect ongoing experimentation in the cryptocurrency industry’s quest for optimal consensus solutions.

Professional Tools for Consensus Mechanism Analysis

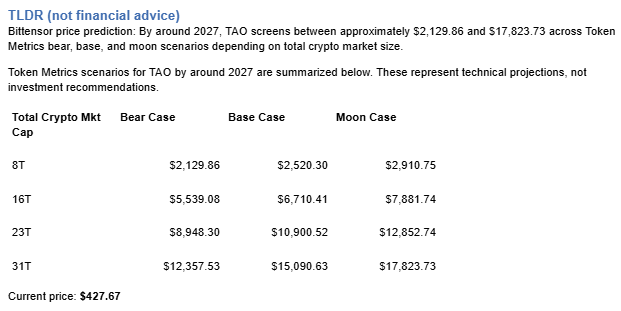

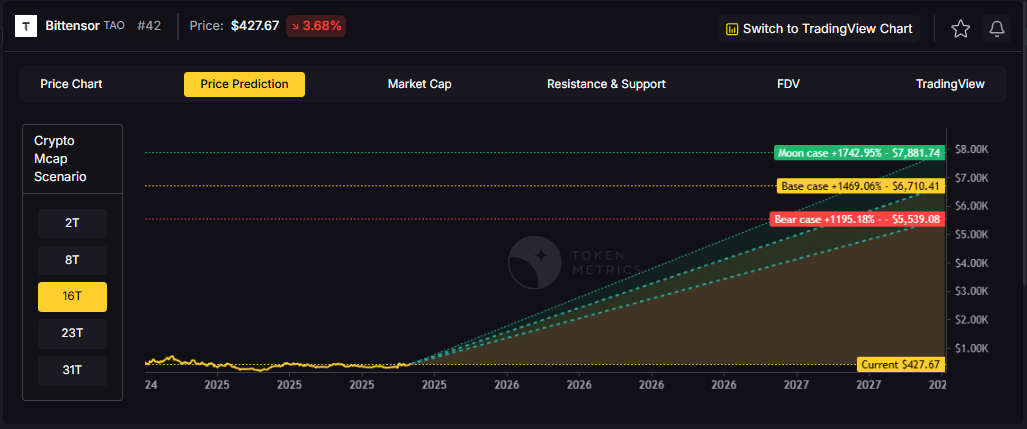

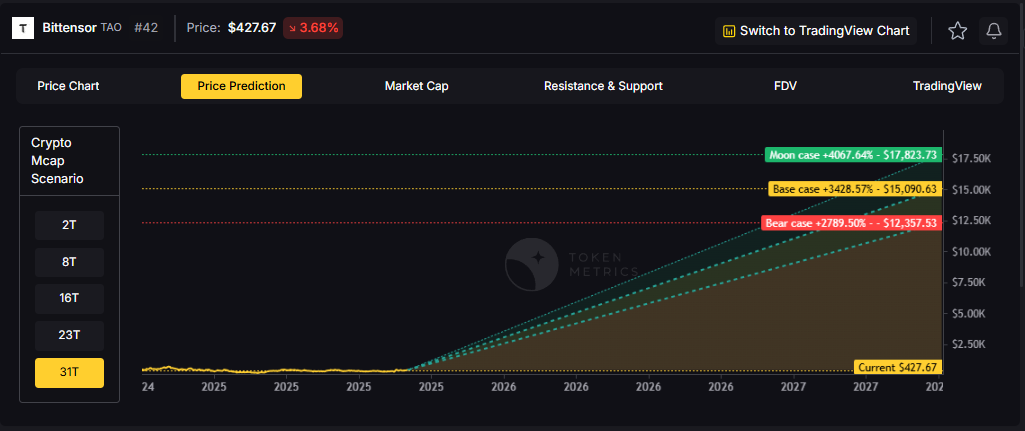

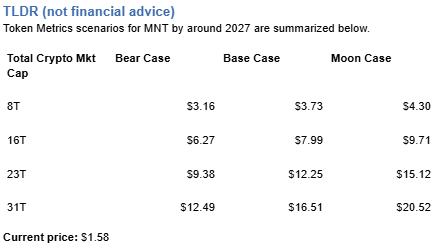

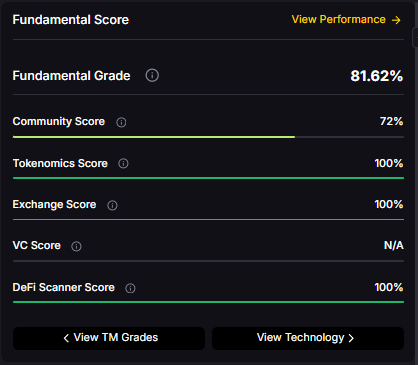

For investors and traders seeking to navigate the complexities of consensus mechanisms, professional analytics platforms like Token Metrics provide invaluable insights. Token Metrics leverages AI to analyze blockchain networks across multiple dimensions, including network security, validator performance, and staking economics.

The platform offers real-time monitoring of staking yields, validator behavior, and network participation rates, helping users optimize their strategies in PoS systems. For PoW networks, Token Metrics tracks mining difficulty, hash rate distribution, and energy consumption patterns.

Additionally, Token Metrics supports ESG-focused investors by providing detailed analysis of energy consumption across consensus mechanisms, aligning investment decisions with sustainability goals.

By continuously monitoring network updates and consensus changes, Token Metrics empowers users to stay informed about critical developments that impact the security and value of their holdings.

Staking Economics and Reward Mechanisms

The economics of PoS networks introduce new dynamics compared to PoW mining. Validators earn staking rewards based on factors such as the total amount staked, network inflation rates, and transaction activity. Typical annual yields range from 3% to 15%, though these vary widely by network and market conditions.
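The relationship between these factors can be made concrete with a back-of-the-envelope yield calculation. The model below is a deliberate simplification and an assumption of this sketch, not any network's actual reward formula: it treats rewards as new issuance plus fees, split pro rata across all staked tokens, with an optional validator commission.

```python
def staking_apr(total_supply: float, inflation_rate: float,
                annual_fees: float, total_staked: float,
                commission: float = 0.0) -> float:
    """Approximate annual staking yield as a fraction (0.10 means 10%)."""
    if total_staked <= 0:
        raise ValueError("total_staked must be positive")
    if not 0 <= commission <= 1:
        raise ValueError("commission must be between 0 and 1")
    issuance = total_supply * inflation_rate       # newly minted tokens per year
    gross_yield = (issuance + annual_fees) / total_staked
    return gross_yield * (1 - commission)          # what a delegator keeps
```

With a hypothetical supply of 1,000,000 tokens, 5% inflation, no fees, and half the supply staked, the gross yield is 10%. The same formula shows why yields fall as more tokens are staked: the reward pool is divided among a larger base.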

Participants must consider risks such as slashing penalties for validator misbehavior, lock-up periods during which staked tokens cannot be withdrawn, and potential volatility in the price of the native cryptocurrency.

The rise of liquid staking platforms has revolutionized staking by allowing users to earn rewards while retaining liquidity, enabling more flexible investment strategies that integrate staking with lending, trading, and decentralized finance.
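One common way to keep staked assets liquid is share-based accounting: depositors receive transferable shares, and staking rewards raise the value of each share. The sketch below illustrates that idea only; the class `LiquidStakingPool` and its methods are hypothetical and omit fees, withdrawal queues, and slashing losses that real platforms must handle.

```python
class LiquidStakingPool:
    """Toy pool that issues shares against staked tokens."""

    def __init__(self) -> None:
        self.total_staked = 0.0
        self.total_shares = 0.0

    def deposit(self, amount: float) -> float:
        """Stake tokens and return the number of shares minted."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.total_shares == 0:
            shares = amount  # first depositor sets a 1:1 rate
        else:
            shares = amount * self.total_shares / self.total_staked
        self.total_staked += amount
        self.total_shares += shares
        return shares

    def add_rewards(self, amount: float) -> None:
        """Staking rewards grow the pool without minting new shares."""
        self.total_staked += amount

    def value_of(self, shares: float) -> float:
        """Current token value of a given number of shares."""
        if self.total_shares == 0:
            return 0.0
        return shares * self.total_staked / self.total_shares
```

A holder who deposits 100 tokens and later sees 10 tokens of rewards accrue can redeem those shares for 110 tokens, and can trade or lend the shares in the meantime.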

Future Developments and Hybrid Models

The future of consensus mechanisms is marked by ongoing innovation. New protocols like Proof of Succinct Work (PoSW) aim to transform computational work into productive tasks while maintaining security. Delegated Proof of Stake (DPoS) has token holders elect a small set of validators to produce blocks on their behalf, which aims to improve governance efficiency and scalability.
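The election step in Delegated Proof of Stake can be sketched as a stake-weighted vote in which the top candidates win a fixed number of seats. This is a generic illustration, not the rule set of any specific DPoS chain; the function name `elect_delegates`, the one-vote-per-holder format, and the alphabetical tie-break are assumptions.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def elect_delegates(votes: Iterable[Tuple[float, str]], seats: int) -> List[str]:
    """Return the `seats` candidates with the most stake voting for them.

    Each vote is a (voter_stake, candidate) pair.
    """
    tally = defaultdict(float)
    for stake, candidate in votes:
        tally[candidate] += stake
    # Highest backing first; ties broken by name so the result is deterministic.
    ranked = sorted(tally.items(), key=lambda item: (-item[1], item[0]))
    return [candidate for candidate, _ in ranked[:seats]]
```

Because only the elected delegates produce blocks, the network coordinates among a small group, which is the source of both the efficiency gains and the centralization concerns associated with this design.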

Artificial intelligence and machine learning are beginning to influence consensus design, with projects experimenting with AI-driven validator selection and dynamic network parameter adjustments to optimize security and performance.

Hybrid consensus models that blend PoW and PoS features seek to balance energy consumption, security, and decentralization, potentially offering the best of both worlds for future blockchain systems.

Regulatory Considerations and Institutional Adoption

Regulators worldwide are increasingly taking consensus mechanisms into account when shaping policies. PoS networks often receive more favorable treatment due to their lower environmental footprint and distinct economic models.

Tax treatment of staking rewards remains complex and varies by jurisdiction, affecting the net returns for investors and influencing adoption rates.

Institutional interest in PoS networks has surged, with major financial players offering staking services and integrating PoS assets into their portfolios. This institutional adoption enhances liquidity, governance, and legitimacy within the cryptocurrency industry.

Risk Management and Due Diligence

Engaging with either PoW or PoS networks requires careful risk management. PoW participants face challenges like hardware obsolescence, fluctuating electricity costs, and regulatory scrutiny of mining operations. PoS participants must manage risks related to slashing, validator reliability, and token lock-up periods. In particular, validators who produce or accept a bad block—an invalid or malicious block—can be penalized through slashing, which helps maintain network integrity.

Analytics platforms such as Token Metrics provide critical tools for monitoring these risks, offering insights into mining pool concentration, validator performance, and network health.

Diversifying investments across different consensus mechanisms can mitigate risks and capture opportunities arising from the evolving blockchain landscape.

Conclusion: Navigating the Consensus Mechanism Landscape

Understanding the difference between proof of work and proof of stake is essential for anyone involved in blockchain technology today. Both consensus mechanisms present unique trade-offs in terms of security, energy usage, economic incentives, and technical capabilities.

While Bitcoin’s PoW system remains the gold standard for security and decentralization, Ethereum’s successful transition to PoS exemplifies the future of energy-efficient blockchain networks. Emerging hybrid models and innovative consensus protocols promise to further refine how decentralized systems operate.

For investors, traders, and blockchain enthusiasts, leveraging professional tools like Token Metrics can provide critical insights into how consensus mechanisms affect network performance, security, and investment potential. Staying informed and adaptable in this dynamic environment is key to thriving in the evolving world of blockchain technology.
