Top Crypto Trading Platforms in 2025

Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend or getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.

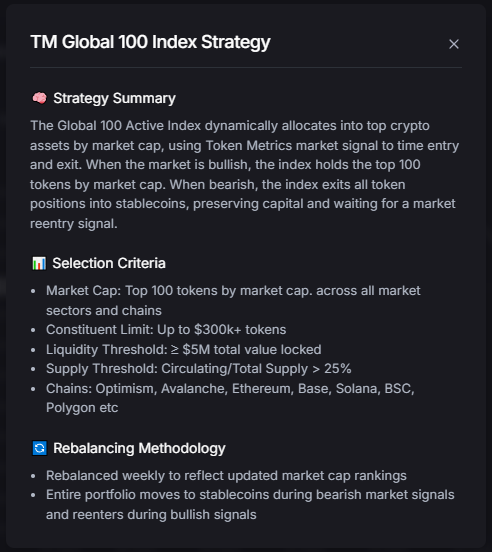

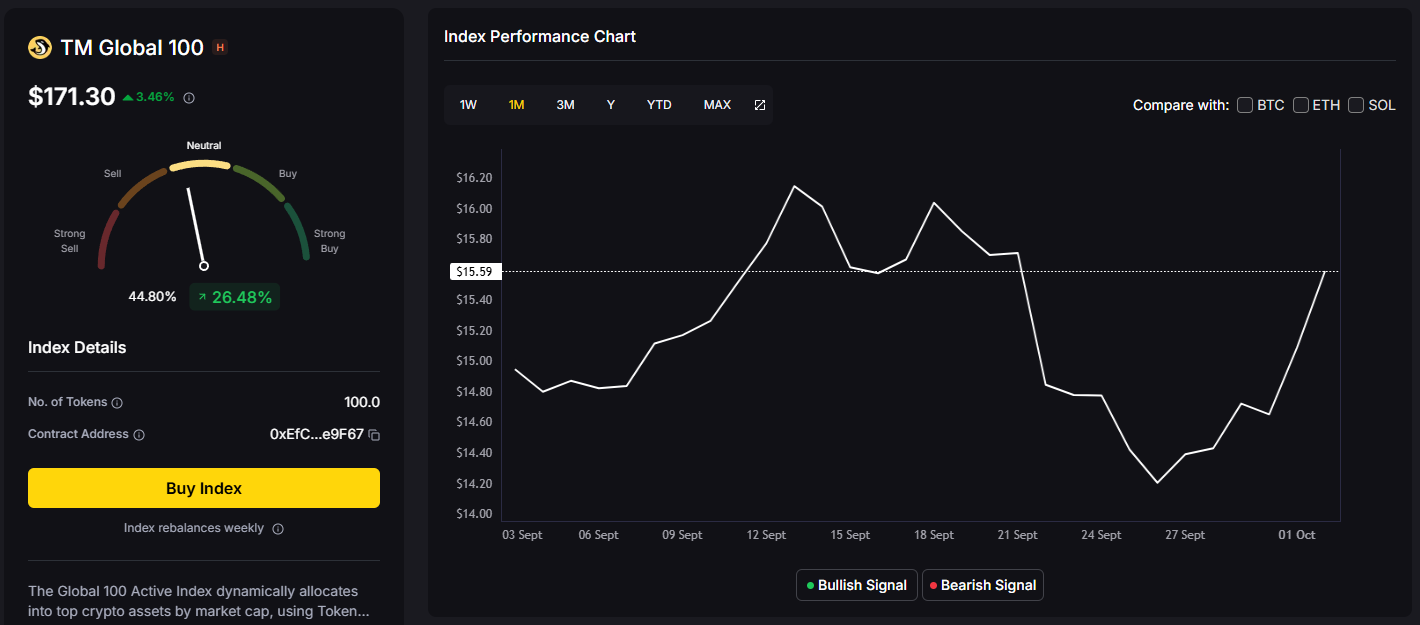

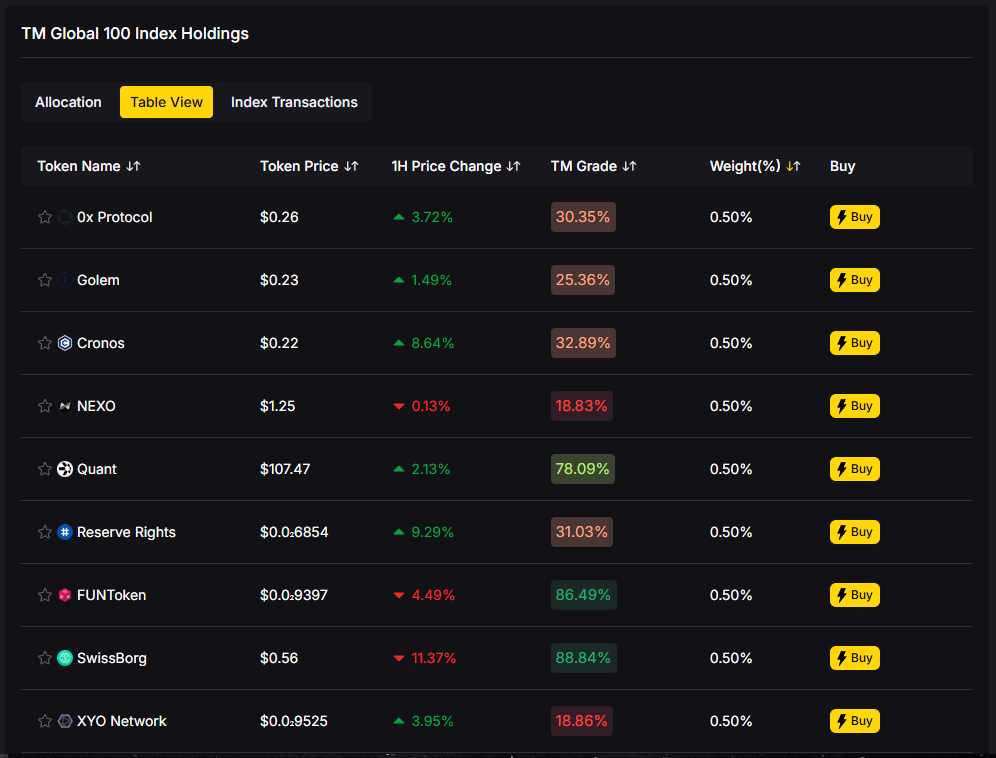

If you want broad crypto exposure without babysitting charts, a top crypto index is the simplest way to participate in the market. TM Global 100 was designed for hands-off portfolios: when conditions are bullish, the index holds the top 100 crypto assets by market cap; when signals turn bearish, it moves to stablecoins and waits. You get weekly rebalancing, transparent holdings and transaction logs, and a 90-second buy flow—so you can spend less time tinkering and more time compounding your life.

→ Join the waitlist to be first to trade TM Global 100.

Volatility is back, and investors are searching for predictable, rules-based ways to capture crypto upside without micromanaging tokens. Search interest for terms like hands-off crypto investing, weekly rebalancing, and regime switching reflects the same intent: “Give me broad exposure with guardrails.”

Definition: A crypto index is a rules-based basket of digital assets that tracks a defined universe (e.g., top-100 by market cap) with a transparent methodology and scheduled rebalancing.

For 2025’s cycle, a top crypto index helps you participate in uptrends while a regime-switching rule can step aside during drawdowns—removing guesswork and FOMO from day-to-day decisions.

See the strategy and rules.

What is a top crypto index?

A rules-based basket that tracks a defined universe—here, the top 100 assets by market cap—with transparent methodology and scheduled rebalancing.

How often does the index rebalance?

Weekly. Regime switches (tokens ↔ stablecoins) can also occur when the market signal changes.

What triggers the move to stablecoins?

A proprietary market-regime signal. In bearish regimes, the index exits token positions to stablecoins and waits for a bullish re-entry signal.
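The regime rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the actual signal is proprietary, so `bullish` is a placeholder input, and the market-cap weighting, the `Asset` type, and the `USDC` default are hypothetical choices that are not taken from the index methodology.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Asset:
    symbol: str
    market_cap: float  # USD


def target_weights(
    assets: List[Asset],
    bullish: bool,
    top_n: int = 100,
    stable_symbol: str = "USDC",  # assumed stablecoin, for illustration only
) -> Dict[str, float]:
    """Return target portfolio weights for the current regime."""
    if not bullish:
        # Bearish regime: exit token positions and hold stablecoins only.
        return {stable_symbol: 1.0}
    # Bullish regime: hold the top-N assets by market cap.
    # Cap-weighting is an assumption; the source does not state the scheme.
    top = sorted(assets, key=lambda a: a.market_cap, reverse=True)[:top_n]
    total = sum(a.market_cap for a in top)
    return {a.symbol: a.market_cap / total for a in top}
```

Running `target_weights` once per week with the latest market caps would correspond to the weekly rebalance, while a flip of `bullish` between runs would correspond to a regime switch.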

Can I fund with USDC or fiat?

At launch, the embedded wallet will surface supported funding/settlement options based on your chain/wallet. USDC payout is supported when selling; additional on-ramps may follow.

Is the wallet custodial?

No. It’s an embedded, self-custodial smart wallet—you control the keys.

How are fees shown?

Before confirming, the buy flow shows estimated gas, platform fee, max slippage, and minimum expected value.
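As a rough sketch of how those four numbers could relate, the Python below computes a preview for a single purchase. The function name, the order in which costs are deducted, and the sample rates are all assumptions made for illustration; the real buy flow may calculate these values differently.

```python
from typing import Dict


def buy_preview(
    amount_usd: float,
    est_gas_usd: float,
    platform_fee_rate: float,
    max_slippage: float,
) -> Dict[str, float]:
    """Estimate the figures a buy confirmation screen might display."""
    platform_fee = amount_usd * platform_fee_rate
    # Assumed order: gas and platform fee come off first, then slippage
    # applies to whatever is left to be swapped.
    net_usd = amount_usd - est_gas_usd - platform_fee
    if net_usd <= 0:
        raise ValueError("costs exceed the order amount")
    return {
        "estimated_gas": est_gas_usd,
        "platform_fee": platform_fee,
        "max_slippage": max_slippage,
        # Worst-case value received if the full slippage tolerance is used.
        "min_expected_value": net_usd * (1.0 - max_slippage),
    }
```

For example, `buy_preview(1000, 2, 0.01, 0.005)` models a 1,000 USD order with 2 USD of gas, a hypothetical 1% platform fee, and a 0.5% slippage tolerance.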

How do I join the waitlist?

Visit the Token Metrics Indices hub or the TM Global 100 strategy page and tap Join Waitlist.

Crypto is volatile and can lose value. Past performance is not indicative of future results. This article is for research/education, not financial advice.

If you want hands-off, rules-based exposure to crypto’s upside—with a stablecoin backstop in bears—TM Global 100 is built for you. See the strategy, join the waitlist, and be ready to allocate on launch.

Choosing the right cryptocurrency exchange is one of the most critical decisions for anyone entering the digital asset market. With over 254 exchanges tracked globally and a staggering $1.52 trillion in 24-hour trading volume, the landscape offers tremendous opportunities alongside significant risks. The wrong platform choice can expose you to security breaches, regulatory issues, or inadequate customer support that could cost you your investment.

In 2025, the cryptocurrency exchange industry has matured significantly, with clearer regulatory frameworks, enhanced security standards, and more sophisticated trading tools. However, recent data shows that nearly $1.93 billion was stolen in crypto-related crimes in the first half of 2025 alone, surpassing the total for 2024 and making it crucial to select exchanges with proven track records and robust security measures.

This comprehensive guide examines the most trusted cryptocurrency exchanges in 2025, exploring what makes them reliable, the key factors to consider when choosing a platform, and how to maximize your trading security and success.

Cryptocurrency exchanges are platforms that allow traders to buy, sell, and trade cryptocurrencies, derivatives, and other crypto-related assets. These digital marketplaces have evolved dramatically since Bitcoin's launch in 2009, transforming from rudimentary peer-to-peer platforms into sophisticated financial institutions offering comprehensive services.

Centralized Exchanges (CEX): Platforms like Binance, Coinbase, and Kraken hold your funds and execute trades on your behalf, acting as intermediaries similar to traditional banks. These exchanges offer high liquidity, fast transaction speeds, user-friendly interfaces, and customer support but require trusting the platform with custody of your assets.

Decentralized Exchanges (DEX): Platforms enabling direct peer-to-peer trading without intermediaries, offering greater privacy and self-custody but typically with lower liquidity and more complex user experiences.

Hybrid Exchanges: In 2025, some platforms seek to offer the best of both worlds, providing the speed of centralized exchanges with the self-custodial nature of decentralized platforms. Notable examples include dYdX v4, Coinbase Wallet with Base integration, and ZK-powered DEXs.

Brokers: Platforms like eToro and Robinhood that allow crypto purchases at set prices without orderbook access, prioritizing simplicity over advanced trading features.

Selecting a trustworthy exchange requires evaluating multiple dimensions beyond just trading fees and available cryptocurrencies. The following factors distinguish truly reliable platforms from potentially risky alternatives.

Security remains the paramount concern, with exchanges now required to implement rigorous know-your-customer and anti-money laundering protocols in addition to meeting new licensing and reporting requirements. The most trusted exchanges maintain industry-leading security protocols including two-factor authentication, cold storage for the majority of assets, regular security audits, and comprehensive insurance funds.

Regulatory compliance has become increasingly important as governments worldwide develop frameworks for digital assets. Licensed exchanges that comply with regulations are more trustworthy and less likely to face sudden shutdowns or regulatory actions. In 2025, anti-money laundering and countering terrorism financing requirements continue as core elements of the regulatory framework for cryptocurrency businesses.

Markets in Crypto-Assets Regulation (MiCA): The European Union's comprehensive framework entered full application in late 2024, establishing uniform market rules for crypto-assets across member states. Exchanges operating under MiCA provide additional assurance of regulatory compliance and consumer protection.

U.S. Regulatory Evolution: Early 2025 marked a turning point in U.S. crypto regulation, with the SEC's Crypto Task Force working to provide clarity on securities laws application to crypto assets. The CLARITY Act, advancing through Congress, aims to distinguish digital commodities from securities, creating clearer regulatory boundaries.

High liquidity ensures easier entry and exit points, enhancing investor confidence and enabling traders to execute large orders without significant price impact. The best exchanges support large numbers of coins and trading pairs, offering spot trading, margin trading, futures, options, staking, and various earning programs.

According to current market data, the three largest cryptocurrency exchanges by trading volume are Binance, Bybit, and MEXC, with total tracked crypto exchange reserves currently standing at $327 billion. These platforms dominate due to their deep liquidity, extensive asset support, and comprehensive feature sets.

Trading fees can significantly erode profits over time, making fee comparison essential. Most exchanges employ maker-taker fee models, where makers who add liquidity to orderbooks pay lower fees than takers who remove liquidity. Fees typically range from 0.02% to 0.6% per trade, with volume-based discounts rewarding high-volume traders.

Beyond trading fees, consider deposit and withdrawal charges, staking fees, and any hidden costs associated with different transaction types. Some exchanges offer zero-fee trading pairs or native token discounts to reduce costs further.
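To see how quickly fees add up, the following Python sketch compares yearly costs at the two ends of the fee range quoted above. The function names, the position size, and the number of round trips are hypothetical, and the model ignores deposit, withdrawal, and staking charges.

```python
def trade_fee(notional_usd: float, fee_rate: float) -> float:
    """Fee charged on a single fill, in USD."""
    return notional_usd * fee_rate


def annual_fee_drag(
    notional_usd: float, fee_rate: float, round_trips_per_year: int
) -> float:
    """Yearly fees when every round trip pays the fee on entry and on exit."""
    return 2 * trade_fee(notional_usd, fee_rate) * round_trips_per_year


if __name__ == "__main__":
    # Illustrative inputs: 10,000 USD per trade, 50 round trips a year.
    low_end = annual_fee_drag(10_000, 0.0002, 50)   # 0.02% per fill
    high_end = annual_fee_drag(10_000, 0.006, 50)   # 0.6% per fill
    print(f"0.02% fee: {low_end:.2f} USD per year")
    print(f"0.6% fee:  {high_end:.2f} USD per year")
```

With these inputs the gap between the two fee levels is thirtyfold, which is why maker orders and volume tiers matter most to active traders.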

Responsive customer support proves invaluable when issues arise. The best exchanges offer 24/7 multilingual support through multiple channels including live chat, email, and comprehensive help documentation. User experience encompasses both desktop and mobile platforms, with over 72% of users now trading via mobile apps according to recent data.

Educational resources, including learning centers, tutorials, and market analysis, help users make informed decisions and maximize platform features. Exchanges prioritizing education demonstrate commitment to user success beyond just facilitating transactions.

Based on security track records, regulatory compliance, user reviews, and feature sets, these exchanges have earned recognition as the most trustworthy platforms in the current market.

Kraken stands out as one of the few major platforms that has never experienced a hack resulting in loss of customer funds. Founded in 2011, Kraken has gained popularity thanks to its transparent team and strong focus on security, with co-founder and former CEO Jesse Powell often echoing the principle "Not your keys, not your crypto" while actively encouraging self-custody.

The platform offers more than 350 cryptocurrencies to buy, sell, and trade, making it one of the top exchanges for variety. Kraken maintains licenses across the United States, Canada, Australia, the United Kingdom, the European Union, and several other regions worldwide. This focus on compliance, security, and transparency has earned trust from both clients and regulators.

Kraken provides two primary interfaces: a standard version for beginners and Kraken Pro—a customizable platform for advanced traders featuring enhanced technical analysis tools, powerful margin trading, and access to sophisticated order types. All features are supported by responsive 24/7 multilingual support and educational resources.

Key Strengths:

Reputable independent industry reviewers like Kaiko and CoinGecko consistently rank Kraken among the best crypto exchanges worldwide.

Coinbase is one of the most widely known crypto exchanges in the United States and globally, often serving as the starting point for those just entering the digital assets space. Founded in 2012 by Brian Armstrong and Fred Ehrsam, Coinbase now serves customers in more than 190 countries and has approximately 36 million users as of September 2025.

The platform supports around 250 cryptocurrencies, with asset availability depending on region and account type. Coinbase offers both a standard version for beginners and Coinbase Advanced for more sophisticated trading tools and reduced fees. The exchange excels in its commitment to security, using advanced features including two-factor authentication and cold storage for the majority of assets.

Coinbase is one of the few exchanges that is publicly traded, enhancing its credibility and transparency. Users can feel confident knowing Coinbase operates under stringent regulatory guidelines, adding extra layers of trust. The platform maintains strong regulatory presence in the U.S. and is widely available in most U.S. states.

Key Strengths:

Coinbase and Kraken are considered the most secure exchanges due to their strong regulatory compliance and robust security measures.

Binance, founded in 2017, quickly reached the number one spot by trade volumes, registering more than $36 billion in trades by early 2021 and maintaining its position as the world's largest exchange. The platform serves approximately 250 million users as of January 2025, offering one of the most comprehensive cryptocurrency ecosystems in the industry.

Binance supports hundreds of cryptocurrencies and provides extensive trading options including spot, margin, futures, staking, launchpool, and various earning programs. The exchange has one of the lowest trading fees among major platforms, ranging around 0.1%, with further reductions available through native BNB token usage.

The platform maintains a clean interface, with over 72% of users trading via the mobile app. Binance allocates 10% of its trading fees to its Secure Asset Fund for Users (SAFU), providing an additional safety net against potential security incidents. The exchange offers both a standard platform and Binance Pro for advanced traders.

Key Strengths:

Note that regulatory status varies by region, with Binance.US operating separately under U.S. regulations with different features and fee structures.

Founded in 2014 by Cameron and Tyler Winklevoss, Gemini has solidified its position in the cryptocurrency exchange sphere with over $175 million in trading volume. The platform is recognized for taking additional security measures and providing high-end service suitable for both beginners and advanced users.

Gemini maintains comprehensive insurance for digital assets stored on the platform and operates as a New York trust company, subjecting it to banking compliance standards. The exchange is fully available across all U.S. states with no geographic restrictions, maintaining strong regulatory relationships nationwide.

The platform offers both simple interfaces for beginners and ActiveTrader for more sophisticated users. Gemini provides various earning options including staking and interest-bearing accounts. The exchange has launched innovative products including the Gemini Dollar stablecoin, demonstrating ongoing commitment to crypto ecosystem development.

Key Strengths:

OKX has emerged as a major global exchange offering extensive trading options across spot, futures, and derivatives markets. The platform serves users in over 180 countries and supports hundreds of digital assets with deep liquidity across major trading pairs.

The exchange provides advanced trading tools, comprehensive charting, and sophisticated order types suitable for professional traders. OKX maintains competitive fee structures and offers various earning opportunities through staking, savings, and liquidity provision programs.

Key Strengths:

Bitstamp, founded in 2011, stands as one of the oldest continuously operating cryptocurrency exchanges. The platform was among the first exchanges to receive a BitLicense in New York, demonstrating an early commitment to regulatory compliance.

The exchange adopted a tiered fee structure based on 30-day trading volumes, with fees ranging from 0% for high-volume traders to 0.5% for smaller transactions. Bitstamp maintains strong security practices and banking relationships, particularly in Europe where it serves as a primary fiat on-ramp for many investors.

Key Strengths:

While choosing a trusted exchange provides the foundation for secure crypto trading, maximizing returns requires sophisticated analytics and market intelligence. This is where Token Metrics, a leading AI-powered crypto trading and analytics platform, becomes invaluable for serious investors.

Token Metrics provides personalized crypto research and predictions powered by AI, helping users identify the best trading opportunities across all major exchanges. The platform monitors thousands of tokens continuously, providing real-time insights that enable informed decision-making regardless of which exchange you use.

Token Metrics assigns each token both a Trader Grade for short-term potential and an Investor Grade for long-term viability. These dual ratings help traders determine not just what to buy, but when to enter and exit positions across different exchanges for optimal returns.

The platform offers AI-generated buy and sell signals that help traders time their entries and exits across multiple exchanges. Token Metrics analyzes market conditions, technical indicators, sentiment data, and on-chain metrics to provide actionable trading recommendations.

Customizable alerts via email, SMS, or messaging apps ensure you never miss important opportunities or risk signals, regardless of which exchange hosts your assets. This real-time monitoring proves particularly valuable when managing portfolios across multiple platforms.

Token Metrics leverages machine learning and data-driven models to deliver powerful insights across the digital asset ecosystem. The platform's AI-managed indices dynamically rebalance based on market conditions, providing diversified exposure optimized for current trends.

For traders using multiple exchanges, Token Metrics provides unified portfolio tracking and performance analysis, enabling holistic views of holdings regardless of where assets are stored. This comprehensive approach ensures optimal allocation across platforms based on liquidity, fees, and available trading pairs.

Token Metrics helps users identify which exchanges offer the best liquidity, lowest fees, and optimal trading conditions for specific assets. The platform's analytics reveal where institutional money flows, helping traders follow smart money to exchanges with the deepest liquidity for particular tokens.

By analyzing order book depth, trading volumes, and price spreads across exchanges, Token Metrics identifies arbitrage opportunities and optimal execution venues for large trades. This intelligence enables traders to minimize slippage and maximize returns.
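The link between order book depth and slippage can be made concrete with a small Python sketch. It walks the ask side of a book to estimate the average fill price of a market buy and then picks the cheapest venue. The data layout, function names, and venue labels are assumptions for illustration and do not describe how Token Metrics performs this analysis.

```python
from typing import Dict, List, Tuple

Level = Tuple[float, float]  # (price, size), asks sorted by ascending price


def average_fill_price(asks: List[Level], quantity: float) -> float:
    """Average price paid when a market buy consumes the ask levels in order."""
    remaining = quantity
    cost = 0.0
    for price, size in asks:
        take = min(remaining, size)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("order book is too thin for this quantity")
    return cost / quantity


def slippage_vs_best(asks: List[Level], quantity: float) -> float:
    """Fractional premium paid over the best ask because of limited depth."""
    return average_fill_price(asks, quantity) / asks[0][0] - 1.0


def cheapest_venue(books: Dict[str, List[Level]], quantity: float) -> str:
    """Venue with the lowest average fill price (all books need enough depth)."""
    return min(books, key=lambda name: average_fill_price(books[name], quantity))
```

A venue with a slightly worse top-of-book price can still win for a large order if its book is deeper, which is the trade-off this kind of comparison is meant to surface.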

Beyond trading analytics, Token Metrics evaluates the security posture of projects listed on various exchanges, helping users avoid scams and high-risk tokens. The platform's Investor Grade incorporates security audit status, code quality, and team credibility—factors critical for distinguishing legitimate projects from potential frauds.

Token Metrics provides alerts about security incidents, exchange issues, or regulatory actions that might affect asset accessibility or value. This proactive risk monitoring protects users from unexpected losses related to platform failures or project compromises.

Token Metrics launched its integrated trading feature in 2025, transforming the platform into an end-to-end solution where users can analyze opportunities, compare exchange options, and execute trades seamlessly. This integration enables traders to act on insights immediately without navigating between multiple platforms.

The seamless connection between analytics and execution ensures security-conscious investors can capitalize on opportunities while maintaining rigorous risk management across all their exchange accounts.

Even when using trusted exchanges, implementing proper security practices remains essential for protecting your assets.

Two-factor authentication (2FA) provides critical additional security beyond passwords. Use authenticator apps like Google Authenticator or Authy rather than SMS-based 2FA, which remains vulnerable to SIM swap attacks. Enable 2FA for all account actions including logins, withdrawals, and API access.
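Authenticator apps generally implement the time-based one-time password scheme from RFC 6238, which derives a short code from a shared secret and the current time rather than sending anything over the phone network. The sketch below uses only the Python standard library and is meant to show the mechanism; it is not production authentication code, and the secret in the usage note is the public RFC test value.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(
    secret: bytes,
    unix_time: Optional[float] = None,
    step: int = 30,
    digits: int = 6,
) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    if unix_time is None:
        unix_time = time.time()
    return hotp(secret, int(unix_time // step), digits)
```

With the RFC test secret `b"12345678901234567890"`, `totp(secret, unix_time=59, digits=8)` returns `94287082`, matching the published test vector. Because the code depends only on the secret stored on the device and the clock, an attacker who hijacks a phone number through a SIM swap gains nothing.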

While trusted exchanges maintain strong security, self-custody eliminates counterparty risk entirely. Hardware wallets like Ledger or Trezor provide optimal security for long-term holdings, keeping private keys completely offline and safe from exchange hacks.

Follow the principle "not your keys, not your crypto" for significant amounts. Keep only actively traded assets on exchanges, transferring long-term holdings to personal cold storage.

Many exchanges offer withdrawal address whitelisting, restricting withdrawals to pre-approved addresses. Enable this feature and require extended waiting periods for adding new addresses, preventing attackers from quickly draining accounts even if they gain access.
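The logic behind whitelisting with a waiting period is simple enough to sketch. The class below is a hypothetical model, not any exchange's API: an address becomes eligible for withdrawals only after it has sat on the list for a cooldown period, assumed here to be 24 hours.

```python
from datetime import datetime, timedelta
from typing import Dict


class WithdrawalWhitelist:
    """Pre-approved withdrawal addresses with a cooldown on new entries."""

    def __init__(self, cooldown: timedelta = timedelta(hours=24)) -> None:
        self._cooldown = cooldown
        self._added_at: Dict[str, datetime] = {}

    def add_address(self, address: str, now: datetime) -> None:
        # Re-adding an existing address does not reset or shorten its timer.
        self._added_at.setdefault(address, now)

    def can_withdraw(self, address: str, now: datetime) -> bool:
        added = self._added_at.get(address)
        # Unknown addresses are always rejected; new ones must wait.
        return added is not None and now - added >= self._cooldown
```

Even if an attacker logs in and adds their own address, the cooldown gives the account owner time to notice the change notification and lock the account before any funds can leave.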

Regularly review login history, active sessions, and transaction records. Enable email and SMS notifications for all account activity including logins, trades, and withdrawals. Immediate awareness of unauthorized activity enables faster response to security incidents.

Never share account credentials, avoid accessing exchanges on public Wi-Fi networks, keep software and operating systems updated, and use unique strong passwords for each exchange account. Consider using a dedicated email address for crypto activities separate from other online accounts.

Crypto regulations and exchange availability vary significantly by region, requiring consideration of local factors when selecting platforms.

Coinbase has the strongest regulatory presence and widest state availability. Kraken offers comprehensive services with strong compliance. Binance.US operates separately with more limited features than the international platform. Regulatory clarity improved in 2025 as the CLARITY Act advanced through Congress and the SEC issued enhanced guidance.

The MiCA regulation provides a comprehensive framework that ensures consumer protection and regulatory clarity. Kraken, Bitstamp, and Binance all maintain a strong European presence with full MiCA compliance. SEPA integration provides efficient fiat on-ramps for EU users.

FCA-registered exchanges including Kraken, eToro, and Bitstamp offer strong security measures and regulatory compliance. Brexit created a distinct regulatory regime that requires specific licensing for UK operations.

Bybit and OKX provide extensive services across the region. Regulatory approaches vary dramatically by country, from crypto-friendly jurisdictions like Singapore to more restrictive environments requiring careful platform selection.

The cryptocurrency exchange landscape continues evolving rapidly with several key trends shaping the industry's future.

Major financial institutions are increasingly offering crypto services, with traditional banks now providing custody following the SEC's replacement of SAB 121 with SAB 122 in early 2025. This institutional embrace drives higher security standards and regulatory clarity across the industry.

Centralized exchanges are integrating decentralized finance protocols, offering users access to yield farming, liquidity provision, and lending directly through exchange interfaces. This convergence provides best-of-both-worlds functionality combining CEX convenience with DeFi opportunities.

Exchanges face tighter compliance requirements including enhanced KYC/AML protocols, regular audits, and transparent reserve reporting. These measures increase user protection while creating barriers to entry for less-established platforms.

AI-powered trading assistance, sophisticated algorithmic trading tools, and professional-grade analytics are becoming standard offerings. Platforms like Token Metrics demonstrate how artificial intelligence revolutionizes crypto trading by providing insights previously available only to institutional investors.

Selecting trusted crypto exchanges requires balancing multiple factors including security track records, regulatory compliance, available features, fee structures, and regional accessibility. In 2025, exchanges like Kraken, Coinbase, Binance, Gemini, and Bitstamp have earned recognition as the most reliable platforms through consistent performance and strong security practices.

The most successful crypto traders don't rely on exchanges alone—they leverage sophisticated analytics platforms like Token Metrics to maximize returns across all their exchange accounts. By combining trusted exchange infrastructure with AI-powered market intelligence, traders gain significant advantages in identifying opportunities, managing risks, and optimizing portfolio performance.

Remember that no exchange is completely risk-free. Implement proper security practices including two-factor authentication, cold storage for significant holdings, and continuous monitoring of account activity. Diversify holdings across multiple trusted platforms to reduce concentration risk.

As the crypto industry matures, exchanges with strong regulatory compliance, proven security records, and commitment to transparency will continue dominating the market. Choose platforms aligned with your specific needs—whether prioritizing low fees, extensive coin selection, advanced trading tools, or regulatory certainty—and always conduct thorough research before committing significant capital.

With the right combination of trusted exchanges, robust security practices, and sophisticated analytics from platforms like Token Metrics, you can navigate the crypto market with confidence, maximizing opportunities while minimizing risks in this exciting and rapidly evolving financial landscape.

The cryptocurrency market has evolved from a niche digital experiment into a multi-trillion-dollar asset class. With thousands of tokens and coins available across hundreds of exchanges, the question isn't whether you should research before buying—it's how to conduct that research effectively. Smart investors know that thorough due diligence is the difference between identifying the next promising project and falling victim to a costly mistake.

Before diving into specific research methods, successful crypto investors start by understanding the fundamental difference between various digital assets. Bitcoin operates as digital gold and a store of value, while Ethereum functions as a programmable blockchain platform. Other tokens serve specific purposes within their ecosystems—governance rights, utility functions, or revenue-sharing mechanisms.

The first step in any research process involves reading the project's whitepaper. This technical document outlines the problem the project aims to solve, its proposed solution, tokenomics, and roadmap. While whitepapers can be dense, they reveal whether a project has substance or merely hype. Pay attention to whether the team clearly articulates a real-world problem and presents a viable solution.

A cryptocurrency project is only as strong as the team behind it. Investors scrutinize founder backgrounds, checking their LinkedIn profiles, previous projects, and industry reputation. Have they built successful companies before? Do they have relevant technical expertise? Anonymous teams aren't automatically red flags, but they require extra scrutiny and compelling reasons for their anonymity.

Development activity serves as a crucial health indicator for any blockchain project. GitHub repositories reveal whether developers are actively working on the project or if it's effectively abandoned. Regular commits, open issues being addressed, and community contributions all signal a vibrant, evolving project. Conversely, repositories with no activity for months suggest a project that may be dying or was never serious to begin with.

Understanding a token's economic model is essential for predicting its long-term value potential. Investors examine total supply, circulating supply, and emission schedules. Is the token inflationary or deflationary? How many tokens do the team and early investors hold, and when do those tokens unlock? Large unlock events can trigger significant price drops as insiders sell.
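To gauge how large an unlock event is, it helps to put numbers on it. The Python sketch below assumes a common but by no means universal structure: nothing unlocks before a cliff, and the allocation then vests linearly over the full vesting period. Real schedules vary, so the function names and the sample figures in the usage note are purely illustrative.

```python
def unlocked_tokens(
    allocation: float,
    months_elapsed: int,
    cliff_months: int,
    vesting_months: int,
) -> float:
    """Tokens unlocked so far under linear vesting with a cliff."""
    if months_elapsed < cliff_months:
        return 0.0  # nothing is released before the cliff
    vested_fraction = min(months_elapsed, vesting_months) / vesting_months
    return allocation * vested_fraction


def unlock_pressure(newly_unlocked: float, circulating_supply: float) -> float:
    """Newly unlocked tokens as a fraction of the current circulating supply."""
    return newly_unlocked / circulating_supply
```

For a hypothetical 200 million token insider allocation with a 12-month cliff and 48-month vesting, `unlocked_tokens(200e6, 12, 12, 48)` releases 50 million tokens at the cliff. Against a circulating supply of 250 million, `unlock_pressure` puts that at 20% of the float arriving in a single event.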

The token's utility within its ecosystem matters tremendously. Does holding the token provide governance rights, staking rewards, or access to platform features? Tokens without clear utility often struggle to maintain value over time. Smart researchers also investigate how value accrues to token holders—whether through buybacks, burning mechanisms, or revenue sharing.

Price action tells only part of the story, but market metrics provide valuable context. Trading volume indicates liquidity—can you buy or sell significant amounts without drastically moving the price? Market capitalization helps determine a token's relative size and potential growth runway. A small-cap project has more room to grow but carries higher risk.
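These market metrics come down to simple arithmetic, shown in the Python sketch below. Fully diluted valuation and the volume-to-market-cap ratio are added here as commonly used companions to market capitalization; the function names and sample figures are assumptions for illustration.

```python
def market_cap(price: float, circulating_supply: float) -> float:
    """Market capitalization: price times tokens currently circulating."""
    return price * circulating_supply


def fully_diluted_valuation(price: float, total_supply: float) -> float:
    """Valuation if every token that will ever exist traded at today's price."""
    return price * total_supply


def turnover(volume_24h: float, cap: float) -> float:
    """24-hour volume relative to market cap, a rough liquidity gauge."""
    return volume_24h / cap


if __name__ == "__main__":
    # Hypothetical token: 2 USD price, 100M circulating, 400M total supply.
    cap = market_cap(2.0, 100e6)
    fdv = fully_diluted_valuation(2.0, 400e6)
    print(f"market cap share of FDV: {cap / fdv:.0%}")
    print(f"daily turnover: {turnover(10e6, cap):.1%}")
```

A market cap that is a small share of the fully diluted valuation signals that most of the supply has yet to enter circulation, which ties back to the unlock schedule.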

On-chain metrics offer deeper insights into token health. Active addresses, transaction volume, and network usage reveal actual adoption versus speculation. High trading volume on exchanges with minimal on-chain activity might indicate wash trading or manipulation. Token distribution matters too—if a small number of wallets hold most of the supply, the token faces centralization risks and potential price manipulation.
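One simple way to quantify distribution risk is the share of supply held by the largest wallets. The Python sketch below assumes the holder balances have already been pulled from a block explorer or indexer, and the function name is hypothetical. In practice, exchange and contract addresses would need to be filtered out first, since they pool many users' funds.

```python
from typing import Iterable


def top_holder_share(balances: Iterable[float], n: int = 10) -> float:
    """Fraction of total supply held by the n largest wallets."""
    ranked = sorted(balances, reverse=True)
    total = sum(ranked)
    if total <= 0:
        raise ValueError("balances must sum to a positive amount")
    return sum(ranked[:n]) / total
```

For instance, `top_holder_share([500, 300, 100, 50, 50], n=2)` reports that two wallets control 80% of this toy supply, a level at which a single holder could move the price.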

Professional crypto investors increasingly rely on sophisticated analytics platforms that aggregate multiple data sources and provide actionable insights. Token Metrics has emerged as a leading crypto trading and analytics platform, offering comprehensive research tools that save investors countless hours of manual analysis.

Token Metrics combines artificial intelligence with expert analysis to provide ratings and predictions across thousands of cryptocurrencies. The platform evaluates projects across multiple dimensions—technology, team, market metrics, and risk factors—delivering clear scores that help investors quickly identify promising opportunities. Rather than manually tracking dozens of metrics across multiple websites, users access consolidated dashboards that present the information that matters most.

The platform's AI-driven approach analyzes historical patterns and current trends to generate price predictions and trading signals. For investors overwhelmed by the complexity of crypto research, Token Metrics serves as an invaluable decision-support system, translating raw data into understandable recommendations. The platform covers everything from established cryptocurrencies to emerging DeFi tokens and NFT projects, making it a one-stop solution for comprehensive market research.

Cryptocurrency projects thrive or die based on their communities. Active, engaged communities signal genuine interest and adoption, while astroturfed communities relying on bots and paid shillers raise red flags. Investors monitor project Discord servers, Telegram channels, and Twitter activity to gauge community health.

Social sentiment analysis has become increasingly sophisticated, with tools tracking mentions, sentiment polarity, and influencer engagement across platforms. Sudden spikes in social volume might indicate organic excitement about a partnership or product launch—or orchestrated pump-and-dump schemes. Experienced researchers distinguish between authentic enthusiasm and manufactured hype.
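A basic version of spike detection compares the latest mention count with its recent history. The Python sketch below uses a z-score over a trailing window; the function name and the threshold of 3 are assumptions rather than a description of any particular sentiment tool.

```python
from statistics import mean, pstdev
from typing import Sequence


def spike_zscore(history: Sequence[float], latest: float) -> float:
    """Standard deviations between the latest value and the trailing mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Flat history: any change at all is infinitely unusual.
        return 0.0 if latest == mu else float("inf")
    return (latest - mu) / sigma


def is_spike(
    history: Sequence[float], latest: float, threshold: float = 3.0
) -> bool:
    """Flag the latest value when it sits far above the trailing mean."""
    return spike_zscore(history, latest) > threshold
```

A flag from `is_spike` only says that volume is unusual. Telling organic excitement from a coordinated pump still requires checking what triggered the mentions and who is posting them.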

The regulatory landscape significantly impacts cryptocurrency projects. Researchers investigate whether projects have faced regulatory scrutiny, registered as securities, or implemented compliance measures. Geographic restrictions, potential legal challenges, and regulatory clarity all affect long-term viability.

Security audits from reputable firms like CertiK, Trail of Bits, or ConsenSys Diligence provide crucial assurance about smart contract safety. Unaudited contracts carry significant risk of exploits and bugs. Researchers also examine a project's history—has it been hacked before? How did the team respond to security incidents?

Experienced investors develop instincts for spotting problematic projects. Guaranteed returns and promises of unrealistic gains are immediate red flags. Legitimate projects acknowledge risk and market volatility rather than making impossible promises. Copied whitepapers, stolen team photos, or vague technical descriptions suggest scams.

Pressure tactics like "limited time offers" or artificial scarcity designed to force quick decisions without research are classic manipulation techniques. Projects with more focus on marketing than product development, especially those heavily promoted by influencers being paid to shill, warrant extreme skepticism.

Cryptocurrency research isn't a one-time activity but an ongoing process. Markets evolve rapidly, projects pivot, teams change, and new competitors emerge. Successful investors establish systems for monitoring their holdings and staying updated on developments. Setting up Google Alerts, following project social channels, and regularly reviewing analytics help maintain awareness of changing conditions.

Whether you're evaluating established cryptocurrencies or exploring emerging altcoins, thorough research remains your best defense against losses and your greatest tool for identifying opportunities. The time invested in understanding what you're buying pays dividends through better decision-making and improved portfolio performance in this dynamic, high-stakes market.