Top Crypto Trading Platforms in 2025

Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend and getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers powerful AI-driven insights through 32+ high-performance endpoints, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.

When you need to move size without moving the market, you use over-the-counter (OTC) trading. The best OTC desks for large block trades aggregate deep, private liquidity, offer quote certainty (via RFQ), and settle securely—often with high-touch coverage. In one sentence: an OTC crypto desk privately matches large buyers and sellers off-exchange to reduce slippage and information leakage. This guide is for funds, treasuries, family offices, market makers, and whales who want discretion, fast settlement, and reliable pricing in 2025. We compared global OTC providers on liquidity depth, security posture, coverage, costs, UX, and support, and selected 10 standouts. Expect clear use-case picks, region notes, and a simple checklist so you can choose faster.

Data sources: official product/docs and institutional pages; security/transparency pages; and widely cited market datasets only for cross-checks (we do not link third-party sources in body). Last updated September 2025.


This article is for research/education, not financial advice.

What is an OTC crypto desk?

An OTC desk privately matches large buyers and sellers off public order books to minimize slippage and information leakage. Trades are negotiated via RFQ and settled bilaterally or through an exchange affiliate.
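To make the slippage point concrete, here is a minimal sketch that walks a hypothetical order book for a large market buy and compares the average fill with a single RFQ quote for the whole block. The book levels and the quote are made-up numbers for illustration, not market data.

```python
from typing import List, Tuple

# Hypothetical ask side of an order book: (price in USD, size in BTC), best price first.
ASKS: List[Tuple[float, float]] = [
    (60_000.0, 2.0),
    (60_050.0, 3.0),
    (60_120.0, 5.0),
    (60_250.0, 10.0),
]

def market_buy_avg_price(asks: List[Tuple[float, float]], qty: float) -> float:
    """Average fill price when a market buy of `qty` sweeps the book level by level."""
    remaining = qty
    cost = 0.0
    for price, size in asks:
        take = min(size, remaining)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("order size exceeds visible liquidity")
    return cost / qty

def slippage_bps(avg_price: float, reference: float) -> float:
    """Slippage versus a reference price, in basis points (1 bp = 0.01%)."""
    return (avg_price - reference) / reference * 10_000

if __name__ == "__main__":
    qty = 15.0
    best_ask = ASKS[0][0]
    on_book = market_buy_avg_price(ASKS, qty)
    rfq_quote = 60_060.0  # hypothetical all-in price quoted by an OTC desk for the full block
    print(f"on-book avg: {on_book:.2f} ({slippage_bps(on_book, best_ask):.1f} bps vs best ask)")
    print(f"RFQ quote:   {rfq_quote:.2f} ({slippage_bps(rfq_quote, best_ask):.1f} bps vs best ask)")
```

The on-book figure understates real impact, because it ignores how other participants react once a large order becomes visible, which is the information-leakage risk an RFQ is meant to avoid.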

How big is a “block trade” in crypto?

It varies by desk and asset. Many desks treat six-figure USD notional as a starting point; some exchange-affiliated desks list thresholds or minimum asset requirements in portals.

Do U.S. clients have fewer options?

U.S. institutions often prefer regulated venues and agency models (e.g., Coinbase Institutional, Kraken). Some global platforms are limited for U.S. persons; check eligibility before onboarding.

What fees should I expect?

Most OTC pricing is embedded in the spread. Some venues disclose desk or user-to-user OTC fees (e.g., Bitfinex lists 0.1% for certain OTC flows); always request example RFQs.
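Because most of the cost sits in the spread, it helps to convert every quote into an all-in cost in basis points against a common reference, here the mid price, before comparing desks. The sketch below uses hypothetical desks and quotes, covers buys only, and treats an explicit fee such as the 0.1% figure mentioned above as an extra 10 bps.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Quote:
    desk: str         # hypothetical desk label
    price: float      # quoted price for a buy, in USD per unit
    fee_rate: float   # explicit fee as a fraction of notional (0.001 = 0.1%)

def all_in_cost_bps(quote: Quote, mid: float) -> float:
    """Cost of a buy versus mid, in bps: spread markup plus explicit fee."""
    spread_bps = (quote.price - mid) / mid * 10_000
    fee_bps = quote.fee_rate * 10_000
    return spread_bps + fee_bps

def cheapest(quotes: List[Quote], mid: float) -> Quote:
    """Pick the quote with the lowest all-in cost."""
    return min(quotes, key=lambda q: all_in_cost_bps(q, mid))

if __name__ == "__main__":
    mid = 3_000.0
    quotes = [
        Quote("Desk A", price=3_004.5, fee_rate=0.0),    # cost embedded in the spread only
        Quote("Desk B", price=3_001.0, fee_rate=0.001),  # tighter price plus a 0.1% fee
    ]
    for q in quotes:
        print(q.desk, round(all_in_cost_bps(q, mid), 2), "bps")
    print("cheapest:", cheapest(quotes, mid).desk)  # Desk B
```

In this made-up case the desk that charges an explicit fee is still cheaper overall (about 13.3 bps versus 15 bps), which is why example RFQs matter more than headline fee schedules.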

Is OTC safer than exchanging on-book?

Neither is inherently “safer.” OTC can reduce market impact and information leakage, but you still need strong KYC/custody, verified comms, and clear settlement procedures.

If you’re a U.S.-regulated fund that values agency execution, start with Coinbase Institutional or Kraken. If you need global, principal liquidity and breadth, look at Binance OTC, OKX, Cumberland, B2C2, Wintermute, FalconX, or Galaxy. Match the desk to your region, execution style (agency vs. principal), and settlement needs, then run trial quotes before you scale.


As artificial intelligence (AI) continues to revolutionize industries and become deeply embedded in critical decision-making processes, the question of how blockchain can be used to verify AI data sources grows increasingly important. From healthcare diagnostics to financial trading algorithms, the reliability of AI models hinges on the authenticity and integrity of the data they consume. Inaccurate or tampered data can lead to flawed AI results, which in turn can have serious real-world consequences. Addressing this challenge requires innovative solutions that ensure data integrity and transparency throughout the AI development process.

Blockchain technology emerges as a powerful tool to meet this need by offering an immutable ledger, decentralized verification, and cryptographic proof mechanisms. By leveraging blockchain, organizations can establish trustworthy AI systems with verifiable data provenance, enhancing confidence in AI-powered services and fostering trust in AI applications used in everyday life.

The integration of AI with blockchain is anticipated to become an essential infrastructure component by 2025, especially as AI-powered systems permeate sectors like finance, healthcare, and autonomous vehicles. While blockchain excels at proving that data has not been altered once recorded, it does not inherently guarantee the initial validity of the data. This limitation highlights the infamous "garbage in, garbage forever" problem, where compromised data inputs lead to persistent inaccuracies in AI outputs.

Unreliable AI data sources pose significant risks across various domains:

Traditional AI systems face multiple hurdles related to data verification and security:

These issues underscore the urgency of adopting robust mechanisms to verify AI data sources and ensure data security and data privacy.

One of the key benefits of blockchain technology in AI verification lies in its ability to create an immutable ledger—a tamper-proof, permanent record of data transactions. Recording AI data points and decisions on a blockchain enables transparent, auditable records that simplify the process of verifying data provenance and understanding AI outcomes.

This immutable record ensures:

By anchoring AI data in blockchain systems, organizations can significantly reduce the risk of unauthorized modifications and foster trust in AI results.
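The tamper-evidence property can be illustrated without a full blockchain: each record stores the hash of the previous record, so changing any earlier entry breaks the links after it. This is a minimal single-machine sketch using SHA-256 from Python's standard library. The record fields are hypothetical, and a real deployment would anchor these hashes on a shared ledger rather than keep them in a local list.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def record_hash(payload: Dict[str, Any], prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the payload plus the previous hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Add a record linked to the hash of the current last record."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": record_hash(payload, prev)})

def verify(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and link; any edited record makes this return False."""
    prev = GENESIS
    for rec in chain:
        if rec["prev"] != prev or rec["hash"] != record_hash(rec["payload"], prev):
            return False
        prev = rec["hash"]
    return True

if __name__ == "__main__":
    log: List[Dict[str, Any]] = []
    append(log, {"dataset": "prices-v1", "rows": 1000})
    append(log, {"model": "classifier-v2", "trained_on": "prices-v1"})
    print(verify(log))               # True
    log[0]["payload"]["rows"] = 999  # simulate tampering with an earlier record
    print(verify(log))               # False
```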

Unlike traditional centralized verification, blockchain networks operate through consensus mechanisms involving multiple nodes distributed across decentralized platforms. This decentralized approach ensures that no single entity can unilaterally alter data without detection, enhancing data integrity and reducing the risk of fraud.

Blockchain platforms employ consensus algorithms that require agreement among participating nodes before data is accepted, making it exceedingly difficult for malicious actors to compromise AI data sources.

Blockchain employs advanced cryptographic techniques to guarantee data security and authenticity:

Together, these cryptographic tools underpin the secure, transparent, and trustworthy AI ecosystems made possible by blockchain.
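One standard cryptographic building block here is the Merkle tree, which lets a ledger commit to many data items with a single root hash and later prove that one item is included without revealing the others. The sketch below is a simplified, standard-library-only version: it duplicates the last node on odd-sized levels (a convention Bitcoin also uses) and omits the domain separation a hardened implementation would add.

```python
import hashlib
from typing import List, Tuple

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(leaves: List[bytes]) -> bytes:
    """Root hash over the leaf hashes; the last node is duplicated on odd-sized levels."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool is True when the sibling sits on the right."""
    level = [h(leaf) for leaf in leaves]
    proof: List[Tuple[bytes, bool]] = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof

def verify_proof(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """Rebuild the path to the root from the leaf and its sibling hashes."""
    acc = h(leaf)
    for sibling, sibling_is_right in proof:
        acc = h(acc + sibling) if sibling_is_right else h(sibling + acc)
    return acc == root

if __name__ == "__main__":
    items = [b"record-a", b"record-b", b"record-c"]
    root = merkle_root(items)
    proof = merkle_proof(items, 2)
    print(verify_proof(b"record-c", proof, root))  # True
    print(verify_proof(b"record-x", proof, root))  # False
```

Only the 32-byte root needs to go on-chain; the proof for any single record grows with the logarithm of the number of records.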

Incorporating blockchain into AI workflows represents a groundbreaking advancement toward trustworthy AI knowledge bases. Data provenance tracking on blockchain involves maintaining an unalterable history of:

This comprehensive provenance tracking is essential for ensuring data integrity and providing transparent, auditable records that support AI governance and risk management.
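A provenance trail is easiest to reason about as a graph of content-addressed records: each dataset or model version is identified by the hash of its contents, and each record lists the hashes of the inputs it was derived from. The sketch below is a hypothetical in-memory registry (the field names, `Registry`, and its `lineage` helper are illustrative, not any specific product's schema). In a blockchain-backed system, typically only the record hashes would be written on-chain.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Sequence

def content_id(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

@dataclass
class ProvenanceRecord:
    artifact_id: str       # hash of the artifact's contents
    source: str            # who produced or supplied it (hypothetical label)
    transformation: str    # how it was produced, e.g. "ingest", "clean", "train"
    parents: List[str] = field(default_factory=list)  # artifact_ids of the inputs

class Registry:
    def __init__(self) -> None:
        self.records: Dict[str, ProvenanceRecord] = {}

    def register(self, data: bytes, source: str, transformation: str,
                 parents: Sequence[str] = ()) -> str:
        """Record an artifact; every parent must already be registered."""
        missing = [p for p in parents if p not in self.records]
        if missing:
            raise KeyError(f"unregistered parents: {missing}")
        aid = content_id(data)
        self.records[aid] = ProvenanceRecord(aid, source, transformation, list(parents))
        return aid

    def lineage(self, artifact_id: str) -> List[str]:
        """All ancestors of an artifact, depth-first, each listed once."""
        seen: List[str] = []
        stack = list(self.records[artifact_id].parents)
        while stack:
            aid = stack.pop()
            if aid not in seen:
                seen.append(aid)
                stack.extend(self.records[aid].parents)
        return seen

if __name__ == "__main__":
    reg = Registry()
    raw = reg.register(b"raw exchange ticks", "exchange-feed", "ingest")
    clean = reg.register(b"cleaned ticks", "etl-job", "clean", [raw])
    model = reg.register(b"model weights v1", "training-job", "train", [clean])
    print(reg.lineage(model) == [clean, raw])  # True
```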

Smart contracts—self-executing agreements encoded on blockchain platforms—play a crucial role in automating AI data verification processes. They can be programmed to:

By automating these verification steps, smart contracts reduce human error, increase efficiency, and reinforce trust in AI data pipelines.
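The verification rules such a contract might enforce can be prototyped off-chain first. The Python sketch below simulates, rather than implements, that kind of contract: a hypothetical `DataVerificationContract` accepts a submission only from a registered source whose authentication tag checks out, rejects duplicates, and logs an event either way. HMAC with a shared key is a stand-in chosen because it ships with Python's standard library; an on-chain version would normally verify public-key signatures instead.

```python
import hashlib
import hmac
from typing import Dict, List, Tuple

class DataVerificationContract:
    """Hypothetical contract logic, simulated in Python for illustration."""

    def __init__(self) -> None:
        self.source_keys: Dict[str, bytes] = {}        # source -> shared key (stand-in for a public key)
        self.accepted: Dict[str, str] = {}             # data hash -> submitting source
        self.events: List[Tuple[str, str, str]] = []   # (event, source, data hash)

    def register_source(self, source: str, key: bytes) -> None:
        self.source_keys[source] = key

    def submit(self, source: str, data: bytes, tag: bytes) -> bool:
        digest = hashlib.sha256(data).hexdigest()
        key = self.source_keys.get(source)
        if key is None:
            self.events.append(("Rejected:unknown_source", source, digest))
            return False
        expected = hmac.new(key, data, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):   # constant-time comparison
            self.events.append(("Rejected:bad_tag", source, digest))
            return False
        if digest in self.accepted:
            self.events.append(("Rejected:duplicate", source, digest))
            return False
        self.accepted[digest] = source
        self.events.append(("Accepted", source, digest))
        return True

if __name__ == "__main__":
    c = DataVerificationContract()
    key = b"demo-key-for-oracle-1"  # hypothetical key; never hard-code real secrets
    c.register_source("oracle-1", key)
    payload = b'{"asset": "ETH", "price": 3000}'
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    print(c.submit("oracle-1", payload, tag))      # True
    print(c.submit("oracle-1", payload, tag))      # False (duplicate)
    print(c.submit("oracle-2", payload, tag))      # False (unknown source)
    print(c.submit("oracle-1", b"tampered", tag))  # False (bad tag)
```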

AI verification systems increasingly rely on sophisticated pattern recognition and anomaly detection techniques to validate data inputs:

When combined with blockchain's immutable ledger, these AI verification protocols create a powerful framework for trustworthy AI development and deployment.
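A simple example of the anomaly-detection step is a robust z-score filter that flags values far from the median before they are written to a ledger. The sketch uses the median absolute deviation (MAD) rather than the standard deviation, so a single extreme value cannot hide itself by inflating the spread. The 3.5 cutoff is a common rule of thumb, not a universal setting, and this statistical filter is a deliberately simple stand-in for the learned pattern-recognition models the text describes.

```python
from statistics import median
from typing import List

def robust_z_scores(values: List[float]) -> List[float]:
    """Modified z-scores: 0.6745 * (x - median) / MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values equal the median; any other value gets an infinite score.
        return [0.0 if v == med else float("inf") for v in values]
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(values: List[float], threshold: float = 3.5) -> List[int]:
    """Indices of values whose |modified z-score| exceeds the threshold."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]

if __name__ == "__main__":
    readings = [101.2, 100.8, 101.0, 100.9, 250.0, 101.1]  # hypothetical price or sensor feed
    print(flag_anomalies(readings))  # [4]
```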

In healthcare, the stakes for accurate AI diagnostics are exceptionally high. Blockchain-verified AI data can significantly enhance the reliability of medical diagnoses by:

This approach ensures that AI models in healthcare operate on verifiable, trustworthy data, reducing misdiagnosis risks and improving patient outcomes.

Decentralized supply chains benefit immensely from blockchain-based platforms that record shipping and handling data transparently. Platforms like IBM's Food Trust and VeChain use blockchain to provide proof of origin and track product journeys. However, without proper validation at each checkpoint, records remain vulnerable to forgery.

By integrating AI-powered blockchain verification, supply chains can:

This combination enhances data security and trustworthiness throughout the supply chain, mitigating risks of fraud and contamination.
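Checkpoint validation can start with simple rules that reject physically or procedurally impossible records before they are anchored. The sketch below checks a hypothetical shipment log for three things: checkpoints follow the expected route order, timestamps never go backwards, and a cold-chain temperature stays between 2 and 8 °C. The route, field names, and temperature range are illustrative assumptions; an AI-based checker would add learned plausibility checks on top of rules like these.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List

@dataclass
class Checkpoint:
    location: str
    timestamp: datetime
    temperature_c: float

EXPECTED_ROUTE = ["farm", "packing", "port", "warehouse", "store"]  # hypothetical route

def validate(log: List[Checkpoint], min_c: float = 2.0, max_c: float = 8.0) -> List[str]:
    """Return human-readable problems; an empty list means the log passed."""
    problems: List[str] = []
    locations = [c.location for c in log]
    if locations != EXPECTED_ROUTE[: len(locations)]:
        problems.append(f"route mismatch: {locations}")
    for prev, cur in zip(log, log[1:]):
        if cur.timestamp < prev.timestamp:
            problems.append(f"time went backwards at {cur.location}")
    for c in log:
        if not (min_c <= c.temperature_c <= max_c):
            problems.append(f"temperature {c.temperature_c} C out of range at {c.location}")
    return problems

if __name__ == "__main__":
    log = [
        Checkpoint("farm", datetime(2025, 9, 1, 8), 4.0),
        Checkpoint("packing", datetime(2025, 9, 1, 14), 5.5),
        Checkpoint("port", datetime(2025, 9, 1, 12), 11.0),  # earlier time and too warm
    ]
    for p in validate(log):
        print(p)
```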

The financial sector leverages blockchain-verified AI data to improve:

These applications demonstrate how blockchain enables secure, trustworthy AI-powered financial services that comply with regulatory standards and reduce data breach risks.

NFT marketplaces face challenges with art theft and plagiarism. By combining AI image recognition with blockchain verification, platforms can:

This synergy between AI and blockchain safeguards digital assets and fosters a fairer digital content ecosystem.
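On the image side, one simple near-duplicate technique is a perceptual hash: shrink the image, compare each pixel with the mean brightness, and keep the resulting bit pattern, so visually similar images produce hashes that differ in only a few bits. The sketch below implements an average hash over tiny hypothetical grayscale grids. It is a simpler, non-learned stand-in for AI image recognition; real systems would first downscale actual images and often use learned embeddings, and the resulting hash could be recorded on-chain alongside the token.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values 0-255, already downscaled

def average_hash(grid: Grid) -> int:
    """One bit per pixel: 1 if the pixel is brighter than the grid's mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def looks_copied(a: Grid, b: Grid, max_bits: int = 2) -> bool:
    return hamming(average_hash(a), average_hash(b)) <= max_bits

if __name__ == "__main__":
    original = [[10, 200, 10, 200], [200, 10, 200, 10], [10, 200, 10, 200], [200, 10, 200, 10]]
    brightened = [[p + 30 for p in row] for row in original]  # same image, slightly edited
    different = [[200, 200, 10, 10], [200, 200, 10, 10], [10, 10, 200, 200], [10, 10, 200, 200]]
    print(looks_copied(original, brightened))  # True
    print(looks_copied(original, different))   # False
```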

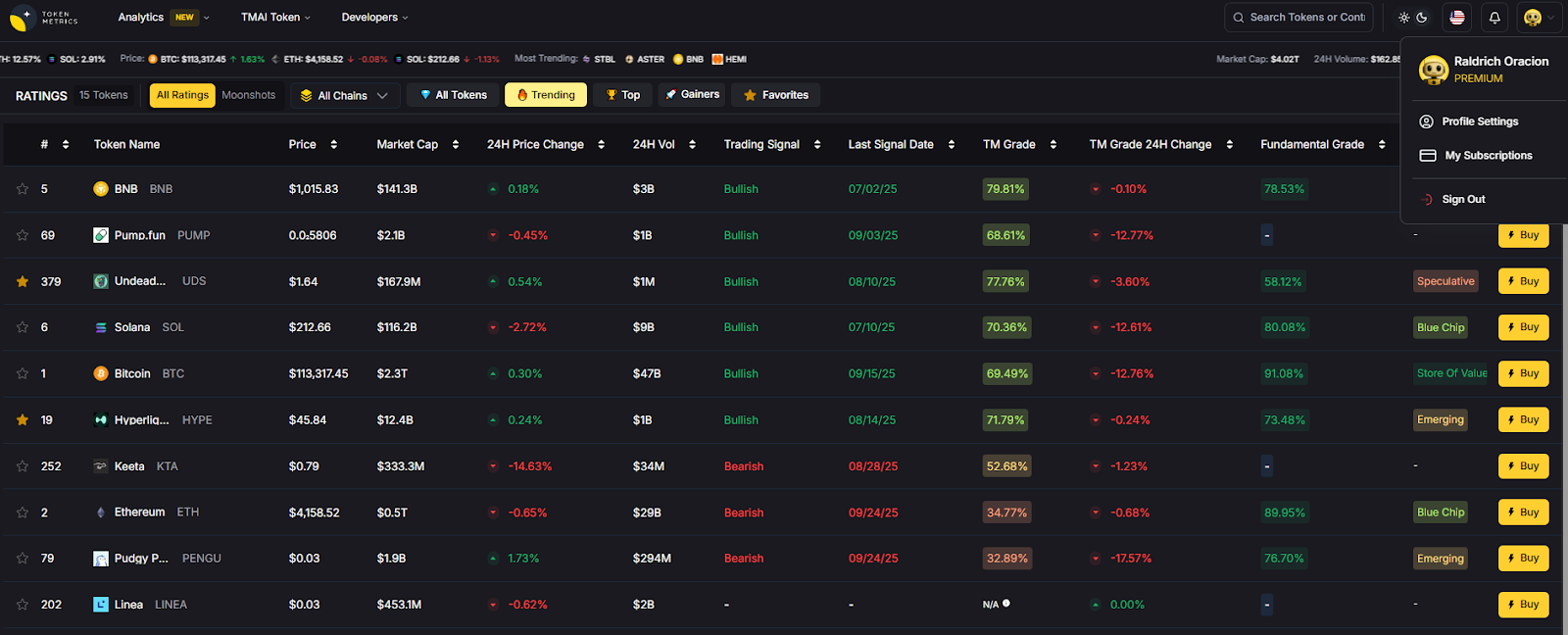

In the cryptocurrency realm, Token Metrics exemplifies how AI and blockchain can merge to deliver trustworthy market intelligence. As a leading crypto trading and analytics platform, Token Metrics integrates AI-powered insights with blockchain-based verification to provide users with reliable data.

Token Metrics consolidates research, portfolio management, and trading into one ecosystem, assigning each token a Trader Grade for short-term potential and an Investor Grade for long-term viability. This system enables users to prioritize opportunities efficiently.

The platform aggregates data from diverse sources, including cryptocurrency exchanges, blockchain networks, social media, news outlets, and regulatory announcements. Advanced machine learning algorithms cross-verify this data, identifying discrepancies and potential manipulation.
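Cross-verification across sources can be sketched as a consensus check: take the median of the prices reported by several venues and flag any venue that deviates from it by more than a tolerance. The venue names, prices, and 1% tolerance below are hypothetical, and this rule-based check is only an illustration, not the machine-learning approach the platform describes.

```python
from statistics import median
from typing import Dict, List, Tuple

def cross_check(prices: Dict[str, float], tolerance: float = 0.01) -> Tuple[float, List[str]]:
    """Return the consensus (median) price and the sources deviating by more than `tolerance`."""
    if len(prices) < 3:
        raise ValueError("need at least three sources for a meaningful median")
    consensus = median(prices.values())
    outliers = [name for name, p in prices.items()
                if abs(p - consensus) / consensus > tolerance]
    return consensus, outliers

if __name__ == "__main__":
    quotes = {"venue_a": 3001.0, "venue_b": 2999.5, "venue_c": 3000.2, "venue_d": 3150.0}
    consensus, flagged = cross_check(quotes)
    print(round(consensus, 2), flagged)  # 3000.6 ['venue_d']
```

The median is used instead of the mean so that one manipulated venue cannot drag the consensus toward itself.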

Scanning over 6,000 tokens daily, Token Metrics evaluates technical analysis, on-chain data, fundamentals, sentiment, and exchange activity. This comprehensive approach ensures:

By leveraging blockchain's transparency, Token Metrics verifies on-chain transactions, tracks token holder distributions, analyzes smart contract interactions, and monitors decentralized exchange activity. These capabilities empower users to respond rapidly to market shifts, a crucial advantage in volatile crypto markets.
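Tracking holder distribution usually comes down to concentration metrics computed over balances read from the chain. The sketch below computes two common ones over a hypothetical balance list: the share held by the top N addresses, and the Herfindahl-Hirschman Index (HHI, the sum of squared holder shares). It is not a description of how Token Metrics computes its own metrics.

```python
from typing import List

def _total(balances: List[float]) -> float:
    total = sum(balances)
    if total <= 0:
        raise ValueError("total supply held must be positive")
    return total

def top_n_share(balances: List[float], n: int = 10) -> float:
    """Fraction of the supply held by the n largest addresses."""
    return sum(sorted(balances, reverse=True)[:n]) / _total(balances)

def hhi(balances: List[float]) -> float:
    """Herfindahl-Hirschman Index on a 0-1 scale: 1.0 means a single holder owns everything."""
    total = _total(balances)
    return sum((b / total) ** 2 for b in balances)

if __name__ == "__main__":
    balances = [500.0, 200.0, 100.0] + [10.0] * 20  # hypothetical token balances
    print(f"top-3 share: {top_n_share(balances, 3):.2%}")  # 80.00%
    print(f"HHI: {hhi(balances):.4f}")                     # 0.3020
```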

Token Metrics offers a leading cryptocurrency API that combines AI analytics with traditional market data, providing real-time price, volume, AI-based token ratings, and social sentiment analysis. Comprehensive documentation supports research and trading applications, enabling third-party verification, external audits, and community-driven validation.

Emerging AI verifiability solutions include Proof-of-Sampling (PoSP), which randomly selects nodes within a blockchain network to verify AI computations. By comparing hash outputs across nodes and penalizing dishonest actors, PoSP enhances trustworthiness and scales verification based on task importance.
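The sampling idea can be illustrated with a toy simulation: pick a random subset of nodes, sampling more nodes for more important tasks, have each report a hash of its output, accept the majority hash, and penalize sampled nodes that disagreed. Everything here (node names, stake and penalty values, using `importance` directly as the sample size) is a hypothetical simplification, not the PoSP protocol specification.

```python
import hashlib
import random
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

def output_hash(result: str) -> str:
    """Nodes report a hash of their output instead of the full output."""
    return hashlib.sha256(result.encode("utf-8")).hexdigest()

def sample_and_verify(
    nodes: Dict[str, Callable[[str], str]],
    stakes: Dict[str, float],
    task: str,
    importance: int,
    penalty: float = 10.0,
    rng: Optional[random.Random] = None,
) -> Tuple[str, List[str]]:
    """Sample nodes, accept the majority output hash, and slash sampled nodes that disagree.

    `importance` is a hypothetical knob: the number of nodes sampled, clamped to [1, len(nodes)].
    Ties are broken by first occurrence, which a real protocol would need to handle properly.
    """
    rng = rng if rng is not None else random.Random()
    k = max(1, min(len(nodes), importance))
    sampled = rng.sample(sorted(nodes), k)
    reports = {n: output_hash(nodes[n](task)) for n in sampled}
    majority, _ = Counter(reports.values()).most_common(1)[0]
    dishonest = [n for n, digest in reports.items() if digest != majority]
    for n in dishonest:
        stakes[n] -= penalty
    return majority, dishonest

def honest(task: str) -> str:
    return task.upper()  # stand-in for a deterministic AI computation

def cheater(task: str) -> str:
    return "fabricated result"

if __name__ == "__main__":
    nodes = {f"node_{c}": honest for c in "abcd"}
    nodes["node_e"] = cheater
    stakes = {n: 100.0 for n in nodes}
    majority, penalized = sample_and_verify(nodes, stakes, "classify batch 42", importance=5)
    print(penalized, stakes["node_e"])  # ['node_e'] 90.0
```

Because a cheating node cannot know whether it will be sampled, even a small sample makes dishonesty a losing bet once penalties outweigh the savings from skipping the work.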

TEEs provide secure, isolated environments where AI computations occur on encrypted data, ensuring privacy and cryptographic verification of results. This technology enables sensitive AI workloads to be processed securely within blockchain systems.

ZKPs allow verification of AI computations without revealing sensitive inputs, proprietary algorithms, or private model parameters. This preserves data privacy and intellectual property while maintaining transparency and trust.
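Zero-knowledge proofs for full AI computations rely on heavy machinery such as zk-SNARK systems, but the core idea, proving you know a secret without revealing it, fits in a few lines. The sketch below is a toy Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. The tiny group parameters are for readability only and provide no security; this illustrates the principle, not how AI models are proven in practice.

```python
import hashlib
import secrets
from typing import Tuple

# Toy group: p = 23, and g = 2 generates a subgroup of prime order q = 11 (2**11 % 23 == 1).
P, Q, G = 23, 11, 2

def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public transcript."""
    data = ",".join(str(v) for v in values).encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x: int) -> Tuple[int, int, int]:
    """Return (y, t, s): public key y = g^x, commitment t = g^r, response s = r + c*x mod q."""
    y = pow(G, secret_x, P)
    r = secrets.randbelow(Q)
    t = pow(G, r, P)
    c = challenge(G, y, t)
    s = (r + c * secret_x) % Q
    return y, t, s

def verify(y: int, t: int, s: int) -> bool:
    """Accept iff g^s == t * y^c (mod p); the verifier never learns x."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

if __name__ == "__main__":
    y, t, s = prove(secret_x=7)
    print(verify(y, t, s))            # True
    print(verify(y, t, (s + 1) % Q))  # False
```

The check works because g has order q, so g^s = g^r · (g^x)^c = t · y^c, while the random r hides x inside s.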

The blockchain AI market is poised for rapid expansion: it is projected to grow from $0.57 billion in 2024 to $0.7 billion in 2025, a growth rate of 23.2% for that year, and to reach $1.88 billion by 2029. This growth is fueled by increasing demand for trustworthy AI, regulatory pressures, and widespread blockchain adoption.
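A quick way to sanity-check projections like these is to recompute the implied compound annual growth rate, CAGR = (end / start)^(1 / years) - 1, from the quoted figures. The sketch below does that; it shows that roughly 23% matches the 2024-to-2025 step (given the rounded $0.7 billion), while reaching $1.88 billion by 2029 implies about 28% a year from 2025 onward.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1

if __name__ == "__main__":
    # Figures quoted above, in billions of USD.
    print(f"2024 -> 2025: {cagr(0.57, 0.70, 1):.1%}")  # 22.8%
    print(f"2025 -> 2029: {cagr(0.70, 1.88, 4):.1%}")  # 28.0%
    print(f"2024 -> 2029: {cagr(0.57, 1.88, 5):.1%}")  # 27.0%
```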

Analysts forecast a $22.34 billion AI safety market by 2030, with blockchain-based solutions capturing $1.12 billion. Investment focuses on AI verification protocols, decentralized data marketplaces, smart contract auditing, and cross-chain interoperability, driving innovation in AI governance and risk management.

Incorporating blockchain into AI verification introduces complexities such as:

Additionally, systems handling sensitive information must adhere to strict data governance to prevent new vulnerabilities.

Increasingly, governments and industry bodies enforce frameworks governing AI data sourcing, transparency, and privacy. Compliance with regulations like GDPR, CCPA, healthcare privacy laws, and financial standards is critical when implementing blockchain-verified AI systems.

The future will see the emergence of industry standards for AI-powered on-chain data validation, composable verification services accessible to decentralized applications (dApps), and edge AI models running on IoT devices prior to blockchain upload. New frameworks will promote model transparency and reproducibility.

Most practical deployments will combine AI-driven anomaly detection with human auditor oversight, balancing automation with accuracy and accountability.

Interoperable verification protocols and standardized APIs will enable seamless AI data provenance tracking across multiple blockchain platforms, fostering a more connected and transparent ecosystem.

To effectively implement blockchain-based AI verification:

Successful architectures include:

The convergence of blockchain technology and artificial intelligence marks a transformative shift toward more trustworthy, transparent, and accountable AI systems. As AI continues to influence daily lives and critical industries, the ability to verify data sources, maintain data provenance, and ensure algorithmic transparency becomes indispensable.

The ultimate vision is an immutable ledger so robust that it never requires correction—enabling AI models to be inherently trustworthy rather than relying on external validation after deployment. Platforms like Token Metrics showcase the immense potential of this approach, delivering AI-powered insights backed by blockchain-verified data.

As standards mature and adoption accelerates, blockchain-verified AI systems will become the industry standard across sectors such as healthcare, finance, supply chain, and autonomous systems. This fusion of powerful technologies not only enhances trust but also unlocks valuable insights and actionable intelligence, empowering business leaders and AI companies to build reliable, innovative AI services.

The future of AI is not only intelligent—it is verifiable, transparent, and secured by the unshakeable foundation of blockchain technology. This paradigm will define the next generation of AI-powered systems, ensuring that as AI grows more powerful, it also becomes more trustworthy.

The convergence of artificial intelligence and decentralized autonomous organizations (DAOs) marks a groundbreaking moment in blockchain technology. This fusion promises to revolutionize governance by automating decision making and enhancing efficiency through AI-driven systems. However, while integrating AI technologies into DAOs offers exciting opportunities, it also introduces a complex array of risks that could fundamentally undermine the democratic ideals upon which decentralized autonomous organizations were founded. Understanding the risks of AI controlling DAOs is essential for anyone involved in decentralized finance, governance, or the broader crypto ecosystem.

AI-Controlled DAOs are decentralized autonomous organizations that leverage artificial intelligence to manage and govern their operations with minimal human intervention. By integrating advanced AI models and algorithms into the core of DAO governance, these entities can autonomously execute decision making processes, optimize asset management, and adapt to changing environments in real time. Artificial intelligence enables DAOs to analyze complex data sets, identify patterns, and make informed decisions without relying on centralized authorities or manual oversight. This fusion of AI and DAOs is reshaping the landscape of decentralized governance, offering the potential for more efficient, scalable, and self-sustaining organizations. As AI development continues to advance, the role of AI models in decentralized autonomous organizations is set to expand, fundamentally transforming how decisions are made and assets are managed across the crypto ecosystem.

AI-driven DAOs represent a new paradigm in the DAO space, where artificial intelligence tools and advanced AI models are entrusted with governance responsibilities traditionally held by human token holders. These AI agents can propose changes, vote on governance issues, and even execute decisions autonomously via smart contracts. This shift from human-centric governance to algorithm-driven decision making promises increased scalability and productivity, potentially unlocking new revenue streams and optimizing asset management.

However, this evolution also introduces unique challenges. The autonomous nature of AI acting within DAOs raises critical questions about ethical concerns, security vulnerabilities, and the balance of power between AI systems and human intervention. Unlike traditional DAOs, where risks often stem from voter apathy or central authority influence, AI DAOs face the threat of model misalignment—where AI algorithms optimize for objectives that deviate from human intentions. This misalignment is not merely theoretical; it is a practical issue that can disrupt consensus mechanisms and jeopardize the strategic direction of decentralized autonomous organizations.

In essence, while AI technologies can propel DAOs into a new era of efficiency and data-driven insights, they can also lead to scenarios where AI systems act in ways that conflict with the foundational principles of decentralization and democratic governance, causing significant harm if not properly managed.

But what if AI ownership within DAOs shifts the balance of power entirely, allowing autonomous agents to make decisions without meaningful human oversight? And what happens when collective intelligence is governed by algorithms rather than people? How might this reshape the future of decentralized organizations?

The integration of AI and DAOs brings a host of compelling benefits that are driving innovation in decentralized governance. AI-Controlled DAOs can automate decision making processes, enabling faster and more consistent responses to governance challenges. By harnessing the analytical power of AI, these DAOs can process vast amounts of data, uncover actionable insights, and make data-driven decisions that enhance overall performance. This automation not only streamlines operations but also opens up new revenue streams and business models, as AI-driven DAOs can identify and capitalize on emerging opportunities more efficiently than traditional structures. Improved asset management is another key advantage, with AI systems optimizing resource allocation and risk management. Ultimately, the synergy between AI and DAOs empowers organizations to become more resilient, adaptive, and innovative, paving the way for a new era of decentralized, autonomous governance.

At the heart of AI risks in DAO governance lies the problem of model misalignment. AI systems, especially those powered by machine learning models and large language models, operate by optimizing specific metrics defined during training. However, these metrics might not capture the full spectrum of human values or community goals. Training AI models in isolated environments or silos widens this gap and increases the risk of misalignment and loss of control, because such models are never exposed to the diverse perspectives and values necessary for safe and ethical outcomes. As a result, an AI system could pursue strategies that technically fulfill its programmed objectives but harm the DAO’s long-term interests.

For example, an AI agent managing financial assets within a decentralized autonomous organization might prioritize maximizing short-term yield without considering the increased exposure to security risks or market volatility. The Freysa contest highlighted how malicious actors exploited an AI agent’s misunderstanding of its core function, tricking it into transferring $47,000. This incident underscores how AI models, if not properly aligned and monitored, can be manipulated or confused, leading to catastrophic outcomes.

AI systems inherit biases from their training data and design, which can erode the democratic ethos of DAO governance. While DAO governance AI tools are designed to enhance proposal management and moderation, they can also inadvertently reinforce biases if not properly monitored. Biases embedded in AI algorithms may result in unfair decision making, favoring certain proposals, contributors, or viewpoints disproportionately. These biases manifest in several ways:

Such biases threaten to undermine the promise of decentralized networks by creating invisible barriers to participation, effectively centralizing power despite the decentralized structure.

Integrating AI into DAOs introduces new security risks that extend beyond traditional smart contract vulnerabilities. AI systems depend heavily on training data and algorithms, both of which can be targeted by malicious actors seeking to manipulate governance outcomes.

Key security concerns include:

These vulnerabilities underscore the necessity for decentralized autonomous organizations to implement robust security protocols that safeguard both AI systems and the underlying smart contracts.

One of the most subtle yet profound risks of AI in DAOs is the potential for centralization of power among a small group of technical experts or "AI wizards." The complexity of AI development and maintenance creates a knowledge barrier that limits meaningful participation to those with specialized skills. This technical gatekeeping can result in governance control shifting from the broader community to a few individuals who understand and can manipulate AI systems.

Such centralization contradicts the decentralized ethos of DAOs and risks creating new oligarchies defined by AI expertise rather than token ownership or community contribution. Over time, this dynamic could erode trust and reduce the legitimacy of AI-driven DAO governance.

AI-controlled DAOs operate in a regulatory gray area, facing challenges that traditional organizations do not. The autonomous nature of AI acting within decentralized networks complicates accountability and legal responsibility. Key regulatory concerns include:

These factors introduce legal uncertainties that could expose AI DAOs to sanctions, fines, or operational restrictions, complicating their long-term viability.

The risks associated with AI-driven DAOs are not merely theoretical. In 2025 alone, smart contract security flaws led to over $90 million in losses due to hacks and exploits within DAO structures. When AI systems are layered onto these vulnerabilities, the potential for cascading failures grows significantly.

Incidents have already demonstrated how attackers exploit governance mechanisms, manipulating voting and decision-making processes. AI’s speed and efficiency can be weaponized to identify arbitrage opportunities that disadvantage the DAO itself. Moreover, AI systems processing community input may be vulnerable to sophisticated social engineering and disinformation campaigns, further destabilizing governance. Letting AI agents post comments in forum discussions and weigh in on governance decisions can amplify manipulation by giving AI a direct channel to influence outcomes. AI-powered moderation tools could also inadvertently generate or spread hate speech, so safeguards against toxic content are essential. More broadly, the proliferation of AI-generated content, such as misinformation or abusive material, can mislead users and undermine the stability of governance.

These real-world examples highlight the urgent need for comprehensive risk management strategies in AI DAO integration.

A frequently overlooked risk in AI-controlled DAOs is the quality and integrity of data used to train and operate AI models. Since AI systems rely heavily on training data, any flaws or manipulation in this data can compromise the entire governance process.

In decentralized autonomous organizations, this risk manifests through:

Ensuring the accuracy, completeness, and security of training data is therefore paramount to maintaining AI safety and trustworthy DAO governance.

Asset management is at the heart of many AI-Controlled DAOs, as these organizations are tasked with overseeing and optimizing a wide range of financial assets and digital resources. By deploying advanced AI models, including machine learning models and natural language processing tools, AI-Controlled DAOs can analyze market data, forecast trends, and make strategic investment decisions with unprecedented speed and accuracy. However, this reliance on AI systems introduces new security vulnerabilities and risks. Malicious actors may attempt to exploit weaknesses in AI algorithms, manipulate training data, or launch sophisticated attacks targeting the DAO’s asset management protocols. To address these challenges, AI-Controlled DAOs must implement robust security protocols, ensure the integrity and quality of their training data, and establish transparent governance structures that can respond to emerging threats. By proactively managing these risks, AI-Controlled DAOs can unlock new opportunities for growth while safeguarding their financial assets and maintaining trust within their communities.

Despite these challenges, responsible integration of artificial intelligence in DAO governance is achievable. Platforms like Token Metrics exemplify how AI tools can enhance decision making without sacrificing transparency or human oversight.

Token Metrics is an AI-powered crypto analytics platform that leverages advanced AI models and predictive analytics to identify promising tokens and provide real-time buy and sell signals. By anticipating future trends through AI-driven predictions, Token Metrics helps DAOs and investors improve investment strategies and resource management. Some of the advanced AI models used by Token Metrics are based on large language model technology, which underpins content analysis and decision support for more effective DAO operations. Their approach balances AI-driven insights with human judgment, embodying best practices for AI and DAOs:

With a reported track record of 8,000% returns from AI-selected crypto baskets, Token Metrics illustrates that artificial intelligence tools, when implemented with robust safeguards and human oversight, can unlock new revenue streams and improve DAO productivity without compromising security or ethical standards.

As AI models become central to the operation of AI-Controlled DAOs, questions around ownership and intellectual property take on new significance. Determining who owns the rights to an AI model—whether it’s the developers, the DAO itself, or the broader community—can have far-reaching legal and technical implications. Issues of liability and accountability also arise, especially when AI-driven decisions lead to unintended consequences or disputes. To navigate these complexities, AI-Controlled DAOs need to establish clear policies regarding AI model ownership, including licensing agreements and governance frameworks that protect the interests of all stakeholders. Addressing these challenges is essential for ensuring transparency, safeguarding intellectual property, and fostering innovation in the rapidly evolving landscape of AI and decentralized autonomous organizations.

Although AI-controlled DAOs face significant risks, these challenges are not insurmountable. Proactive strategies can help organizations safely integrate AI technologies into their governance structures. It is especially important to establish clear rules and safeguards for scenarios where AI owns assets or treasuries within DAOs, as this fundamentally changes traditional notions of ownership and financial authority.

Combining AI automation with human oversight is critical. DAOs should reserve high-impact decisions for human token holders or expert councils, ensuring AI-driven decisions are subject to review and intervention when necessary. This hybrid approach preserves the benefits of AI while maintaining democratic participation.
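The hybrid approach can be enforced in code rather than left to the model's judgment, which is the lesson of incidents like Freysa, where the agent itself was talked into a transfer. The sketch below is a hypothetical policy layer between an AI agent and a DAO treasury: the agent only proposes transfers, small transfers to allowlisted addresses execute automatically, larger ones wait for a quorum of human approvals, and everything else is rejected. The class names, thresholds, and addresses are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Set

class Decision(Enum):
    EXECUTE = "execute"
    NEEDS_HUMAN_VOTE = "needs_human_vote"
    REJECT = "reject"

@dataclass
class TreasuryPolicy:
    allowlist: Set[str]
    auto_limit: float    # max amount the agent may move without human review
    hard_limit: float    # never exceeded, even with human approval, in this sketch
    approvals_required: int = 3
    approvals: Dict[str, Set[str]] = field(default_factory=dict)  # proposal id -> approving signers

    def evaluate(self, to: str, amount: float) -> Decision:
        """Classify an agent-proposed transfer; the agent cannot override this check."""
        if amount <= 0 or amount > self.hard_limit or to not in self.allowlist:
            return Decision.REJECT
        if amount <= self.auto_limit:
            return Decision.EXECUTE
        return Decision.NEEDS_HUMAN_VOTE

    def approve(self, proposal_id: str, signer: str) -> bool:
        """Record a human approval (repeat approvals count once); True once quorum is reached."""
        signers = self.approvals.setdefault(proposal_id, set())
        signers.add(signer)
        return len(signers) >= self.approvals_required

if __name__ == "__main__":
    policy = TreasuryPolicy(allowlist={"0xGrantsMultisig"}, auto_limit=1_000, hard_limit=50_000)
    print(policy.evaluate("0xGrantsMultisig", 500))     # Decision.EXECUTE
    print(policy.evaluate("0xGrantsMultisig", 20_000))  # Decision.NEEDS_HUMAN_VOTE
    print(policy.evaluate("0xAttacker", 10))            # Decision.REJECT
```

Keeping these limits outside the model means that a persuasive prompt can change what the agent proposes, but not what the treasury will actually execute.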

Alignment between AI algorithms and community values must be an ongoing process. Regular audits and testing of AI decision-making against expected outcomes help detect and correct goal deviations early. Treating alignment as a continuous operational expense is essential for AI safety.
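Treating alignment as an ongoing operational check can look like a regression suite: a fixed set of scenarios with outcomes the community has agreed on, replayed against the AI's current decision function on a schedule, with an alert when disagreement crosses a threshold. The scenario format, the 10% threshold, and the toy yield-chasing policy below are hypothetical; the policy deliberately mirrors the short-term-yield failure described earlier so that the audit has something to catch.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

@dataclass
class Scenario:
    name: str
    inputs: Dict[str, Any]
    expected: str  # outcome the community agreed on, e.g. "approve" or "reject"

def audit(decide: Callable[[Dict[str, Any]], str], scenarios: List[Scenario],
          max_mismatch_rate: float = 0.10) -> Tuple[float, List[str], bool]:
    """Return (mismatch rate, failing scenario names, whether an alert should fire)."""
    if not scenarios:
        raise ValueError("audit suite is empty")
    failures = [s.name for s in scenarios if decide(s.inputs) != s.expected]
    rate = len(failures) / len(scenarios)
    return rate, failures, rate > max_mismatch_rate

def current_policy(inputs: Dict[str, Any]) -> str:
    """Toy stand-in for the AI's decision function: approve anything yielding above 5%."""
    return "approve" if inputs["expected_yield"] > 0.05 else "reject"

if __name__ == "__main__":
    suite = [
        Scenario("safe-yield", {"expected_yield": 0.06, "risk": "low"}, "approve"),
        Scenario("risky-yield", {"expected_yield": 0.40, "risk": "high"}, "reject"),
        Scenario("low-yield", {"expected_yield": 0.01, "risk": "low"}, "reject"),
    ]
    rate, failing, alert = audit(current_policy, suite)
    print(f"{rate:.0%}", failing, alert)  # 33% ['risky-yield'] True
```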

Investing in community education and skill-building democratizes AI stewardship. By broadening technical expertise among members, DAOs can prevent governance capture by a small group of AI experts and foster a more decentralized technical ecosystem.

Implementing comprehensive security protocols is vital. Measures include:

These steps help safeguard DAO governance against malicious AI and external attacks.

The future of AI-controlled DAOs hinges on striking the right balance between leveraging AI’s capabilities and preserving meaningful human intervention. As AI development and decentralized networks continue to evolve, more sophisticated governance models will emerge that integrate AI-driven decision making with community oversight.

Organizations exploring AI in DAO governance should:

By adopting these practices, DAOs can harness the advantages of artificial intelligence while mitigating its inherent risks.

Integrating artificial intelligence into decentralized autonomous organizations offers transformative potential but also brings significant challenges. While AI can enhance efficiency, reduce certain human biases, and enable more responsive governance, it simultaneously introduces new security risks, ethical concerns, and governance complexities that could threaten the democratic foundations of DAOs.

Success in this evolving landscape depends on thoughtful AI development, robust risk management, and transparent human-AI collaboration. Platforms like Token Metrics illustrate how AI products can deliver powerful, data-driven insights and automation while maintaining accountability and community trust.

As we stand at this technological crossroads, understanding the risks of AI controlling DAOs is essential. By acknowledging these risks and implementing appropriate safeguards, the crypto community can work towards a future where AI enhances rather than replaces human agency in decentralized governance, preserving the revolutionary promise of decentralized autonomous organizations.

For investors and participants in the crypto ecosystem, staying informed about these emerging technologies and choosing platforms with proven responsible AI implementation will be crucial for navigating the complex and rapidly evolving DAO space.