Top Crypto Trading Platforms in 2025


Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend or getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.


DeFi protocols are maturing beyond early ponzi dynamics toward sustainable revenue models. Aave operates in this evolving landscape where real yield and proven product-market fit increasingly drive valuations rather than speculation alone. Growing regulatory pressure on centralized platforms creates tailwinds for decentralized alternatives—factors that inform our comprehensive AAVE price prediction framework.

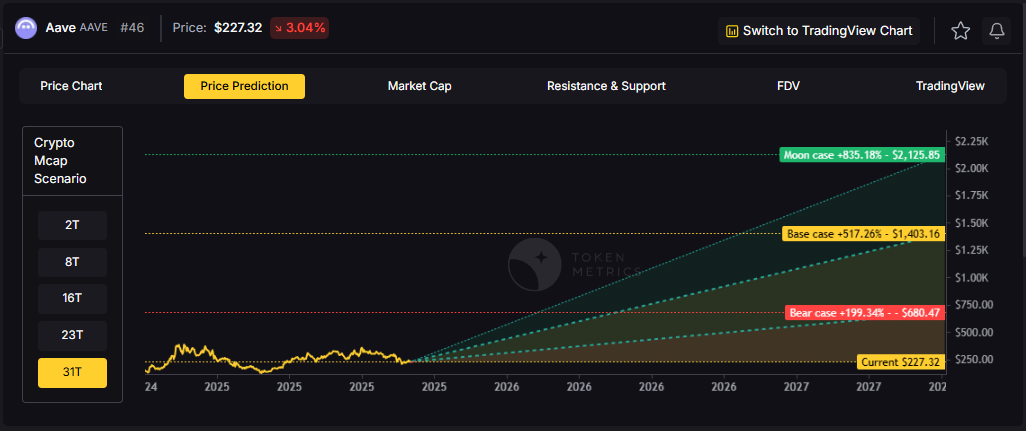

The scenario bands below reflect how AAVE price predictions might perform across different total crypto market cap environments. Each tier represents a distinct liquidity regime, from bear conditions with muted DeFi activity to moon scenarios where decentralized infrastructure captures significant value from traditional finance.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

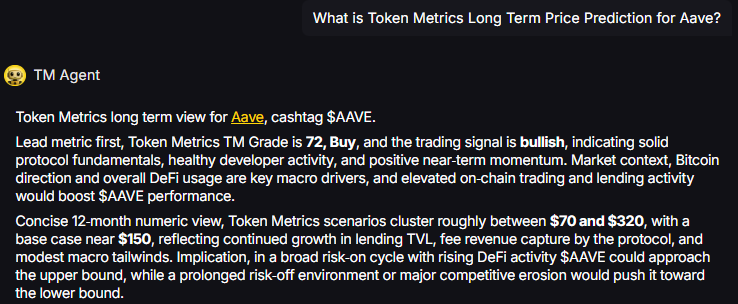

TM Agent baseline: Token Metrics TM Grade is 72 (Buy), and the trading signal is bullish, indicating solid protocol fundamentals, healthy developer activity, and positive near-term momentum. Over a twelve-month horizon, Token Metrics price prediction scenarios cluster roughly between $70 and $320, with a base case near $150, reflecting continued growth in lending TVL, fee revenue capture by the protocol, and modest macro tailwinds.

Live details: Aave Token Details

Affiliate Disclosure: We may earn a commission from qualifying purchases made via this link, at no extra cost to you.

Our Token Metrics price prediction framework spans four market cap tiers, each representing different levels of crypto market maturity and liquidity:

8T Market Cap - AAVE Price Prediction:

At an 8 trillion dollar total crypto market cap, AAVE projects to $293.45 in bear conditions, $396.69 in the base case, and $499.94 in the moon case.

Doubling the market to 16 trillion expands the price prediction range to $427.46 (bear), $732.18 (base), and $1,041.91 (moon).

At 23 trillion, the price prediction scenarios show $551.46 (bear), $1,007.67 (base), and $1,583.86 (moon).

In the maximum liquidity scenario of 31 trillion, AAVE price predictions could reach $680.47 (bear), $1,403.16 (base), or $2,175.85 (moon).

Each tier assumes progressively stronger market conditions, with the base case price prediction reflecting steady growth and the moon case requiring sustained bull market dynamics.
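The tier figures above are easier to compare as multiples of a reference price. The sketch below is only an illustration: the scenario prices are copied from this article, the reference price is the $228.16 quoted in the FAQ further down, and the helper names `multiples` and `tiers_reaching` are made up for this example.

```python
# Scenario prices copied from the article (USD per AAVE).
SCENARIOS = {
    "8T": {"bear": 293.45, "base": 396.69, "moon": 499.94},
    "16T": {"bear": 427.46, "base": 732.18, "moon": 1041.91},
    "23T": {"bear": 551.46, "base": 1007.67, "moon": 1583.86},
    "31T": {"bear": 680.47, "base": 1403.16, "moon": 2175.85},
}

# Reference price quoted in the article's FAQ; an input, not live data.
REFERENCE_PRICE = 228.16


def multiples(reference: float = REFERENCE_PRICE) -> dict:
    """Express every scenario price as a multiple of the reference price."""
    return {
        tier: {case: round(price / reference, 2) for case, price in cases.items()}
        for tier, cases in SCENARIOS.items()
    }


def tiers_reaching(target: float) -> list:
    """List the (tier, case) pairs whose price is at or above the target."""
    return [
        (tier, case)
        for tier, cases in SCENARIOS.items()
        for case, price in cases.items()
        if price >= target
    ]


if __name__ == "__main__":
    for tier, cases in multiples().items():
        print(tier, cases)
    print("Scenarios at or above $1,000:", tiers_reaching(1000.0))
    print("Scenarios at or above 10x:", tiers_reaching(10 * REFERENCE_PRICE))
```

Under these inputs, no modeled scenario reaches a full 10x of the reference price; the closest is the 31T moon case at roughly 9.5x.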

Aave represents one opportunity among hundreds in crypto markets. Token Metrics Indices bundle AAVE with top one hundred assets for systematic exposure to the strongest projects. Single tokens face idiosyncratic risks that diversified baskets mitigate.

Historical index performance demonstrates the value of systematic diversification versus concentrated positions.

Aave is a decentralized lending protocol that operates across multiple EVM-compatible chains including Ethereum, Polygon, Arbitrum, and Optimism. The network enables users to supply crypto assets as collateral and borrow against them in an over-collateralized manner, with interest rates dynamically adjusted based on utilization.

The AAVE token serves as both a governance asset and a backstop for the protocol through the Safety Module, where stakers earn rewards in exchange for assuming shortfall risk. Primary utilities include voting on protocol upgrades, fee switches, collateral parameters, and new market deployments.

Token Metrics AI provides comprehensive context on Aave's positioning and challenges.

Vision: Aave aims to create an open, accessible, and non-custodial financial system where users have full control over their assets. Its vision centers on decentralizing credit markets and enabling seamless, trustless lending and borrowing across blockchain networks.

Problem: Traditional financial systems often exclude users due to geographic, economic, or institutional barriers. Even in crypto, accessing credit or earning yield on idle assets can be complex, slow, or require centralized intermediaries. Aave addresses the need for transparent, permissionless, and efficient lending and borrowing markets in the digital asset space.

Solution: Aave uses a decentralized protocol where users supply assets to liquidity pools and earn interest, while borrowers can draw from these pools by posting collateral. It supports features like variable and stable interest rates, flash loans, and cross-chain functionality through its Layer 2 and multi-chain deployments. The AAVE token is used for governance and as a safety mechanism via its staking program (Safety Module).

Market Analysis: Aave is a leading player in the DeFi lending sector, often compared with protocols like Compound and Maker. It benefits from strong brand recognition, a mature codebase, and ongoing innovation such as Aave Arc for institutional pools and cross-chain expansion. Adoption is driven by liquidity, developer activity, and integration with other DeFi platforms. Key risks include competition from newer lending protocols, regulatory scrutiny on DeFi, and smart contract risks. As a top DeFi project, Aave's performance reflects broader trends in decentralized finance, including yield demand, network security, and user trust. Its multi-chain strategy helps maintain relevance amid shifting ecosystem dynamics.

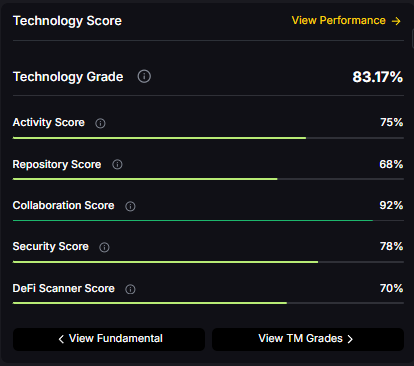

Fundamental Grade: 75.51% (Community 77%, Tokenomics 100%, Exchange 100%, VC 49%, DeFi Scanner 70%).

Technology Grade: 83.17% (Activity 75%, Repository 68%, Collaboration 92%, Security 78%, DeFi Scanner 70%).

Yes. Based on our price prediction scenarios, AAVE could reach $1,007.67 in the 23T base case and $1,041.91 in the 16T moon case. Not financial advice.

At the current price of $228.16, a 10x would reach $2,281.60. This is slightly above the 31T moon case price prediction of $2,175.85, so it falls outside every scenario modeled here and would require liquidity expansion beyond even the most bullish tier. Not financial advice.

Our moon case price predictions range from $499.94 at 8T to $2,175.85 at 31T. These scenarios assume maximum liquidity expansion and strong Aave adoption. Not financial advice.

Our comprehensive 2027 price prediction framework suggests AAVE could trade between $293.45 and $2,175.85, depending on market conditions and total crypto market capitalization. The base case ranges from $396.69 to $1,403.16 across the different market cap environments. Not financial advice.

AAVE shows strong fundamentals (75.51% grade) and technology scores (83.17% grade), with bullish trading signals. However, all price predictions involve uncertainty and risk. Always conduct your own research and consult financial advisors before investing. Not financial advice.

Track live grades and signals: Token Details

Want exposure? Buy AAVE on MEXC

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.


x402 is an open-source, HTTP-native payment protocol developed by Coinbase that enables pay-per-call API access using crypto wallets. It leverages the HTTP 402 Payment Required status code to create seamless, keyless API payments.

It eliminates traditional API keys and subscriptions, allowing agents and applications to pay for exactly what they use in real time. It works across Base and Solana with USDC and selected native tokens such as TMAI.

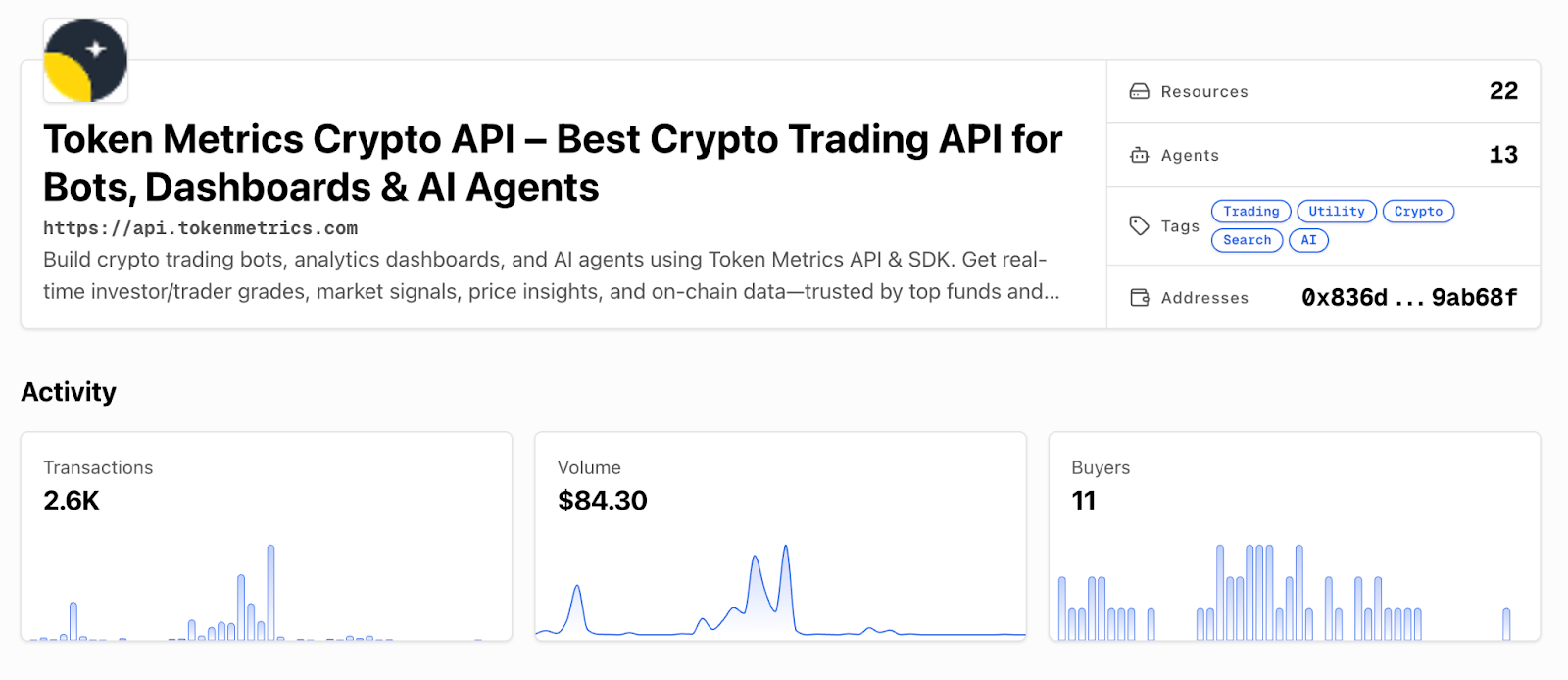

Start using the Token Metrics x402 integration here: https://www.x402scan.com/server/244415a1-d172-4867-ac30-6af563fd4d25

x402 transforms API access by making payments native to HTTP requests.

| Feature | Traditional APIs | x402 APIs |
| --- | --- | --- |
| Authentication | API keys, tokens | Wallet signature |
| Payment Model | Subscription, prepaid | Pay-per-call |
| Onboarding | Sign up, KYC, billing | Connect wallet |
| Rate Limits | Fixed tiers | Economic (pay more = more access) |
| Commitment | Monthly/annual | Zero, per-call only |

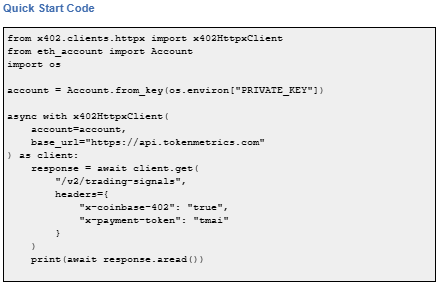

How to use it: Add the x-coinbase-402: true header to a request to any supported endpoint, then sign the payment with your wallet. The API responds as soon as the micropayment is confirmed.
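The request, 402 challenge, sign, and retry loop described here can be sketched as a small offline simulation. Everything in it is a stand-in: `fake_server` is not the Token Metrics API, `sign_payment` returns a plain string instead of a wallet signature, and the `x-payment` header name and the response fields are placeholders chosen for this example. Only the `x-coinbase-402` header and the $0.017 price come from this article. A real integration would rely on an x402 client library rather than hand-rolled code like this.

```python
from dataclasses import dataclass

PRICE_USDC = 0.017  # lowest per-call price quoted in this article


@dataclass
class Response:
    status: int
    body: dict


def fake_server(headers: dict) -> Response:
    """Stand-in for a paid endpoint: answers 402 until a valid payment arrives."""
    expected = f"signed:{PRICE_USDC}:USDC"
    if headers.get("x-payment") != expected:  # hypothetical header name
        return Response(402, {"amount": PRICE_USDC, "asset": "USDC"})
    return Response(200, {"data": "ok"})


def sign_payment(requirements: dict, max_amount: float) -> str:
    """Stub for wallet signing; refuses quotes above the caller's spend limit."""
    if requirements["amount"] > max_amount:
        raise ValueError("quoted price exceeds spend limit")
    return f"signed:{requirements['amount']}:{requirements['asset']}"


def call_with_payment(max_amount: float, max_attempts: int = 2) -> Response:
    """Send the request, pay when challenged with 402, and retry."""
    headers = {"x-coinbase-402": "true"}
    response = fake_server(headers)
    for _ in range(max_attempts):
        if response.status != 402:
            break
        headers["x-payment"] = sign_payment(response.body, max_amount)
        response = fake_server(headers)
    return response


if __name__ == "__main__":
    print(call_with_payment(max_amount=0.05))
```

Checking the quoted amount against a caller-supplied limit before signing keeps a misbehaving endpoint from charging more than intended.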

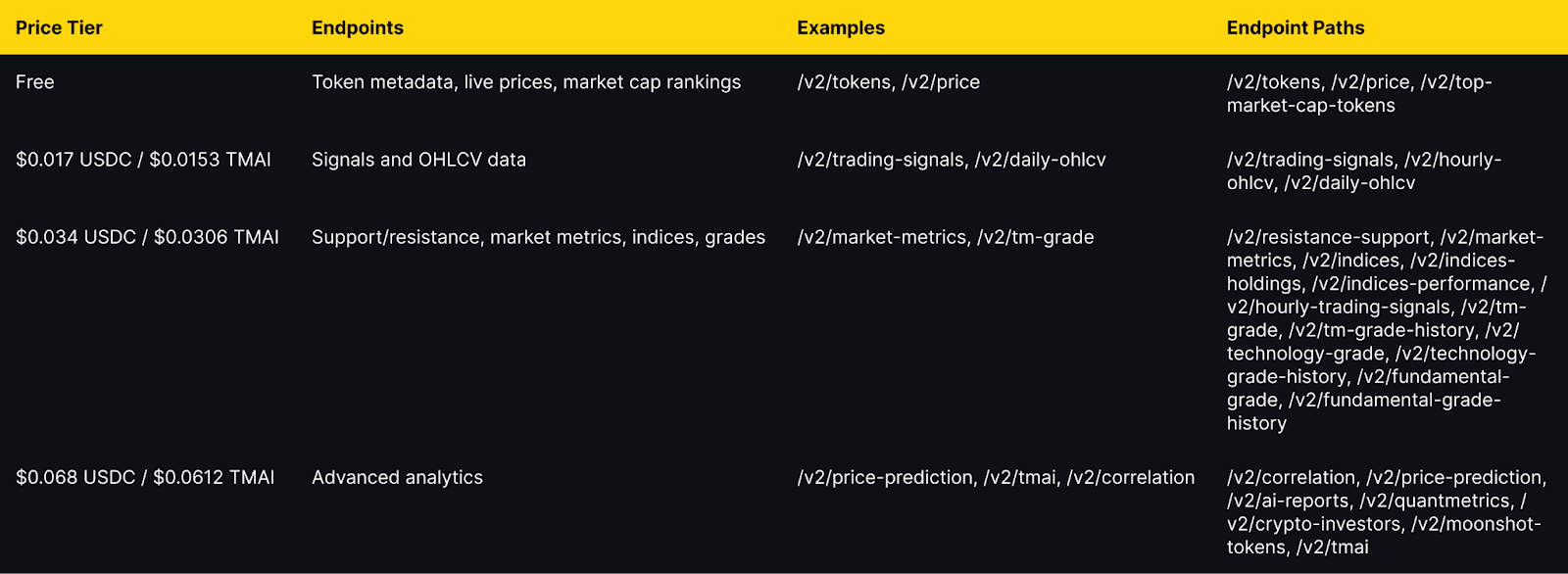

Token Metrics integration: All public endpoints available via x402 with per-call pricing from $0.017 to $0.068 USDC (10% discount with TMAI token).

Explore live agents: https://www.x402scan.com/composer.

The Protocol Flow

The HTTP 402 status code was reserved in HTTP/1.1 in 1997 for future digital payment use cases and was never standardized for any specific payment scheme. x402 activates this path by using 402 responses to coordinate crypto payments during API requests.

Why this matters: It eliminates intermediary payment processors, enables true machine-to-machine commerce, and reduces friction for AI agents.

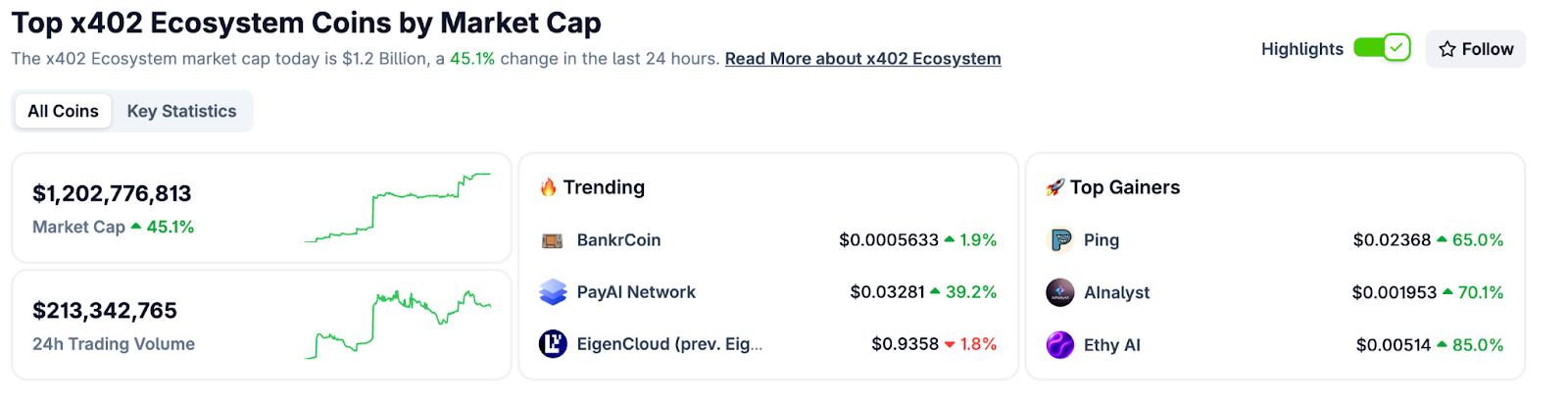

CoinGecko Recognition

CoinGecko launched a dedicated x402 Ecosystem category in October 2025, tracking 700+ projects with over $1 billion market cap and approximately $213 million in daily trading volume. Top performers include PING and AInalyst, along with established projects like EigenCloud.

Base Network Adoption

Base has emerged as the primary chain for x402 adoption, with 450,000+ weekly transactions by late October 2025, up from near-zero in May. This growth demonstrates real agent and developer usage.

Composer is x402scan's sandbox for discovering and using AI agents that pay per tool call. Users can open any agent, chat with it, and watch tool calls and payments stream in real time.

Top agents include AInalyst, Canza, SOSA, and NewEra. The Composer feed shows live activity across all agents.

Explore Composer: https://x402scan.com/composer

What We Ship

Token Metrics offers all public API endpoints via x402 with no API key required. Pay per call with USDC or TMAI for a 10 percent discount. Access includes trading signals, price predictions, fundamental grades, technology scores, indices data, and the AI chatbot.

Check out the Token Metrics integration on x402: https://www.x402scan.com/server/244415a1-d172-4867-ac30-6af563fd4d25

Data as of October 2025.

Pricing Tiers

Important note on the TMAI spend limit: TMAI has 18 decimals, so payment amounts are expressed in base units. Set a maximum payment to avoid overspending. Example: 200 TMAI = 200 * (10 ** 18) in base units.
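The base-unit conversion is easy to get wrong with floating-point numbers, so a small helper can make the spend limit explicit. This is a minimal sketch: the function name `to_base_units` is made up for the example, and the only fact taken from this article is that TMAI uses 18 decimals.

```python
from decimal import Decimal, localcontext

TMAI_DECIMALS = 18  # stated in this article


def to_base_units(amount: str, decimals: int = TMAI_DECIMALS) -> int:
    """Convert a human-readable token amount into integer base units.

    The amount is passed as a string so it never goes through a float.
    """
    with localcontext() as ctx:
        ctx.prec = 80  # enough digits to keep the shift exact
        scaled = Decimal(amount).scaleb(decimals)
        if scaled != scaled.to_integral_value():
            raise ValueError("amount has more precision than the token supports")
        return int(scaled)


if __name__ == "__main__":
    # A 200 TMAI spend limit expressed in base units.
    print(to_base_units("200"))
```

Using integers for the final value mirrors how token amounts are represented on-chain, where fractional units do not exist.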

Full integration guide: https://api.tokenmetrics.com

Ecosystem Participants and Tools

Active x402 Endpoints

Key endpoints beyond Token Metrics include Heurist Mesh for crypto intelligence, Tavily extract for structured web content, Firecrawl search for SERP and scraping, Twitter or X search for social discovery, and various DeFi and market data providers.

Infrastructure and Tools

Common Questions About x402

How is x402 different from traditional API keys?

x402 uses wallet signatures instead of API keys. Payment happens per call rather than via subscription. No sign-up, no monthly billing, no rate limit tiers. You pay for exactly what you use.

Which chains support x402?

Currently Base and Solana. Most activity is on Base with USDC as the primary payment token. Some endpoints accept native tokens like TMAI for discounts.

Do I need to trust the API provider with my funds?

No. Payments are on-chain and verifiable. You approve each transaction amount. No escrow or prepayment is required.

What happens if a payment fails?

The API returns 402 Payment Required again with updated payment details. Your client retries automatically. You do not receive data until payment confirms.

Can I use x402 with existing API clients?

Yes, with x402 client libraries such as x402-axios for Node and x402-httpx for Python. These wrap standard HTTP clients and handle the payment flow automatically.

Getting Started Checklist

Token Metrics x402 Resources

What's Next for x402

Ecosystem expansion. More API providers adopting x402, additional chains beyond Base and Solana, standardization of payment headers and response formats.

Agent sophistication. As x402 matures, expect agents that automatically discover and compose multiple paid endpoints, optimize costs across providers, and negotiate better rates for bulk usage.

Disclosure

Educational content only, not financial advice. API usage and crypto payments carry risks. Verify all transactions before signing. Do your own research.



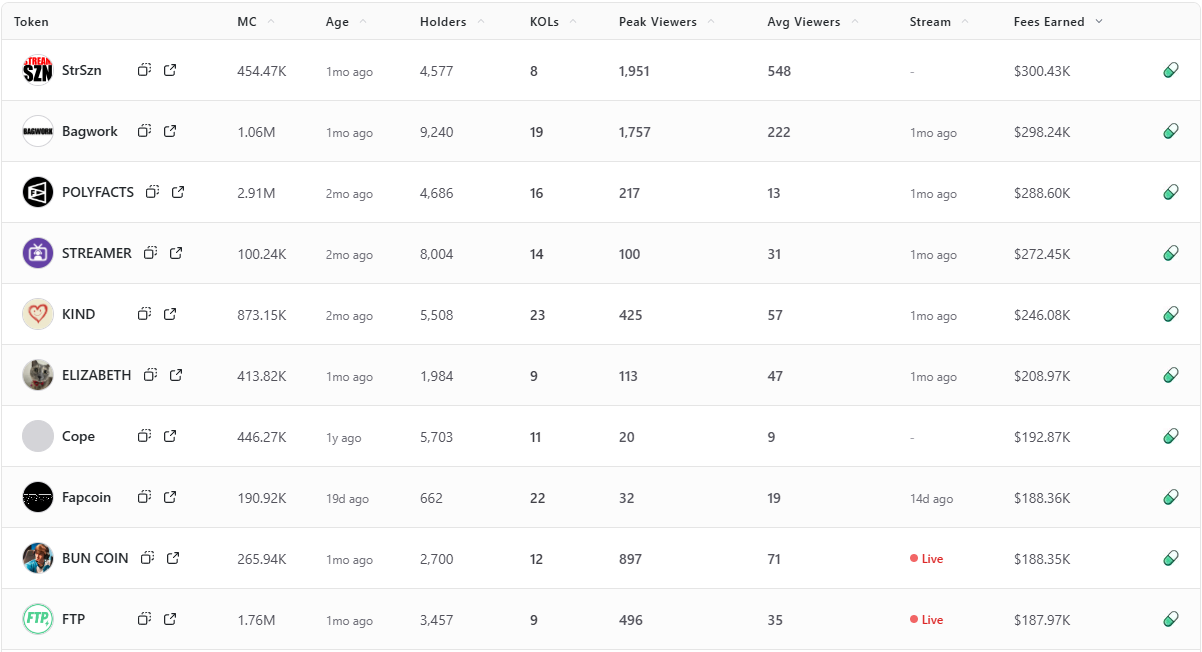

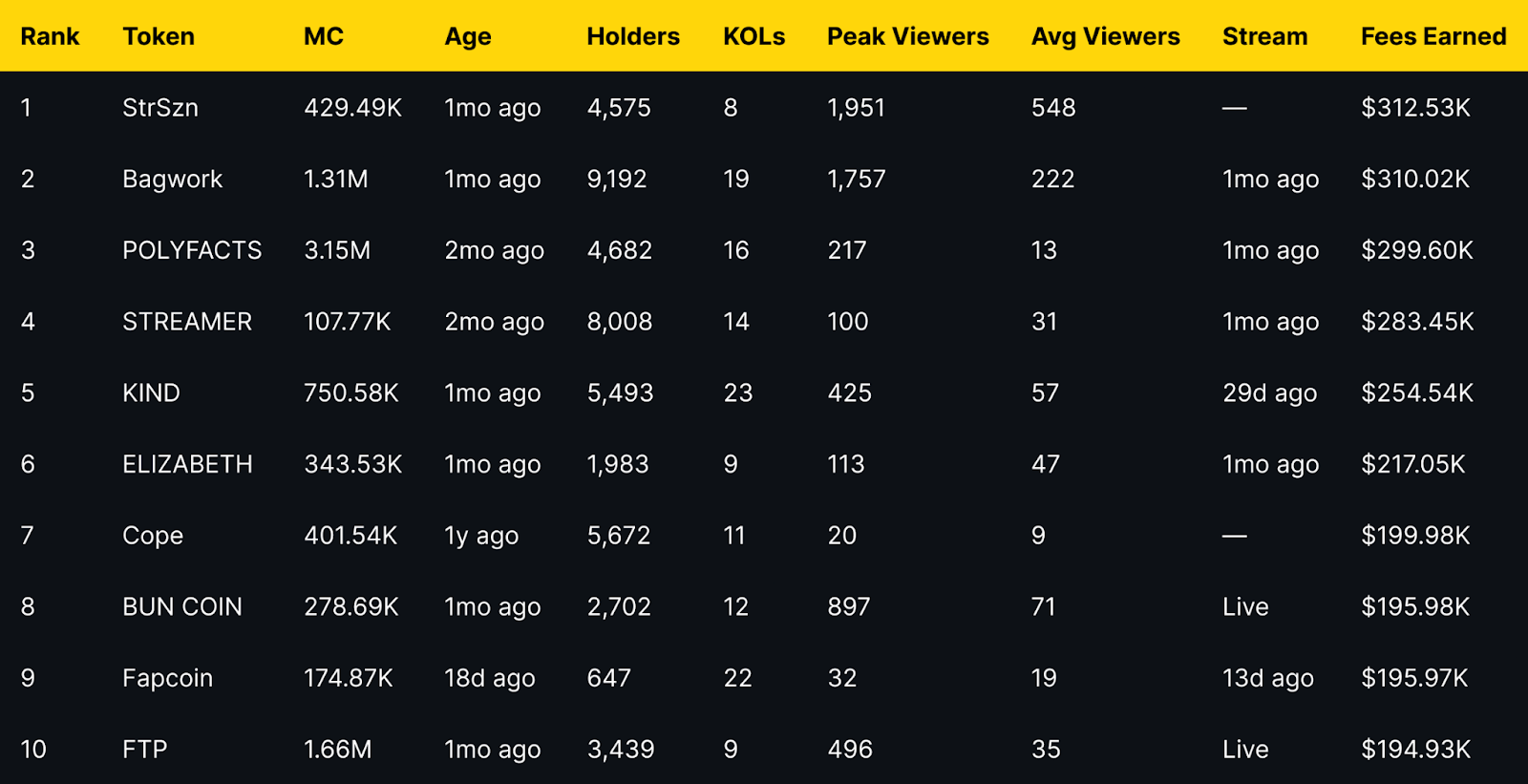

Fees Earned is a clean way to see which livestream tokens convert attention into on-chain activity. This leaderboard ranks the top 10 Pump.fun livestream tokens by Fees Earned, based on the screenshot captured for this article.

Selection rule is simple, top 10 by Fees Earned from the screenshot, numbers appear exactly as shown. If a field is not in the image, it is recorded as —.

Entity coverage: project names and tickers are taken as listed on Pump.fun, chain is Solana, sector is livestream meme tokens and creator tokens.

Token Metrics Live (TMLIVE) brings real-time, data-driven crypto market analysis to Pump.fun. The team has produced live crypto content for 7 years, with an audience of more than 500K and a platform of more than 100,000 users. Our public track record includes early coverage of winners like MATIC and Helium in 2018.

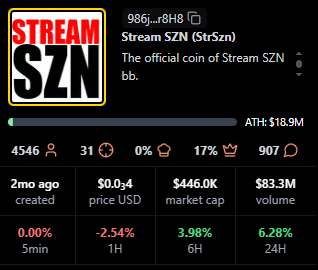

TMLIVE Quick Stats, as captured

TLDR: Fees Earned Leaders at a Glance

Short distribution note: the top three sit within a narrow band of each other, while mid-table tokens show a mix of older communities and recent streams. Several names with modest average viewers still appear due to concentrated activity during peaks.

StrSzn

Positioning: Active community meme with consistent viewer base.

Research Blurb: Project details unclear at time of writing. Fees and viewership suggest consistent stream engagement over the last month.

Quick Facts: Chain = Solana, Status = —, Peak Viewers = 1,951, Avg Viewers = 548.

https://pump.fun/coin/986j8mhmidrcbx3wf1XJxsQFvWBMXg7gnDi3mejsr8H8

Bagwork

Positioning: Large holder base with sustained attention.

Research Blurb: Project details unclear at time of writing. Strong holders and KOL presence supported steady audience numbers.

Quick Facts: Chain = Solana, Status = 1mo ago, Holders = 9,192, KOLs = 19.

https://pump.fun/coin/7Pnqg1S6MYrL6AP1ZXcToTHfdBbTB77ze6Y33qBBpump

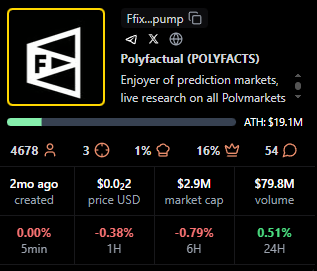

POLYFACTS

Positioning: Higher market cap with light average viewership.

Research Blurb: Project details unclear at time of writing. High market cap with comparatively low average viewers implies fees concentrated in shorter windows.

Quick Facts: Chain = Solana, Status = 1mo ago, MC = 3.15M, Avg Viewers = 13.

https://pump.fun/coin/FfixAeHevSKBZWoXPTbLk4U4X9piqvzGKvQaFo3cpump

STREAMER

Positioning: Community focused around streaming identity.

Research Blurb: Project details unclear at time of writing. Solid holders and moderate KOL count, steady averages over time.

Quick Facts: Chain = Solana, Status = 1mo ago, Holders = 8,008, KOLs = 14.

https://pump.fun/coin/3arUrpH3nzaRJbbpVgY42dcqSq9A5BFgUxKozZ4npump

KIND

Positioning: Heaviest KOL footprint in the top 10.

Research Blurb: Project details unclear at time of writing. The largest KOL count here aligns with above average view metrics and meaningful fees.

Quick Facts: Chain = Solana, Status = 29d ago, KOLs = 23, Avg Viewers = 57.

https://pump.fun/coin/V5cCiSixPLAiEDX2zZquT5VuLm4prr5t35PWmjNpump

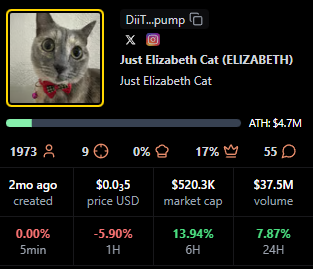

ELIZABETH

Positioning: Mid-cap meme with consistent streams.

Research Blurb: Project details unclear at time of writing. Viewer averages and recency indicate steady presence rather than single spike behavior.

Quick Facts: Chain = Solana, Status = 1mo ago, Avg Viewers = 47, Peak Viewers = 113.

https://pump.fun/coin/DiiTPZdpd9t3XorHiuZUu4E1FoSaQ7uGN4q9YkQupump

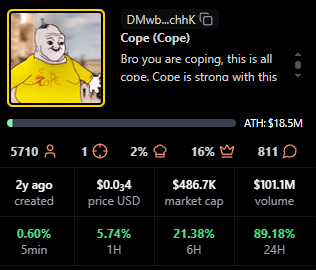

Cope

Positioning: Older token with a legacy community.

Research Blurb: Project details unclear at time of writing. Despite low recent averages, it holds a sizable base and meaningful fees.

Quick Facts: Chain = Solana, Status = —, Age = 1y ago, Avg Viewers = 9.

https://pump.fun/coin/DMwbVy48dWVKGe9z1pcVnwF3HLMLrqWdDLfbvx8RchhK

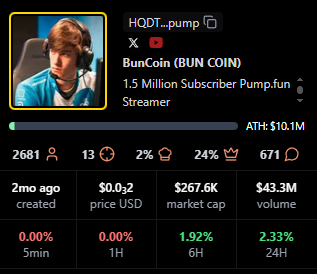

BUN COIN

Positioning: Currently live, strong peaks relative to size.

Research Blurb: Project details unclear at time of writing. Live streaming status often coincides with bursts of activity that lift fees quickly.

Quick Facts: Chain = Solana, Status = Live, Peak Viewers = 897, Avg Viewers = 71.

https://pump.fun/coin/HQDTzNa4nQVetoG6aCbSLX9kcH7tSv2j2sTV67Etpump

Fapcoin

Positioning: Newer token with targeted pushes.

Research Blurb: Project details unclear at time of writing. Recent age and meaningful KOL support suggest orchestrated activations that can move fees.

Quick Facts: Chain = Solana, Status = 13d ago, Age = 18d ago, KOLs = 22.

https://pump.fun/coin/8vGr1eX9vfpootWiUPYa5kYoGx9bTuRy2Xc4dNMrpump

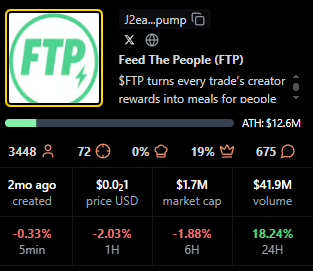

FTP

Positioning: Live status with solid mid-table view metrics.

Research Blurb: Project details unclear at time of writing. Peaks and consistent averages suggest an active audience during live windows.

Quick Facts: Chain = Solana, Status = Live, Peak Viewers = 496, Avg Viewers = 35.

https://pump.fun/coin/J2eaKn35rp82T6RFEsNK9CLRHEKV9BLXjedFM3q6pump

Signals From Fees Earned: Patterns to Watch

Fees Earned often rise with peak and average viewers, but timing matters. Several tokens here show concentrated peaks with modest averages, which implies that well timed announcements or coordinated segments can still produce high fees.

Age is not a blocker for this board. Newer tokens like Fapcoin appear due to focused activity, while older names such as Cope persist by mobilizing established holders. KOL count appears additive rather than decisive, with KIND standing out as the KOL leader.

For creators, Fees Earned reflects whether livestream moments translate into on-chain action. Design streams around clear calls to action, align announcements with segments that drive peaks, then sustain momentum with repeatable formats that stabilize averages.

For traders, Fees Earned complements market cap, viewers, and age. Look for projects that combine rising averages with consistent peaks, because those patterns suggest repeatable engagement rather than single event spikes.

TV Live is a fast way to follow real-time crypto market news, creator launches, and token breakdowns as they happen. You get context on stream dynamics, audience behavior, and on-chain activity while the story evolves.

CTA: Watch TV Live for real-time crypto market news →TV Live Link

CTA: Follow and enable alerts → TV Live

Token Metrics is trusted for transparent data, crypto analytics, on-chain ratings, and investor education. Our platform offers cutting-edge signals and market research to empower your crypto investing decisions.

What is the best way to track Pump.fun livestream leaders?

Tracking Pump.fun livestream leaders starts with the scanner views that show Fees Earned, viewers, and KOLs side by side, paired with live coverage so you see data and narrative shifts together.

Do higher fees predict higher market cap or sustained viewership?

Higher Fees Earned does not guarantee higher market cap or sustained viewership. It indicates conversion in specific windows, while longer-term outcomes still depend on execution and community engagement.

How often do these rankings change?

Rankings can change quickly during active cycles. The entries shown here reflect the exact time of the screenshot.

Next Steps

Disclosure

This article is educational content. Cryptocurrency involves risk. Always do your own research.


APIs are the connective tissue of modern software: they expose functionality, move data, and enable integrations across services, devices, and platforms. A well-designed web API shapes developer experience, system resilience, and operational cost. This article breaks down core concepts, common architectures, security and observability patterns, and practical steps to build and maintain reliable web APIs without assuming a specific platform or vendor.

A web API (Application Programming Interface) is an HTTP-accessible interface that lets clients interact with server-side functionality. APIs can return JSON, XML, or other formats and typically define a contract of endpoints, parameters, authentication requirements, and expected responses. They matter because they enable modularity: front-ends, mobile apps, third-party integrations, and automation tools can all reuse the same backend logic.

When evaluating or designing an API, consider the consumer experience: predictable endpoints, clear error messages, consistent versioning, and comprehensive documentation reduce onboarding friction for integrators. Think of an API as a public product: its usability directly impacts adoption and maintenance burden.

There are several architectural approaches to web APIs. RESTful (resource-based) design emphasizes nouns and predictable HTTP verbs. GraphQL centralizes query flexibility into a single endpoint and lets clients request only the fields they need. gRPC is used for low-latency, binary RPC between services.

Key design practices:

Choose the pattern that aligns with your performance, flexibility, and developer ergonomics goals, and make that decision explicit in onboarding docs.

Security must be built into an API from day one. Common controls include TLS for transport, OAuth 2.0 / OpenID Connect for delegated authorization, API keys for service-to-service access, and fine-grained scopes for least-privilege access. Input validation, output encoding, and strict CORS policies guard against common injection and cross-origin attacks.

Operational protections such as rate limiting, quotas, and circuit breakers help preserve availability if a client misbehaves or a downstream dependency degrades. Design your error responses to be informative to developers but avoid leaking internal implementation details. Centralized authentication and centralized secrets management (vaults, KMS) reduce duplication and surface area for compromise.

Performance considerations span latency, throughput, and resource efficiency. Use caching (HTTP cache headers, CDN, or in-memory caches) to reduce load on origin services. Employ pagination, partial responses, and batch endpoints to avoid overfetching. Instrumentation is essential: traces, metrics, and logs help correlate symptoms, identify bottlenecks, and measure SLAs.
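Pagination is one of the simpler ways to avoid overfetching. The sketch below shows cursor-based pagination over an in-memory list; the data set, the `list_items` and `fetch_all` names, and the response shape with `next_cursor` are all assumptions made for illustration, not a prescribed format.

```python
from typing import Optional

# Stand-in data set; a real service would query a database instead.
ITEMS = [{"id": i} for i in range(1, 8)]


def list_items(limit: int = 3, cursor: Optional[int] = None) -> dict:
    """Return one page of items whose id is greater than the cursor."""
    limit = max(1, min(limit, 100))  # clamp client-supplied page size
    start = cursor or 0
    page = [item for item in ITEMS if item["id"] > start][:limit]
    has_more = bool(page) and page[-1]["id"] < ITEMS[-1]["id"]
    return {"items": page, "next_cursor": page[-1]["id"] if has_more else None}


def fetch_all(limit: int = 3) -> list:
    """Client-side loop that follows next_cursor until the last page."""
    results, cursor = [], None
    while True:
        page = list_items(limit=limit, cursor=cursor)
        results.extend(page["items"])
        cursor = page["next_cursor"]
        if cursor is None:
            return results


if __name__ == "__main__":
    print(list_items(limit=3))
    print(len(fetch_all(limit=3)))
```

A cursor tied to the last seen id stays stable when new rows are appended, which is one reason it is often preferred over page-number offsets for frequently changing data.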

Testing should be layered: unit tests for business logic, contract tests against API schemas, integration tests for end-to-end behavior, and load tests that emulate real-world usage. Observability tools and APMs provide continuous insight; AI-driven analytics platforms such as Token Metrics can help surface unusual usage patterns and prioritize performance fixes based on impact.

Build Smarter Crypto Apps & AI Agents with Token Metrics

Token Metrics provides real-time prices, trading signals, and on-chain insights all from one powerful API. Grab a Free API Key

REST exposes multiple endpoints that represent resources and rely on HTTP verbs for operations. It is simple and maps well to HTTP semantics. GraphQL exposes a single endpoint where clients request precisely the fields they need, which reduces overfetching and can simplify mobile consumption. GraphQL adds complexity in query planning and caching; choose based on client needs and team expertise.

Prefer backward-compatible changes over breaking changes. Use semantic versioning for major releases, and consider header-based versioning or URI version prefixes when breaking changes are unavoidable. Maintain deprecation schedules and communicate timelines in documentation and response headers so clients can migrate predictably.
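As a rough illustration of the two versioning styles mentioned here, the sketch below resolves a version from either a URI prefix or a request header and attaches a `Sunset` response header to a deprecated version. The `api-version` header name, the default version, and the sunset date are hypothetical values picked for the example.

```python
import re
from datetime import datetime, timezone
from email.utils import format_datetime
from typing import Tuple

SUPPORTED = {"1", "2"}
DEFAULT_VERSION = "2"  # assumption for this sketch
# Hypothetical retirement date for version 1.
SUNSET = {"1": datetime(2026, 6, 30, 23, 59, 59, tzinfo=timezone.utc)}


def resolve_version(path: str, headers: dict) -> Tuple[str, str]:
    """Return (version, path without prefix); a URI prefix wins over the header."""
    match = re.match(r"^/v(\d+)(/.*)?$", path)
    if match:
        version, rest = match.group(1), match.group(2) or "/"
    else:
        version, rest = headers.get("api-version", DEFAULT_VERSION), path
    if version not in SUPPORTED:
        raise ValueError(f"unsupported API version: {version}")
    return version, rest


def deprecation_headers(version: str) -> dict:
    """Response headers that tell clients when a version will be retired."""
    sunset = SUNSET.get(version)
    if sunset is None:
        return {}
    return {"Sunset": format_datetime(sunset, usegmt=True)}


if __name__ == "__main__":
    print(resolve_version("/v1/users", {}))
    print(deprecation_headers("1"))
```

Emitting the retirement date on every response from a deprecated version gives clients a machine-readable signal in addition to the documentation.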

OAuth 2.0 and OpenID Connect are standard for delegated access and single-sign-on. For machine-to-machine communication, use short-lived tokens issued by a trusted authorization server. API keys can be simple to implement but should be scoped, rotated regularly, and never embedded in public clients without additional protections.

Implement synthetic monitoring for critical endpoints, collect real-user metrics (latency percentiles, error rates), and instrument distributed tracing to follow requests across services. Run scheduled contract tests against staging and production-like environments, and correlate incidents with deployment timelines and dependency health.

Make additive, non-breaking changes where possible: add new fields rather than changing existing ones, and preserve default behaviors. Document deprecated fields and provide feature flags to gate new behavior. Maintain versioned client libraries to give consumers time to upgrade.
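On the client side, additive changes stay safe when consumers read only the fields they know and tolerate everything else. A minimal sketch, with hypothetical field names (`display_name`, `badges`) standing in for a newer response shape:

```python
def parse_user(payload: dict) -> dict:
    """Read known fields, default optional ones, and ignore unknown extras."""
    return {
        "id": payload["id"],
        "name": payload["name"],
        # Field added in a later release; fall back to the older field.
        "display_name": payload.get("display_name", payload["name"]),
    }


if __name__ == "__main__":
    old_response = {"id": 1, "name": "ada"}
    new_response = {"id": 1, "name": "ada", "display_name": "Ada", "badges": ["early"]}
    print(parse_user(old_response))
    print(parse_user(new_response))
```

Because the parser neither requires the new field nor rejects unknown ones, the same client code works against both the old and the new response.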

Disclaimer

This article is educational and technical in nature. It does not provide legal, financial, or investment advice. Implementations should be evaluated with respect to security policies, compliance requirements, and operational constraints specific to your organization.


APIs power modern software by exposing discrete access points called endpoints. Whether you're integrating a third-party data feed, building a microservice architecture, or wiring a WebSocket stream, understanding what an API endpoint is and how to design, secure, and monitor one is essential for robust systems.

An API endpoint is a network-accessible URL or address that accepts requests and returns responses according to a protocol (usually HTTP/HTTPS or WebSocket). Conceptually, an endpoint maps a client intent to a server capability: retrieve a resource, submit data, or subscribe to updates. In a RESTful API, endpoints often follow noun-based paths (e.g., /users/123) combined with HTTP verbs (GET, POST, PUT, DELETE) to indicate the operation.

Key technical elements of an endpoint include:

Endpoints can be public (open to third parties) or private (internal to a service mesh). For crypto-focused data integrations, API endpoints may also expose streaming interfaces (WebSockets) or webhook callbacks for asynchronous events. Token Metrics is one example of an analytics provider that exposes APIs for research workflows.

Different application needs favor different endpoint types and protocols:

Choosing a protocol depends on consistency requirements, latency tolerance, and client diversity. Hybrid architectures often combine REST for configuration and GraphQL/WebSocket for dynamic data.

Good endpoint design improves developer experience and system resilience. Follow these practical practices:

API schema tools (OpenAPI/Swagger, AsyncAPI) let you define endpoints, types, and contracts programmatically, enabling automated client generation, testing, and mock servers during development.

Endpoints are primary attack surfaces. Security and observability are critical:

Operational tooling such as API gateways, service meshes, and managed API platforms provide built-in policy enforcement for security and rate limiting, reducing custom code complexity.


An API is the overall contract and set of capabilities a service exposes; an API endpoint is a specific network address (URI) where one of those capabilities is accessible. Think of the API as the menu and endpoints as the individual dishes.

Use HTTPS only, require authenticated tokens with appropriate scopes, implement rate limits and IP reputation checks, and validate all input. Employ monitoring to detect anomalous traffic patterns and rotate credentials periodically.

Introduce explicit versioning when you plan to make breaking changes to request/response formats or behavior. A major-version prefix in the path (e.g., /v1/) is common and lets existing clients keep working until they choose to migrate.

Combine per-key quotas, sliding-window or token-bucket algorithms, and burst allowances. Communicate limits via response headers and provide clear error codes and retry-after values so clients can back off gracefully.
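A token bucket is one way to implement the burst-plus-steady-rate behavior described here. The sketch below is a single-process illustration with time passed in explicitly so it can be tested deterministically; the class name and the `X-RateLimit-Remaining` header are conventions assumed for the example, while `Retry-After` is the standard HTTP header for back-off hints.

```python
import math


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated = now

    def allow(self, now: float) -> tuple:
        """Return (allowed, headers) for a request arriving at time `now`."""
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True, {"X-RateLimit-Remaining": str(int(self.tokens))}
        # Seconds until one full token is available, rounded up.
        wait = math.ceil((1 - self.tokens) / self.refill_per_second)
        return False, {"Retry-After": str(wait)}


if __name__ == "__main__":
    bucket = TokenBucket(capacity=2, refill_per_second=1.0)
    for t in (0.0, 0.0, 0.0, 1.0):
        print(t, bucket.allow(t))
```

In a real deployment, one bucket would typically be kept per API key, and a rejected request would be returned with status 429 alongside the `Retry-After` value.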

Track request rate (RPS), error rate (4xx/5xx), latency percentiles (p50, p95, p99), and active connections for streaming endpoints. Correlate with upstream/downstream service metrics to identify root causes.
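The latency percentiles and error rate listed here can be computed from raw samples in a few lines. This sketch uses the nearest-rank definition of a percentile, which is one of several common definitions; the function names and the sample data are illustrative only.

```python
import math


def percentile(samples: list, p: int) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list, statuses: list) -> dict:
    """Summarize latency percentiles and the share of 4xx/5xx responses."""
    errors = sum(1 for status in statuses if status >= 400)
    return {
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
        "error_rate": errors / len(statuses),
    }


if __name__ == "__main__":
    latencies = list(range(1, 101))  # synthetic 1..100 ms samples
    statuses = [200, 200, 404, 500]
    print(summarize(latencies, statuses))
```

Tail percentiles such as p99 expose slow requests that an average would hide, which is why they are usually tracked alongside the median.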

Choose GraphQL when clients require flexible field selection and you want to reduce overfetching. Prefer REST for simple resource CRUD patterns and when caching intermediaries are important. Consider team familiarity and tooling ecosystem as well.

The information in this article is technical and educational in nature. It is not financial, legal, or investment advice. Implementations should be validated in your environment and reviewed for security and compliance obligations specific to your organization.


Modern web and mobile apps exchange data constantly. At the center of that exchange is the REST API — a widely adopted architectural style that standardizes how clients and servers communicate over HTTP. Whether you are a developer, product manager, or researcher, understanding what a REST API is and how it works is essential for designing scalable systems and integrating services efficiently.

A REST API (Representational State Transfer Application Programming Interface) is a style for designing networked applications. It defines a set of constraints that, when followed, enable predictable, scalable, and loosely coupled interactions between clients (browsers, mobile apps, services) and servers. REST is not a protocol or standard; it is a set of architectural principles introduced by Roy Fielding in 2000.

Key principles include:

A REST API organizes functionality around resources and uses standard HTTP verbs to manipulate them. Common conventions are:

Responses use HTTP status codes to indicate result state (200 OK, 201 Created, 204 No Content, 400 Bad Request, 401 Unauthorized, 404 Not Found, 500 Internal Server Error). Payloads are typically JSON but can be XML or other formats. Endpoints are structured hierarchically, for example: /api/users to list users, /api/users/123 to operate on user with ID 123.
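To make the mapping between verbs, paths, and status codes concrete, here is a minimal in-memory sketch of the /api/users resource. It involves no web framework and no network; the `UserStore` class and its `handle` method are hypothetical names used only for illustration, and 405 Method Not Allowed is added for verbs the sketch does not support.

```python
from typing import Optional, Tuple


class UserStore:
    """Tiny in-memory resource handler for /api/users and /api/users/<id>."""

    def __init__(self):
        self.users = {}
        self.next_id = 1

    def handle(self, method: str, path: str, body: Optional[dict] = None) -> Tuple[int, Optional[dict]]:
        parts = [part for part in path.split("/") if part]
        if parts[:2] != ["api", "users"] or len(parts) > 3:
            return 404, {"error": "not found"}
        if len(parts) == 2:  # collection: /api/users
            if method == "GET":
                return 200, {"users": list(self.users.values())}
            if method == "POST":
                if not body or "name" not in body:
                    return 400, {"error": "name is required"}
                user = {"id": self.next_id, "name": body["name"]}
                self.users[self.next_id] = user
                self.next_id += 1
                return 201, user
            return 405, {"error": "method not allowed"}
        # single resource: /api/users/<id>
        if not parts[2].isdigit() or int(parts[2]) not in self.users:
            return 404, {"error": "not found"}
        user_id = int(parts[2])
        if method == "GET":
            return 200, self.users[user_id]
        if method == "DELETE":
            del self.users[user_id]
            return 204, None
        return 405, {"error": "method not allowed"}


if __name__ == "__main__":
    store = UserStore()
    print(store.handle("POST", "/api/users", {"name": "Ada"}))
    print(store.handle("GET", "/api/users/1"))
    print(store.handle("DELETE", "/api/users/1"))
    print(store.handle("GET", "/api/users/1"))
```

Returning a distinct status for each outcome lets clients branch on the code alone, without parsing error text.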

Designing a robust REST API involves more than choosing verbs and URIs. Adopt patterns that make APIs understandable, maintainable, and secure:

Following these practices improves interoperability and reduces operational risk.

REST APIs are used across web services, microservices, mobile backends, IoT devices, and third-party integrations. Developers commonly use tools and practices to build and validate APIs:

AI-driven platforms and analytics can speed research and debugging by surfacing usage patterns, anomalies, and integration opportunities. For example, Token Metrics can be used to analyze API-driven data feeds and incorporate on-chain signals into application decision layers without manual data wrangling.

Build Smarter Crypto Apps & AI Agents with Token Metrics

Token Metrics provides real-time prices, trading signals, and on-chain insights all from one powerful API. Grab a Free API Key

"REST" refers to the architectural constraints described by Roy Fielding; "RESTful" is a colloquial adjective meaning an API that follows REST principles. Not all APIs labeled RESTful implement every REST constraint strictly.

SOAP is a protocol with rigid standards and built-in operations (often used in enterprise systems). GraphQL exposes a single endpoint and lets clients request precise data shapes. REST uses multiple endpoints and standard HTTP verbs. Each approach has trade-offs in flexibility, caching, and tooling.

Version your API before making breaking changes to request/response formats or behavior that existing clients depend on. Common strategies include URI versioning (e.g., /v1/) or header-based versioning.
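To make the two strategies concrete, here is a small sketch that resolves a version from either a `/vN/` path prefix or a request header. The header name `X-API-Version`, the default version, and the supported set are all assumptions for illustration, not a standard.

```python
import re
from typing import Mapping, Optional

DEFAULT_VERSION = 1          # assumed default when the client states no version
SUPPORTED_VERSIONS = {1, 2}  # hypothetical set of live versions


def resolve_version(path: str, headers: Mapping[str, str]) -> Optional[int]:
    """Pick the API version from a /vN/ path prefix, else from a header.

    Returns None when the requested version is malformed or unsupported.
    Header lookup here is case-sensitive; real frameworks normalize names.
    """
    match = re.match(r"^/v(\d+)(/|$)", path)
    if match:
        version = int(match.group(1))
    elif "X-API-Version" in headers:
        raw = headers["X-API-Version"]
        if not raw.isdigit():
            return None
        version = int(raw)
    else:
        version = DEFAULT_VERSION
    return version if version in SUPPORTED_VERSIONS else None
```

In this sketch the URI wins when both are present, which keeps cached and bookmarked URLs unambiguous; a team could reasonably choose the opposite precedence.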

No. Security must be designed in: use HTTPS/TLS, authenticate requests, validate input, apply authorization checks, and enforce rate limits to reduce abuse. Treat REST APIs like any other public interface that requires protection.

Use API specifications (OpenAPI) to auto-generate docs and client stubs. Combine manual testing tools like Postman with automated integration and contract tests in CI pipelines to ensure consistent behavior across releases.

REST is request/response oriented and not ideal for continuous real-time streams. For streaming, consider WebSockets, Server-Sent Events (SSE), or specialized protocols; REST can still be used for control operations and fallbacks.

Disclaimer: This article is educational and technical in nature. It does not provide investment or legal advice. The information is intended to explain REST API concepts and best practices, not to recommend specific products or actions.


FastAPI has become a go-to framework for teams that need production-ready, high-performance APIs in Python. It combines modern Python features, automatic type validation via pydantic, and ASGI-based async support to deliver low-latency endpoints. This post breaks down pragmatic patterns for building, testing, and scaling FastAPI services, with concrete guidance on performance tuning, deployment choices, and observability so you can design robust APIs for real-world workloads.

FastAPI is an ASGI framework that emphasizes developer experience and runtime speed. It generates OpenAPI docs automatically, enforces request/response typing, and integrates cleanly with async workflows. Compared with traditional WSGI stacks (Flask, Django sync endpoints), FastAPI excels when concurrency and I/O-bound tasks dominate, and when you want built-in validation and schema-driven design.

Use-case scenarios where FastAPI shines:

FastAPI leverages async/await to let a single worker handle many concurrent requests when operations are I/O-bound. Key principles:

Performance tuning checklist:

FastAPI's dependency injection and pydantic models enable clear separation of concerns. Recommended practices:

Scenario analysis: for CPU-bound workloads (e.g., heavy data processing), prefer external workers or serverless functions. For high-concurrency I/O-bound workloads, carefully tuned async endpoints perform best.

Deploying FastAPI requires choices around containers, orchestration, and observability:

CI/CD tips: include a test matrix for schema validation, contract tests against OpenAPI, and canary deploys for backward-incompatible changes.


FastAPI is a modern, ASGI-based Python framework focused on speed and developer productivity. It differs from traditional frameworks by using type hints for validation, supporting async endpoints natively, and automatically generating OpenAPI documentation.

Prefer async endpoints for I/O-bound operations like network calls or async DB drivers. If your code is CPU-bound, spawning background workers or using synchronous workers with more processes may be better to avoid blocking the event loop.
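The difference can be sketched with plain `asyncio`, independent of any web framework. Here `fetch_price` and `heavy_computation` are hypothetical stand-ins for an awaitable network call and a blocking function; the same pattern would apply inside an async endpoint.

```python
import asyncio
import time


async def fetch_price(symbol: str) -> str:
    # Stand-in for an awaitable network or database call.
    await asyncio.sleep(0.1)
    return f"{symbol}:ok"


def heavy_computation(n: int) -> int:
    # Stand-in for blocking work; calling it directly inside a coroutine
    # would stall every other request on the same event loop.
    return sum(i * i for i in range(n))


async def main() -> tuple:
    start = time.perf_counter()
    # The three I/O waits overlap on a single event loop.
    prices = await asyncio.gather(*(fetch_price(s) for s in ("BTC", "ETH", "AAVE")))
    io_elapsed = time.perf_counter() - start
    # Run the blocking function in a worker thread so the loop stays free.
    total = await asyncio.to_thread(heavy_computation, 10_000)
    return prices, io_elapsed, total


prices, io_elapsed, total = asyncio.run(main())
```

`asyncio.to_thread` (Python 3.9+) only moves the call off the event loop; because of the GIL it does not give CPU parallelism, so genuinely CPU-heavy work is usually better served by a process pool or an external worker queue.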

There is no one-size-fits-all. Start with CPU core count as a baseline and adjust based on latency and throughput measurements. For async I/O-bound workloads, fewer workers with higher concurrency can be more efficient; for blocking workloads, increase worker count or externalize tasks.

Enforce strong input validation with pydantic, use HTTPS, validate and sanitize user data, implement authentication and authorization (OAuth2, JWT), and apply rate limiting and request size limits at the gateway.

Use TestClient from FastAPI for unit and integration tests, mock external dependencies, write contract tests against OpenAPI schemas, and include load tests in CI to catch performance regressions early.

This article is for educational purposes only. It provides technical and operational guidance for building APIs with FastAPI and does not constitute professional or financial advice.


The reliability and correctness of API systems directly impact every application that depends on them, making comprehensive testing non-negotiable for modern software development. In the cryptocurrency industry where APIs handle financial transactions, market data, and blockchain interactions, the stakes are even higher as bugs can result in financial losses, security breaches, or regulatory compliance failures. This comprehensive guide explores practical API testing strategies that ensure cryptocurrency APIs and other web services deliver consistent, correct, and secure functionality across all conditions.

API testing differs fundamentally from user interface testing by focusing on the business logic layer, data responses, and system integration rather than visual elements and user interactions. This distinction makes API testing faster to execute, easier to automate, and capable of covering more scenarios with fewer tests. For cryptocurrency APIs serving market data, trading functionality, and blockchain analytics, API testing validates that endpoints return correct data, handle errors appropriately, enforce security policies, and maintain performance under load.

The testing pyramid concept places API tests in the middle tier between unit tests and end-to-end tests, balancing execution speed against realistic validation. Unit tests run extremely fast but validate components in isolation, while end-to-end tests provide comprehensive validation but execute slowly and tend to be brittle. API tests hit the sweet spot by validating integrated behavior across components while remaining fast enough to run frequently during development. For crypto API platforms composed of multiple microservices, focusing on API testing provides excellent return on testing investment.

Different test types serve distinct purposes in comprehensive API testing strategies. Functional testing validates that endpoints produce correct outputs for given inputs, ensuring business logic executes properly. Integration testing verifies that APIs correctly interact with databases, message queues, blockchain nodes, and external services. Performance testing measures response times and throughput under various load conditions. Security testing probes for vulnerabilities like injection attacks, authentication bypasses, and authorization failures. Contract testing ensures APIs maintain compatibility with consuming applications. Token Metrics employs comprehensive testing across all these dimensions for its cryptocurrency API, ensuring that developers receive accurate, reliable market data and analytics.

Testing environments that mirror production configurations provide the most realistic validation while allowing safe experimentation. Containerization technologies like Docker enable creating consistent test environments that include databases, message queues, and other dependencies. For cryptocurrency APIs that aggregate data from multiple blockchain networks and exchanges, test environments must simulate these external dependencies to enable thorough testing without impacting production systems. Infrastructure as code tools ensure test environments remain synchronized with production configurations, preventing environment-specific bugs from escaping to production.

Functional testing forms the foundation of API testing by validating that endpoints produce correct responses for various inputs. Test case design begins with understanding API specifications and identifying all possible input combinations, edge cases, and error scenarios. For cryptocurrency APIs, functional tests verify that price queries return accurate values, trading endpoints validate orders correctly, blockchain queries retrieve proper transaction data, and analytics endpoints compute metrics accurately. Systematic test case design using equivalence partitioning and boundary value analysis ensures comprehensive coverage without redundant tests.

Request validation testing ensures APIs properly handle both valid and invalid inputs, rejecting malformed requests with appropriate error messages. Testing should cover missing required parameters, invalid data types, out-of-range values, malformed formats, and unexpected additional parameters. For crypto APIs, validation testing might verify that endpoints reject invalid cryptocurrency symbols, negative trading amounts, malformed wallet addresses, and future dates for historical queries. Comprehensive validation testing prevents APIs from processing incorrect data that could lead to downstream errors or security vulnerabilities.
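A validation layer along these lines can be sketched as a pure function that returns a list of errors, which makes each rejection case easy to assert. The parameter names (`symbol`, `date`, `amount`) and the symbol pattern are assumptions for illustration, not the rules of any particular API.

```python
import math
import re
from datetime import date
from typing import Dict, List

SYMBOL_RE = re.compile(r"^[A-Z0-9]{2,10}$")  # assumed symbol format
ALLOWED_PARAMS = {"symbol", "date", "amount"}  # hypothetical parameter set


def validate_history_query(params: Dict[str, str], today: date) -> List[str]:
    """Return validation errors for a historical price query (empty if valid)."""
    errors = []
    for extra in sorted(set(params) - ALLOWED_PARAMS):
        errors.append(f"unexpected parameter: {extra}")

    symbol = params.get("symbol")
    if symbol is None:
        errors.append("symbol is required")
    elif not SYMBOL_RE.match(symbol):
        errors.append("symbol is malformed")

    raw_date = params.get("date")
    if raw_date is None:
        errors.append("date is required")
    else:
        try:
            if date.fromisoformat(raw_date) > today:
                errors.append("date must not be in the future")
        except ValueError:
            errors.append("date is not a valid ISO date")

    if "amount" in params:
        try:
            amount = float(params["amount"])
            if not math.isfinite(amount) or amount <= 0:
                errors.append("amount must be positive")
        except ValueError:
            errors.append("amount must be a number")
    return errors
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the future-date check deterministic in tests.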

Response validation confirms that API responses match expected structures, data types, and values. Automated tests should verify HTTP status codes, response headers, JSON schema compliance, field presence, data type correctness, and business logic results. For cryptocurrency market data APIs, response validation ensures that price data includes all required fields like timestamp, open, high, low, close, and volume, that numeric values fall within reasonable ranges, and that response pagination works correctly. Token Metrics maintains rigorous response validation testing across its crypto API endpoints, ensuring consistent, reliable data delivery to developers.
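The field, type, and range checks described here might look like the following sketch for a single price candle. The field list comes from the paragraph above; treating `timestamp` as a numeric epoch value and the specific range rules are assumptions.

```python
from numbers import Real
from typing import Any, Dict, List

REQUIRED_FIELDS = ("timestamp", "open", "high", "low", "close", "volume")


def validate_candle(candle: Dict[str, Any]) -> List[str]:
    """Return structural and range errors for one OHLCV record."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in candle]
    if errors:
        return errors

    for name in REQUIRED_FIELDS:
        value = candle[name]
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, Real):
            errors.append(f"{name} must be numeric")
    if errors:
        return errors

    if candle["low"] <= 0:
        errors.append("low must be positive")
    if candle["volume"] < 0:
        errors.append("volume must be non-negative")
    if candle["high"] < max(candle["open"], candle["close"]):
        errors.append("high is below open or close")
    if candle["low"] > min(candle["open"], candle["close"]):
        errors.append("low is above open or close")
    return errors
```

In a larger suite the structural part of this check would typically be delegated to a JSON Schema or OpenAPI validator, leaving only the cross-field rules in hand-written code.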

Error handling testing verifies that APIs respond appropriately to error conditions including invalid inputs, missing resources, authentication failures, authorization denials, rate limit violations, and internal errors. Each error scenario should return proper HTTP status codes and descriptive error messages that help developers understand and resolve issues. For crypto APIs, error testing validates behavior when querying non-existent cryptocurrencies, attempting unauthorized trading operations, exceeding rate limits, or experiencing blockchain node connectivity failures. Proper error handling testing ensures APIs fail gracefully and provide actionable feedback.

Business logic testing validates complex calculations, workflows, and rules that form the core API functionality. For cryptocurrency APIs, business logic tests verify that technical indicators compute correctly, trading signal generation follows proper algorithms, portfolio analytics calculate profit and loss accurately, and risk management rules enforce position limits. These tests often require carefully crafted test data and expected results computed independently to validate implementation correctness. Comprehensive business logic testing catches subtle bugs that simpler validation tests might miss.
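One way to get independently computed expected results is to keep a deliberately naive reference implementation next to the optimized one and compare the two. The sketch below does this for a simple moving average; both function names are hypothetical, and the indicator was chosen only because its expected values are easy to verify by hand.

```python
from typing import List, Optional


def simple_moving_average(prices: List[float], window: int) -> List[Optional[float]]:
    """Rolling-sum SMA; positions before the first full window are None."""
    if window <= 0:
        raise ValueError("window must be positive")
    result: List[Optional[float]] = []
    running = 0.0
    for i, price in enumerate(prices):
        running += price
        if i >= window:
            running -= prices[i - window]  # drop the value leaving the window
        result.append(running / window if i >= window - 1 else None)
    return result


def reference_sma(prices: List[float], window: int) -> List[Optional[float]]:
    """Naive oracle used only by tests: re-sums every window from scratch."""
    return [
        None if i < window - 1 else sum(prices[i - window + 1 : i + 1]) / window
        for i in range(len(prices))
    ]
```

For the input `[10, 11, 12, 13, 14]` with a window of 3, the hand-computed averages are 11, 12, and 13, so a test can assert against both the oracle and the literal values.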

Integration testing validates how APIs interact with external dependencies including databases, caching layers, message queues, blockchain nodes, and third-party services. These tests use real or realistic implementations of dependencies rather than mocks, providing confidence that integration points function correctly. For cryptocurrency APIs aggregating data from multiple sources, integration testing ensures data synchronization works correctly, conflict resolution handles discrepancies appropriately, and failover mechanisms activate when individual sources become unavailable.

Database integration testing verifies that APIs correctly read and write data including proper transaction handling, constraint enforcement, and query optimization. Tests should cover normal operations, concurrent access scenarios, transaction rollback on errors, and handling of database connectivity failures. For crypto APIs tracking user portfolios, transaction history, and market data, database integration tests ensure data consistency even under concurrent updates and system failures. Testing with realistic data volumes reveals performance problems before they impact production users.

External API integration testing validates interactions with blockchain nodes, cryptocurrency exchanges, data providers, and other external services. These tests verify proper request formatting, authentication, error handling, timeout management, and response parsing. Mock services simulating external APIs enable testing error scenarios and edge cases difficult to reproduce with actual services. For crypto APIs depending on multiple blockchain networks, integration tests verify that chain reorganizations, missing blocks, and node failures are handled appropriately without data corruption.

Message queue integration testing ensures that event-driven architectures function correctly with proper message publishing, consumption, error handling, and retry logic. Tests verify that messages are formatted correctly, consumed exactly once or at least once based on requirements, dead letter queues capture failed messages, and message ordering is preserved when required. For cryptocurrency APIs publishing real-time price updates and trading signals through message queues, integration testing ensures reliable event delivery even under high message volumes.

Circuit breaker and retry logic testing validates resilience patterns that protect APIs from cascading failures. Tests simulate external service failures and verify that circuit breakers open after threshold errors, requests fail fast while circuits are open, and circuits close after recovery periods. For crypto APIs integrating with numerous external services, circuit breaker testing ensures that failures in individual data sources don't compromise overall system availability. Token Metrics implements sophisticated resilience patterns throughout its crypto API infrastructure, validated through comprehensive integration testing.
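The three behaviors listed above (open after a threshold, fail fast while open, close after recovery) can be exercised against a small breaker with an injectable clock. This is a simplified sketch, not a production implementation: the class and parameter names are hypothetical, and the half-open state is reduced to a single trial call.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, recovery_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.recovery_seconds = recovery_seconds
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.recovery_seconds:
                raise CircuitOpenError("circuit is open")  # fail fast
            # Recovery period elapsed: let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or reaching the threshold, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Injecting the clock lets a test advance time instantly instead of sleeping through the recovery period, which keeps resilience tests fast and deterministic.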

Performance testing measures API response times, throughput, resource consumption, and scalability characteristics under various load conditions. Baseline performance testing establishes expected response times for different endpoints under normal load, providing reference points for detecting performance regressions. For cryptocurrency APIs, baseline tests measure latency for common operations like retrieving current prices, querying market data, executing trades, and running analytical calculations. Tracking performance metrics over time reveals gradual degradation that might otherwise go unnoticed.
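A baseline comparison can be as simple as checking a tail percentile against a stored reference. The sketch below assumes latencies have already been collected as lists of milliseconds; the 20% tolerance and the choice of the 95th percentile are illustrative assumptions rather than recommended thresholds.

```python
import statistics
from typing import Sequence


def p95(latencies_ms: Sequence[float]) -> float:
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=100)[94]


def is_regression(baseline_ms: Sequence[float], current_ms: Sequence[float],
                  tolerance: float = 0.20) -> bool:
    """True when the current p95 exceeds the baseline p95 by more than tolerance."""
    return p95(current_ms) > p95(baseline_ms) * (1 + tolerance)
```

Comparing percentiles rather than averages keeps a handful of slow outliers from hiding behind many fast responses.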

Load testing simulates realistic user traffic to validate that APIs maintain acceptable performance at expected concurrency levels. Tests gradually increase concurrent users while monitoring response times, error rates, and resource utilization to identify when performance degrades. For crypto APIs experiencing traffic spikes during market volatility, load testing validates capacity to handle surge traffic without failures. Realistic load profiles modeling actual usage patterns provide more valuable insights than artificial uniform load distributions.

Stress testing pushes APIs beyond expected capacity to identify failure modes and breaking points. Understanding how systems fail under extreme load informs capacity planning and helps identify components needing reinforcement. Stress tests reveal bottlenecks like database connection pool exhaustion, memory leaks, CPU saturation, and network bandwidth limitations. For cryptocurrency trading APIs that might experience massive traffic during market crashes or rallies, stress testing ensures graceful degradation rather than catastrophic failure.

Soak testing validates API behavior over extended periods to identify issues like memory leaks, resource exhaustion, and performance degradation that only manifest after prolonged operation. Running tests for hours or days under sustained load reveals problems that short-duration tests miss. For crypto APIs running continuously to serve global markets, soak testing ensures stable long-term operation without requiring frequent restarts or manual memory cleanup.

Spike testing validates API response to sudden dramatic increases in traffic, simulating scenarios like viral social media posts or major market events driving user surges. These tests verify that auto-scaling mechanisms activate quickly enough, rate limiting protects core functionality, and systems recover gracefully after spikes subside. Token Metrics runs extensive performance tests against its cryptocurrency API infrastructure, ensuring reliable service delivery even during extreme market volatility when usage patterns become unpredictable.

Security testing probes APIs for vulnerabilities that attackers might exploit including authentication bypasses, authorization failures, injection attacks, and data exposure. Automated security scanning tools identify common vulnerabilities quickly while manual penetration testing uncovers sophisticated attack vectors. For cryptocurrency APIs handling valuable digital assets and sensitive financial data, comprehensive security testing becomes essential for protecting users and maintaining trust.

Authentication testing verifies that APIs properly validate credentials and reject invalid authentication attempts. Tests should cover missing credentials, invalid credentials, expired tokens, token reuse after logout, and authentication bypass attempts. For crypto APIs using OAuth, JWT, or API keys, authentication testing ensures proper implementation of token validation, signature verification, and expiration checking. Simulating attacks like credential stuffing and brute force attempts validates rate limiting and account lockout mechanisms.
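The token cases listed above (valid, expired, tampered, malformed) can be illustrated with a deliberately simplified HMAC-signed token. This is not JWT or OAuth: the `user.expiry.signature` format, the hard-coded test secret, and the function names are assumptions made to keep the example self-contained, and it assumes user IDs contain no dots.

```python
import hashlib
import hmac
from typing import Optional

SECRET = b"test-only-secret"  # hypothetical key; never hard-code real secrets


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, expires_at: int) -> str:
    """Build 'user.expiry.signature'; expires_at is a Unix timestamp."""
    payload = f"{user_id}.{expires_at}"
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, now: float) -> Optional[str]:
    """Return the user ID for a valid, unexpired token, else None."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    user_id, raw_expiry, signature = parts
    expected = _sign(f"{user_id}.{raw_expiry}")
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    if not raw_expiry.isdigit() or now >= int(raw_expiry):
        return None
    return user_id
```

A test suite would then assert one outcome per case: a fresh token verifies, the same token fails at or after its expiry, and any change to the user ID or signature is rejected.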

Authorization testing ensures that authenticated users can only access resources and operations they're permitted to access. Tests verify that APIs enforce access controls based on user roles, resource ownership, and operation type. For cryptocurrency trading APIs, authorization testing confirms that users can only view their own portfolios, execute trades with their own funds, and access analytics appropriate to their subscription tier. Testing authorization at the API level prevents privilege escalation attacks that bypass user interface controls.

Injection testing attempts to exploit APIs by submitting malicious input that could manipulate queries, commands, or data processing. SQL injection tests verify that database queries properly parameterize inputs rather than concatenating strings. Command injection tests ensure APIs don't execute system commands with unsanitized user input. For crypto APIs accepting cryptocurrency addresses, transaction IDs, and trading parameters, injection testing validates comprehensive input sanitization preventing malicious data from compromising backend systems.
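A SQL injection test can be sketched with the standard library's `sqlite3` module. The `transactions` table, its columns, and the `find_transactions` helper are hypothetical; the point is that a parameterized query treats a classic payload as an ordinary string value.

```python
import sqlite3
from typing import List


def find_transactions(conn: sqlite3.Connection, address: str) -> List[str]:
    # The ? placeholder passes the value separately from the SQL text,
    # so quotes inside `address` cannot change the query structure.
    cursor = conn.execute(
        "SELECT tx_id FROM transactions WHERE address = ? ORDER BY tx_id",
        (address,),
    )
    return [row[0] for row in cursor.fetchall()]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (tx_id TEXT, address TEXT)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [("tx1", "addr_a"), ("tx2", "addr_b")],
)

# Payloads that would match every row, or drop the table, if concatenated into SQL.
always_true_payload = "addr_a' OR '1'='1"
drop_table_payload = "x'; DROP TABLE transactions; --"
```

With string concatenation the first payload would return every row; with the placeholder it simply matches no address, and the table survives the second payload intact.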

Data exposure testing verifies that APIs don't leak sensitive information through responses, error messages, or headers. Tests check for exposed internal paths, stack traces in error responses, sensitive data in logs, and information disclosure through timing attacks. For cryptocurrency APIs, data exposure testing ensures that API responses don't reveal other users' holdings, trading strategies, or personal information. Proper error handling returns generic messages to clients while logging detailed information for internal troubleshooting.

Rate limiting and DDoS protection testing validates that APIs can withstand abuse and denial-of-service attempts. Tests verify that rate limits are enforced correctly, that exceeded limits return appropriate error responses, and that distributed attacks spread across many IPs don't compromise service. For crypto APIs that attackers might target to manipulate markets or disrupt trading, DDoS protection testing ensures service availability under attack. Token Metrics implements enterprise-grade security controls throughout its cryptocurrency API, validated through comprehensive security testing protocols.
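Rate limit enforcement is straightforward to test when the limiter takes its clock as a parameter. The fixed-window limiter below is a minimal sketch with hypothetical names; it returns 429 (Too Many Requests) with a retry hint once a client exceeds its quota, and it never evicts old counters, which a real implementation would need to do.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so tests can move time forward
        # (client ID, window index) -> requests seen in that window
        self._counts: Dict[Tuple[str, int], int] = defaultdict(int)

    def check(self, client_id: str) -> Tuple[int, float]:
        """Return (HTTP status, seconds until the client may retry)."""
        now = self.clock()
        window = int(now // self.window_seconds)
        key = (client_id, window)
        if self._counts[key] >= self.limit:
            retry_after = (window + 1) * self.window_seconds - now
            return 429, retry_after
        self._counts[key] += 1
        return 200, 0.0
```

Keying the counter on the client ID means one noisy client is throttled without affecting others, which is the property a per-client rate limit test should confirm.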

Selecting appropriate testing frameworks and tools significantly impacts testing efficiency, maintainability, and effectiveness. REST Assured for Java, Requests for Python, SuperTest for Node.js, and numerous other libraries provide fluent interfaces for making API requests and asserting responses. These frameworks handle request construction, authentication, response parsing, and validation, allowing tests to focus on business logic rather than HTTP mechanics. For cryptocurrency API testing, frameworks with JSON Schema validation, flexible assertion libraries, and good error reporting accelerate test development.

Postman and Newman provide visual test development with Postman's GUI and automated execution through Newman's command-line interface. Postman collections organize related requests with pre-request scripts for setup, test scripts for validation, and environment variables for configuration. Newman integrates Postman collections into CI/CD pipelines, enabling automated test execution on every code change. For teams testing crypto APIs, Postman's collaborative features and extensive ecosystem make it popular for both manual exploration and automated testing.

API testing platforms like SoapUI, Katalon, and Tricentis provide comprehensive testing capabilities including functional testing, performance testing, security testing, and test data management. These platforms offer visual test development, reusable components, data-driven testing, and detailed reporting. For organizations testing multiple cryptocurrency APIs and complex integration scenarios, commercial testing platforms provide capabilities justifying their cost through increased productivity.

Contract testing tools like Pact enable consumer-driven contract testing where API consumers define expectations that providers validate. This approach catches breaking changes before they impact integrated systems, particularly valuable in microservices architectures where multiple teams develop interdependent services. For crypto API platforms composed of numerous microservices, contract testing prevents integration failures and facilitates independent service deployment. Token Metrics employs contract testing to ensure its cryptocurrency API maintains compatibility as the platform evolves.

Performance testing tools like JMeter, Gatling, k6, and Locust simulate load and measure API performance under various conditions. These tools support complex test scenarios including ramping load profiles, realistic think times, and correlation of dynamic values across requests. Distributed load generation enables testing at scale, simulating thousands of concurrent users. For cryptocurrency APIs needing validation under high-frequency trading loads, performance testing tools provide essential capabilities for ensuring production readiness.

Effective test data management ensures tests execute reliably with realistic data while maintaining data privacy and test independence. Test data strategies balance realism against privacy, consistency against isolation, and manual curation against automated generation. For cryptocurrency APIs, test data must represent diverse market conditions, cryptocurrency types, and user scenarios while protecting any production data used in testing environments.

Synthetic data generation creates realistic test data programmatically based on rules and patterns that match production data characteristics. Generating test data for crypto APIs might include creating price histories with realistic volatility, generating blockchain transactions with proper structure, and creating user portfolios with diverse asset allocations. Synthetic data avoids privacy concerns since it contains no real user information while providing unlimited test data volume. Libraries like Faker and specialized financial data generators accelerate synthetic data creation.
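As a rough sketch of a synthetic price history, the following generates a seeded geometric random walk using only the standard library. The 4% daily volatility and the function name are assumptions for illustration; this is a toy model, not a calibrated market simulator.

```python
import math
import random
from typing import List


def generate_price_history(start_price: float, days: int,
                           daily_volatility: float = 0.04,
                           seed: int = 42) -> List[float]:
    """Geometric random walk: each step multiplies the price by exp(N(0, vol))."""
    rng = random.Random(seed)  # local generator keeps runs reproducible
    prices = [start_price]
    for _ in range(days - 1):
        shock = rng.gauss(0.0, daily_volatility)
        prices.append(prices[-1] * math.exp(shock))
    return prices
```

Multiplying by an exponential keeps every generated price strictly positive, and fixing the seed means a failing test can be reproduced with exactly the same series.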

Data anonymization techniques transform production data to remove personally identifiable information while maintaining statistical properties useful for testing. Techniques include data masking, tokenization, and differential privacy. For cryptocurrency APIs, anonymization might replace user identifiers and wallet addresses while preserving portfolio compositions and trading patterns. Properly anonymized production data provides realistic test scenarios without privacy violations or regulatory compliance issues.

Test data fixtures define reusable datasets for common test scenarios, providing consistency across test runs and reducing test setup complexity. Fixtures might include standard cryptocurrency price data, reference portfolios, and common trading scenarios. Database seeding scripts populate test databases with fixture data before test execution, ensuring tests start from known states. For crypto API testing, fixtures enable comparing results against expected values computed from the same test data.

Data-driven testing separates test logic from test data, enabling execution of the same test logic with multiple data sets. Parameterized tests read input values and expected results from external sources like CSV files, databases, or API responses. For cryptocurrency APIs, data-driven testing enables validating price calculations across numerous cryptocurrencies, testing trading logic with diverse order scenarios, and verifying analytics across various market conditions. Token Metrics employs extensive data-driven testing to validate calculations across its comprehensive cryptocurrency coverage.
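A data-driven test can be sketched by keeping the cases in CSV and looping one piece of test logic over them. The inline `CASES_CSV` string stands in for an external file, and `profit_and_loss` is a hypothetical function under test.

```python
import csv
import io
from typing import List

# Stand-in for an external CSV file of cases.
CASES_CSV = """symbol,quantity,entry_price,exit_price,expected_pnl
BTC,2,100,150,100
ETH,10,50,40,-100
AAVE,0,80,90,0
"""


def profit_and_loss(quantity: float, entry_price: float, exit_price: float) -> float:
    """Hypothetical function under test."""
    return quantity * (exit_price - entry_price)


def run_cases(csv_text: str) -> List[str]:
    """Run every CSV row through the same check; return symbols that failed."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = profit_and_loss(
            float(row["quantity"]), float(row["entry_price"]), float(row["exit_price"])
        )
        if abs(actual - float(row["expected_pnl"])) > 1e-9:
            failures.append(row["symbol"])
    return failures
```

Adding coverage for another asset or scenario then means adding a row of data rather than writing another test function.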

Integrating API tests into continuous integration pipelines ensures automated execution on every code change, catching regressions immediately and maintaining quality throughout development. CI pipelines trigger test execution on code commits, pull requests, scheduled intervals, or manual requests. Test results gate deployments, preventing broken code from reaching production. For cryptocurrency APIs where bugs could impact trading and financial operations, automated testing in CI pipelines provides essential quality assurance.

Test selection strategies balance comprehensive validation against execution time. Running all tests on every change provides maximum confidence but may take too long for rapid iteration. Intelligent test selection runs only tests affected by code changes, accelerating feedback while maintaining safety. For large crypto API platforms with thousands of tests, selective execution enables practical continuous testing. Periodic full test suite execution catches issues that selective testing might miss.

Test environment provisioning automation ensures consistent, reproducible test environments for reliable test execution. Infrastructure as code tools create test environments on demand, containerization provides isolated execution contexts, and cloud platforms enable scaling test infrastructure based on demand. For cryptocurrency API testing requiring blockchain nodes, databases, and external service mocks, automated provisioning eliminates manual setup and environment configuration drift.

Test result reporting and analysis transform raw test execution data into actionable insights. Test reports show passed and failed tests, execution times, trends over time, and failure patterns. Integrating test results with code coverage tools reveals untested code paths. For crypto API development teams, comprehensive test reporting enables data-driven quality decisions and helps prioritize testing investments. Token Metrics maintains detailed test metrics and reports, enabling continuous improvement of its cryptocurrency API quality.

Flaky test management addresses tests that intermittently fail without code changes, undermining confidence in test results. Strategies include identifying flaky tests through historical analysis, quarantining unreliable tests, investigating root causes like timing dependencies or test pollution, and refactoring tests for reliability. For crypto API tests depending on external services or blockchain networks, flakiness often results from network issues or timing assumptions. Systematic flaky test management maintains testing credibility and efficiency.
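Historical analysis for flakiness can start from a very simple rule: a test that both passed and failed on the same commit is a candidate. The sketch below assumes a hypothetical `history` mapping of test names to pass/fail outcomes collected from repeated runs of unchanged code.

```python
from typing import Dict, List


def find_flaky_tests(history: Dict[str, List[bool]], min_runs: int = 5) -> List[str]:
    """Flag tests with mixed outcomes across repeated runs of the same commit.

    Tests with fewer than min_runs results are skipped as inconclusive.
    """
    flaky = []
    for name, outcomes in history.items():
        mixed = any(outcomes) and not all(outcomes)
        if len(outcomes) >= min_runs and mixed:
            flaky.append(name)
    return sorted(flaky)
```

A test that fails on every run is not flagged here, since consistent failure points to a real defect rather than flakiness.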

Contract testing validates that API providers fulfill expectations of API consumers, catching breaking changes before deployment. Consumer-driven contracts specify the exact requests consumers make and responses they expect, creating executable specifications that both parties validate. For cryptocurrency API platforms serving diverse clients from mobile applications to trading bots, contract testing prevents incompatibilities that could break integrations.

Schema validation enforces API response structures through JSON Schema or OpenAPI specifications. Tests validate that responses conform to declared schemas, ensuring consistent field names, data types, and structures. For crypto APIs, schema validation catches changes like missing price fields, altered data types, or removed endpoints before clients encounter runtime failures. Maintaining schemas as versioned artifacts provides clear API contracts and enables automated compatibility checking.

Backward compatibility testing ensures new API versions don't break existing clients. Tests execute against multiple API versions, verifying that responses remain compatible or that deprecated features continue functioning with appropriate warnings. For cryptocurrency APIs where legacy trading systems might require long support windows, backward compatibility testing prevents disruptive breaking changes. Semantic versioning conventions communicate compatibility expectations through version numbers.

API versioning strategies enable evolution while maintaining stability. URI versioning embeds versions in endpoint paths, header versioning uses custom headers to specify versions, and content negotiation selects versions through Accept headers. For crypto APIs serving clients with varying update cadences, clear versioning enables controlled evolution. Token Metrics maintains well-defined versioning for its cryptocurrency API, allowing clients to upgrade at their own pace while accessing new features as they become available.

Deprecation testing validates that deprecated endpoints or features continue functioning until scheduled removal while warning consumers through response headers or documentation. Tests verify deprecation warnings are present, replacement endpoints function correctly, and final removal doesn't occur before communicated timelines. For crypto APIs, respectful deprecation practices maintain developer trust and prevent surprise failures in production trading systems.

Test doubles including mocks, stubs, and fakes enable testing APIs without depending on external systems like blockchain nodes, exchange APIs, or third-party data providers. Mocking frameworks create test doubles that simulate external system behavior, allowing tests to control responses and simulate error conditions difficult to reproduce with real systems. For cryptocurrency API testing, mocking external dependencies enables fast, reliable test execution independent of blockchain network status or exchange API availability.
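With Python's built-in `unittest.mock`, both the happy path and a hard-to-reproduce failure can be simulated without touching a real exchange. `PriceService` and the `fetch_ticker` method are hypothetical names for the code under test and its external dependency.

```python
from unittest.mock import Mock


class PriceService:
    """Hypothetical service that wraps an external exchange client."""

    def __init__(self, exchange_client):
        self.exchange_client = exchange_client

    def get_price(self, symbol: str) -> dict:
        try:
            price = self.exchange_client.fetch_ticker(symbol)
            return {"symbol": symbol, "price": price, "stale": False}
        except TimeoutError:
            # Degrade gracefully instead of propagating the outage.
            return {"symbol": symbol, "price": None, "stale": True}


# Happy path: the mock returns a fixed price.
healthy_client = Mock()
healthy_client.fetch_ticker.return_value = 42000.0
ok_result = PriceService(healthy_client).get_price("BTC")

# Failure path: the mock raises, simulating an unreachable exchange.
failing_client = Mock()
failing_client.fetch_ticker.side_effect = TimeoutError("exchange unreachable")
degraded_result = PriceService(failing_client).get_price("BTC")
```

Because the mock records its calls, a test can also verify how the dependency was used, for example with `healthy_client.fetch_ticker.assert_called_once_with("BTC")`.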

API mocking tools like WireMock, MockServer, and Prism create HTTP servers that respond to requests according to defined expectations. These tools support matching requests by URL, headers, and body content, returning configured responses or simulating network errors. For crypto APIs consuming multiple external APIs, mock servers enable testing integration logic without actual external dependencies. Recording and replaying actual API interactions accelerates mock development while ensuring realistic test scenarios.

Stubbing strategies replace complex dependencies with simplified implementations sufficient for testing purposes. Database stubs might store data in memory rather than persistent storage, blockchain stubs might return predetermined transaction data, and exchange API stubs might provide fixed market prices. For cryptocurrency APIs, stubs enable testing business logic without infrastructure dependencies, accelerating test execution and simplifying test environments.

Contract testing tools like Pact generate provider verification tests from consumer expectations, ensuring mocks accurately reflect provider behavior. This approach prevents false confidence from tests passing against mocks but failing against real systems. For crypto API microservices, contract testing ensures service integration points match expectations even as services evolve independently. Shared contract repositories serve as communication channels between service teams.

Service virtualization creates sophisticated simulations of complex dependencies including state management, performance characteristics, and realistic data. Commercial virtualization tools provide recording and replay capabilities, behavior modeling, and performance simulation. For crypto APIs depending on expensive or limited external services, virtualization enables thorough testing without quota constraints or usage costs. Token Metrics uses comprehensive mocking and virtualization strategies to test its cryptocurrency API thoroughly across all integration points.

Production monitoring complements pre-deployment testing by providing ongoing validation that APIs function correctly in actual usage. Synthetic monitoring periodically executes test scenarios against production APIs, alerting when failures occur. These tests verify critical paths like authentication, data retrieval, and transaction submission work continuously. For cryptocurrency APIs operating globally across time zones, synthetic monitoring provides 24/7 validation without human intervention.
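A synthetic monitor can be reduced to a loop that runs named checks and collects failures for alerting. The sketch below assumes each check is a zero-argument callable that raises on failure. The function name, the latency budget, and the alert strings are illustrative choices, and scheduling and alert delivery are left out.

```python
import time
from typing import Callable, Dict, List


def run_synthetic_checks(checks: Dict[str, Callable[[], None]],
                         max_seconds: float) -> List[str]:
    """Run each named check once and return human-readable failure messages."""
    failures = []
    for name, check in checks.items():
        started = time.monotonic()
        try:
            check()  # a check signals failure by raising an exception
        except Exception as exc:
            failures.append(f"{name}: {exc}")
            continue
        elapsed = time.monotonic() - started
        # A check that succeeds but is too slow still counts as a failure.
        if elapsed > max_seconds:
            failures.append(f"{name}: took {elapsed:.2f}s")
    return failures
```

In practice a scheduler would call this every few minutes with checks for critical paths such as authentication and data retrieval, and page someone whenever the returned list is not empty.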

Real user monitoring captures actual API usage including response times, error rates, and usage patterns. Analyzing production telemetry reveals issues that testing environments miss like geographic performance variations, unusual usage patterns, and rare edge cases. For crypto APIs, real user monitoring shows which endpoints receive highest traffic, which cryptocurrencies are most popular, and when traffic patterns surge during market events. These insights guide optimization efforts and capacity planning.

Chaos engineering intentionally introduces failures into production systems to validate resilience and recovery mechanisms. Controlled experiments like terminating random containers, introducing network latency, or simulating API failures test whether systems handle problems gracefully. For cryptocurrency platforms where reliability is critical, chaos engineering builds confidence that systems withstand real-world failures. Netflix's Chaos Monkey popularized this approach, which is now widely used for testing distributed systems.

Canary deployments gradually roll out API changes to subsets of users, monitoring for problems before full deployment. If key metrics degrade for canary traffic, deployments are automatically rolled back. This production testing approach catches problems that testing environments miss while limiting blast radius. For crypto APIs where bugs could impact financial operations, canary deployments provide additional safety beyond traditional testing.
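The automatic rollback decision can be sketched as a comparison between baseline and canary metrics. The thresholds below (a one percentage point rise in error rate, a 20% rise in p95 latency, and a minimum of 100 canary requests) are assumptions chosen for illustration, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def should_roll_back(baseline: Metrics, canary: Metrics,
                     max_error_rate_increase: float = 0.01,
                     max_latency_ratio: float = 1.2,
                     min_requests: int = 100) -> bool:
    """Decide whether the canary has degraded enough to trigger a rollback."""
    # Too little canary traffic: not enough evidence to judge either way.
    if canary.requests < min_requests:
        return False
    # Error rate rose by more than the allowed absolute margin.
    if canary.error_rate - baseline.error_rate > max_error_rate_increase:
        return True
    # Tail latency grew by more than the allowed ratio.
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return True
    return False
```

Requiring a minimum sample size keeps a handful of unlucky requests from rolling back a healthy release.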

A/B testing validates that API changes improve user experience or business metrics before full deployment. Running old and new implementations side by side with traffic splits enables comparing performance, error rates, and business outcomes. For cryptocurrency APIs, A/B testing might validate that algorithm improvements actually increase prediction accuracy or that response format changes improve client performance. Token Metrics uses sophisticated deployment strategies including canary releases to ensure API updates maintain the highest quality standards.

Maintaining comprehensive test coverage requires systematic tracking of what's tested and what remains untested. Code coverage tools measure which code paths tests execute, revealing gaps in test suites. For cryptocurrency APIs with complex business logic, achieving high coverage ensures edge cases and error paths receive validation. Combining code coverage with mutation testing that introduces bugs to verify tests catch them provides deeper quality insights.

Test organization and maintainability determine long-term testing success. Well-organized test suites with clear naming conventions, logical structure, and documentation remain understandable and maintainable as codebases evolve. Helper functions and thin API client wrappers (the API-testing counterpart of the page object pattern used in UI tests) reduce duplication and make tests easier to update. For crypto API test suites spanning thousands of tests, disciplined organization prevents tests from becoming maintenance burdens.

Test data independence ensures tests don't interfere with each other through shared state. Each test should create its own test data, clean up after execution, and not depend on execution order. For cryptocurrency API tests that modify databases or trigger external actions, proper isolation prevents one test's failure from cascading to others. Test frameworks providing setup and teardown hooks facilitate proper test isolation.
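The setup and teardown hooks mentioned above can be illustrated with Python's `unittest` and an in-memory SQLite database. The `watchlist` table and test names are hypothetical; the point is that each test gets a fresh database and leaves nothing behind.

```python
import sqlite3
import unittest


class WatchlistTests(unittest.TestCase):
    def setUp(self):
        # Runs before every test: each test gets its own empty database.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE watchlist (symbol TEXT PRIMARY KEY)")

    def tearDown(self):
        # Runs after every test, even a failing one: nothing leaks to the next test.
        self.db.close()

    def _count(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM watchlist").fetchone()[0]

    def test_add_symbol(self):
        self.db.execute("INSERT INTO watchlist VALUES ('BTC')")
        self.assertEqual(self._count(), 1)

    def test_starts_empty(self):
        # Passes regardless of whether test_add_symbol ran first.
        self.assertEqual(self._count(), 0)
```

Because no state is shared, the two tests can run in any order, or in parallel, with the same result.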

Test suite performance optimization balances thoroughness against execution time. Parallelizing test execution across multiple machines can dramatically reduce execution time for large test suites. Identifying and optimizing slow tests maintains rapid feedback cycles. For crypto API platforms with extensive test coverage, efficient test execution enables running full suites frequently without slowing development.

Continuous improvement of test suites through regular review, refactoring, and enhancement maintains testing effectiveness. Reviewing failures that reached production reveals gaps in test coverage, examining slow tests identifies optimization opportunities, and analyzing flaky tests uncovers reliability issues. For cryptocurrency APIs where market conditions and user needs evolve continuously, test suites must evolve to maintain relevance. Token Metrics continuously enhances its testing strategies and practices to maintain the highest quality standards for its crypto API platform.

Comprehensive API testing forms the foundation of reliable, secure, and performant web services, particularly critical for cryptocurrency APIs where bugs can result in financial losses and security breaches. This guide has explored practical testing strategies spanning functional testing, integration testing, performance testing, security testing, and production monitoring. Leveraging appropriate tools, frameworks, and automation enables thorough validation while maintaining development velocity.

Token Metrics demonstrates excellence in cryptocurrency API quality through rigorous testing practices that ensure developers receive accurate, reliable market data and analytics. By implementing the testing strategies outlined in this guide and leveraging well-tested crypto APIs like those provided by Token Metrics, developers can build cryptocurrency applications with confidence that underlying services will perform correctly under all conditions.

As cryptocurrency markets mature and applications grow more sophisticated, API testing practices must evolve to address new challenges and technologies. The fundamental principles of comprehensive test coverage, continuous integration, and production validation remain timeless even as specific tools and techniques advance. Development teams that invest in robust testing practices position themselves to deliver high-quality cryptocurrency applications that meet user expectations for reliability, security, and performance in the demanding world of digital asset management and trading.


APIs power modern software by letting systems communicate without exposing internal details. Whether you're building an AI agent, integrating price feeds for analytics, or connecting wallets, understanding the core concept of an "API" — and the practical rules around using one — is essential. This article defines what an API is, explains common types, highlights evaluation criteria, and outlines best practices for secure, maintainable integrations.

API stands for Application Programming Interface. At its simplest, an API is a contract: a set of rules that lets one software component request data or services from another. The contract specifies available endpoints (or methods), required inputs, expected outputs, authentication requirements, and error semantics. APIs abstract implementation details so consumers can depend on a stable surface rather than internal code.

Think of an API as a menu in a restaurant: the menu lists dishes (endpoints), describes ingredients (parameters), and sets expectations for what arrives at the table (responses). Consumers don’t need to know how the kitchen prepares the dishes — only how to place an order.

APIs come in several architectural styles. The three most common today are REST, GraphQL, and RPC or streaming interfaces such as gRPC and WebSocket.

Choosing a style depends on use case: REST for simple, cacheable resources; GraphQL for complex client-driven queries; gRPC/WebSocket for low-latency or streaming scenarios.

Documentation quality often determines integration time and reliability. When evaluating an API, check for:

For crypto and market data APIs, also verify the latency SLAs, the freshness of on‑chain reads, and whether historical data is available in a form suitable for research or model training.

APIs expose surface area; securing that surface is critical. Key practices include:

Security and resilience are especially important in finance and crypto environments where integrity and availability directly affect analytics and automated systems.

APIs are central to AI-driven research and crypto tooling. When integrating APIs into data pipelines or agent workflows, consider these steps:

AI models and agents can benefit from structured, versioned APIs that provide deterministic responses; integrating dataset provenance and schema validation improves repeatability in experiments.

Build Smarter Crypto Apps & AI Agents with Token Metrics

Token Metrics provides real-time prices, trading signals, and on-chain insights all from one powerful API.

An API is an interface that defines how two software systems communicate. It lists available operations, required inputs, and expected outputs so developers can use services without understanding internal implementations.

REST exposes fixed resource endpoints and relies on HTTP semantics. GraphQL exposes a flexible query language letting clients fetch precise fields in one request. REST favors caching and simplicity; GraphQL favors efficiency for complex client queries.

Confirm data freshness, historical coverage, authentication methods, rate limits, and the provider’s documentation. Also verify uptime, SLA terms if relevant, and whether the API provides proof or verifiable on‑chain reads for critical use cases.

Rate limits set a maximum number of requests per time window, often per API key or IP. Providers may return headers indicating remaining quota and reset time; implement exponential backoff and caching to stay within limits.
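Exponential backoff can be sketched as a retry wrapper. The `RateLimited` exception and the `call_with_backoff` helper are hypothetical names. The sketch assumes the caller translates a rate-limit response (commonly HTTP 429) into that exception and passes along any server-provided wait time, such as a `Retry-After` value in seconds.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Raised by the caller's request function when the API reports a rate limit."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds suggested by the server, if any


def call_with_backoff(request: Callable[[], T],
                      max_attempts: int = 5,
                      base_delay: float = 0.5,
                      max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call request(), retrying on RateLimited with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimited as exc:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            if exc.retry_after is not None:
                delay = exc.retry_after  # trust the server's hint when present
            else:
                # The cap doubles each attempt; random jitter spreads out clients.
                cap = min(max_delay, base_delay * 2 ** attempt)
                delay = random.uniform(0, cap)
            sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter exists so tests can inject a fake and run instantly. Pairing this wrapper with a short-lived cache for repeated reads reduces how often the limit is hit in the first place.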

AI-driven research tools can summarize documentation, detect breaking changes, and suggest integration patterns. For provider-specific signals and token research, platforms like Token Metrics combine multiple data sources and models to support analysis workflows.

This article is educational and informational only. It does not constitute financial, legal, or investment advice. Readers should perform independent research and consult qualified professionals before making decisions related to finances, trading, or technical integrations.


Modern software architecture has evolved toward distributed systems composed of numerous microservices, each handling specific functionality and exposing APIs for interaction. As these systems grow in complexity, managing direct communication between clients and dozens or hundreds of backend services becomes unwieldy, creating challenges around security, monitoring, and operational consistency. API gateways have emerged as the architectural pattern that addresses these challenges, providing a unified entry point that centralizes cross-cutting concerns while simplifying client interactions with complex backend systems. This comprehensive guide explores API gateway architecture, security patterns, performance optimization strategies, deployment models, and best practices that enable building robust, scalable systems.

An API gateway functions as a reverse proxy that sits between clients and backend services, intercepting all incoming requests and routing them to appropriate destinations. This architectural pattern transforms the chaotic direct communication between clients and multiple services into an organized, manageable structure where the gateway handles common concerns that would otherwise be duplicated across every service. For cryptocurrency platforms where clients might access market data services, trading engines, analytics processors, blockchain indexers, and user management systems, the API gateway provides a single endpoint that orchestrates these interactions seamlessly.