Top Crypto Trading Platforms in 2025


Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend or getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.


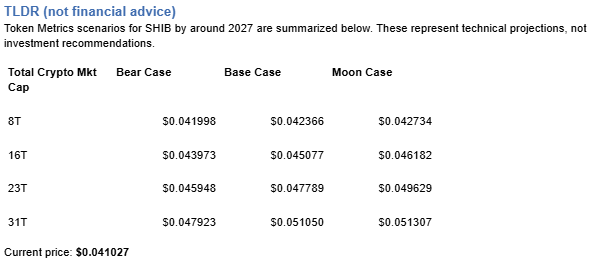

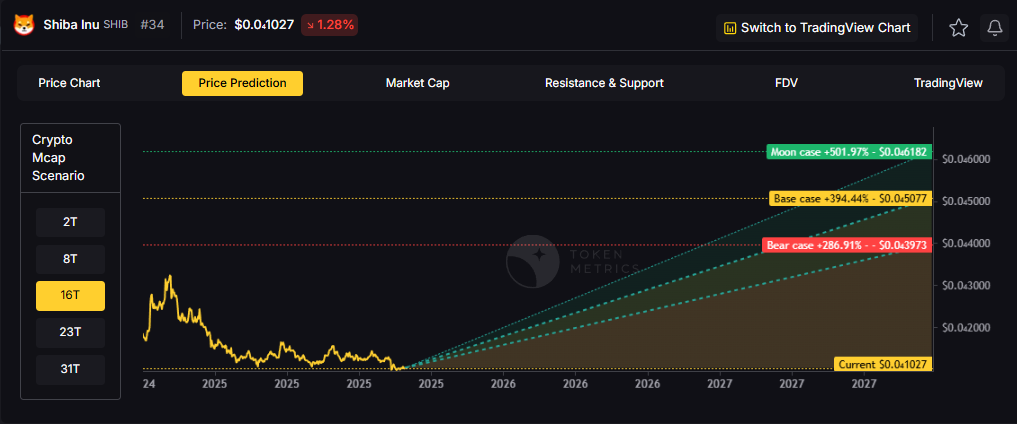

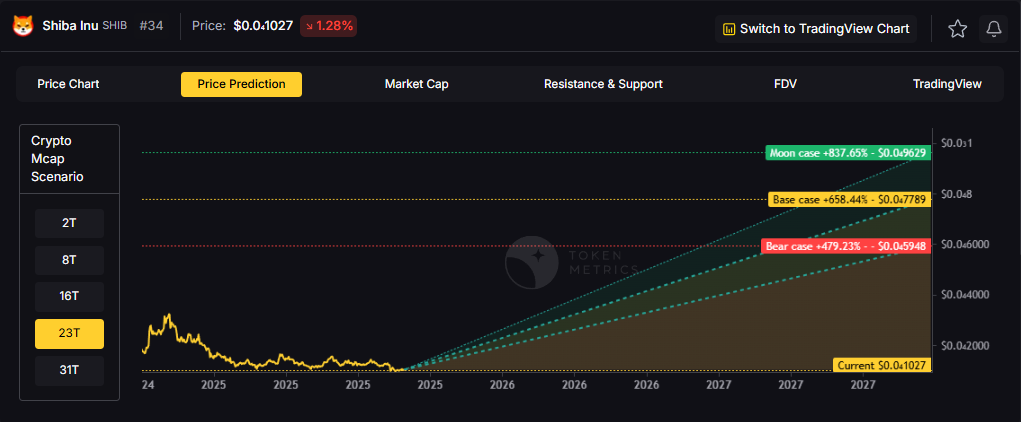

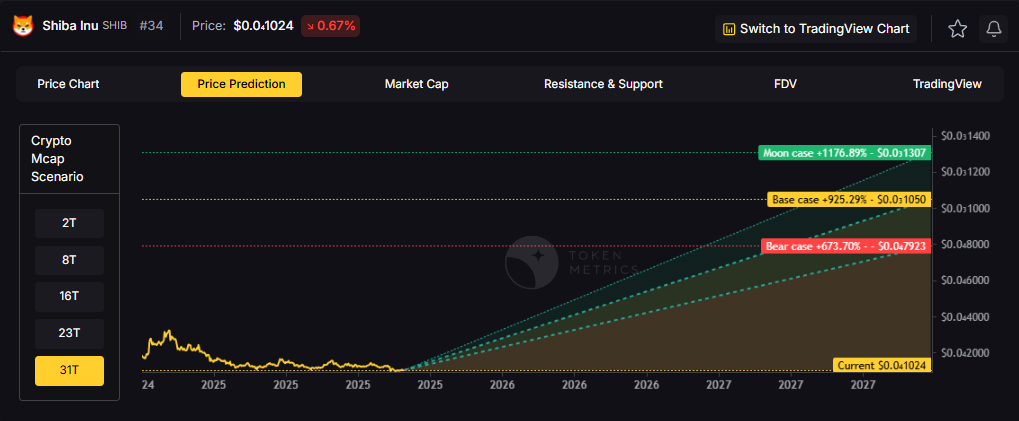

Shiba Inu operates as a community-driven meme token whose price action stems primarily from social sentiment, attention cycles, and speculative trading rather than fundamental value drivers. SHIB exhibits extreme volatility and has neither the defensive characteristics nor the revenue-generating mechanisms typical of utility tokens. The Token Metrics scenarios below provide technical price predictions across different market cap environments, though meme tokens correlate more strongly with viral trends and community engagement than with systematic market cap models. Positions in SHIB should be sized as high-risk speculative bets with potential for total loss.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity. For meme tokens, actual outcomes depend heavily on social trends and community momentum beyond what market cap models capture.

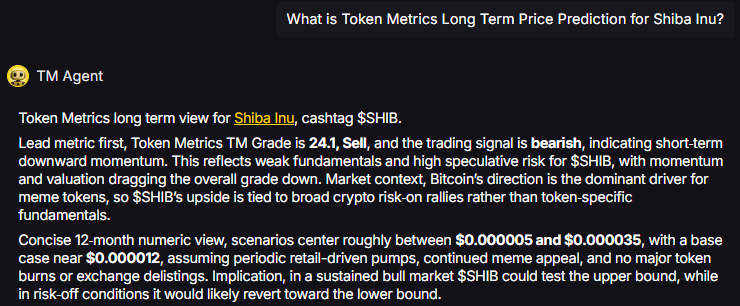

TM Agent baseline: Token Metrics TM Grade is 24.1%, Sell, with a bearish trading signal. The concise 12-month numeric view centers roughly between $0.000005 and $0.000035, with a base case near $0.000012.

Token Metrics scenarios provide technical price bands across market cap tiers:

8T: At 8 trillion total crypto market cap, SHIB projects to $0.041998 (bear), $0.042366 (base), and $0.042734 (moon).

16T: At 16 trillion total crypto market cap, SHIB projects to $0.043973 (bear), $0.045077 (base), and $0.046182 (moon).

23T: At 23 trillion total crypto market cap, SHIB projects to $0.045948 (bear), $0.047789 (base), and $0.049629 (moon).

31T: At 31 trillion total crypto market cap, SHIB projects to $0.047923 (bear), $0.051050 (base), and $0.051307 (moon).

These technical ranges assume meme tokens maintain market cap share proportional to overall crypto growth. Actual outcomes for speculative tokens typically exhibit higher variance and stronger correlation to social trends than these models predict.

Shiba Inu is a meme-born crypto project that centers on community and speculative culture. Unlike utility tokens with specific use cases, SHIB operates primarily as a speculative asset and community symbol. The project focuses on community engagement and entertainment value.

SHIB has demonstrated viral moments and community loyalty within the broader meme token category. The token trades on community sentiment and attention cycles more than fundamentals. Market performance depends heavily on social media attention and broader meme coin cycles.

Token Metrics provides technical analysis, scenario math, and rigorous risk evaluation for hundreds of crypto tokens. Want to dig deeper? Explore our AI-powered ratings and scenario tools.

Will SHIB 10x from here?

Answer: At the current price of $0.041027, a 10x reaches $0.41027. This level does not appear in any of the listed bear, base, or moon scenarios across the 8T, 16T, 23T, or 31T tiers. Meme tokens can 10x rapidly during viral moments but can also lose 90%+ just as quickly. Position sizing for potential total loss is critical. Not financial advice.

What are the biggest risks to SHIB?

Answer: Primary risks include attention shifting to newer memes, community fragmentation, developer abandonment, regulatory crackdowns, and liquidity collapse during downturns. Unlike utility tokens with defensive characteristics, SHIB has zero fundamental floor. Price can approach zero if community interest disappears. Total loss is a realistic outcome. Not financial advice.

Next Steps

Disclosure

Educational purposes only, not financial advice. SHIB is a highly speculative asset with extreme volatility and high risk of total loss. Meme tokens operate as entertainment and gambling instruments rather than investments. Only allocate capital you can afford to lose entirely. Do your own research and manage risk appropriately.


Exchange tokens like WhiteBIT Coin offer leveraged exposure to overall market activity, creating concentration risk around a single platform's success. While WBT can deliver outsized returns during bull markets with high trading volumes, platform-specific risks like regulatory action, security breaches, or competitive displacement amplify downside exposure. Portfolio theory suggests balancing such concentrated bets with broader sector exposure.

The scenarios below show how WBT might perform across different crypto market cap environments. Rather than betting entirely on WhiteBIT Coin's exchange succeeding, diversified strategies blend exchange tokens with L1s, DeFi protocols, and infrastructure plays to capture crypto market growth while mitigating single-platform risk.

Portfolio theory teaches that diversification is the only free lunch in investing. WBT concentration violates this principle by tying your crypto returns to one protocol's fate. Token Metrics Indices blend WhiteBIT Coin with the top one hundred tokens, providing broad exposure to crypto's growth while smoothing volatility through cross-asset diversification. This approach captures market-wide tailwinds without overweighting any single point of failure.

Systematic rebalancing within index strategies creates an additional return source that concentrated positions lack. As some tokens outperform and others lag, regular rebalancing mechanically sells winners and buys laggards, exploiting mean reversion and volatility. Single-token holders miss this rebalancing alpha and often watch concentrated gains evaporate during corrections while index strategies preserve more gains through automated profit-taking.
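
The rebalancing mechanic described above can be illustrated with a minimal sketch. It assumes a hypothetical two-token portfolio with equal target weights and made-up prices; the function name `rebalance` and every figure are illustrative assumptions, not the Token Metrics index methodology.

```python
def rebalance(holdings, prices, target_weights):
    """Return the units to trade per token to restore target weights.

    Positive values mean buy, negative values mean sell.
    """
    total_value = sum(holdings[t] * prices[t] for t in holdings)
    trades = {}
    for token, weight in target_weights.items():
        target_units = total_value * weight / prices[token]
        trades[token] = target_units - holdings[token]
    return trades


# Hypothetical portfolio: token A has doubled in price, token B is flat.
holdings = {"A": 10.0, "B": 10.0}
prices = {"A": 20.0, "B": 10.0}
targets = {"A": 0.5, "B": 0.5}

trades = rebalance(holdings, prices, targets)
```

In this example the winner (A) is trimmed and the laggard (B) is topped up. Whether that adds return depends on prices actually mean-reverting, and the sketch ignores fees, slippage, and taxes.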

Beyond returns, diversified indices improve the investor experience by reducing emotional decision-making. Concentrated WBT positions subject you to severe drawdowns that trigger panic selling at bottoms. Indices smooth the ride through natural diversification, making it easier to maintain exposure through full market cycles. Get early access: https://docs.google.com/forms/d/1AnJr8hn51ita6654sRGiiW1K6sE10F1JX-plqTUssXk/preview.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

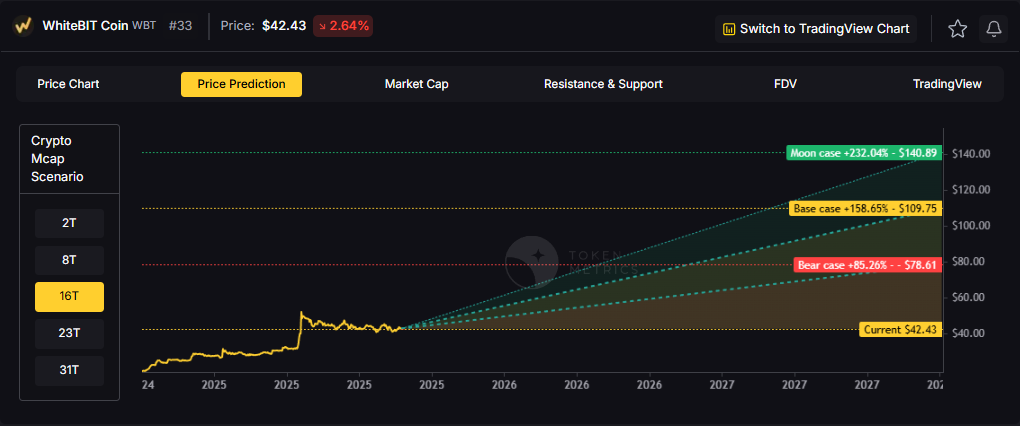

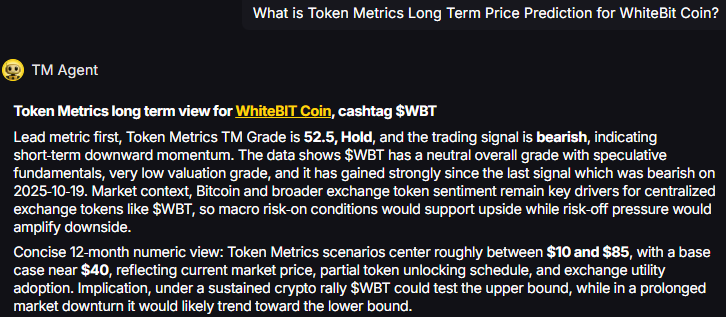

TM Agent baseline: Token Metrics long-term view for WhiteBIT Coin ($WBT). The Token Metrics TM Grade is 52.5%, Hold, and the trading signal is bearish, indicating short-term downward momentum. Concise 12-month numeric view: Token Metrics scenarios center roughly between $10 and $85, with a base case near $40.

Token Metrics scenarios span four market cap tiers, each representing different levels of crypto market maturity and liquidity:

8T: At an 8 trillion dollar total crypto market cap, WBT projects to $54.50 in bear conditions, $64.88 in the base case, and $75.26 in bullish scenarios.

16T: Doubling the market to 16 trillion expands the range to $78.61 (bear), $109.75 (base), and $140.89 (moon).

23T: At 23 trillion, the scenarios show $102.71, $154.61, and $206.51 respectively.

31T: In the maximum liquidity scenario of 31 trillion, WBT could reach $126.81 (bear), $199.47 (base), or $272.13 (moon).

These ranges illustrate potential outcomes for concentrated WBT positions, but investors should weigh whether single-asset exposure matches their risk tolerance or whether diversified strategies better suit their objectives.

WhiteBIT Coin is the native exchange token associated with the WhiteBIT ecosystem. It is designed to support utility on the platform and related services.

WBT typically provides fee discounts and ecosystem benefits where supported. Usage depends on exchange activity and partner integrations.

Token Metrics AI provides comprehensive context on WhiteBIT Coin's positioning and challenges.

Vision: The stated vision for WhiteBIT Coin centers on enhancing user experience within the WhiteBIT exchange ecosystem by providing tangible benefits such as reduced trading fees, access to exclusive features, and participation in platform governance or rewards programs. It aims to strengthen user loyalty and engagement by aligning token holders’ interests with the exchange’s long-term success. While not positioned as a decentralized protocol token, its vision reflects a broader trend of exchanges leveraging tokens to build sustainable, incentivized communities.

Problem: Centralized exchanges often face challenges in retaining active users and differentiating themselves in a competitive market. Users may be deterred by high trading fees, limited reward mechanisms, or lack of influence over platform developments. WhiteBIT Coin aims to address these frictions by introducing a native incentive layer that rewards participation, encourages platform loyalty, and offers cost-saving benefits. This model seeks to improve user engagement and create a more dynamic trading environment on the WhiteBIT platform.

Solution: WhiteBIT Coin serves as a utility token within the WhiteBIT exchange, offering users reduced trading fees, staking opportunities, and access to special events such as token sales or airdrops. It functions as an economic lever to incentivize platform activity and user retention. While specific governance features are not widely documented, such tokens often enable voting on platform upgrades or listing decisions. The solution relies on integrating the token deeply into the exchange’s operational model to ensure consistent demand and utility for holders.

Market Analysis: Exchange tokens like WhiteBIT Coin operate in a competitive landscape led by established players such as Binance Coin (BNB) and KuCoin Token (KCS). While BNB benefits from a vast ecosystem including a launchpad, decentralized exchange, and payment network, WBT focuses on utility within its native exchange. Adoption drivers include the exchange’s trading volume, security track record, and the attractiveness of fee discounts and staking yields. Key risks involve regulatory pressure on centralized exchanges and competition from other exchange tokens that offer similar benefits.

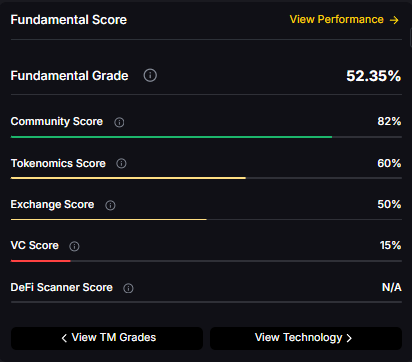

Fundamental Grade: 52.35% (Community 82%, Tokenomics 60%, Exchange 50%, VC —, DeFi Scanner N/A).

Can WBT reach $100?

Answer: Based on the scenarios, WBT first exceeds $100 in the 16T base case, which projects $109.75. Achieving this requires both broad market cap expansion and WhiteBIT Coin maintaining its competitive position. Not financial advice.
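
The $100 question can be checked mechanically against the listed tiers. The sketch below copies the WBT scenario prices from this article into a table and lists every tier and case at or above a target; the helper name `cases_at_or_above` is an illustrative assumption.

```python
# Scenario prices for WBT copied from the tiers listed in this article (USD).
WBT_SCENARIOS = {
    "8T": {"bear": 54.50, "base": 64.88, "moon": 75.26},
    "16T": {"bear": 78.61, "base": 109.75, "moon": 140.89},
    "23T": {"bear": 102.71, "base": 154.61, "moon": 206.51},
    "31T": {"bear": 126.81, "base": 199.47, "moon": 272.13},
}


def cases_at_or_above(scenarios, target):
    """List (tier, case, price) entries whose price meets the target."""
    return [
        (tier, case, price)
        for tier, cases in scenarios.items()
        for case, price in cases.items()
        if price >= target
    ]


hits = cases_at_or_above(WBT_SCENARIOS, 100.0)
first_tier, first_case, first_price = hits[0]
```

The first hit is the 16T base case, and from the 23T tier upward even the bear case clears $100.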

What's the risk/reward profile for WBT?

Answer: Risk and reward span from $54.50 in the lowest bear case to $272.13 in the highest moon case. Downside risks include regulatory actions and competitive displacement, while upside drivers include expanding access and favorable macro liquidity. Concentrated positions amplify both tails, while diversified strategies smooth outcomes.

What gives WBT value?

Answer: WBT accrues value through fee discounts, staking rewards, access to special events, and potential participation in platform programs. Demand drivers include exchange activity, user growth, and security reputation. While these fundamentals matter, diversified portfolios capture value accrual across multiple tokens rather than betting on one protocol's success.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, concentration amplifies risk, and diversification is a fundamental principle of prudent portfolio construction. Do your own research and manage risk appropriately.


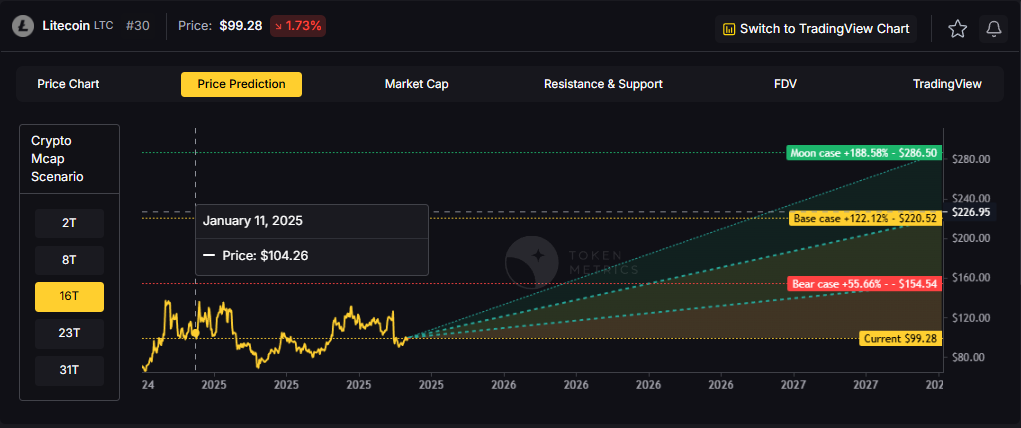

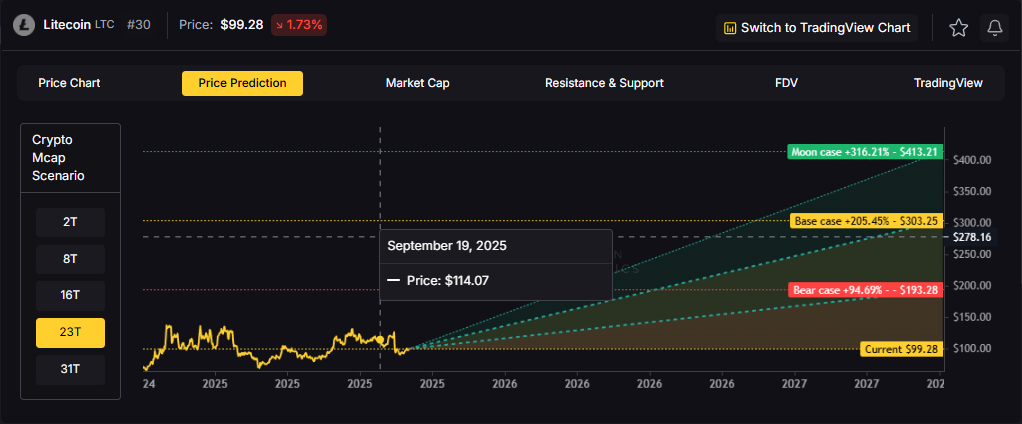

Layer 1 tokens capture value through transaction fees and miner economics. Litecoin processes blocks every 2.5 minutes using Proof of Work, targeting fast, low-cost payments. The scenarios below model LTC outcomes across different total crypto market sizes, reflecting network adoption and transaction volume.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

TM Agent baseline: Token Metrics scenarios center roughly between $35 and $160, with a base case near $75, assuming gradual adoption, occasional retail rotation into major alts, and no major network issues. In a broad crypto rally LTC could test the upper bound, while in risk-off conditions it would likely drift toward the lower bound.

Token Metrics scenarios span four market cap tiers reflecting different crypto market maturity levels:

8T: At an 8 trillion dollar total crypto market cap, LTC projects to $115.80 in bear conditions, $137.79 in the base case, and $159.79 in bullish scenarios.

16T: At 16 trillion, the range expands to $154.54 (bear), $220.52 (base), and $286.50 (moon).

23T: The 23 trillion tier shows $193.28, $303.25, and $413.21 respectively.

31T: In the maximum liquidity scenario at 31 trillion, LTC reaches $232.03 (bear), $385.98 (base), or $539.92 (moon).

Litecoin is a peer-to-peer cryptocurrency launched in 2011 as an early Bitcoin fork. It uses Proof of Work with Scrypt and targets faster settlement, processing blocks roughly every 2.5 minutes with low fees.

LTC is the native token used for transaction fees and miner rewards. Its primary utilities are fast, low-cost payments and serving as a testing ground for Bitcoin-adjacent upgrades, with adoption in retail payments, remittances, and exchange trading pairs.

Token Metrics AI provides additional context on Litecoin's technical positioning and market dynamics.

Vision: Litecoin's vision is to serve as a fast, low-cost, and accessible digital currency for everyday transactions. It aims to complement Bitcoin by offering quicker settlement times and a more efficient payment system for smaller, frequent transfers.

Problem: Bitcoin's relatively slow block times and rising transaction fees during peak usage make it less ideal for small, frequent payments. This creates a need for a cryptocurrency that maintains security and decentralization while enabling faster and cheaper transactions suitable for daily use.

Solution: Litecoin addresses this by using a 2.5-minute block time and the Scrypt algorithm, which initially allowed broader participation in mining and faster transaction processing. It functions primarily as a payment-focused blockchain, supporting peer-to-peer transfers with low fees and high reliability, without the complexity of smart contract functionality.

Market Analysis: Litecoin operates in the digital payments segment of the cryptocurrency market, often compared to Bitcoin but positioned as a more efficient medium of exchange. While it lacks the smart contract capabilities of platforms like Ethereum or Solana, its simplicity, long-standing network security, and brand recognition give it a stable niche. It competes indirectly with other payment-focused cryptocurrencies like Bitcoin Cash and Dogecoin. Adoption is sustained by its integration across major exchanges and payment services, but growth is limited by the broader shift toward ecosystems offering decentralized applications.

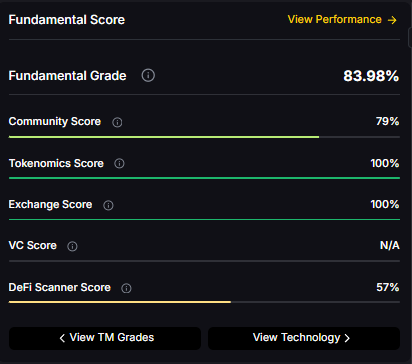

Fundamental Grade: 83.98% (Community 79%, Tokenomics 100%, Exchange 100%, VC —, DeFi Scanner 57%).

Technology Grade: 46.67% (Activity 51%, Repository 72%, Collaboration 60%, Security 20%, DeFi Scanner 57%).

For comprehensive Litecoin ratings, on-chain analysis, AI-powered price forecasts, and trading signals, go to Token Metrics.

What is LTC used for?

Answer: Primary use cases include fast peer-to-peer payments, low-cost remittances, and exchange settlement/liquidity pairs. LTC holders primarily pay transaction fees and support miner incentives. Adoption depends on active addresses and payment integrations.

What price could LTC reach in the moon case?

Answer: Moon case projections range from $159.79 at 8T to $539.92 at 31T. These scenarios require maximum market cap expansion and strong adoption dynamics. Not financial advice.

Next Steps

• Track live grades and signals: Token Details

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.


APIs — application programming interfaces — are the invisible glue that lets software talk to software. Whether you're building a dashboard, feeding data into an AI model, or fetching market prices for analytics, understanding what an API is and how it works is essential to designing reliable systems. This guide explains APIs in plain language, shows how they’re used in crypto and AI, and outlines practical steps for safe, scalable integration.

An API (application programming interface) is a defined set of rules and endpoints that lets one software program request and exchange data or functionality with another. Think of it as a contract: the provider defines what inputs it accepts and what output it returns, and the consumer follows that contract to integrate services reliably.

Common API types:

At a technical level, using an API involves sending a request to an endpoint and interpreting the response. Key components include:

Understanding these elements helps teams design error handling, retry logic, and monitoring so integrations behave predictably in production.
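
As a rough illustration of the request and response pieces, the sketch below assembles a request and interprets a canned response without touching the network. The host `api.example.com`, the `symbol` parameter, and the `x-api-key` header are hypothetical names chosen for the example, not any real provider's API.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint, parameter, and header names, used for illustration only.
BASE_URL = "https://api.example.com/v1/prices"


def build_request(symbol, api_key):
    """Assemble a GET request: endpoint + query parameters + headers."""
    query = urlencode({"symbol": symbol})
    return Request(
        f"{BASE_URL}?{query}",
        method="GET",
        headers={"Accept": "application/json", "x-api-key": api_key},
    )


def interpret_response(status, body):
    """Turn a status code and JSON body into a value or a clear error."""
    if status == 429:
        raise RuntimeError("rate limited: retry later")
    if status >= 400:
        raise RuntimeError(f"request failed with status {status}")
    return json.loads(body)


request = build_request("LTC", api_key="demo-key")
# A canned response body stands in for what a server might return.
data = interpret_response(200, '{"symbol": "LTC", "price": 75.0}')
```

Against a real service, the request object would be passed to `urllib.request.urlopen`, and the status and body would come from the returned response.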

APIs enable many building blocks in crypto and AI ecosystems. Examples include:

When integrating APIs for data-driven systems, consider latency, data provenance, and consistency. For research and model inputs, services that combine price data with on-chain metrics and signals can reduce the time it takes to assemble reliable datasets. For teams exploring such aggregations, Token Metrics provides an example of an AI-driven analytics platform that synthesizes multiple data sources for research workflows.

Secure, maintainable APIs follow established practices that protect data and reduce operational risk:

Following these practices helps teams scale API usage without sacrificing reliability or security.

Build Smarter Crypto Apps & AI Agents with Token Metrics

Token Metrics provides real-time prices, trading signals, and on-chain insights all from one powerful API. Grab a Free API Key

An API is a set of rules that enables software components to interact. It’s useful because it abstracts complexity, standardizes data exchange, and enables modular development across systems and teams.

Choose based on access patterns: REST is simple and widely supported; GraphQL excels when clients need flexible queries and fewer round trips; streaming (WebSocket) is best for low-latency, continuous updates. Consider caching, complexity, and tooling support.

Store secrets in secure vaults or environment variables, avoid hardcoding them in source code, rotate keys periodically, and apply principle of least privilege to limit access scopes.
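
A minimal sketch of the environment-variable approach follows. The variable name `EXAMPLE_API_KEY` and both helper functions are assumptions made for illustration.

```python
import os


def load_api_key(var_name="EXAMPLE_API_KEY"):
    """Read an API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first")
    return key


def mask(secret, visible=4):
    """Return a log-safe version that shows only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Failing at startup when the key is absent tends to be easier to debug than a later authentication error, and masking keeps the full secret out of logs.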

Rate limits restrict how many requests a client can make in a time window. Handle them by respecting limits, implementing exponential backoff for retries, caching responses, and batching requests where possible.
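
Exponential backoff can be sketched as below. The `RateLimitError` class is a hypothetical stand-in for however a client surfaces an HTTP 429 response, and the delay values are arbitrary defaults rather than any provider's recommendation.

```python
import random
import time


class RateLimitError(Exception):
    """Raised by the caller's request function when the API returns HTTP 429."""


def backoff_delays(retries, base=0.5, cap=30.0):
    """Exponential delays: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(retries)]


def call_with_backoff(func, retries=5, base=0.5, cap=30.0, sleep=time.sleep):
    """Call `func`, retrying on RateLimitError with jittered exponential backoff."""
    for delay in backoff_delays(retries, base, cap):
        try:
            return func()
        except RateLimitError:
            # Full jitter spreads retries out so clients do not retry in lockstep.
            sleep(random.uniform(0, delay))
    return func()  # final attempt; any error propagates to the caller
```

Passing `sleep` as a parameter keeps the function testable without real waiting. If a provider returns a `Retry-After` header, honoring it is usually preferable to a computed delay.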

Assess documentation quality, uptime SLAs, authentication methods, data freshness, cost model, and community or support channels. Test with realistic workloads and review security practices and versioning policies.

Yes. AI agents often call APIs for data ingestion, model inference, or action execution. Reliable APIs for feature data, model serving, and orchestration are key to building robust AI workflows.

This article is for educational and informational purposes only. It does not constitute financial, investment, legal, or professional advice. Evaluate APIs and data sources independently and consider security and compliance requirements specific to your use case.


APIs power modern software by acting as intermediaries that let different programs communicate. Whether you use a weather app, sign in with a social account, or combine data sources for analysis, APIs are the plumbing behind those interactions. This guide breaks down what an API is, how it works, common types and use cases, plus practical steps to evaluate and use APIs responsibly.

An application programming interface (API) is a contract between two software components. It specifies the methods, inputs, outputs, and error handling that allow one service to use another’s functionality or data without needing to know its internal implementation. Think of an API as a well-documented door: the requester knocks with a specific format, and the server replies according to agreed rules.

APIs matter because they:

At a technical level, an API involves several elements that define reliable communication:

Most web APIs use HTTP as a transport; RESTful APIs map CRUD operations to HTTP verbs, while alternatives like GraphQL let clients request exactly the data they need. The right style depends on use cases and performance trade-offs.
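
The CRUD-to-verb mapping can be written down directly. This is a sketch of the common convention; the `users` resource and the `rest_call` helper are illustrative assumptions.

```python
# Conventional CRUD-to-HTTP mapping used by many RESTful APIs.
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",  # PATCH is common for partial updates
    "delete": "DELETE",
}


def rest_call(operation, resource, item_id=None):
    """Return the (verb, path) pair for a CRUD operation on a resource."""
    verb = CRUD_TO_HTTP[operation]
    path = f"/{resource}" if item_id is None else f"/{resource}/{item_id}"
    return verb, path
```

For example, `rest_call("read", "users", 42)` yields a `GET` on `/users/42`, while `rest_call("create", "users")` yields a `POST` on the collection path `/users`.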

APIs appear across many layers of software and business models. Common categories include:

Practical examples: a mobile app calling a backend to fetch user profiles, an analytics pipeline ingesting a third-party data API, or a serverless function invoking a payment API to process transactions.

Designing and consuming APIs effectively requires both technical and governance considerations:

When evaluating an API to integrate, consider documentation quality, SLAs, data freshness, error handling patterns, and cost model. For data-driven workflows and AI systems, consistency of schemas and latency characteristics are critical.

APIs are foundational for AI and data research because they provide structured, automatable access to data and models. Teams often combine multiple APIs—data feeds, enrichment services, feature stores—to assemble training datasets or live inference pipelines. Important considerations include freshness, normalization, rate limits, and licensing of data.

AI-driven research platforms can simplify integration by aggregating multiple sources and offering standardized endpoints. For example, Token Metrics provides AI-powered analysis that ingests diverse signals via APIs to support research workflows and model inputs.

Discover Crypto Gems with Token Metrics AI

Token Metrics uses AI-powered analysis to help you uncover profitable opportunities in the crypto market. Get Started For Free

API stands for Application Programming Interface. It is a set of rules and definitions that lets software components communicate by exposing specific operations and data formats.

A web API is accessed over a network (typically HTTP) and provides remote functionality or data. A library or SDK is code included directly in an application. APIs enable decoupled services and cross-platform access; libraries are local dependencies.

REST is an architectural style using HTTP verbs and resource URIs. GraphQL lets clients specify exactly which fields they need in a single query. gRPC is a high-performance RPC framework using protocol buffers and is suited for internal microservice communication with strict performance needs.

Common methods include API keys, OAuth 2.0 for delegated access, and JWTs for stateless tokens. Choose an approach that matches security requirements and user interaction patterns; always use TLS to protect credentials in transit.
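
To make the idea of a stateless token concrete, the sketch below signs and verifies an HS256 JWT using only the standard library. It is a teaching example under simplifying assumptions: the secret and the `sub` claim are made up, and it checks the signature only.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_jwt(payload: dict, secret: bytes) -> str:
    """Create a compact HS256 JWT: header.payload.signature."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, secret: bytes) -> bool:
    """Check the signature only; real services also validate exp, aud, and iss."""
    signing_input, _, signature = token.rpartition(".")
    expected = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return hmac.compare_digest(_b64url(expected), signature)


token = sign_jwt({"sub": "user-123"}, secret=b"demo-secret")
auth_header = {"Authorization": f"Bearer {token}"}
```

The server can verify the token from the shared secret alone, without a session lookup, which is what "stateless" means here. Production code would normally rely on a maintained JWT library rather than hand-rolled signing.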

Failures include rate-limit rejections, transient network errors, schema changes, and authentication failures. Implement retries with exponential backoff for transient errors, validate responses, and monitor for schema or semantic changes.
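
Response validation can be as simple as checking required fields and types before the data is used. The schema and field names below are hypothetical; a real integration might use a dedicated schema-validation library instead.

```python
def validate_record(record, schema):
    """Return a list of problems; an empty list means the record matches."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    return problems


# Hypothetical schema for a price record; field names are illustrative.
PRICE_SCHEMA = {"symbol": str, "price": float, "timestamp": int}

ok = validate_record(
    {"symbol": "LTC", "price": 75.0, "timestamp": 1700000000}, PRICE_SCHEMA
)
changed = validate_record({"symbol": "LTC", "price": "75.0"}, PRICE_SCHEMA)
```

Here `ok` is empty, while `changed` reports that `price` arrived as a string and `timestamp` is missing, the kind of silent schema drift that monitoring should surface.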

Yes. Polling HTTP APIs at short intervals can approximate near-real-time, but push-based models (webhooks, streaming APIs, WebSockets, or event streams) are often more efficient and lower latency for real-time needs.
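
The cost of polling shows up in a small sketch. A scripted data source stands in for a real HTTP call, and the function name and parameters are illustrative assumptions.

```python
import time


def poll_for_changes(fetch, interval, max_polls, sleep=time.sleep):
    """Call `fetch` repeatedly and yield a value only when it changes."""
    last = object()  # sentinel that never equals a real value
    for i in range(max_polls):
        current = fetch()
        if current != last:
            yield current
            last = current
        if i < max_polls - 1:
            sleep(interval)


# A scripted data source stands in for a real HTTP call.
readings = iter([100, 100, 101, 101, 102])
updates = list(
    poll_for_changes(
        lambda: next(readings), interval=1.0, max_polls=5, sleep=lambda s: None
    )
)
```

Five polls produce only three updates, so two requests returned nothing new. A push-based channel would deliver just the three changes, which is why it is often the more efficient option.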

Evaluate documentation, uptime history, data freshness, pricing, rate limits, privacy and licensing, and community support. For data or AI integrations, prioritize consistent schemas, sandbox access, and clear SLAs.

Start with principles like consistent resource naming, strong documentation (OpenAPI/Swagger), automated testing, and security by design. Study public APIs from major platforms and use tools that validate contracts and simulate client behavior.

This article is for educational and informational purposes only. It does not constitute investment advice, financial recommendations, or endorsements. Readers should perform independent research and consult qualified professionals where appropriate.


APIs — short for application programming interfaces — are the invisible connectors that let software systems communicate, share data, and build layered services. Whether you’re building a mobile app, integrating a payment gateway, or connecting an AI model to live data, understanding what an API does and how it behaves is essential for modern product and research teams.

An API is a defined set of rules, protocols, and tools that lets one software component request services or data from another. Conceptually, an API is an interface: it exposes specific functions and data structures while hiding internal implementation details. That separation supports modular design, reusability, and clearer contracts between teams or systems.

Common API categories include:

At a high level, interaction with an API follows a request-response model. A client sends a request to an endpoint with a method (e.g., GET, POST), optional headers, and a payload. The server validates the request, performs logic or database operations, and returns a structured response. Key concepts include:

Advanced setups add caching, pagination, versioning, and webhook callbacks for asynchronous events. GraphQL, in contrast to REST, enables clients to request exactly the fields they need, reducing over- and under-fetching in many scenarios.
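
Of the features mentioned above, pagination is the one clients most often have to code around. The sketch below follows a cursor until the server reports no more pages; `fetch_page`, the `next_cursor` field, and the sample data are hypothetical stand-ins for a real paginated endpoint.

```python
def fetch_page(cursor=None, page_size=2):
    """Stand-in for an API call that returns one page plus a next cursor."""
    items = ["BTC", "ETH", "LTC", "SHIB", "WBT"]  # pretend server-side data
    start = cursor or 0
    end = start + page_size
    next_cursor = end if end < len(items) else None
    return {"items": items[start:end], "next_cursor": next_cursor}


def fetch_all(fetch=fetch_page):
    """Follow next_cursor until the server signals there are no more pages."""
    results, cursor = [], None
    while True:
        page = fetch(cursor)
        results.extend(page["items"])
        cursor = page["next_cursor"]
        if cursor is None:
            return results


all_items = fetch_all()
```

Real APIs differ in how they signal the next page (a cursor, a page number, or a link header), so the loop condition should follow the provider's documentation.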

APIs are foundational in nearly every digital industry. Example use cases include:

In AI and research workflows, APIs let teams feed models with curated live data, automate labeling pipelines, or orchestrate multi-step agent behavior. In crypto, programmatic access to market and on-chain signals enables analytics, monitoring, and application integration without manual data pulls.

Designing and consuming APIs requires intentional choices: clear documentation, predictable error handling, and explicit versioning reduce integration friction. Security measures should include:

When integrating third-party APIs—especially for sensitive flows like payments or identity—run scenario analyses for failure modes, data consistency, and latency. For AI-driven systems, consider auditability and reproducibility of inputs and outputs to support troubleshooting and model governance.

Q: What is the simplest way to think about an API?

A: Think of an API as a waiter in a restaurant: it takes a client’s request, communicates with the kitchen (the server), and delivers a structured response. The waiter abstracts the kitchen’s complexity.

Q: Which API styles should I consider for a new project?

A: Common choices are REST for broad compatibility, GraphQL for flexible queries, and gRPC for high-performance microservices. Selection depends on client needs, payload shape, and latency requirements.

Q: What authentication methods are typical?

A: Typical methods include API keys for simple access, OAuth2 for delegated access, JWT tokens for stateless auth, and mutual TLS for high-security environments.

Q: What should teams monitor to reduce API risk?

A: Monitor for excessive request volumes, suspicious endpoints, unusual payloads, and repeated failed auth attempts. Regularly review access scopes and rotate credentials.

Q: How do AI systems typically use APIs?

A: AI systems use APIs to fetch data for training or inference, send model inputs to inference endpoints, and collect telemetry. Well-documented APIs support reproducible experiments and production deployment.

This article is for educational and informational purposes only. It does not provide financial, legal, or professional advice. Evaluate third-party services carefully and consider security, compliance, and operational requirements before integration.


APIs (application programming interfaces) are the invisible connectors that let software systems talk to each other. Whether you open a weather app, sign in with a social account, or call a machine-learning model, an API is usually orchestrating the data exchange behind the scenes. This guide explains what an API is, how APIs work, common types and use cases, and practical frameworks to evaluate or integrate APIs into projects.

An API is a set of rules, protocols, and tools that defines how two software components communicate. At its simplest, an API specifies the inputs a system accepts, the outputs it returns, and the behavior in between. APIs abstract internal implementation details so developers can reuse capabilities without understanding the underlying codebase.

Key concepts:

Most modern APIs use HTTP as the transport protocol and follow architectural styles such as REST or GraphQL. A typical interaction looks like this:

APIs also expose documentation and machine-readable specifications (OpenAPI/Swagger, RAML) that describe available endpoints, parameters, data models, and expected responses. Tools can generate client libraries and interactive docs from these specs, accelerating integration.

APIs serve different purposes depending on design and context:

Use cases span the product lifecycle: integrating third-party services, composing microservices, extending platforms, or enabling AI models to fetch and write data programmatically.

When selecting or integrating an API, apply a simple checklist to reduce technical risk and operational friction:

Operationally, start with a sandbox key and integrate incrementally: mock responses in early stages, implement retry/backoff and circuit breakers, and monitor usage and costs in production.
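
A circuit breaker can be sketched in a few lines. The thresholds below are arbitrary, and the class is a simplified illustration rather than a production-ready implementation.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency until a cool-down period has passed."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed (healthy)

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: skipping call")
            self.opened_at = None  # cool-down over: allow a trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure count
        return result
```

While the circuit is open, calls fail immediately instead of piling onto a struggling service. After the cool-down a single trial call decides whether the circuit closes again or reopens.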

Build Smarter Crypto Apps & AI Agents with Token Metrics

Token Metrics provides real-time prices, trading signals, and on-chain insights all from one powerful API. Grab a Free API Key

REST organizes resources as endpoints and often returns fixed data shapes per endpoint. GraphQL exposes a single endpoint where clients request the exact fields they need. REST is simple and cache-friendly; GraphQL reduces over-fetching but can require more server-side control and caching strategies.
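To make the contrast concrete, here is a small sketch of the two request styles. The `USER` record, the `user(id: ...)` query field, and both function names are assumptions made up for illustration; no real service or schema is implied.

```python
import json

# Hypothetical record used only for this illustration.
USER = {"id": 123, "name": "Ada", "email": "ada@example.com", "plan": "pro"}


def rest_get_user(user_id):
    """REST style: the endpoint fixes the shape, so the client receives every field."""
    return dict(USER) if user_id == USER["id"] else None


def graphql_request_body(user_id, fields):
    """GraphQL style: the client lists the fields it wants in the query document."""
    selection = " ".join(fields)
    query = f"query {{ user(id: {user_id}) {{ {selection} }} }}"
    # GraphQL over HTTP is typically a POST with a JSON body holding the query.
    return json.dumps({"query": query})
```

A client that only needs the name and email would send `graphql_request_body(123, ["name", "email"])`, whereas the REST call returns all four fields whether or not they are used.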

API keys are simple tokens issued to identify a client and are easy to use for server-to-server interactions. OAuth provides delegated access where a user can authorize a third-party app to act on their behalf without sharing credentials; it's essential for user-consent flows.
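On the wire, the difference often comes down to which header carries the credential. The sketch below builds a request without sending it. The `X-API-Key` header name is an assumption, since providers choose their own header or query parameter; the `Authorization: Bearer` form is the standard way to present an OAuth 2.0 access token.

```python
import urllib.request


def build_request(url, api_key=None, bearer_token=None):
    """Build (but do not send) a GET request carrying one kind of credential."""
    headers = {"Accept": "application/json"}
    if api_key is not None:
        # Hypothetical header name; check the provider's documentation.
        headers["X-API-Key"] = api_key
    elif bearer_token is not None:
        # OAuth 2.0 access tokens are presented as bearer tokens.
        headers["Authorization"] = f"Bearer {bearer_token}"
    return urllib.request.Request(url, headers=headers, method="GET")
```

In both cases the credential belongs in server-side configuration or a secrets store, not in source code or a public client.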

Yes. OpenAPI (formerly Swagger) is widely used for REST APIs and supports automated client generation and interactive documentation. GraphQL has its own schema specification and introspection capabilities. Adopting standards improves developer experience significantly.

Common practices include strong authentication, TLS encryption, input validation, explicit authorization, rate limiting, and logging. For sensitive data, consider data minimization, field-level encryption, and strict access controls.

AI models can call APIs to fetch external context, enrich inputs, or persist outputs. Examples include retrieving live market data, fetching user profiles, or invoking specialized ML inference services. Manage latency, cost, and error handling when chaining many external calls in a pipeline.

This article is for educational and informational purposes only. It does not constitute professional, legal, or financial advice. Evaluate any API, provider, or integration according to your own technical, legal, and security requirements before use.

APIs from Google power a huge portion of modern applications, from location-aware mobile apps to automated data workflows in the cloud. Understanding how Google API endpoints, authentication, quotas, and client libraries fit together helps developers build reliable, maintainable integrations that scale. This guide breaks down the most practical aspects of working with Google APIs and highlights research and AI tools that can streamline development.

"Google API" is an umbrella term for a wide range of services offered by Google, including but not limited to Google Cloud APIs (Compute, Storage, BigQuery), Maps and Places, OAuth 2.0 identity, Drive, Sheets, and machine learning APIs like Vision and Translation. Each service exposes RESTful endpoints and often provides SDKs in multiple languages (Node.js, Python, Java, Go, and more).

Key dimensions to evaluate when selecting a Google API:

Popular categories and what developers commonly use them for:

Choosing the right API often starts with mapping product requirements to the available endpoints. For example, if you need user authentication and access to Google Drive files, combine OAuth 2.0 with the Drive API rather than inventing a custom flow.

Follow these practical steps to reduce friction and improve reliability:

These patterns reduce operational surprises and make integrations more maintainable over time.

Security and quota constraints often shape architecture decisions:

Secure-by-design implementations and proactive quota management reduce operational risk when moving from prototype to production.

Combining Google APIs with AI tooling unlocks new workflows: use Vision API to extract entities from images, then store structured results in BigQuery for analytics; call Translation or Natural Language for content normalization before indexing. When experimenting with AI-driven pipelines, maintain traceability between raw inputs and transformed outputs to support auditing and debugging.

AI-driven research platforms like Token Metrics can help developers prototype analytics and compare signal sources by aggregating on-chain and market datasets; such tools may inform how you prioritize data ingestion and model inputs when building composite systems that include external data alongside Google APIs.

Google APIs are a collection of RESTful services and SDKs that grant programmatic access to Google products and cloud services. They differ in scope and SLAs from third-party APIs by integrating with Google Cloud's IAM, billing, and monitoring ecosystems.

Use OAuth 2.0 for user-level access where users must grant permission. For server-to-server calls, use service accounts with short-lived tokens. API keys are acceptable for public, limited-scope requests like simple Maps access but carry higher security risk if exposed.

Monitor quotas in Google Cloud Console under the "IAM & Admin" and "APIs & Services" sections. If you need more capacity, submit a quota increase request with usage patterns and justification; Google evaluates requests based on scope and safety.

Cost depends on API type and usage volume. Use the Google Cloud Pricing Calculator for services like BigQuery or Cloud Storage, and review per-request pricing for Maps and Vision APIs. Track costs via billing reports and set alerts to avoid surprises.

Client libraries are not strictly necessary, but they simplify authentication flows, retries, and response parsing. If you need maximum control or a minimal runtime, you can call REST endpoints directly with standard HTTP libraries.

This article is educational and technical in nature. It does not provide financial, legal, or investment advice. Evaluate APIs and third-party services against your own technical, security, and compliance requirements before use.

APIs are the connective tissue of modern software. As organizations expose more endpoints to partners, internal teams, and third-party developers, effective API management becomes a competitive and operational imperative. This article breaks down practical frameworks, governance guardrails, and monitoring strategies that help teams scale APIs securely and reliably without sacrificing developer velocity.

API management is the set of practices, tools and processes that enable teams to design, publish, secure, monitor and monetize application programming interfaces. At its core it addresses three recurring challenges: consistent access control, predictable performance, and discoverability for developers. Well-managed APIs reduce friction for consumers, decrease operational incidents, and support governance priorities such as compliance and data protection.

Think of API management as a lifecycle discipline: from design and documentation to runtime enforcement and iterative refinement. Organizations that treat APIs as products (measuring adoption, latency, error rates, and business outcomes) are better positioned to scale integrations without accumulating technical debt.

Security and governance are non-negotiable for production APIs. Implement a layered approach:

Combining automated policy enforcement at an API gateway with a governance framework (a named owner for each API, review gates, and versioning rules) ensures changes are controlled without slowing legitimate feature delivery.

Developer experience (DX) determines adoption. Treat APIs as products by providing clear documentation, SDKs and a self-service developer portal. Key practices include:

Metrics to track DX include signups, first successful call time, and repeat usage per key. These are leading indicators of whether an API is fulfilling its product intent.

Operational visibility is essential for API management. Implement monitoring at multiple layers (gateway, service, and database) to triangulate causes when issues occur. Core telemetry includes:

Observability practices—distributed tracing, structured logs, and context propagation—help teams move from alert fatigue to actionable incident response. Build runbooks that map common alerts to remediation steps and owners.

Adopt an incremental roadmap rather than a big-bang rollout. A pragmatic sequence looks like:

Choose tools that match team maturity: managed API platforms accelerate setup for companies lacking infra resources, while open-source gateways provide control for those with specialized needs. Evaluate vendors on extensibility, observability integrations, and policy-as-code support to avoid lock-in.

API management encompasses the processes and tools required to publish, secure, monitor, and monetize APIs. It matters because it enables predictable, governed access to services while maintaining developer productivity and operational reliability.

Common components include an API gateway (auth, routing, rate limiting), developer portal (docs, keys), analytics and monitoring systems (metrics, traces), and lifecycle tooling (design, versioning, CI/CD integrations).

Implement defense-in-depth: centralized authentication, token validation, input schema checks, rate limits, and continuous auditing. Shift security left by validating contracts and scanning specs before deployment.
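As one illustration of the input schema checks mentioned above, a gateway or handler could reject payloads that do not match a declared contract. This is a deliberately tiny sketch: `validate` and the flat field-to-type schema are assumptions for the example, and a real deployment would more likely validate against a JSON Schema or OpenAPI definition.

```python
def validate(payload, schema):
    """Return a list of readable errors; an empty list means the payload conforms."""
    errors = []
    for field, expected_type in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}")
    # Rejecting unknown fields keeps unexpected input from reaching business logic.
    for field in payload:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors
```

Returning all errors at once, rather than failing on the first, tends to give API consumers more useful 400 responses.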

Track latency percentiles, error rates, traffic patterns, and consumer-specific usage. Pair operational metrics with business KPIs (e.g., API-driven signups) to prioritize work that affects outcomes.

Use explicit versioning, deprecation windows, and dual-running strategies where consumers migrate incrementally. Communicate changes via the developer portal and automated notifications tied to API keys.

Introduce a gateway early when multiple consumers, partners, or internal teams rely on APIs. A gateway centralizes cross-cutting concerns and reduces duplicated security and routing logic.

This article is for educational and informational purposes only. It provides neutral, analytical information about API management practices and tools and does not constitute professional or investment advice.

APIs are the connective tissue of modern software: they expose functionality, move data, and enable integrations across services, devices, and platforms. A well-designed web API shapes developer experience, system resilience, and operational cost. This article breaks down core concepts, common architectures, security and observability patterns, and practical steps to build and maintain reliable web APIs without assuming a specific platform or vendor.

A web API (Application Programming Interface) is an HTTP-accessible interface that lets clients interact with server-side functionality. APIs can return JSON, XML, or other formats and typically define a contract of endpoints, parameters, authentication requirements, and expected responses. They matter because they enable modularity: front-ends, mobile apps, third-party integrations, and automation tools can all reuse the same backend logic.

When evaluating or designing an API, consider the consumer experience: predictable endpoints, clear error messages, consistent versioning, and comprehensive documentation reduce onboarding friction for integrators. Think of an API as a public product: its usability directly impacts adoption and maintenance burden.

There are several architectural approaches to web APIs. RESTful (resource-based) design emphasizes nouns and predictable HTTP verbs. GraphQL centralizes query flexibility into a single endpoint and lets clients request only the fields they need. gRPC is used for low-latency, binary RPC between services.

Key design practices:

Choose the pattern that aligns with your performance, flexibility, and developer ergonomics goals, and make that decision explicit in onboarding docs.

Security must be built into an API from day one. Common controls include TLS for transport, OAuth 2.0 / OpenID Connect for delegated authorization, API keys for service-to-service access, and fine-grained scopes for least-privilege access. Input validation, output encoding, and strict CORS policies guard against common injection and cross-origin attacks.

Operational protections such as rate limiting, quotas, and circuit breakers help preserve availability if a client misbehaves or a downstream dependency degrades. Design your error responses to be informative to developers but avoid leaking internal implementation details. Centralized authentication and centralized secrets management (vaults, KMS) reduce duplication and surface area for compromise.
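A circuit breaker of the kind mentioned above can be sketched in a few lines. The class name, thresholds, and the use of `RuntimeError` for skipped calls are all assumptions for illustration; production libraries add per-error policies, metrics, and thread safety.

```python
import time


class CircuitBreaker:
    """Skip calls for `reset_after` seconds after `threshold` consecutive failures."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed (calls flow)

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call skipped")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0  # any success closes the circuit again
        return result
```

While the circuit is open, callers fail fast instead of piling more load onto a dependency that is already struggling.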

Performance considerations span latency, throughput, and resource efficiency. Use caching (HTTP cache headers, CDN, or in-memory caches) to reduce load on origin services. Employ pagination, partial responses, and batch endpoints to avoid overfetching. Instrumentation is essential: traces, metrics, and logs help correlate symptoms, identify bottlenecks, and measure SLAs.
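Pagination is one of the simpler ways to avoid overfetching. The sketch below assumes a response envelope with `data` and `next_cursor` keys and uses a plain list offset as the cursor; both are illustrative choices, and many real APIs use opaque cursors instead.

```python
def paginate(items, cursor=0, limit=2):
    """Server side: return one page plus the cursor for the next page (None when done)."""
    page = items[cursor:cursor + limit]
    next_cursor = cursor + limit if cursor + limit < len(items) else None
    return {"data": page, "next_cursor": next_cursor}


def fetch_all(items, limit=2):
    """Client side: follow next_cursor until the server reports no more pages."""
    results, cursor = [], 0
    while cursor is not None:
        response = paginate(items, cursor, limit)
        results.extend(response["data"])
        cursor = response["next_cursor"]
    return results
```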

Testing should be layered: unit tests for business logic, contract tests against API schemas, integration tests for end-to-end behavior, and load tests that emulate real-world usage. Observability tools and APMs provide continuous insight; AI-driven analytics platforms such as Token Metrics can help surface unusual usage patterns and prioritize performance fixes based on impact.

REST exposes multiple endpoints that represent resources and rely on HTTP verbs for operations. It is simple and maps well to HTTP semantics. GraphQL exposes a single endpoint where clients request precisely the fields they need, which reduces overfetching and can simplify mobile consumption. GraphQL adds complexity in query planning and caching; choose based on client needs and team expertise.

Prefer backward-compatible changes over breaking changes. Use semantic versioning for major releases, and consider header-based versioning or URI version prefixes when breaking changes are unavoidable. Maintain deprecation schedules and communicate timelines in documentation and response headers so clients can migrate predictably.
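One way to combine URI version prefixes with deprecation signals in response headers is sketched below. The version table and its date are hypothetical; the `Sunset` header itself is defined in RFC 8594 and carries an HTTP-date after which the resource may stop responding.

```python
from datetime import datetime, timezone
from email.utils import format_datetime

# Hypothetical versions mapped to a sunset date (None means no retirement planned).
SUPPORTED = {"v1": datetime(2026, 1, 1, tzinfo=timezone.utc), "v2": None}


def resolve_version(path):
    """Split '/v1/users' into ('v1', '/users'); return (None, path) without a known prefix."""
    prefix, _, rest = path.lstrip("/").partition("/")
    if prefix in SUPPORTED:
        return prefix, "/" + rest
    return None, path


def version_headers(version):
    """Headers to attach to every response served from the given version."""
    sunset = SUPPORTED.get(version)
    if sunset is None:
        return {}
    # usegmt=True yields the HTTP-date form, e.g. 'Thu, 01 Jan 2026 00:00:00 GMT'.
    return {"Sunset": format_datetime(sunset, usegmt=True)}
```

Clients that log or alert on the `Sunset` header get a machine-readable migration deadline alongside the documentation.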

OAuth 2.0 and OpenID Connect are standard for delegated access and single-sign-on. For machine-to-machine communication, use short-lived tokens issued by a trusted authorization server. API keys can be simple to implement but should be scoped, rotated regularly, and never embedded in public clients without additional protections.

Implement synthetic monitoring for critical endpoints, collect real-user metrics (latency percentiles, error rates), and instrument distributed tracing to follow requests across services. Run scheduled contract tests against staging and production-like environments, and correlate incidents with deployment timelines and dependency health.

Make additive, non-breaking changes where possible: add new fields rather than changing existing ones, and preserve default behaviors. Document deprecated fields and provide feature flags to gate new behavior. Maintain versioned client libraries to give consumers time to upgrade.

Disclaimer

This article is educational and technical in nature. It does not provide legal, financial, or investment advice. Implementations should be evaluated with respect to security policies, compliance requirements, and operational constraints specific to your organization.

APIs power modern software by exposing discrete access points called endpoints. Whether you're integrating a third-party data feed, building a microservice architecture, or wiring a WebSocket stream, understanding what an API endpoint is and how to design, secure, and monitor one is essential for robust systems.

An API endpoint is a network-accessible URL or address that accepts requests and returns responses according to a protocol (usually HTTP/HTTPS or WebSocket). Conceptually, an endpoint maps a client intent to a server capability: retrieve a resource, submit data, or subscribe to updates. In a RESTful API, endpoints often follow noun-based paths (e.g., /users/123) combined with HTTP verbs (GET, POST, PUT, DELETE) to indicate the operation.

Key technical elements of an endpoint include:

Endpoints can be public (open to third parties) or private (internal to a service mesh). For crypto-focused data integrations, API endpoints may also expose streaming interfaces (WebSockets) or webhook callbacks for asynchronous events. Token Metrics is one example of an analytics provider that exposes APIs for research workflows.

Different application needs favor different endpoint types and protocols:

Choosing a protocol depends on consistency requirements, latency tolerance, and client diversity. Hybrid architectures often combine REST for configuration and GraphQL/WebSocket for dynamic data.

Good endpoint design improves developer experience and system resilience. Follow these practices:

API schema tools (OpenAPI/Swagger, AsyncAPI) let you define endpoints, types, and contracts programmatically, enabling automated client generation, testing, and mock servers during development.
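To show what such tooling works from, here is a minimal OpenAPI 3.0 document written as a Python dictionary, with a helper that lists its operations. The paths and summaries are made up for illustration, and `list_operations` is a hypothetical helper, far simpler than a real generator.

```python
# Operation keys allowed on an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}

SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Example API", "version": "1.0.0"},
    "paths": {
        "/users": {
            "get": {"summary": "List users", "responses": {"200": {"description": "OK"}}},
            "post": {"summary": "Create a user", "responses": {"201": {"description": "Created"}}},
        },
        "/users/{id}": {
            # Path-level parameters are shared by every operation on this path.
            "parameters": [{"name": "id", "in": "path", "required": True,
                            "schema": {"type": "integer"}}],
            "get": {"summary": "Fetch one user",
                    "responses": {"200": {"description": "OK"},
                                  "404": {"description": "Not found"}}},
        },
    },
}


def list_operations(spec):
    """Return sorted (METHOD, path, summary) tuples for every operation in the spec."""
    ops = []
    for path, item in spec.get("paths", {}).items():
        for key, operation in item.items():
            if key in HTTP_METHODS:  # skip non-operation keys such as "parameters"
                ops.append((key.upper(), path, operation.get("summary", "")))
    return sorted(ops)
```

The same walk over `paths` is the starting point for generating client stubs, mock servers, or contract tests.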

Endpoints are primary attack surfaces. Security and observability are critical:

Operational tooling such as API gateways, service meshes, and managed API platforms provide built-in policy enforcement for security and rate limiting, reducing custom code complexity.

An API is the overall contract and set of capabilities a service exposes; an API endpoint is a specific network address (URI) where one of those capabilities is accessible. Think of the API as the menu and endpoints as the individual dishes.

Use HTTPS only, require authenticated tokens with appropriate scopes, implement rate limits and IP reputation checks, and validate all input. Employ monitoring to detect anomalous traffic patterns and rotate credentials periodically.

Introduce explicit versioning when you plan to make breaking changes to request/response formats or behavior. A major-version prefix in the path (e.g., /v1/) is common and avoids forcing clients to adapt unexpectedly.

Combine per-key quotas, sliding-window or token-bucket algorithms, and burst allowances. Communicate limits via response headers and provide clear error codes and retry-after values so clients can back off gracefully.
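A token bucket is one of the algorithms named above. The sketch below keeps one bucket in memory and reports a retry-after value when a request is refused; the class and parameter names are assumptions, and a per-key limiter would hold one bucket per API key, usually in a shared store.

```python
import time


class TokenBucket:
    """`rate` tokens refill per second, up to `capacity` (the allowed burst size)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self):
        """Return (allowed, retry_after_seconds) for a single request."""
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True, 0.0
        # Time until one whole token is available; suitable for a Retry-After header.
        return False, (1 - self.tokens) / self.rate
```

A refused request would typically map to HTTP 429 with the second value rounded up into `Retry-After`.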

Track request rate (RPS), error rate (4xx/5xx), latency percentiles (p50, p95, p99), and active connections for streaming endpoints. Correlate with upstream/downstream service metrics to identify root causes.
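For a sense of how these numbers are derived, the following sketch computes latency percentiles and an error rate from raw samples using only the standard library. The function names are illustrative; monitoring systems usually compute percentiles from histograms or sketches rather than from full sample lists.

```python
import statistics


def latency_summary(samples_ms):
    """Summarize latency samples as p50/p95/p99 (expects two or more samples)."""
    # quantiles(n=100) returns the 99 cut points for percentiles 1 through 99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def error_rate(status_codes):
    """Fraction of responses with a 4xx or 5xx status code."""
    if not status_codes:
        return 0.0
    return sum(1 for code in status_codes if code >= 400) / len(status_codes)
```

Tail percentiles (p95, p99) matter because an average can look healthy while a meaningful share of requests are slow.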

Choose GraphQL when clients require flexible field selection and you want to reduce overfetching. Prefer REST for simple resource CRUD patterns and when caching intermediaries are important. Consider team familiarity and tooling ecosystem as well.

The information in this article is technical and educational in nature. It is not financial, legal, or investment advice. Implementations should be validated in your environment and reviewed for security and compliance obligations specific to your organization.

Modern web and mobile apps exchange data constantly. At the center of that exchange is the REST API — a widely adopted architectural style that standardizes how clients and servers communicate over HTTP. Whether you are a developer, product manager, or researcher, understanding what a REST API is and how it works is essential for designing scalable systems and integrating services efficiently.

A REST API (Representational State Transfer Application Programming Interface) is a style for designing networked applications. It defines a set of constraints that, when followed, enable predictable, scalable, and loosely coupled interactions between clients (browsers, mobile apps, services) and servers. REST is not a protocol or standard; it is a set of architectural principles introduced by Roy Fielding in 2000.

Key principles include:

A REST API organizes functionality around resources and uses standard HTTP verbs to manipulate them. Common conventions are:

Responses use HTTP status codes to indicate result state (200 OK, 201 Created, 204 No Content, 400 Bad Request, 401 Unauthorized, 404 Not Found, 500 Internal Server Error). Payloads are typically JSON but can be XML or other formats. Endpoints are structured hierarchically, for example: /api/users to list users, /api/users/123 to operate on user with ID 123.
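The conventions above can be illustrated with an in-memory dispatcher for the /api/users paths. This is a toy sketch, not a web framework: `handle`, the `USERS` store, and the payload shapes are assumptions, and only a few verbs are covered.

```python
from http import HTTPStatus

# Hypothetical in-memory store standing in for a database.
USERS = {123: {"id": 123, "name": "Ada"}}


def handle(method, path, body=None):
    """Dispatch a request on /api/users and /api/users/<id>; return (status, payload)."""
    parts = path.strip("/").split("/")
    if parts[:2] != ["api", "users"] or len(parts) > 3:
        return HTTPStatus.NOT_FOUND, None
    if len(parts) == 2:  # collection resource: /api/users
        if method == "GET":
            return HTTPStatus.OK, list(USERS.values())
        if method == "POST":
            if not body or "name" not in body:
                return HTTPStatus.BAD_REQUEST, {"error": "name is required"}
            new_id = max(USERS, default=0) + 1
            USERS[new_id] = {"id": new_id, "name": body["name"]}
            return HTTPStatus.CREATED, USERS[new_id]
        return HTTPStatus.METHOD_NOT_ALLOWED, None
    # Item resource: /api/users/<id>
    if not parts[2].isdigit() or int(parts[2]) not in USERS:
        return HTTPStatus.NOT_FOUND, None
    user_id = int(parts[2])
    if method == "GET":
        return HTTPStatus.OK, USERS[user_id]
    if method == "DELETE":
        del USERS[user_id]
        return HTTPStatus.NO_CONTENT, None
    return HTTPStatus.METHOD_NOT_ALLOWED, None
```

Because `HTTPStatus` members are integers, `handle("GET", "/api/users/123")[0] == 200` holds, which keeps the sketch easy to wire into any HTTP layer.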

Designing a robust REST API involves more than choosing verbs and URIs. Adopt patterns that make APIs understandable, maintainable, and secure:

Following these practices improves interoperability and reduces operational risk.

REST APIs are used across web services, microservices, mobile backends, IoT devices, and third-party integrations. Developers commonly use tools and practices to build and validate APIs:

AI-driven platforms and analytics can speed research and debugging by surfacing usage patterns, anomalies, and integration opportunities. For example, Token Metrics can be used to analyze API-driven data feeds and incorporate on-chain signals into application decision layers without manual data wrangling.

"REST" refers to the architectural constraints described by Roy Fielding; "RESTful" is a colloquial adjective meaning an API that follows REST principles. Not all APIs labeled RESTful implement every REST constraint strictly.

SOAP is a protocol with rigid standards and built-in operations (often used in enterprise systems). GraphQL exposes a single endpoint and lets clients request precise data shapes. REST uses multiple endpoints and standard HTTP verbs. Each approach has trade-offs in flexibility, caching, and tooling.

Version your API before making breaking changes to request/response formats or behavior that existing clients depend on. Common strategies include URI versioning (e.g., /v1/) or header-based versioning.

No. Security must be designed in: use HTTPS/TLS, authenticate requests, validate input, apply authorization checks, and enforce rate limits to reduce abuse. Treat REST APIs like any other public interface that requires protection.

Use API specifications (OpenAPI) to auto-generate docs and client stubs. Combine manual testing tools like Postman with automated integration and contract tests in CI pipelines to ensure consistent behavior across releases.

REST is request/response oriented and not ideal for continuous real-time streams. For streaming, consider WebSockets, Server-Sent Events (SSE), or specialized protocols; REST can still be used for control operations and fallbacks.
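For comparison with request/response, a Server-Sent Events stream is just a long-lived HTTP response whose body is a sequence of text frames. The helper below formats one frame in the `text/event-stream` wire format; the function name and the JSON payload are assumptions for illustration.

```python
import json


def sse_event(data, event=None):
    """Format one Server-Sent Events frame with a JSON payload."""
    lines = []
    if event:
        lines.append(f"event: {event}")  # optional event name for client-side dispatch
    # Each payload line gets its own "data:" field; json.dumps emits a single line here.
    for line in json.dumps(data).splitlines():
        lines.append(f"data: {line}")
    # A blank line terminates the frame.
    return "\n".join(lines) + "\n\n"
```

A server would write such frames to a response with `Content-Type: text/event-stream` and keep the connection open, while ordinary REST endpoints handle subscription setup and other control operations.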

Disclaimer: This article is educational and technical in nature. It does not provide investment or legal advice. The information is intended to explain REST API concepts and best practices, not to recommend specific products or actions.

9450 SW Gemini Dr

PMB 59348

Beaverton, Oregon 97008-7105 US

Token Metrics Media LLC is a regular publication of information, analysis, and commentary focused especially on blockchain technology and business, cryptocurrency, blockchain-based tokens, market trends, and trading strategies.

Token Metrics Media LLC does not provide individually tailored investment advice and does not take a subscriber’s or anyone’s personal circumstances into consideration when discussing investments; nor is Token Metrics Advisers LLC registered as an investment adviser or broker-dealer in any jurisdiction.

Information contained herein is not an offer or solicitation to buy, hold, or sell any security. The Token Metrics team has advised and invested in many blockchain companies. A complete list of their advisory roles and current holdings can be viewed here: https://tokenmetrics.com/disclosures.html/

Token Metrics Media LLC relies on information from various sources believed to be reliable, including clients and third parties, but cannot guarantee the accuracy and completeness of that information. Additionally, Token Metrics Media LLC does not provide tax advice, and investors are encouraged to consult with their personal tax advisors.

All investing involves risk, including the possible loss of money you invest, and past performance does not guarantee future performance. Ratings and price predictions are provided for informational and illustrative purposes, and may not reflect actual future performance.