Top Crypto Trading Platforms in 2025

Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade; it’s a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend or getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints that bring powerful AI-driven insights straight to you, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.

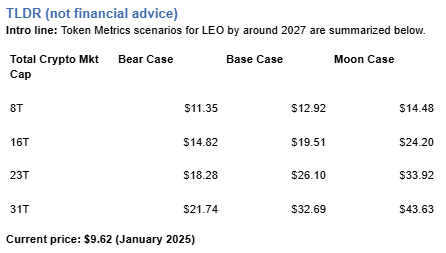

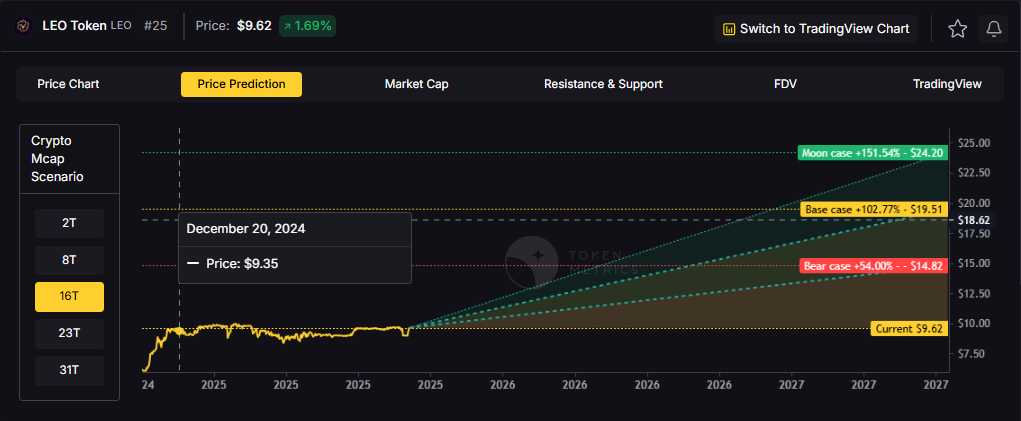

Exchange tokens derive value from trading volume and platform revenue, which links crypto market activity to LEO price action. LEO Token delivers utility through reduced trading fees and enhanced platform services on Bitfinex and iFinex, across Ethereum and EOS. The Token Metrics scenarios below model LEO outcomes across different total crypto market cap environments.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.
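
The market-cap share piece of these bands reduces to one line of arithmetic: implied price equals total crypto market cap times the share a token is assumed to capture, divided by circulating supply. The sketch below assumes that simplified formula only; `implied_price` is a hypothetical helper, the 0.1% share and 1 billion token supply are placeholders rather than LEO's actual figures, and the cycle analogues and TA guardrails are not modeled.

```python
def implied_price(total_market_cap: float, share: float, circulating_supply: float) -> float:
    """Token price implied by capturing `share` of the total crypto market cap.

    Covers only the market-cap share step; cycle analogues and TA guardrails
    are not modeled.
    """
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    if circulating_supply <= 0:
        raise ValueError("circulating_supply must be positive")
    return total_market_cap * share / circulating_supply


# Hypothetical inputs: a 0.1% share of total crypto market cap, 1 billion tokens.
for total_cap in (8e12, 31e12):
    price = implied_price(total_cap, share=0.001, circulating_supply=1e9)
    print(f"${total_cap / 1e12:.0f}T total market cap -> ${price:,.2f}")
```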

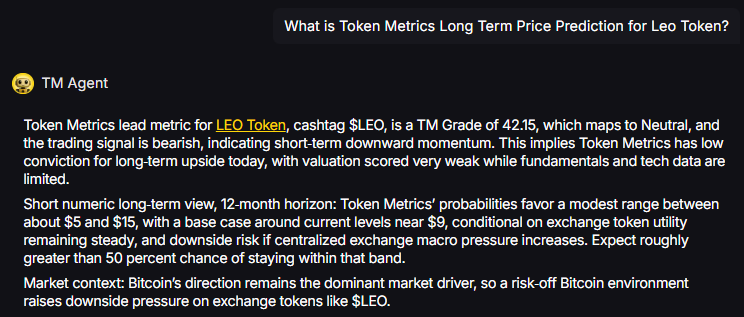

TM Agent baseline: Token Metrics probabilities favor a modest range between about $5 and $15, with a base case near current levels of about $9. This assumes exchange token utility remains steady; downside risk rises if macro pressure on centralized exchanges increases.

Token Metrics scenarios span four market cap tiers reflecting different crypto market maturity levels:

LEO Token is the native utility token of the Bitfinex and iFinex ecosystem, designed to provide benefits like reduced trading fees, enhanced lending and borrowing terms, and access to exclusive features on the platform. It operates on both Ethereum (ERC-20) and EOS blockchains, offering flexibility for users.

The primary role of LEO is to serve as a utility token within the exchange ecosystem, enabling fee discounts, participation in token sales, and other platform-specific advantages. Common usage patterns include holding LEO to reduce trading costs and utilizing it for enhanced platform services, positioning it primarily within the exchange token sector.

What gives LEO value?

LEO accrues value through reduced trading fees and enhanced platform services within the Bitfinex and iFinex ecosystem. Demand drivers include exchange usage and access to platform features, while supply dynamics follow the token’s exchange utility design. Value realization depends on platform activity and user adoption.

What price could LEO reach in the moon case?

Moon case projections range from $14.48 at an $8T total crypto market cap to $43.63 at $31T. These scenarios require maximum market cap expansion and strong exchange activity. Not financial advice.

Curious how these forecasts are made? Token Metrics delivers LEO on-chain grades, forecasts, and deep research on 6,000+ tokens. Instantly compare fundamentals, on-chain scores, and AI-powered predictions.

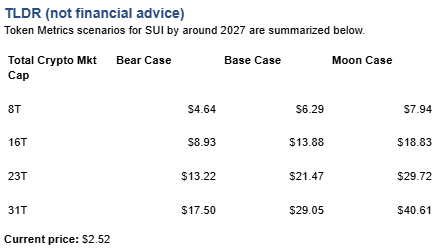

Layer 1 tokens like Sui represent bets on specific blockchain architectures winning developer and user mindshare. SUI carries both systematic crypto risk and unsystematic risk from Sui's technical roadmap execution and ecosystem growth. The multi-chain thesis suggests diversifying across several L1s rather than concentrating in one, since predicting which chains will dominate remains difficult.

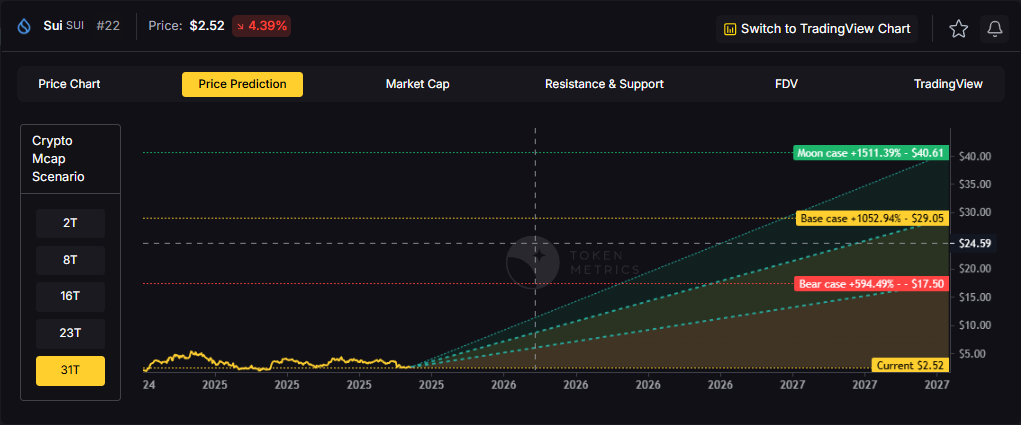

The projections below show how SUI might perform under different market cap scenarios. While Sui may have strong fundamentals, prudent portfolio construction balances L1 exposure across Ethereum, competing smart contract platforms, and Bitcoin to capture the sector without overexposure to any single chain's fate.

Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

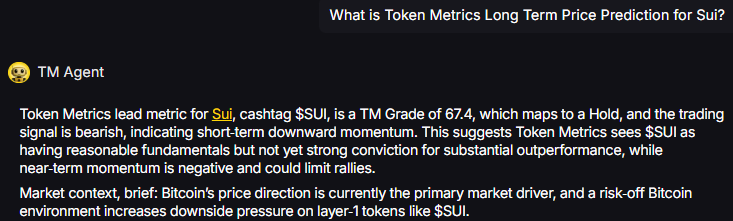

TM Agent baseline: Token Metrics’ lead metric for Sui (cashtag $SUI) is a TM Grade of 67.4%, which maps to a Hold, and the trading signal is bearish, indicating short-term downward momentum. This suggests Token Metrics sees $SUI as having reasonable fundamentals but not yet strong conviction for substantial outperformance, while negative near-term momentum could limit rallies. Market context: Bitcoin’s price direction is currently the primary market driver, and a risk-off Bitcoin environment increases downside pressure on layer-1 tokens like $SUI.

Professional investors across asset classes prefer diversified exposure over concentrated bets for good reason. Sui faces numerous risks (technical vulnerabilities, competitive pressure, regulatory targeting, team execution failure), any of which could derail SUI performance independent of broader market conditions. Token Metrics Indices spread this risk across one hundred tokens so that no single failure can destroy your crypto portfolio.

Diversification becomes especially critical in crypto given the sector's nascency and rapid evolution. Technologies and narratives that dominate today may be obsolete within years as the space matures. By holding SUI exclusively, you're betting not only on crypto succeeding but on Sui specifically remaining relevant. Index approaches hedge against picking the wrong horse while maintaining full crypto exposure.

Token Metrics scenarios span four market cap tiers, each representing different levels of crypto market maturity and liquidity:

These ranges illustrate potential outcomes for concentrated SUI positions, but investors should weigh whether single-asset exposure matches their risk tolerance or whether diversified strategies better suit their objectives.

Sui is a layer-1 blockchain network designed for general-purpose smart contracts and scalable user experiences. It targets high throughput and fast settlement, aiming to support applications that need low-latency interactions and horizontal scaling.

SUI is the native token used for transaction fees and staking, aligning validator incentives and securing the network. It underpins activity across common crypto sectors such as NFTs and DeFi while the ecosystem builds developer tooling and integrations.

Vision: Sui aims to create a highly scalable and low-latency blockchain platform that enables seamless user experiences for decentralized applications. Its vision centers on making blockchain technology accessible and efficient for mainstream applications by removing traditional bottlenecks in transaction speed and cost.

Problem: Many existing blockchains face trade-offs between scalability, security, and decentralization, often resulting in high fees and slow transaction finality during peak usage. This limits their effectiveness for applications requiring instant settlement, frequent interactions, or large user bases, such as games or social platforms. Sui addresses the need for a network that can scale horizontally without sacrificing speed or cost-efficiency.

Solution: Sui uses a unique object-centric blockchain model and the Move programming language to enable parallel transaction processing, allowing high throughput and instant finality for many operations. Its consensus mechanism, Narwhal and Tusk, is optimized for speed and scalability by decoupling transaction dissemination from ordering. The network supports smart contracts, NFTs, and decentralized applications, with an emphasis on developer ease and user experience. Staking is available for network security, aligning with common proof-of-stake utility patterns.
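
To illustrate why an object-centric model allows parallel execution, the sketch below groups transactions into batches in which no two transactions touch the same object, so everything in a batch could run concurrently while conflicting transactions keep their order. It is a simplified model with hypothetical transaction and object IDs (`schedule_batches` is not a Sui API); Sui's real pipeline also distinguishes owned objects from shared objects and orders shared-object transactions through consensus.

```python
from typing import Dict, List, Set


def schedule_batches(txs: Dict[str, Set[str]]) -> List[List[str]]:
    """Group transactions into batches that can execute in parallel.

    `txs` maps each transaction ID (in submission order) to the set of object
    IDs it reads or writes. A transaction goes into the batch right after the
    latest batch containing any of its objects, so conflicting transactions
    keep their relative order while independent ones share a batch.
    """
    batches: List[List[str]] = []
    last_batch: Dict[str, int] = {}  # object ID -> latest batch that touched it
    for tx_id, objects in txs.items():
        level = max((last_batch[obj] + 1 for obj in objects if obj in last_batch), default=0)
        if level == len(batches):
            batches.append([])
        batches[level].append(tx_id)
        for obj in objects:
            last_batch[obj] = level
    return batches


# Hypothetical transactions: t3 reuses coin_a from t1, so it must wait.
txs = {
    "t1": {"coin_a"},
    "t2": {"coin_b"},
    "t3": {"coin_a", "nft_1"},
    "t4": {"coin_c"},
}
print(schedule_batches(txs))
```

Here t1, t2, and t4 touch disjoint objects and share the first batch, while t3 waits for t1 because both use `coin_a`.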

Market Analysis: Sui competes in the layer-1 blockchain space with platforms like Solana, Avalanche, and Aptos, all targeting high-performance decentralized applications. It differentiates itself through its object-based data model and parallel execution, aiming for superior scalability in specific workloads. Adoption drivers include developer tooling, ecosystem incentives, and integration with wallets and decentralized exchanges. The broader market for high-throughput blockchains is driven by demand for scalable Web3 applications, though it faces risks from technical complexity, regulatory uncertainty, and intense competition.

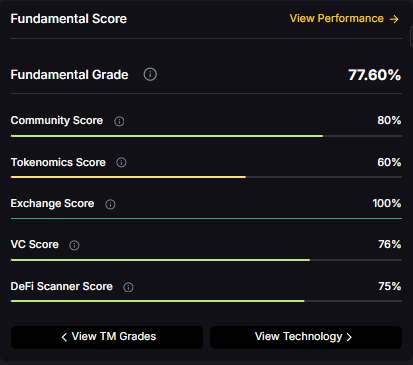

Fundamental Grade: 77.60% (Community 80%, Tokenomics 60%, Exchange 100%, VC 76%, DeFi Scanner 75%).

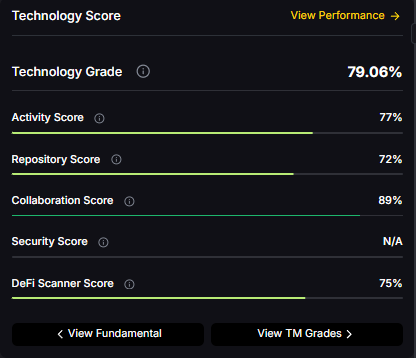

Technology Grade: 79.06% (Activity 77%, Repository 72%, Collaboration 89%, Security N/A, DeFi Scanner 75%).

Token Metrics empowers you to analyze Sui and hundreds of digital assets with AI-driven ratings, on-chain and fundamental data, and index solutions to manage portfolio risk smartly in a rapidly evolving crypto market.

What price could SUI reach in the moon case?

Moon case projections range from $7.94 at an $8T total crypto market cap to $40.61 at $31T. These scenarios assume maximum liquidity expansion and strong Sui adoption. Diversified strategies aim to capture upside across multiple tokens rather than betting exclusively on any single moon scenario. Not financial advice.

What's the risk/reward profile for SUI?

Risk/reward spans from $4.64 to $40.61. Downside risks include regulatory pressure and competitive displacement, while upside drivers include ecosystem growth and favorable liquidity. Concentrated positions amplify both tails, while diversified strategies smooth outcomes.

What are the biggest risks to SUI?

Key risks include regulatory actions, technical issues, competitive pressure from other L1s, and adverse market liquidity. Concentrated SUI positions magnify exposure to these risks. Diversified strategies spread risk across tokens with different profiles, reducing portfolio vulnerability to any single failure point.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, concentration amplifies risk, and diversification is a fundamental principle of prudent portfolio construction. Do your own research and manage risk appropriately.

Most investors understand that diversification matters—the famous "don't put all your eggs in one basket" principle. However, understanding diversification conceptually differs dramatically from implementing it effectively. Poor diversification strategies create illusions of safety while concentrating risk in hidden ways. True diversification requires sophisticated allocation across multiple dimensions simultaneously.

Token Metrics AI Indices provide professional-grade diversification tools, but maximizing their power requires strategic allocation decisions. How much total capital should you allocate to crypto? How should you split that allocation across different indices? How do you balance crypto with traditional assets? What role should conservative versus aggressive indices play?

This comprehensive guide explores portfolio allocation mastery, examining the principles of effective diversification, specific allocation frameworks for different investor profiles, tactical adjustments for changing conditions, and avoiding common diversification mistakes that undermine portfolio performance.

Many investors believe diversification simply means holding many assets. True diversification requires deeper strategic thinking.

Crypto represents one component of comprehensive financial planning. Optimal allocation requires considering how crypto fits within total wealth.

Appropriate crypto allocation varies dramatically based on age, income stability, and financial obligations.

Ages 20-35 (Aggressive Accumulation Phase):

Ages 35-50 (Balanced Growth Phase):

Ages 50-65 (Pre-Retirement Transition):

Ages 65+ (Retirement Distribution):

These frameworks provide starting points—adjust based on individual risk tolerance, wealth level, and financial obligations.

Never invest emergency funds, or money you will need within 3-5 years, in cryptocurrency. Keep 6-12 months of living expenses in high-yield savings accounts or money market funds, completely separate from your investment portfolio.

This liquidity buffer prevents forced selling during market crashes. Without adequate emergency reserves, unexpected expenses force you to liquidate crypto holdings at the worst possible times, turning temporary paper losses into permanent realized losses.
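
The reserve rule is simple arithmetic. This sketch uses hypothetical figures (a household spending $4,000 a month with $80,000 saved and $10,000 earmarked for a purchase in three years) to compute the 6-12 month reserve range and the cash left for investing after funding the full 12-month reserve and setting aside near-term needs. The function names are illustrative only.

```python
from typing import Tuple


def reserve_range(monthly_expenses: float) -> Tuple[float, float]:
    """Emergency reserve of 6 to 12 months of living expenses."""
    return 6 * monthly_expenses, 12 * monthly_expenses


def investable_cash(savings: float, monthly_expenses: float,
                    reserve_months: int = 12, near_term_needs: float = 0.0) -> float:
    """Savings left for investing after funding the emergency reserve and
    setting aside money needed within the next 3-5 years."""
    reserve = reserve_months * monthly_expenses
    return max(0.0, savings - reserve - near_term_needs)


# Hypothetical household figures.
low, high = reserve_range(4_000)
print(f"Reserve target: ${low:,.0f} to ${high:,.0f}")
print(f"Available to invest: ${investable_cash(80_000, 4_000, near_term_needs=10_000):,.0f}")
```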

High-interest debt (credit cards, personal loans above 8-10%) should be eliminated before aggressive crypto investing. The guaranteed "return" from eliminating 18% credit card interest exceeds expected crypto returns on a risk-adjusted basis.

However, low-interest debt (mortgages below 4-5%) can coexist with crypto investing—no need to delay investing until mortgage-free. The opportunity cost of waiting decades to invest exceeds the modest interest savings from accelerated mortgage payments.
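
The two thresholds above can be expressed as a small classification rule. The sketch assumes cut-offs of 8% and 5%, taken from the lower end of the 8-10% high-interest range and the upper end of the 4-5% low-interest range; the guide does not address debt between those levels, so the sketch simply labels it a judgment call. The debts and rates are hypothetical.

```python
from typing import Dict


def debt_priority(annual_rate: float, high: float = 0.08, low: float = 0.05) -> str:
    """Classify a debt with the rough thresholds described above.

    Defaults use the lower end of the 8-10% high-interest range and the upper
    end of the 4-5% low-interest range; adjust them to your situation.
    """
    if annual_rate > high:
        return "pay off first"
    if annual_rate < low:
        return "can coexist with investing"
    return "judgment call"


# Hypothetical debts and annual interest rates.
debts: Dict[str, float] = {"credit card": 0.18, "mortgage": 0.035, "auto loan": 0.065}
for name, rate in debts.items():
    print(f"{name} at {rate:.1%}: {debt_priority(rate)}")
```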

Once you've determined total crypto allocation, the next decision involves distributing that allocation across Token Metrics' various indices.

Structure your crypto allocation across three risk tiers to create balanced exposure:

Conservative Tier (40-50% of crypto allocation):

Conservative indices emphasizing Bitcoin, Ethereum, and fundamentally strong large-cap tokens. This tier provides stability and reliable exposure to crypto's overall growth while limiting volatility.

Suitable indices: Bitcoin-weighted indices, large-cap indices, blue-chip crypto indices

Balanced Tier (30-40% of crypto allocation):

Balanced indices combining established tokens with growth-oriented mid-caps. This tier balances stability and growth potential through strategic diversification.

Suitable indices: Diversified market indices, multi-sector indices, smart contract platform indices

Aggressive Tier (20-30% of crypto allocation):

Aggressive growth indices targeting smaller-cap tokens with highest upside potential. This tier drives outperformance during bull markets while limited position sizing contains downside risk.

Suitable indices: Small-cap growth indices, sector-specific indices (DeFi, gaming, AI), emerging ecosystem indices
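
The tier ranges can be enforced mechanically before money is split. This sketch checks that the chosen tier weights fall inside the guide's ranges and sum to 100%, then converts them into dollar amounts; the 45/35/20 split and the $10,000 allocation are hypothetical, and choosing the actual indices for each tier is left out.

```python
from typing import Dict, Tuple

# Tier ranges from the guide, as fractions of the total crypto allocation.
TIER_RANGES: Dict[str, Tuple[float, float]] = {
    "conservative": (0.40, 0.50),
    "balanced": (0.30, 0.40),
    "aggressive": (0.20, 0.30),
}


def split_crypto_allocation(crypto_amount: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Split a crypto allocation across tiers after validating the weights."""
    if set(weights) != set(TIER_RANGES):
        raise ValueError("weights must cover exactly the three tiers")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("tier weights must sum to 100%")
    for tier, weight in weights.items():
        low, high = TIER_RANGES[tier]
        if not low <= weight <= high:
            raise ValueError(f"{tier} weight {weight:.0%} is outside {low:.0%}-{high:.0%}")
    return {tier: crypto_amount * weight for tier, weight in weights.items()}


# Hypothetical example: $10,000 crypto allocation split 45/35/20.
print(split_crypto_allocation(10_000, {"conservative": 0.45, "balanced": 0.35, "aggressive": 0.20}))
```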

Different crypto sectors outperform during different market phases. Tactical sector rotation within your allocation aims to capture these shifts:

Token Metrics indices provide sector-specific options allowing tactical overweighting of sectors positioned for outperformance while maintaining diversified core holdings.

Blockchain ecosystems exhibit different characteristics and growth trajectories. Diversifying across multiple ecosystems reduces single-platform concentration risk:

Token Metrics indices spanning multiple ecosystems provide automatic geographic and platform diversification preventing single-ecosystem concentration risk.

Markets move constantly, causing allocations to drift from targets. Systematic rebalancing maintains desired risk exposure and forces beneficial "buy low, sell high" discipline.

The simplest approach rebalances on fixed schedules regardless of market conditions:

More frequent rebalancing captures opportunities faster but triggers more taxable events in non-retirement accounts. Less frequent rebalancing reduces trading costs but allows greater allocation drift.
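
Calendar rebalancing comes down to computing, on each scheduled date, the trades that move every position back to its target weight. The sketch below assumes hypothetical 45/35/20 tier targets and made-up quarter-end values; how often you run it (monthly, quarterly, annually) is the schedule choice discussed above.

```python
from typing import Dict


def rebalance_trades(holdings: Dict[str, float], targets: Dict[str, float]) -> Dict[str, float]:
    """Dollar trades that restore target weights: positive = buy, negative = sell."""
    if set(holdings) != set(targets):
        raise ValueError("holdings and targets must list the same positions")
    if abs(sum(targets.values()) - 1.0) > 1e-9:
        raise ValueError("target weights must sum to 100%")
    total = sum(holdings.values())
    return {name: targets[name] * total - value for name, value in holdings.items()}


# Hypothetical quarter-end values after the aggressive tier rallied.
holdings = {"conservative": 4_000, "balanced": 3_500, "aggressive": 4_500}
targets = {"conservative": 0.45, "balanced": 0.35, "aggressive": 0.20}
for name, trade in rebalance_trades(holdings, targets).items():
    print(f"{name}: {'buy' if trade >= 0 else 'sell'} ${abs(trade):,.0f}")
```

The rallying aggressive tier is trimmed and the lagging tiers are topped up, which is the "buy low, sell high" discipline described earlier.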

More sophisticated approaches rebalance when allocations drift beyond predetermined thresholds:

Threshold rebalancing responds to actual market movements rather than arbitrary calendar dates, potentially improving timing while reducing unnecessary transactions.
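
A threshold rule only needs to know how far each position has drifted from its target. The sketch below assumes a hypothetical band of 5 percentage points and illustrative 45/35/20 targets; it returns the positions that breached the band, and an empty list means no rebalance is due.

```python
from typing import Dict, List


def drifted_positions(holdings: Dict[str, float], targets: Dict[str, float],
                      band: float = 0.05) -> List[str]:
    """Positions whose weight is more than `band` (absolute) away from target.

    An empty list means no rebalance is needed under this threshold rule.
    """
    total = sum(holdings.values())
    return [name for name, value in holdings.items()
            if abs(value / total - targets[name]) > band]


targets = {"conservative": 0.45, "balanced": 0.35, "aggressive": 0.20}
# Hypothetical values: current weights are 38% / 34% / 28%.
print(drifted_positions({"conservative": 3_800, "balanced": 3_400, "aggressive": 2_800}, targets))
```

With weights of 38%, 34%, and 28%, the conservative and aggressive tiers have drifted more than 5 points and would trigger a rebalance, while the balanced tier (1 point off) would not on its own.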

In taxable accounts, coordinate rebalancing with tax considerations:

This tax awareness preserves more wealth for compounding rather than sending it to tax authorities.
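
The specific tax tactics are not listed here, so this sketch assumes one common approach: rebalancing with new contributions, which directs fresh cash to underweight positions instead of selling appreciated ones and realizing taxable gains. `allocate_contribution` is a hypothetical helper, and the holdings, targets, and $3,000 contribution are made-up figures.

```python
from typing import Dict


def allocate_contribution(holdings: Dict[str, float], targets: Dict[str, float],
                          cash: float) -> Dict[str, float]:
    """Split new cash across positions without selling anything.

    Each position's shortfall is measured against its target value after the
    contribution, and the cash is divided in proportion to those shortfalls.
    If no position is overweight, this lands exactly on target; otherwise it
    moves the portfolio toward target without realizing any gains.
    """
    if abs(sum(targets.values()) - 1.0) > 1e-9:
        raise ValueError("target weights must sum to 100%")
    if cash <= 0:
        return {name: 0.0 for name in holdings}
    new_total = sum(holdings.values()) + cash
    shortfalls = {name: max(0.0, targets[name] * new_total - value)
                  for name, value in holdings.items()}
    # With weights summing to 1, total shortfall is always >= cash, so scale <= 1.
    scale = cash / sum(shortfalls.values())
    return {name: shortfall * scale for name, shortfall in shortfalls.items()}


# Hypothetical portfolio with an overweight aggressive tier and $3,000 of new cash.
holdings = {"conservative": 4_000, "balanced": 3_500, "aggressive": 4_500}
targets = {"conservative": 0.45, "balanced": 0.35, "aggressive": 0.20}
print(allocate_contribution(holdings, targets, 3_000))
```

In the example the overweight aggressive tier receives nothing, so the portfolio moves toward target without any sales.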

Effective diversification includes position sizing rules preventing excessive concentration even within diversified portfolios.

Establish maximum position sizes preventing any single index from dominating:

These guardrails maintain diversification even when particular indices perform extremely well, preventing overconfidence from creating dangerous concentration.
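
A maximum position size is easy to check mechanically. The sketch assumes a hypothetical 40% cap and made-up index holdings, and reports how many dollars each oversized position would need to shed to get back under the cap.

```python
from typing import Dict


def excess_over_cap(holdings: Dict[str, float], cap: float) -> Dict[str, float]:
    """Dollar amount by which each position exceeds `cap` of the portfolio.

    Only oversized positions are returned. Trimmed proceeds redeployed inside
    the same portfolio keep the total unchanged, but re-check the cap
    afterwards, since redeployment can push another position over the limit.
    """
    limit = cap * sum(holdings.values())
    return {name: value - limit for name, value in holdings.items() if value > limit}


# Hypothetical indices and a hypothetical 40% cap.
holdings = {"large-cap": 5_000, "defi": 3_000, "ai": 2_000}
print(excess_over_cap(holdings, cap=0.40))
```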

While crypto indices should be held long-term through volatility, establish strategic loss limits for total crypto allocation relative to overall portfolio:

These strategic boundaries prevent crypto volatility from creating portfolio-level instability while maintaining beneficial long-term exposure.
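
The specific limits are not listed here, so this sketch assumes one possible rule: measure crypto's loss since its peak as a fraction of the whole portfolio's value at that peak, and flag a review when it exceeds a hypothetical 5%. The functions and figures are illustrative; note how a 45% crypto drawdown costs a portfolio with a 10% crypto allocation only 4.5%.

```python
def portfolio_impact(crypto_peak: float, crypto_now: float, portfolio_at_peak: float) -> float:
    """Crypto loss since its peak, as a fraction of the whole portfolio's value at that peak."""
    return max(0.0, crypto_peak - crypto_now) / portfolio_at_peak


def breaches_loss_limit(crypto_peak: float, crypto_now: float, portfolio_at_peak: float,
                        limit: float = 0.05) -> bool:
    """True when the portfolio-level crypto loss exceeds `limit` (hypothetical 5% default)."""
    return portfolio_impact(crypto_peak, crypto_now, portfolio_at_peak) > limit


# Hypothetical: $200,000 portfolio holding $20,000 of crypto at the crypto peak.
print(portfolio_impact(20_000, 11_000, 200_000))    # crypto down 45%, portfolio hit 4.5%
print(breaches_loss_limit(20_000, 8_000, 200_000))  # crypto down 60%, portfolio hit 6%
```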

Beyond basic frameworks, advanced strategies optimize allocation for specific goals and market conditions.

The barbell approach combines extremely conservative and extremely aggressive positions while avoiding middle ground:

This approach provides downside protection through conservative core while capturing maximum upside through concentrated aggressive positions—potentially delivering superior risk-adjusted returns versus balanced approaches.

Maintain stable core allocation (70% of crypto) in diversified indices while using tactical satellite positions (30%) rotated based on market conditions and opportunities:

This approach combines buy-and-hold stability with active opportunity capture.
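
The 70/30 split is straightforward to compute. This sketch assumes the satellite sleeve is divided equally among the tactical positions, which the guide does not specify; the two sector satellites and the $10,000 allocation are hypothetical.

```python
from typing import Dict, List


def core_satellite(crypto_amount: float, satellites: List[str],
                   core_share: float = 0.70) -> Dict[str, float]:
    """Split a crypto allocation into a core and equally weighted satellites."""
    if not satellites:
        raise ValueError("need at least one satellite position")
    core = crypto_amount * core_share
    per_satellite = (crypto_amount - core) / len(satellites)
    plan = {"core": core}
    plan.update({name: per_satellite for name in satellites})
    return plan


# Hypothetical: $10,000 crypto allocation with two tactical sector satellites.
print(core_satellite(10_000, ["DeFi satellite", "AI satellite"]))
```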

Rather than allocating by dollar amounts, allocate by risk contribution, so that each index contributes equally to portfolio volatility:

This sophisticated approach prevents high-volatility positions from dominating portfolio risk even with modest dollar allocations.
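
The simplest version of risk-based allocation is inverse-volatility weighting, often called naive risk parity. It equalizes each index's standalone risk (weight times volatility) and gives exactly equal risk contributions only when all pairwise correlations are equal, so with real, unevenly correlated crypto indices it is an approximation; full risk parity needs a covariance matrix. The volatility figures below are hypothetical.

```python
from typing import Dict


def inverse_vol_weights(vols: Dict[str, float]) -> Dict[str, float]:
    """Weights proportional to 1 / volatility, normalized to sum to 1."""
    if any(v <= 0 for v in vols.values()):
        raise ValueError("volatilities must be positive")
    inverse = {name: 1.0 / v for name, v in vols.items()}
    total = sum(inverse.values())
    return {name: x / total for name, x in inverse.items()}


# Hypothetical annualized volatilities for three indices.
vols = {"conservative": 0.50, "balanced": 0.75, "aggressive": 1.20}
weights = inverse_vol_weights(vols)
for name, w in weights.items():
    # weight x volatility is the same for every index: equal standalone risk
    print(f"{name}: weight {w:.0%}, weight x vol {w * vols[name]:.2f}")
```

With these inputs the high-volatility aggressive index receives the smallest dollar weight, which is how the approach keeps it from dominating portfolio risk.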

Portfolio allocation is the most important investment decision you'll make, far more impactful than individual token selection or market timing. Widely cited academic research attributes more than 90% of the variation in a portfolio's returns over time to asset allocation, with security selection and timing contributing only marginally.

Token Metrics provides world-class indices, but your allocation strategy determines whether you capture their full potential or undermine them through poor diversification. The frameworks presented here offer starting points—adapt them to your specific situation, risk tolerance, and financial goals.

Remember that optimal allocation isn't static—it evolves with life stages, market conditions, and financial circumstances. Regular review and adjustment keeps strategies aligned with current reality rather than outdated assumptions.

The investors who build lasting wealth aren't those who find magical assets or perfect timing—they're those who implement sound allocation strategies and maintain them through all market conditions. This discipline, more than any other factor, separates successful wealth builders from those whose portfolios underperform despite choosing quality investments.

Begin implementing strategic allocation today. Start with appropriate total crypto allocation for your life stage, distribute across conservative, balanced, and aggressive indices providing genuine diversification, and establish rebalancing discipline maintaining target exposures through market volatility.

Your allocation strategy, properly implemented, will compound into extraordinary wealth over decades. Token Metrics provides the tools—your allocation decisions determine the results.

Start your 7-day free trial today and begin building the optimally allocated portfolio that will drive your financial success for decades to come.