Top Crypto Trading Platforms in 2025

Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend and getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

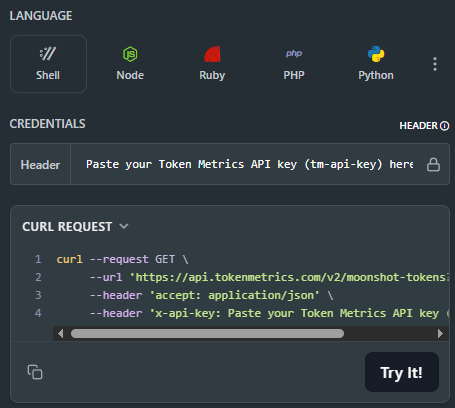

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.

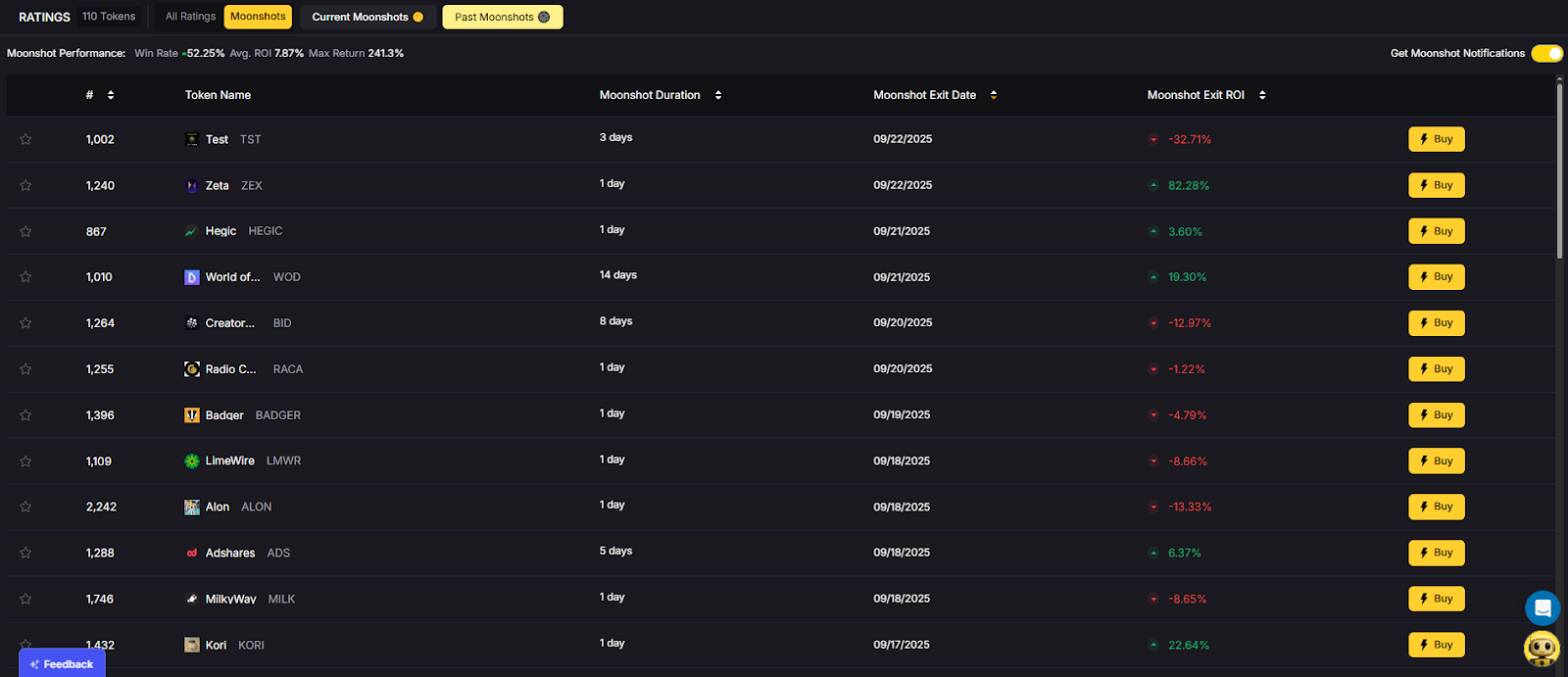

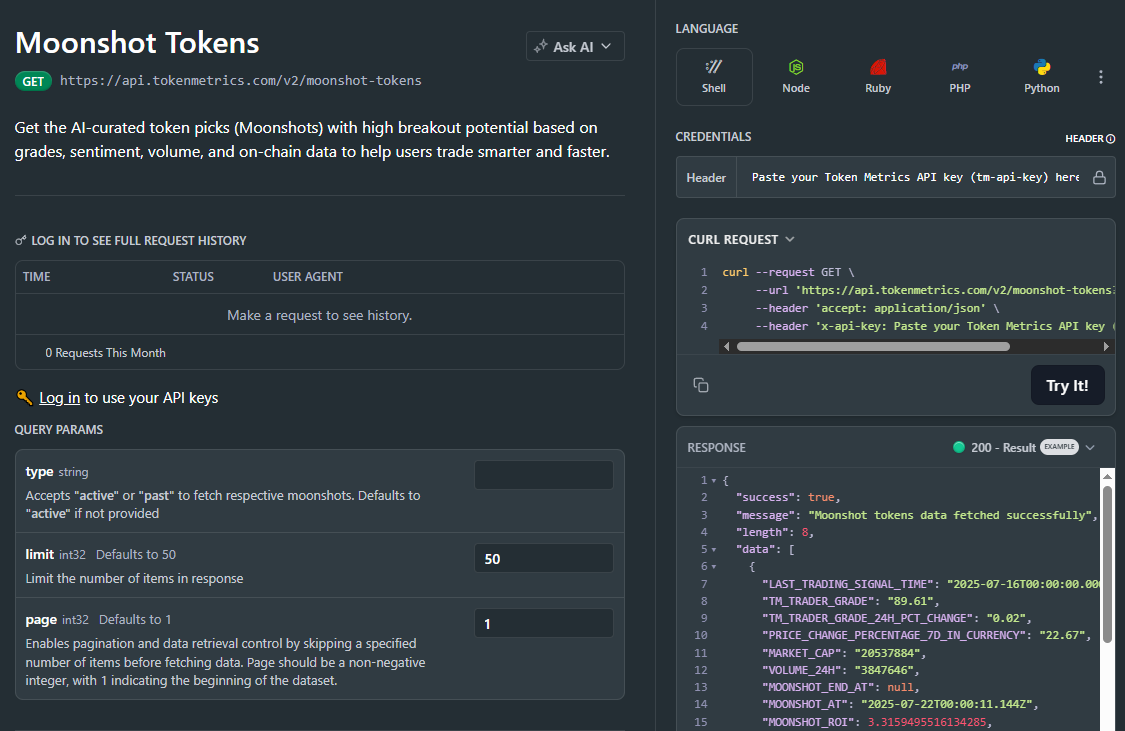

The biggest gains in crypto rarely come from the majors. They come from Moonshots—fast-moving tokens with breakout potential. The Moonshots API surfaces these candidates programmatically so you can rank, alert, and act inside your product. In this guide, you’ll call /v2/moonshots, display a high-signal list with TM Grade and Bullish tags, and wire it into bots, dashboards, or screeners in minutes. Start by grabbing your key at Get API Key, then Run Hello-TM and Clone a Template to ship fast.

Discovery that converts. Users want more than price tickers: they want a curated, explainable list of high-potential tokens. The Moonshots API condenses multiple signals into a short list designed for exploration, alerts, and watchlists you can monetize.

Built for builders. The endpoint returns a consistent schema with grade, signal, and context so you can immediately sort, badge, and trigger workflows. With predictable latency and clear filters, you can scale to dashboards, mobile apps, and headless bots without reinventing the discovery pipeline.

The Moonshots API cURL request is right there in the top right of the API Reference. Grab it and start tapping into the potential!

👉 Keep momentum: Get API Key • Run Hello-TM • Clone a Template

Fork a screener or alerting template, plug your key, and deploy. Validate your environment with Hello-TM. When you scale users or need higher limits, compare API plans.

The Moonshots endpoint aggregates a set of evidence—often combining TM Grade, signal state, and momentum/volume context—into a shortlist of breakout candidates. Each row includes a symbol, grade, signal, and timestamp, plus optional reason tags for transparency.
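As a minimal sketch of how such rows might be ranked before display, the snippet below filters and sorts an already-parsed list. The field names follow the ones named in this guide (symbol, tm_grade, signal, updated_at); the sample rows, the numeric grade scale, and the `shortlist` helper are assumptions for illustration, not the documented response shape.

```python
# Hypothetical sample rows: the exact response schema is an assumption.
SAMPLE_ROWS = [
    {"symbol": "AAA", "tm_grade": 91.0, "signal": "Bullish", "updated_at": "2025-01-01T00:00:00Z"},
    {"symbol": "BBB", "tm_grade": 78.5, "signal": "Bearish", "updated_at": "2025-01-01T00:00:00Z"},
    {"symbol": "CCC", "tm_grade": 84.2, "signal": "Bullish", "updated_at": "2025-01-01T00:00:00Z"},
]


def shortlist(rows, min_grade=80.0, signal="Bullish", limit=10):
    """Keep rows that match the signal and grade floor, highest grade first."""
    kept = [
        row for row in rows
        if row.get("signal") == signal and row.get("tm_grade", 0.0) >= min_grade
    ]
    kept.sort(key=lambda row: row["tm_grade"], reverse=True)
    return kept[:limit]


for row in shortlist(SAMPLE_ROWS):
    print(f'{row["symbol"]}: grade {row["tm_grade"]} ({row["signal"]})')
```

Sorting on the client keeps the display logic independent of whatever ordering the endpoint happens to return.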

For UX, a common pattern is: headline list → token detail where you render TM Grade (quality), Trading Signals (timing), Support/Resistance (risk placement), Quantmetrics (risk-adjusted performance), and Price Prediction scenarios. This lets users understand why a token was flagged and how to act with risk controls.

Polling vs webhooks. Dashboards typically poll with short-TTL caching. Alerting flows use scheduled jobs or webhooks (where available) to smooth traffic and avoid duplicates. Always make notifications idempotent.
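One way to make notifications idempotent is to derive a deterministic key from the fields that define an event and skip anything already sent. The sketch below is an illustration under assumptions: the `Notifier` class and the key fields are hypothetical, and the in-memory set stands in for whatever persistent store a real deployment would use.

```python
import hashlib


def notification_key(symbol, signal, updated_at):
    """Deterministic key: the same event always hashes to the same value."""
    raw = f"{symbol}|{signal}|{updated_at}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class Notifier:
    """Hypothetical notifier that drops duplicate events."""

    def __init__(self):
        self.sent_keys = set()  # assumption: replace with a durable store in production
        self.outbox = []        # stands in for email, push, or chat delivery

    def notify(self, symbol, signal, updated_at):
        key = notification_key(symbol, signal, updated_at)
        if key in self.sent_keys:
            return False  # already delivered, so a retry or re-poll is harmless
        self.sent_keys.add(key)
        self.outbox.append(f"{symbol} flagged {signal} at {updated_at}")
        return True
```

With this shape, a job that runs twice over the same rows produces one message per event rather than two.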

1) What does the Moonshots API return?

A list of breakout candidates with fields such as symbol, tm_grade, signal (often Bullish/Bearish), optional reason tags, and updated_at. Use it to drive discovery tabs, alerts, and watchlists.

2) How fresh is the list? What about latency/SLOs?

The endpoint targets predictable latency and timely updates for dashboards and alerts. Use short-TTL caching and queued jobs/webhooks to avoid bursty polling.

3) How do I use Moonshots in a trading workflow?

Common stack: Moonshots for discovery, Trading Signals for timing, Support/Resistance for SL/TP, Quantmetrics for sizing, and Price Prediction for scenario context. Always backtest and paper-trade first.

4) I saw results like “+241%” and a “7.5% average return.” Are these guaranteed?

No. Any historical results are illustrative and not guarantees of future performance. Markets are risky; use risk management and testing.

5) Can I filter the Moonshots list?

Yes—pass parameters like min_grade, signal, and limit (as supported) to tailor to your audience and keep pages fast.
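A small sketch of building such a filtered request URL with the standard library follows. The `/v2/moonshots` path and the parameter names come from this guide; the base URL is a placeholder and the `moonshots_url` helper is hypothetical, so check the API Reference for the real host, authentication header, and supported parameters.

```python
from urllib.parse import urlencode

# Placeholder host: an assumption, not the real API base URL.
BASE_URL = "https://api.example.com"


def moonshots_url(min_grade=None, signal=None, limit=None):
    """Build the request URL, leaving out any filter that was not set."""
    params = {"min_grade": min_grade, "signal": signal, "limit": limit}
    query = urlencode({k: v for k, v in params.items() if v is not None})
    path = "/v2/moonshots"
    return f"{BASE_URL}{path}?{query}" if query else f"{BASE_URL}{path}"


print(moonshots_url(min_grade=80, signal="Bullish", limit=20))
```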

6) Do you provide SDKs or examples?

REST works with JavaScript and Python snippets above. Docs include quickstarts, Postman collections, and templates—start with Run Hello-TM.

7) Pricing, limits, and enterprise SLAs?

Begin free and scale up. See API plans for rate limits and enterprise options.

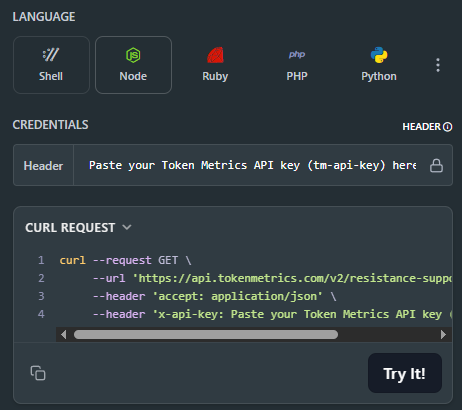

Most traders still draw lines by hand in TradingView. The support and resistance API from Token Metrics auto-calculates clean support and resistance levels from one request, so your dashboard, bot, or alerts can react instantly. In minutes, you’ll call /v2/resistance-support, render actionable levels for any token, and wire them into stops, targets, or notifications. Start by grabbing your key on Get API Key, then Run Hello-TM and Clone a Template to ship a production-ready feature fast.

Precision beats guesswork. Hand-drawn lines are subjective and slow. The support and resistance API standardizes levels across assets and timeframes, enabling deterministic stops and take-profits your users (and bots) can trust.

Production-ready by design. A simple REST shape, predictable latency, and clear semantics let you add levels to token pages, automate SL/TP alerts, and build rule-based execution with minimal glue code.

Need the Support and Resistance data? The cURL request for it is in the top right of the API Reference for quick access.

Kick off with our quickstarts: fork a bot or dashboard template, plug in your key, and deploy. Confirm your environment by running Hello-TM. When you’re scaling or need webhooks or higher limits, review API plans.

The Support/Resistance endpoint analyzes recent price structure to produce discrete levels above and below current price, along with strength indicators you can use for priority and styling. Query /v2/resistance-support?symbol=<ASSET>&timeframe=<HORIZON> to receive arrays of level objects and timestamps.
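To show how level arrays like these could feed a stop or target, the sketch below picks the closest support below and the closest resistance above the current price. The level objects with `price` and `strength` keys are an assumed shape based on the description above, and `nearest_levels` is a hypothetical helper.

```python
def nearest_levels(price, supports, resistances):
    """Return (closest support below price, closest resistance above price).

    Either element is None when no level exists on that side.
    """
    below = [lvl for lvl in supports if lvl["price"] < price]
    above = [lvl for lvl in resistances if lvl["price"] > price]
    support = max(below, key=lambda lvl: lvl["price"], default=None)
    resistance = min(above, key=lambda lvl: lvl["price"], default=None)
    return support, resistance


# Assumed payload shape: a list of level objects for each side.
supports = [{"price": 95.0, "strength": 0.6}, {"price": 90.0, "strength": 0.9}]
resistances = [{"price": 110.0, "strength": 0.7}, {"price": 105.0, "strength": 0.4}]

support, resistance = nearest_levels(100.0, supports, resistances)
print(support["price"], resistance["price"])
```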

Polling vs webhooks. For dashboards, short-TTL caching and batched fetches keep pages snappy. For bots and alerts, use queued jobs or webhooks (where applicable) to avoid noisy, bursty polling—especially around market opens and major events.
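Short-TTL caching can be as simple as remembering when each response was stored. The class below is a minimal in-memory sketch, not a production cache; the `TTLCache` name, the 30-second TTL in the usage note, and the `fetch` callback are all assumptions for illustration.

```python
import time


class TTLCache:
    """Tiny in-memory cache; an entry expires ttl_seconds after it is stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock   # injectable so the cache can be tested without sleeping
        self._entries = {}   # key -> (stored_at, value)

    def get_or_fetch(self, key, fetch):
        """Return the cached value for key, calling fetch() only when it is stale."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]
        value = fetch()  # e.g. one request for this symbol and timeframe
        self._entries[key] = (now, value)
        return value
```

A dashboard might key the cache on `(symbol, timeframe)` with a TTL of around 30 seconds, so many page views within that window share one upstream request.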

1) What does the Support & Resistance API return?

A JSON payload with arrays of support and resistance levels for a symbol (and optional timeframe), each with a price and strength indicator, plus an update timestamp.

2) How timely are the levels? What are the latency/SLOs?

The endpoint targets predictable latency suitable for dashboards and alerts. Use short-TTL caching for UIs, and queued jobs or webhooks for alerting to smooth traffic.

3) How do I trigger alerts or trades from levels?

Common patterns: alert when price is within X% of a level, touches a level, or breaks beyond with confirmation. Always make downstream actions idempotent and respect rate limits.
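The proximity and confirmed-break patterns can be sketched as two small predicates. The function names, the 1% default, and the "last N closes" confirmation rule are assumptions chosen for illustration; tune them to your own strategy and data.

```python
def is_near(price, level_price, proximity_pct=1.0):
    """True when price is within proximity_pct percent of the level."""
    distance_pct = abs(price - level_price) / level_price * 100.0
    return distance_pct <= proximity_pct


def confirmed_break(closes, level_price, confirmations=2, kind="resistance"):
    """True when the last `confirmations` closes are all beyond the level.

    kind="resistance" checks for closes above the level,
    kind="support" checks for closes below it.
    """
    if len(closes) < confirmations:
        return False
    recent = closes[-confirmations:]
    if kind == "resistance":
        return all(close > level_price for close in recent)
    return all(close < level_price for close in recent)
```

Requiring more than one close beyond the level is one simple form of confirmation; it trades slower alerts for fewer false breakouts.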

4) Can I combine levels with other endpoints?

Yes—pair with /v2/trading-signals for timing, /v2/tm-grade for quality context, and /v2/quantmetrics for risk sizing. This yields a complete decide-plan-execute loop.

5) Which timeframe should I use?

Intraday bots prefer shorter horizons; swing/position dashboards use daily or higher-timeframe levels. Offer a timeframe toggle and cache results per setting.

6) Do you provide SDKs or examples?

Use the REST snippets above (JS/Python). The docs include quickstarts, Postman collections, and templates—start with Run Hello-TM.

7) Pricing, limits, and enterprise SLAs?

Begin free and scale as you grow. See API plans for rate limits and enterprise SLA options.

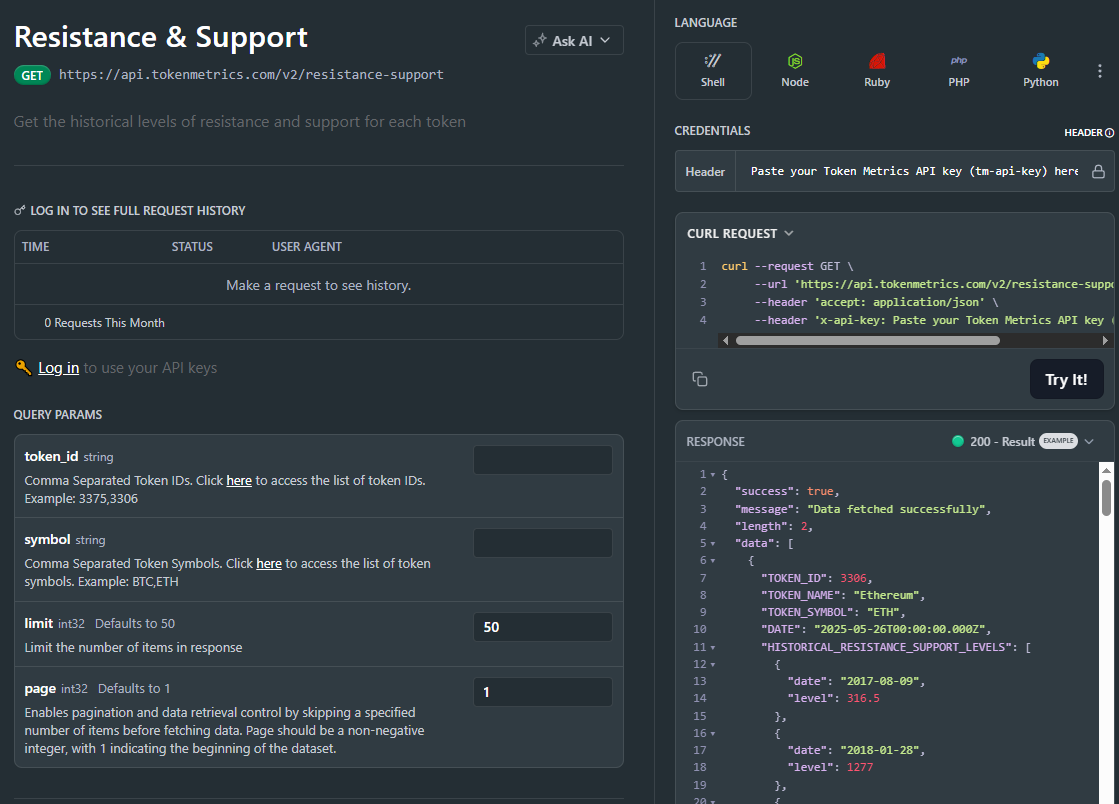

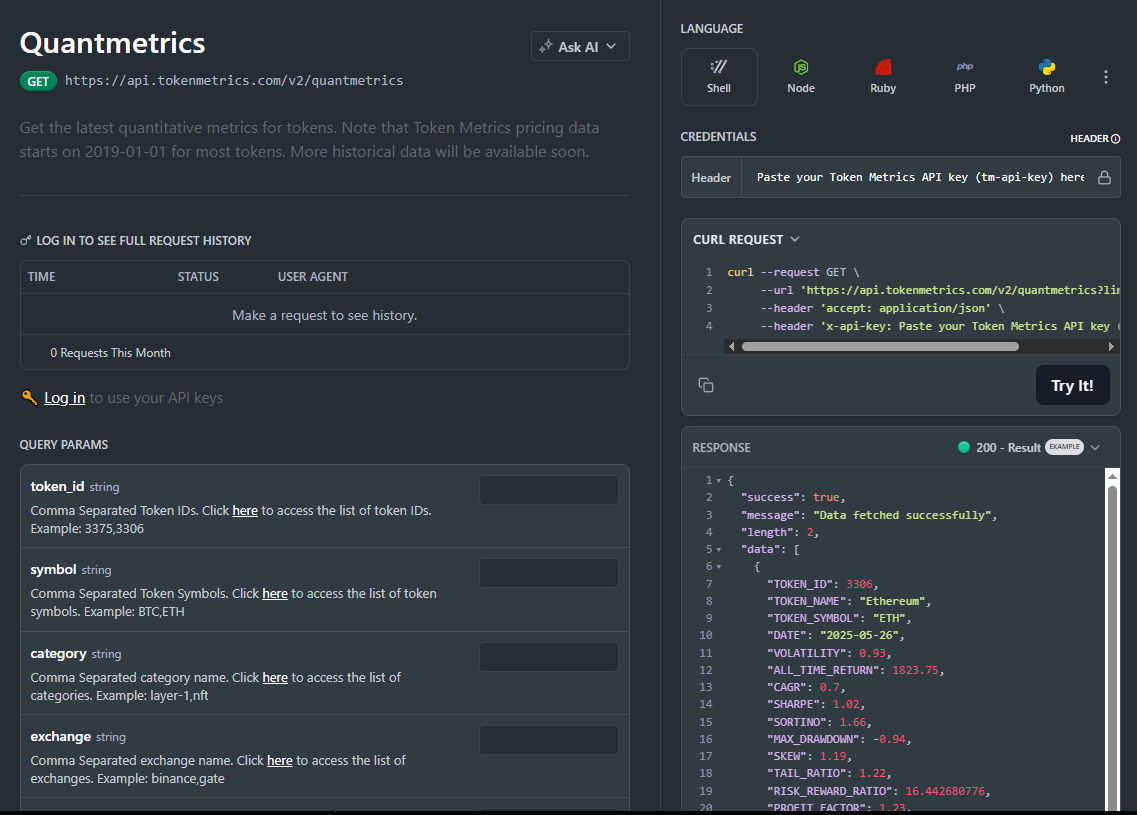

Most traders see price—quants see probabilities. The Quantmetrics API turns raw performance into risk-adjusted stats like Sharpe, Sortino, volatility, drawdown, and CAGR so you can compare tokens objectively and build smarter bots and dashboards. In minutes, you’ll query /v2/quantmetrics, render a clear performance snapshot, and ship a feature that customers trust. Start by grabbing your key at Get API Key, Run Hello-TM to verify your first call, then Clone a Template to go live fast.

Risk-adjusted truth beats hype. Price alone hides tail risk and whipsaws. Quantmetrics compresses edge, risk, and consistency into metrics that travel across assets and timeframes—so you can rank universes, size positions, and communicate performance like a pro.

Built for dev speed. A clean REST schema, predictable latency, and easy auth mean you can plug Sharpe/Sortino into bots, dashboards, and screeners without maintaining your own analytics pipeline. Pair with caching and batching to serve fast pages at scale.

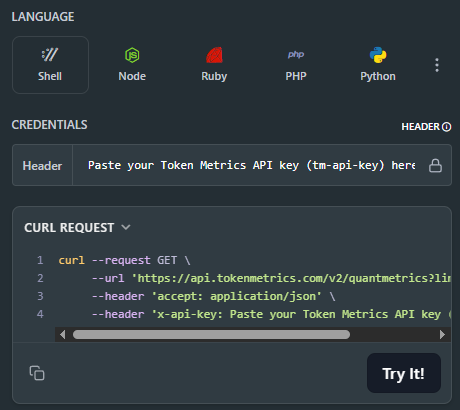

The Quantmetrics cURL request is located in the top right of the API Reference, so you can copy it straight into your application.

Kick off from quickstarts in the docs—fork a dashboard or screener template, plug your key, and deploy in minutes. Validate your environment with Run Hello-TM; when you need more throughput or webhooks, compare API plans.

Quantmetrics computes risk-adjusted performance over a chosen lookback (e.g., 30d, 90d, 1y). You’ll receive a JSON snapshot with core statistics such as Sharpe, Sortino, volatility, max drawdown, and CAGR.

Call /v2/quantmetrics?symbol=<ASSET>&window=<LOOKBACK> to fetch the current snapshot. For dashboards spanning many tokens, batch symbols and apply short-TTL caching. If you generate alerts (e.g., “Sharpe crossed 1.5”), run a scheduled job and queue notifications to avoid bursty polling.
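For intuition about what these statistics measure, the sketch below computes textbook versions of them from a series of periodic returns. It is not a description of how Token Metrics calculates its values: the zero risk-free rate, the 365 periods per year, and the function names are all assumptions.

```python
import math
import statistics


def annualized_volatility(returns, periods_per_year=365):
    """Sample standard deviation of returns, scaled to a yearly figure."""
    return statistics.stdev(returns) * math.sqrt(periods_per_year)


def sharpe(returns, periods_per_year=365):
    """Mean return over its standard deviation (risk-free rate assumed 0)."""
    return statistics.mean(returns) / statistics.stdev(returns) * math.sqrt(periods_per_year)


def sortino(returns, periods_per_year=365):
    """Like Sharpe, but only negative returns count as risk."""
    downside = math.sqrt(sum(min(r, 0.0) ** 2 for r in returns) / len(returns))
    if downside == 0.0:
        return math.inf  # no losing periods in the sample
    return statistics.mean(returns) / downside * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Worst peak-to-trough decline of the compounded equity curve (a negative number)."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1.0)
    return worst
```

Sortino is usually higher than Sharpe for the same series when most of the volatility comes from gains, which is why the two are often shown side by side.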

1) What does the Quantmetrics API return?

A JSON snapshot of risk-adjusted metrics (e.g., Sharpe, Sortino, volatility, max drawdown, CAGR) for a symbol and lookback window—ideal for ranking, sizing, and dashboards.

2) How fresh are the stats? What about latency/SLOs?

Responses are engineered for predictable latency. For heavy UI usage, add short-TTL caching and batch requests; for alerts, use scheduled jobs or webhooks where available.

3) Can I use Quantmetrics to size positions in a live bot?

Yes—many quants size inversely to volatility or require Sharpe ≥ X to trade. Always backtest and paper-trade before going live; past results are illustrative, not guarantees.
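A sizing rule of that kind might look like the sketch below: drop anything under a Sharpe floor, then weight the rest by the inverse of volatility. The function name, the default floor of 1.0, and the input dictionaries are assumptions for illustration, not a recommended strategy.

```python
def inverse_vol_weights(vols, sharpes, min_sharpe=1.0):
    """Weight each eligible symbol by 1/volatility, normalized to sum to 1.

    vols and sharpes map symbol -> metric value. Symbols below min_sharpe,
    missing a Sharpe value, or with non-positive volatility are excluded.
    """
    eligible = {
        symbol: vol
        for symbol, vol in vols.items()
        if vol > 0 and sharpes.get(symbol, float("-inf")) >= min_sharpe
    }
    if not eligible:
        return {}
    total = sum(1.0 / vol for vol in eligible.values())
    return {symbol: (1.0 / vol) / total for symbol, vol in eligible.items()}


weights = inverse_vol_weights(
    vols={"AAA": 0.5, "BBB": 1.0, "CCC": 0.25},
    sharpes={"AAA": 1.2, "BBB": 1.5, "CCC": 0.4},
)
print(weights)
```

In this example CCC is excluded by the Sharpe floor, and AAA receives twice the weight of BBB because it is half as volatile.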

4) Which lookback window should I choose?

Short windows (30–90d) adapt faster but are noisier; longer windows (6–12m) are steadier but slower to react. Offer users a toggle and cache each window.

5) Do you provide SDKs or examples?

REST is straightforward (JS/Python above). Docs include quickstarts, Postman collections, and templates—start with Run Hello-TM.

6) Polling vs webhooks for quant alerts?

Dashboards usually use cached polling. For threshold alerts (e.g., Sharpe crosses 1.0), run scheduled jobs and queue notifications to keep usage smooth and idempotent.
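A threshold alert should fire on the crossing itself rather than on every reading above the line. The sketch below keeps the previous value per symbol and reports only upward crossings; the `ThresholdWatcher` class is hypothetical, and the in-memory dictionary stands in for state a scheduled job would persist between runs.

```python
class ThresholdWatcher:
    """Report True only when a value moves from below the threshold to at or above it."""

    def __init__(self, threshold):
        self.threshold = threshold
        self._last = {}  # symbol -> previous value (assumption: persist this between runs)

    def check(self, symbol, value):
        previous = self._last.get(symbol)
        self._last[symbol] = value
        # The first observation never fires: there is nothing to cross from.
        return previous is not None and previous < self.threshold <= value


watcher = ThresholdWatcher(threshold=1.0)
for reading in [0.8, 1.1, 1.3, 0.9, 1.0]:
    print(reading, watcher.check("AAA", reading))
```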

7) Pricing, limits, and enterprise SLAs?

Begin free and scale up. See API plans for rate limits and enterprise SLA options.

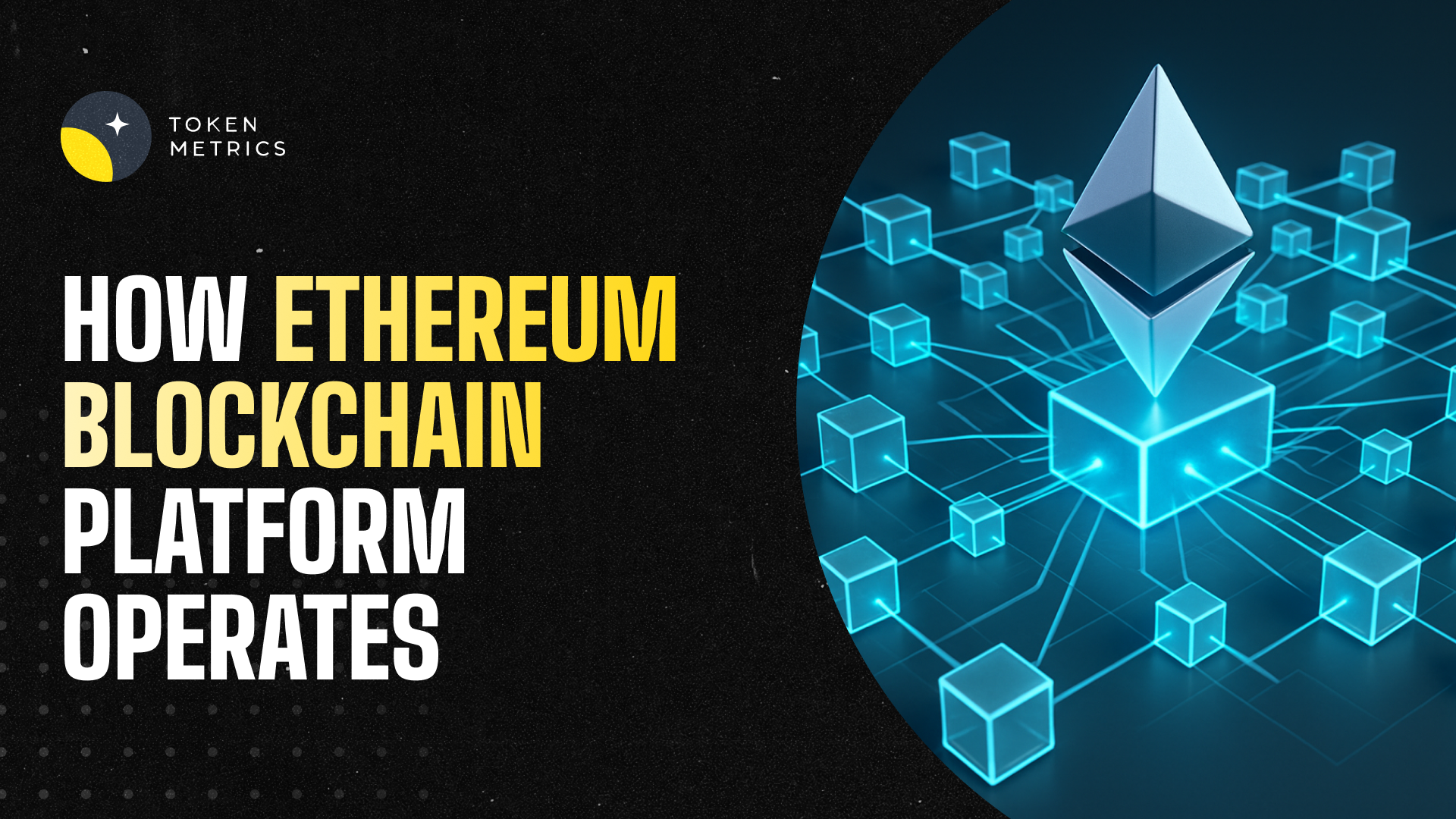

Ethereum is one of the most influential blockchain platforms developed since Bitcoin. It extends the concept of a decentralized ledger by integrating a programmable layer that enables developers to build decentralized applications (dApps) and smart contracts. This blog post explores how Ethereum operates technically and functionally without delving into investment aspects.

At its core, Ethereum operates as a distributed ledger technology—an immutable blockchain maintained by a decentralized network of nodes. These nodes collectively maintain and validate the Ethereum blockchain, which records every transaction and smart contract execution.

The Ethereum blockchain differs from Bitcoin primarily through its enhanced programmability and faster block times. Under Proof of Stake, Ethereum targets a new block every 12 seconds, compared with roughly 10 minutes for Bitcoin, which allows for quicker confirmation of transactions and execution of contracts.

A fundamental innovation introduced by Ethereum is the smart contract. Smart contracts are self-executing pieces of code stored on the blockchain, triggered automatically when predefined conditions are met.

The Ethereum Virtual Machine (EVM) is the runtime environment for smart contracts. It interprets the contract code and operates across all Ethereum nodes to ensure consistent execution. This uniformity enforces the trustless and decentralized nature of applications built on Ethereum.

Originally, Ethereum used a Proof of Work (PoW) consensus mechanism similar to Bitcoin, requiring miners to solve complex cryptographic puzzles to confirm transactions and add new blocks. Ethereum has since transitioned to Proof of Stake (PoS) through an upgrade known as the Merge, completed in September 2022; the broader roadmap was earlier referred to as Ethereum 2.0.

In the PoS model, validators are chosen to propose and validate blocks based on the amount of cryptocurrency they stake as collateral. This method greatly reduces energy consumption, and it ties network security to staked ETH, which can be penalized if a validator misbehaves, rather than to computing power.

Executing transactions and running smart contracts on Ethereum requires computational resources. These are measured in units called gas. Users pay gas fees, denominated in Ether (ETH), for processing and recording their transactions. Since the London upgrade (EIP-1559), each fee consists of a base fee that is burned and a priority fee (tip) that goes to the validator.

The gas fee varies depending on network demand and the complexity of the operation. Simple transactions require less gas, while complex contracts or high congestion periods incur higher fees. Gas mechanics incentivize efficient code and prevent spam on the network.
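The arithmetic behind a fee is straightforward: gas used multiplied by the price paid per unit of gas. The sketch below assumes the EIP-1559 fee model, in which the per-gas price is a base fee plus a priority fee quoted in gwei (one billionth of an ETH). The 21,000 figure is the gas cost of a plain ETH transfer; the gwei prices in the example are made-up inputs.

```python
GWEI_PER_ETH = 10**9          # 1 ETH = 1,000,000,000 gwei
SIMPLE_TRANSFER_GAS = 21_000  # gas used by a plain ETH transfer


def fee_in_eth(gas_used, base_fee_gwei, priority_fee_gwei):
    """Total fee in ETH: gas used times (base fee + priority fee)."""
    total_gwei = gas_used * (base_fee_gwei + priority_fee_gwei)
    return total_gwei / GWEI_PER_ETH


# Illustrative prices only: 20 gwei base fee, 2 gwei tip.
print(fee_in_eth(SIMPLE_TRANSFER_GAS, 20, 2))
```

A contract call that uses ten times the gas costs ten times as much at the same prices, which is why efficient contract code matters.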

Ethereum’s decentralization is maintained by nodes located worldwide. These nodes can be categorized as full nodes, which validate all blocks and transactions, and light nodes, which keep only block headers and request other data on demand.

Anyone can run a node, contributing to Ethereum’s resilience and censorship resistance. Validators in PoS must stake Ether to participate in block validation, ensuring alignment of incentives for network security.

Decentralized applications (dApps) are built on Ethereum’s infrastructure. These dApps span various sectors, including decentralized finance (DeFi), supply chain management, gaming, and digital identity. The open-source nature of Ethereum encourages innovation and interoperability across platforms.

Understanding Ethereum’s intricate network requires access to comprehensive data and analytical tools. AI-driven platforms, such as Token Metrics, utilize machine learning to evaluate on-chain data, developer activity, and market indicators to provide in-depth insights.

Such platforms support researchers and users by offering data-backed analysis, helping to comprehend Ethereum’s evolving technical landscape and ecosystem without bias or financial recommendations.

Ethereum revolutionizes blockchain technology by enabling programmable, trustless applications through smart contracts and a decentralized network. Transitioning to Proof of Stake enhances its scalability and sustainability. Understanding its mechanisms—from the EVM to gas fees and network nodes—provides critical perspectives on its operation.

For those interested in detailed Ethereum data and ratings, tools like Token Metrics offer analytical resources driven by AI and machine learning to keep pace with Ethereum’s dynamic ecosystem.

This content is for educational and informational purposes only. It does not constitute financial, investment, or trading advice. Readers should conduct independent research and consult professionals before making decisions related to cryptocurrencies or blockchain technologies.

Ethereum mining has been an essential part of the Ethereum blockchain network, enabling transaction validation and new token issuance under a Proof-of-Work (PoW) consensus mechanism. As Ethereum evolves, understanding the fundamentals of mining, the required technology, and operational aspects can provide valuable insights into this cornerstone process. This guide explains the key components of Ethereum mining, focusing on technical and educational details without promotional or financial advice.

Ethereum mining involves validating transactions and securing the network by solving complex mathematical problems using computational resources. Miners employ high-performance hardware to perform hashing calculations and compete to add new blocks to the blockchain. Successfully mined blocks reward miners with Ether (ETH) generated through block rewards and transaction fees.

At its core, Ethereum mining requires:

GPU-based mining rigs were the standard hardware for Ethereum mining under Proof of Work because of their efficiency in processing the Ethash algorithm. Ethash is memory-intensive, which suits Graphics Processing Units (GPUs) and limits the advantage of ASICs (Application-Specific Integrated Circuits), which dominate mining on other cryptocurrencies.

Key considerations when selecting GPUs include:

Popular GPUs such as the Nvidia RTX and AMD RX series often top mining performance benchmarks. However, hardware availability and electricity costs significantly impact operational efficiency.

Once mining hardware is selected, the next step involves configuring mining software suited for Ethereum. Mining software translates computational tasks into actionable processes executed by the hardware while connecting to the Ethereum network or mining pools.

Common mining software options include:

When configuring mining software, consider settings related to:

Mining Ethereum independently can be challenging due to increasing network difficulty and competition. Mining pools provide cooperative frameworks where multiple miners combine computational power to improve chances of mining a block. Rewards are then distributed proportionally according to contributed hash power.
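The proportional split can be illustrated with a few lines of arithmetic. This is a simplified sketch: real pools use share-based accounting schemes and their own fee rules, and the `split_reward` helper, the fee parameter, and the sample figures are assumptions.

```python
def split_reward(block_reward, hashrates, pool_fee_pct=1.0):
    """Split a block reward among miners in proportion to contributed hashrate.

    hashrates maps miner -> contributed hash power (any consistent unit).
    pool_fee_pct is deducted from the reward before the split.
    """
    net_reward = block_reward * (1.0 - pool_fee_pct / 100.0)
    total_hashrate = sum(hashrates.values())
    return {
        miner: net_reward * rate / total_hashrate
        for miner, rate in hashrates.items()
    }


# Two miners contributing 60 and 40 MH/s share a 2.0 ETH reward, no fee.
print(split_reward(2.0, {"alice": 60.0, "bob": 40.0}, pool_fee_pct=0.0))
```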

Benefits of mining pools include:

Widely used mining pools for Ethereum included Ethermine, SparkPool, and Nanopool. When selecting a mining pool, evaluate factors such as fees, payout methods, server locations, and minimum payout thresholds.

Mining Ethereum incurs ongoing costs, primarily electricity consumption and hardware maintenance. Efficiency optimization entails balancing power consumption with mining output to ensure sustainable operations.

Key factors to consider include:

Understanding the power consumption (wattage) of a mining rig relative to its hashrate is the basis for judging energy efficiency. For example, a rig producing 60 MH/s while drawing 1200 watts delivers 0.05 MH/s per watt (20 watts per MH/s); a rig with the same hashrate at 600 watts would be twice as efficient.
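The efficiency comparison reduces to a couple of divisions. The sketch below works through the 60 MH/s, 1200 watt example; the electricity price is a made-up input, and the helper names are hypothetical.

```python
def efficiency_mh_per_watt(hashrate_mh, watts):
    """Hashrate delivered per watt of power drawn."""
    return hashrate_mh / watts


def daily_energy_kwh(watts):
    """Energy used over 24 hours of continuous operation, in kWh."""
    return watts * 24 / 1000


def daily_power_cost(watts, price_per_kwh):
    """Electricity cost per day at the given price per kWh."""
    return daily_energy_kwh(watts) * price_per_kwh


print(efficiency_mh_per_watt(60, 1200))  # MH/s per watt
print(daily_energy_kwh(1200))            # kWh per day
print(daily_power_cost(1200, 0.10))      # cost per day at an assumed 0.10 per kWh
```

Running the rig around the clock uses 28.8 kWh per day, so the electricity price largely determines whether a given efficiency is workable.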

Efficient mining operations benefit from monitoring tools that track hardware performance, network status, and market dynamics. Analytical platforms offer data-backed insights that can guide equipment upgrades, pool selection, and operational adjustments.

Artificial intelligence-driven research platforms like Token Metrics provide quantitative analysis of Ethereum network trends and mining considerations. Leveraging such tools can optimize decision-making by integrating technical data with market analytics without endorsing specific investment choices.

Ethereum’s transition from Proof-of-Work to Proof-of-Stake (PoS), long referred to as Ethereum 2.0 and completed with the Merge in September 2022, is the development with the greatest impact on mining practices. PoS replaced mining with staking, so Ether can no longer be mined on the Ethereum mainnet; the mechanics described here remain useful as background and for other GPU-mined Proof-of-Work networks.

Miners should remain informed about network upgrades and consensus changes through official channels and reliable analysis platforms like Token Metrics. Understanding potential impacts enables strategic planning related to hardware usage and participation in alternative blockchain activities.

This article is intended for educational purposes only. It does not offer investment advice, price predictions, or endorsements. Readers should conduct thorough individual research and consider multiple reputable sources before engaging in Ethereum mining or related activities.

The digital landscape is continually evolving, giving rise to a new paradigm known as Web 3. This iteration promises a shift towards decentralization, enhanced user control, and a more immersive internet experience. But what exactly is Web 3, and why is it considered a transformative phase of the internet? This article explores its fundamentals, technology, potential applications, and the tools available to understand this complex ecosystem.

Web 3, often referred to as the decentralized web, represents the next generation of internet technology that aims to move away from centralized platforms dominated by a few major organizations. Instead of relying on centralized servers, Web 3 utilizes blockchain technology and peer-to-peer networks to empower users and enable trustless interactions.

In essence, Web 3 decentralizes data ownership and governance, allowing users to control their information and digital assets without intermediaries. This marks a significant departure from Web 2.0, where data is predominantly managed by centralized corporations.

Several emerging technologies underpin the Web 3 movement, each playing a vital role in achieving its vision:

Together, these technologies provide a robust foundation for a more autonomous and transparent internet landscape.

Understanding Web 3 requires comparing it to its predecessor, Web 2:

This shift fosters a more user-centric, permissionless, and transparent internet experience.

Web 3's decentralized infrastructure unlocks numerous application possibilities across industries:

As Web 3 matures, the range of practical and innovative use cases is expected to expand further.

Despite its promise, Web 3 faces several hurdles that need attention:

Addressing these challenges is crucial for realizing the full potential of Web 3.

For individuals and organizations interested in understanding Web 3 developments, adopting a structured research approach is beneficial:

This approach supports informed analysis based on technology fundamentals rather than speculation.

Artificial intelligence technologies complement Web 3 by enhancing research and analytical capabilities. AI-driven platforms can process vast amounts of blockchain data to identify patterns, assess project fundamentals, and forecast potential developments.

For example, Token Metrics integrates AI methodologies to provide insightful ratings and reports on various Web 3 projects and tokens. Such tools facilitate more comprehensive understanding for users navigating decentralized ecosystems.

Web 3 embodies a transformative vision for the internet—one that emphasizes decentralization, user empowerment, and innovative applications across multiple sectors. While challenges remain, its foundational technologies like blockchain and smart contracts hold substantial promise for reshaping digital interactions.

Continuing research and utilization of advanced analytical tools like Token Metrics can help individuals and organizations grasp Web 3’s evolving landscape with clarity and rigor.

This article is for educational and informational purposes only and does not constitute financial, investment, or legal advice. Readers should conduct their own research and consult with professional advisors before making any decisions related to Web 3 technologies or digital assets.

The explosion of interest in non-fungible tokens (NFTs) has opened new opportunities for creators and collectors alike. If you've ever wondered, "How can I mint my own NFT?", this guide will walk you through the essential concepts, processes, and tools involved in creating your unique digital asset on the blockchain.

Minting an NFT refers to the process of turning a digital file (such as artwork, music, video, or another digital collectible) into a unique token recorded on a blockchain. This tokenization records the provenance and ownership of the token in a verifiable manner. Unlike cryptocurrencies, where every unit is interchangeable, each NFT is unique and is not interchangeable one-for-one with another.

Several blockchains support NFT minting, each with distinct features, costs, and communities. The most popular blockchain for NFTs has been Ethereum due to its widespread adoption and support for the ERC-721 and ERC-1155 token standards. However, alternatives such as BNB Smart Chain (formerly Binance Smart Chain), Solana, Polygon, and Tezos offer different advantages, such as lower transaction fees or faster processing times.

When deciding where to mint your NFT, consider factors like network fees (also known as gas fees), environmental impact, and marketplace support. Analytical tools, including Token Metrics, can offer insights into blockchain performance and trends, helping you make an informed technical decision.

Once you have chosen a blockchain, the next step is to select an NFT platform that facilitates minting and listing your digital asset. Popular NFT marketplaces such as OpenSea, Rarible, Foundation, and Mintable provide user-friendly interfaces to upload digital files, set metadata, and mint tokens.
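Behind the upload form, the metadata a marketplace sets is typically a small JSON document that the token points to. The sketch below builds one with the three fields defined in the ERC-721 metadata JSON schema (name, description, image). The `build_metadata` helper and the sample values are hypothetical, the image URI is a placeholder, and individual platforms may expect additional fields.

```python
import json


def build_metadata(name, description, image_uri):
    """Return a metadata dict with the ERC-721 metadata schema fields."""
    if not name or not image_uri:
        raise ValueError("name and image_uri are required")
    return {"name": name, "description": description, "image": image_uri}


metadata = build_metadata(
    name="Example Artwork #1",
    description="An illustrative token description.",
    image_uri="ipfs://<CID>",  # placeholder: substitute the real content address
)
print(json.dumps(metadata, indent=2))
```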

Some platforms have specific entry requirements, such as invitation-only access or curation processes, while others are open to all creators. Consider the platform's user base, fees, minting options (e.g., lazy minting or direct minting), and supported blockchains before proceeding.

Minting an NFT typically involves transaction fees known as gas fees, which vary based on blockchain network congestion and platform policies. Costs can fluctuate significantly; therefore, it's prudent to monitor fee trends, potentially using analytical resources like Token Metrics to gain visibility into network conditions.

Some NFT platforms offer "lazy minting," allowing creators to mint NFTs with zero upfront fees, with costs incurred only upon sale. Understanding these financial mechanics is crucial to planning your minting process efficiently.

The intersection of artificial intelligence and blockchain has produced innovative tools that assist creators and collectors throughout the NFT lifecycle. AI can generate creative artwork, optimize metadata, and analyze market trends to inform decisions.

Research platforms such as Token Metrics utilize AI-driven methodologies to provide data insights and ratings that support neutral, analytical understanding of blockchain assets, including aspects relevant to NFTs. Employing such tools can help you better understand the technical fundamentals behind NFT platforms and ecosystems.

Minting your own NFT involves understanding the technical process of creating a unique token on a blockchain, choosing appropriate platforms, managing costs, and utilizing supporting tools. While the process is accessible to many, gaining analytical insights and leveraging AI-driven research platforms such as Token Metrics can deepen your understanding of underlying technologies and market dynamics.

This article is for educational purposes only and does not constitute financial or investment advice. Always conduct your own research and consult professionals before engaging in digital asset creation or transactions.

Centralized cryptocurrency exchanges have become the primary venues for trading a wide array of digital assets. Their user-friendly interfaces and liquidity pools make them appealing for both new and experienced traders. However, the inherent risks of using such centralized platforms warrant careful consideration. This article explores the risks associated with centralized exchanges, offering an analytical overview while highlighting valuable tools that can assist users in evaluating these risks.

Centralized exchanges (CEXs) operate as intermediaries that facilitate buying, selling, and trading cryptocurrencies. Users deposit funds into the exchange's custody and execute trades on its platform. Unlike decentralized exchanges, where users maintain control of their private keys and assets, centralized exchanges hold users' assets on their behalf, which introduces specific vulnerabilities and considerations.

One of the primary risks associated with centralized exchanges is security vulnerability. Holding large sums of digital assets in a single entity makes exchanges prominent targets for hackers. Over the years, numerous high-profile breaches have resulted in the loss of millions of dollars worth of crypto assets. These attacks often exploit software vulnerabilities, insider threats, or phishing campaigns.

Beyond external hacking attempts, users must be aware of the risks posed by potential internal malfeasance within these organizations. Since exchanges control private keys to user assets, trust in their operational security and governance practices is critical.

Using centralized exchanges means users relinquish direct control over their private keys. This custodial arrangement introduces counterparty risk, fundamentally differing from holding assets in self-custody wallets. In situations of insolvency, regulatory intervention, or technical failures, users may face difficulties accessing or retrieving their funds.

Additionally, the lack of comprehensive insurance coverage on many platforms means users bear the brunt of potential losses. The concept "not your keys, not your coins" encapsulates this risk, emphasizing that asset ownership and control are distinct on centralized platforms.

Centralized exchanges typically operate under jurisdictional regulations which can vary widely. Regulatory scrutiny may lead to sudden operational restrictions, asset freezes, or delisting of certain cryptocurrencies. Users of these platforms should be aware that regulatory changes can materially impact access to their assets.

Furthermore, compliance requirements such as Know Your Customer (KYC) and Anti-Money Laundering (AML) procedures involve sharing personal information, posing privacy considerations. Regulatory pressures could also compel exchanges to surveil or restrict user activities.

Large centralized exchanges generally offer high liquidity, facilitating quick trade execution. However, liquidity can vary significantly between platforms and tokens, possibly leading to slippage or failed orders during volatile conditions. In extreme scenarios, liquidity crunches may limit the ability to convert assets efficiently.
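Slippage can be estimated by walking the order book: a market order consumes the best-priced liquidity first and moves to worse prices as each level empties. The sketch below assumes a list of `(price, size)` asks sorted from lowest to highest price; the `market_buy` helper and the sample book are illustrative only.

```python
def market_buy(asks, quantity):
    """Simulate a market buy against asks sorted by ascending price.

    Returns (average fill price, slippage in percent versus the best ask).
    Raises ValueError when the book cannot fill the full quantity.
    """
    remaining = quantity
    cost = 0.0
    for price, size in asks:
        take = min(remaining, size)
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("not enough liquidity to fill the order")
    average_price = cost / quantity
    best_ask = asks[0][0]
    return average_price, (average_price - best_ask) / best_ask * 100.0


book = [(100.0, 1.0), (101.0, 1.0), (105.0, 2.0)]
print(market_buy(book, 3.0))
```

The thinner the book, the faster the average fill price drifts away from the quoted best price, which is the slippage described above.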

Moreover, centralized control over order books and matching engines means that trade execution transparency is limited compared to decentralized protocols. Users should consider market structure risks when interacting with centralized exchanges.

System outages, software bugs, or maintenance periods pose operational risks on these platforms. Unexpected downtime can prevent users from acting promptly in dynamic markets. Moreover, technical glitches could jeopardize order accuracy, deposits, or withdrawals.

Best practices involve users staying informed about platform status and understanding terms of service that govern incident responses. Awareness of past incidents can factor into decisions about trustworthiness.

While the risks highlighted are inherent to centralized exchanges, utilizing advanced research and analytical tools can enhance users’ understanding and management of these exposures. AI-driven platforms like Token Metrics offer data-backed insights into exchange security practices, regulatory compliance, liquidity profiles, and overall platform reputation.

Such tools analyze multiple risk dimensions using real-time data, historical performance, and fundamental metrics. This structured approach allows users to make informed decisions based on factual assessments rather than anecdotal information.

Additionally, users can monitor news, community sentiment, and technical analytics collectively via these platforms to evaluate evolving conditions that may affect centralized exchange risk profiles.

Centralized cryptocurrency exchanges continue to play a significant role in digital asset markets, providing accessibility and liquidity. Nevertheless, they carry multifaceted risks ranging from security vulnerabilities to regulatory uncertainties and operational challenges. Understanding these risks through a comprehensive analytical framework is crucial for all participants.

AI-driven research platforms like Token Metrics, which focus on analysis rather than investment advice, can support users in navigating the complexity of exchange risks by offering systematic, data-driven insights. Combining such tools with prudent operational practices paves the way for more informed engagement with centralized exchanges.

This content is provided solely for educational and informational purposes. It does not constitute financial, investment, or legal advice. Readers should conduct their own research and consult qualified professionals before making any financial decisions.


The landscape of digital assets and blockchain technology has expanded rapidly in recent years, bringing forth a new realm known as Web3 alongside the burgeoning crypto ecosystem. For individuals curious about allocating resources to this sphere, a question often arises: should the focus be on cryptocurrencies or Web3 companies? This article aims to provide an educational and analytical perspective on these options, highlighting considerations without providing direct investment advice.

Before exploring the nuances between investing in crypto assets and Web3 companies, it's important to clarify what each represents.

Web3 companies often develop decentralized applications (dApps), offer blockchain-based services, or build infrastructure layers for the decentralized web.

Deciding between crypto assets or Web3 companies involves analyzing different dynamics:

To approach these complex investment types thoughtfully, frameworks can assist in structuring analysis:

Due to the rapidly evolving and data-intensive nature of crypto and Web3 industries, AI-powered platforms can enhance analysis by processing vast datasets and providing insights.

For instance, Token Metrics utilizes machine learning to rate crypto assets by analyzing market trends, project fundamentals, and sentiment data. Such tools support an educational and neutral perspective by offering data-driven research support rather than speculative advice.

When assessing Web3 companies, AI tools can assist with identifying emerging technologies, tracking developmental progress, and monitoring regulatory developments relevant to the decentralized ecosystem.

To gain a well-rounded understanding, consider the following steps:

Both crypto assets and Web3 companies involve unique risks that warrant careful consideration:

Deciding between crypto assets and Web3 companies involves analyzing different dimensions including technological fundamentals, market dynamics, and risk profiles. Employing structured evaluation frameworks along with AI-enhanced research platforms such as Token Metrics can provide clarity in this complex landscape.

It is essential to approach this domain with an educational mindset focused on understanding rather than speculative intentions. Staying informed and leveraging analytical tools supports sound comprehension of the evolving world of blockchain-based digital assets and enterprises.

This article is intended for educational purposes only and does not constitute financial, investment, or legal advice. Readers should conduct their own research and consult with professional advisors before making any decisions related to cryptocurrencies or Web3 companies.


The evolution from Web2 to Web3 marks a significant paradigm shift in how we interact with digital services. While Web2 platforms have delivered intuitive and seamless user experiences, Web3—the decentralized internet leveraging blockchain technology—still faces considerable user experience (UX) challenges. This article explores the reasons behind the comparatively poor UX in Web3 and the technical, design, and infrastructural hurdles contributing to this gap.

Web2 represents the current mainstream internet experience characterized by centralized servers, interactive social platforms, and streamlined services. Its UX benefits from consistent standards, mature design patterns, and direct control over data.

In contrast, Web3 aims at decentralization, enabling peer-to-peer interactions through blockchain protocols, decentralized applications (dApps), and user-owned data ecosystems. While promising increased privacy and autonomy, Web3 inherently introduces complexity in UX design.

Several intrinsic technical barriers impact the Web3 user experience:

The nascent nature of Web3 results in inconsistent and sometimes opaque design standards:

Web2 giants have invested billions over decades fostering developer communities, design systems, and customer support infrastructure. In contrast, Web3 is still an emerging ecosystem characterized by:

Such factors contribute to a user experience that feels fragmented and inaccessible to mainstream audiences.

Emerging tools powered by artificial intelligence and data analytics can help mitigate some UX challenges in Web3 by:

Integrating such AI-driven research and analytic tools enables developers and users to progressively enhance Web3 usability.

For users trying to adapt to Web3 environments, the following tips may help:

For developers, focusing on the following can improve UX outcomes:

The current disparity between Web3 and Web2 user experience primarily stems from decentralization complexities, immature design ecosystems, and educational gaps. However, ongoing innovation in AI-driven analytics, comprehensive rating platforms like Token Metrics, and community-driven UX improvements are promising. Over time, these efforts could bridge the UX divide to make Web3 more accessible and user-friendly for mainstream adoption.

This article is for educational and informational purposes only and does not constitute financial advice or an endorsement. Users should conduct their own research and consider risks before engaging in any blockchain or cryptocurrency activities.


Smart contracts have become an integral part of blockchain technology, enabling automated, trustless agreements across various platforms. Understanding what languages are used for smart contract development is essential for developers entering this dynamic field, as well as for analysts and enthusiasts who want to deepen their grasp of blockchain ecosystems. This article offers an analytical and educational overview of popular programming languages for smart contract development, discusses their characteristics, and provides insights on how analytical tools like Token Metrics can assist in evaluating smart contract projects.

Smart contract languages are specialized programming languages designed to create logic that runs on blockchains. The most prominent blockchain for smart contracts is currently Ethereum, but other blockchains have their own languages as well. The following section outlines some of the most widely used smart contract languages.

Developers evaluate smart contract languages based on various factors such as security, expressiveness, ease of use, and compatibility with blockchain platforms. Below are some important criteria:

Solidity remains the dominant language due to Ethereum's market position and is well-suited for developers familiar with JavaScript or object-oriented paradigms. It continuously evolves with community input and protocol upgrades.

Vyper has a smaller user base but appeals to projects requiring stricter security standards, as its design deliberately omits complex features that can introduce vulnerabilities.

Rust is leveraged by newer chains that aim to combine blockchain decentralization with high throughput and low latency. Developers familiar with systems programming find Rust a robust choice.

Michelson’s niche is in formal verification-heavy projects where security is paramount, such as financial contracts and governance mechanisms on Tezos.

Move and Clarity represent innovative approaches to contract safety and complexity management, focusing on deterministic execution and resource constraints.

Artificial Intelligence (AI) and machine learning have become increasingly valuable in analyzing and researching blockchain projects, including smart contracts. Platforms such as Token Metrics provide AI-driven ratings and insights by analyzing codebases, developer activity, and on-chain data.

Such tools facilitate the identification of patterns that might indicate strong development practices or potential security risks. While they do not replace manual code audits or thorough research, they support investors and developers by presenting data-driven evaluations that help in filtering through numerous projects.

Developers choosing a smart contract language should consider the blockchain platform’s restrictions and the nature of the application. Those focused on DeFi might prefer Solidity or Vyper for Ethereum, while teams aiming for cross-chain applications might lean toward Rust or Move.

Analysts seeking to understand a project’s robustness can utilize resources like Token Metrics for AI-powered insights combined with manual research, including code reviews and community engagement.

Security should remain a priority as vulnerabilities in smart contract code can lead to significant issues. Therefore, familiarizing oneself with languages that encourage safer programming paradigms contributes to better outcomes.

Understanding what languages are used for smart contract development is key to grasping the broader blockchain ecosystem. Solidity leads the field due to Ethereum’s prominence, but alternative languages like Vyper, Rust, Michelson, Move, and Clarity offer different trade-offs in security, performance, and usability. Advances in AI-driven research platforms such as Token Metrics play a supportive role in evaluating the quality and safety of smart contract projects.

This article is intended for educational purposes only and does not constitute financial or investment advice. Readers should conduct their own research and consult professionals before making decisions related to blockchain technologies and smart contract development.


With the increasing popularity of cryptocurrencies, selecting a trusted crypto exchange is an essential step for anyone interested in participating safely in the market. Crypto exchanges serve as platforms that facilitate the buying, selling, and trading of digital assets. However, the diversity and complexity of available exchanges make the selection process important yet challenging. This article covers some trusted crypto exchanges and offers guidance on how to evaluate them, while emphasizing the role of analytical tools like Token Metrics in supporting well-informed decisions.

Crypto exchanges can broadly be categorized into centralized and decentralized platforms. Centralized exchanges (CEXs) act as intermediaries holding users’ assets and facilitating trades within their systems, while decentralized exchanges (DEXs) allow peer-to-peer transactions without a central authority. Each type offers distinct advantages and considerations regarding security, liquidity, control, and regulatory compliance.

When assessing trusted crypto exchanges, several fundamental factors come into focus, including security protocols, regulatory adherence, liquidity, range of supported assets, user interface, fees, and customer support. Thorough evaluation of these criteria assists in identifying exchanges that prioritize user protection and operational integrity.

Security Measures: Robust security is critical to safeguarding digital assets. Trusted exchanges implement multi-factor authentication (MFA), cold storage for the majority of funds, and regular security audits. Transparency about security incidents and response strategies further reflects an exchange’s commitment to protection.

Regulatory Compliance: Exchanges operating within clear regulatory frameworks demonstrate credibility. Registration with financial authorities and adherence to Anti-Money Laundering (AML) and Know Your Customer (KYC) policies are important markers of legitimacy.

Liquidity and Volume: High liquidity supports competitive pricing and smooth order execution. Volume trends can be analyzed via publicly available data or through analytics platforms such as Token Metrics to gauge how active an exchange is.

Range of Cryptocurrencies: The diversity of supported digital assets allows users flexibility in managing their portfolios. Trusted exchanges often list major cryptocurrencies alongside promising altcoins, with transparent listing criteria.

User Experience and Customer Support: A user-friendly interface and responsive support contribute to efficient trading and problem resolution, enhancing overall trust.
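One way to apply the criteria above consistently is a simple weighted score. The sketch below is only an illustration: the weights, the 0 to 10 rating scale, and the `exchange_score` function are assumptions made for this example, not a Token Metrics methodology, and the ratings would come from your own research.

```python
from typing import Dict

# Hypothetical weights for the five criteria above; they sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "security": 0.30,
    "compliance": 0.25,
    "liquidity": 0.20,
    "asset_range": 0.10,
    "ux_support": 0.15,
}


def exchange_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion ratings (0 to 10) into one weighted score (0 to 10)."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in weights:
        if not 0 <= ratings[name] <= 10:
            raise ValueError(f"rating for {name!r} must be between 0 and 10")
    return sum(weights[name] * ratings[name] for name in weights)


# Example ratings for a fictional exchange, assigned by the person doing the review.
sample = {
    "security": 8,
    "compliance": 6,
    "liquidity": 9,
    "asset_range": 5,
    "ux_support": 7,
}
print(f"weighted score: {exchange_score(sample):.2f}")
```

The weights encode a judgment call (here, security and compliance count most); adjusting them to your own priorities changes the ranking, which is why the score is best treated as a way to structure a comparison rather than as a verdict.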

While numerous crypto exchanges exist, a few have earned reputations for trustworthiness based on their operational history and general acceptance in the crypto community. Below is an educational overview without endorsement.

These examples illustrate the diversity of trusted exchanges, highlighting the importance of matching exchange characteristics to individual security preferences and trading needs.

The rapid evolution of the crypto landscape underscores the value of AI-driven research tools in navigating exchange assessment. Platforms like Token Metrics provide data-backed analytics, including exchange ratings, volume analysis, security insights, and user sentiment evaluation. Such tools equip users with comprehensive perspectives that supplement foundational research.

Integrating these insights allows users to monitor exchange performance trends, identify emerging risks, and evaluate service quality over time, fostering a proactive and informed approach.

Despite due diligence, crypto trading inherently involves risks. Common concerns linked to exchanges encompass hacking incidents, withdrawal delays, regulatory actions, and operational failures. Reducing exposure includes diversifying asset holdings, using hardware wallets for storage, and continuously monitoring exchange announcements.

Educational tools such as Token Metrics contribute to ongoing awareness by highlighting risk factors and providing updates that reflect evolving market and regulatory conditions.

Choosing a trusted crypto exchange requires comprehensive evaluation across security, regulatory compliance, liquidity, asset diversity, and user experience dimensions. Leveraging AI-based analytics platforms such as Token Metrics enriches the decision-making process by delivering data-driven insights. Ultimately, informed research and cautious engagement are key components of navigating the crypto exchange landscape responsibly.

This article is for educational purposes only and does not constitute financial, investment, or legal advice. Readers should conduct independent research and consult professionals before making decisions related to cryptocurrency trading or exchange selection.

9450 SW Gemini Dr

PMB 59348

Beaverton, Oregon 97008-7105 US


Token Metrics Media LLC is a regular publication of information, analysis, and commentary focused especially on blockchain technology and business, cryptocurrency, blockchain-based tokens, market trends, and trading strategies.

Token Metrics Media LLC does not provide individually tailored investment advice and does not take a subscriber’s or anyone’s personal circumstances into consideration when discussing investments; nor is Token Metrics Advisers LLC registered as an investment adviser or broker-dealer in any jurisdiction.

Information contained herein is not an offer or solicitation to buy, hold, or sell any security. The Token Metrics team has advised and invested in many blockchain companies. A complete list of their advisory roles and current holdings can be viewed here: https://tokenmetrics.com/disclosures.html/

Token Metrics Media LLC relies on information from various sources believed to be reliable, including clients and third parties, but cannot guarantee the accuracy and completeness of that information. Additionally, Token Metrics Media LLC does not provide tax advice, and investors are encouraged to consult with their personal tax advisors.

All investing involves risk, including the possible loss of money you invest, and past performance does not guarantee future performance. Ratings and price predictions are provided for informational and illustrative purposes, and may not reflect actual future performance.