Top Crypto Trading Platforms in 2025

Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend and getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.

The Layer 1 landscape is consolidating as users and developers gravitate to chains with clear specialization. Bitcoin Cash positions itself as a payment-focused chain with low fees and quick settlement for everyday usage.

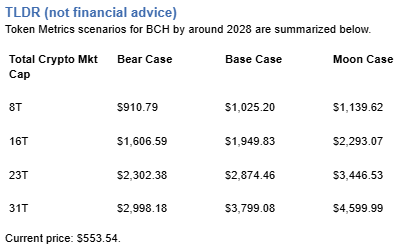

The scenario projections below map potential outcomes for BCH across different total crypto market sizes. Base cases assume steady usage and listings, while moon scenarios factor in stronger liquidity and accelerated adoption.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

TM Agent baseline:

Token Metrics lead metric for Bitcoin Cash, cashtag $BCH, is a TM Grade of 54.81%, which translates to Neutral, and the trading signal is bearish, indicating short-term downward momentum. This implies Token Metrics views $BCH as mixed value long term: fundamentals look strong, while valuation and technology scores are weak, so upside depends on improvements in adoption or technical development. Market context: Bitcoin has been setting market direction, and with broader risk-off moves altcoins face pressure, which increases downside risk for $BCH in the near term.

Live details:

Affiliate Disclosure: We may earn a commission from qualifying purchases made via this link, at no extra cost to you.

Token Metrics scenarios span four market cap tiers, each representing different levels of crypto market maturity and liquidity:

Each tier assumes progressively stronger market conditions, with the base case reflecting steady growth and the moon case requiring sustained bull market dynamics.

Bitcoin Cash represents one opportunity among hundreds in crypto markets. Token Metrics Indices bundle BCH with the top one hundred assets for systematic exposure to the strongest projects. Single tokens face idiosyncratic risks that diversified baskets mitigate.

Historical index performance demonstrates the value of systematic diversification versus concentrated positions.

Bitcoin Cash is a peer-to-peer electronic cash network focused on fast confirmation and low fees. It launched in 2017 as a hard fork of Bitcoin with larger block capacity to prioritize payments. The chain secures value transfers using proof of work and aims to keep everyday transactions affordable.

BCH is used to pay transaction fees and settle transfers, and it is widely listed across major exchanges. Adoption centers on payments, micropayments, and remittances where low fees matter. It competes as a payment‑focused Layer 1 within the broader crypto market.

Token Metrics AI provides comprehensive context on Bitcoin Cash's positioning and challenges.

Vision:

Bitcoin Cash (BCH) is a cryptocurrency that emerged from a 2017 hard fork of Bitcoin, aiming to function as a peer-to-peer electronic cash system with faster transactions and lower fees. It is known for prioritizing on-chain scalability by increasing block sizes, allowing more transactions per block compared to Bitcoin. This design choice supports its use in everyday payments, appealing to users seeking a digital cash alternative. Adoption has been driven by its utility in micropayments and remittances, particularly in regions with limited banking infrastructure. However, Bitcoin Cash faces challenges including lower network security due to reduced mining hash rate compared to Bitcoin, and ongoing competition from both Bitcoin and other scalable blockchains. Its value proposition centers on accessibility and transaction efficiency, but it operates in a crowded space with evolving technological and regulatory risks.

Problem:

The project addresses scalability limitations in Bitcoin, where rising transaction fees and slow confirmation times hinder its use for small, frequent payments. As Bitcoin evolved into a store of value, a gap emerged for a blockchain-based currency optimized for fast, low-cost transactions accessible to the general public.

Solution:

Bitcoin Cash increases block size limits from 1 MB to 32 MB, enabling more transactions per block and reducing congestion. This on-chain scaling approach allows for faster confirmations and lower fees, making microtransactions feasible. The network supports basic smart contract functionality and replay protection, maintaining compatibility with Bitcoin's core architecture while prioritizing payment utility.
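To make the capacity argument concrete, here is a rough back-of-the-envelope sketch. The 1 MB and 32 MB limits come from the text above; the 250-byte average transaction size, the decimal megabyte, and the ten-minute average block interval are illustrative assumptions, so treat the results as orders of magnitude rather than measured throughput.

```python
# Rough on-chain capacity estimate for a given block size limit.
# ASSUMPTIONS (not from the article): 250-byte average transaction,
# 1 MB = 1,000,000 bytes, one block every 600 seconds on average.
AVG_TX_BYTES = 250
BLOCK_INTERVAL_SECONDS = 600
BYTES_PER_MB = 1_000_000


def capacity(block_size_mb: float) -> tuple[int, float]:
    """Return (transactions per block, transactions per second)."""
    tx_per_block = int(block_size_mb * BYTES_PER_MB // AVG_TX_BYTES)
    tx_per_second = tx_per_block / BLOCK_INTERVAL_SECONDS
    return tx_per_block, tx_per_second


for limit_mb in (1, 32):
    per_block, per_second = capacity(limit_mb)
    print(f"{limit_mb:>2} MB limit: ~{per_block:,} tx/block, ~{per_second:.1f} tx/s")
```

Under these assumptions the larger limit raises the ceiling by a factor of 32; actual usage depends on how full blocks are in practice.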

Market Analysis:

Bitcoin Cash operates in the digital currency segment, competing with Bitcoin, Litecoin, and stablecoins for use in payments and remittances. While not the market leader, it occupies a niche focused on on-chain scalability for transactional use. Its adoption is influenced by merchant acceptance, exchange liquidity, and narratives around digital cash. Key risks include competition from layer-2 solutions on other blockchains, regulatory scrutiny of cryptocurrencies, and lower developer and miner activity compared to larger networks. Price movements are often tied to broader crypto market trends and internal protocol developments. Despite its established presence, long-term growth depends on sustained utility, network security, and differentiation in a market increasingly dominated by high-throughput smart contract platforms.

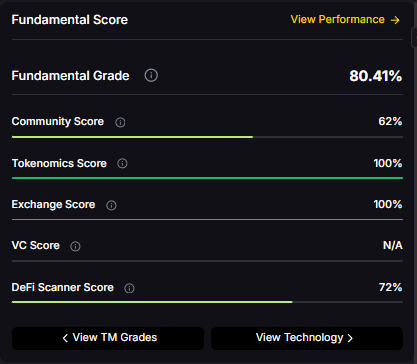

Fundamental Grade: 80.41% (Community 62%, Tokenomics 100%, Exchange 100%, VC —, DeFi Scanner 72%).

Technology Grade: 29.63% (Activity 22%, Repository 70%, Collaboration 48%, Security —, DeFi Scanner 72%).

Can BCH reach $3,000?

Based on the scenarios, BCH could reach $3,000 in the 23T moon case and 31T base case. The 23T tier projects $3,446.53 in the moon case. Not financial advice.

Can BCH 10x from current levels?

At the current price of $553.54, a 10x would reach $5,535.40. This falls within the 31T base and moon cases. Bear in mind that 10x returns require substantial market cap expansion. Not financial advice.
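The arithmetic behind these two answers can be sketched in a few lines. The $553.54 price and the $3,000 and 10x targets come from the text; the function names are illustrative only. With a fixed circulating supply, the price multiple equals the market cap multiple, which is why a 10x requires substantial market cap expansion.

```python
CURRENT_PRICE = 553.54  # BCH price quoted in the article


def price_at_multiple(price: float, multiple: float) -> float:
    """Price after growing by the given multiple, rounded to cents."""
    return round(price * multiple, 2)


def multiple_needed(price: float, target: float) -> float:
    """How many times the price must grow to hit the target."""
    return target / price


print(f"10x target: ${price_at_multiple(CURRENT_PRICE, 10):,.2f}")
print(f"Multiple needed for $3,000: {multiple_needed(CURRENT_PRICE, 3000):.2f}x")
```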

Should I buy BCH now or wait?

Timing depends on your risk tolerance and macro outlook. The current price of $553.54 sits below the 8T bear case in our scenarios. Dollar-cost averaging may reduce timing risk. Not financial advice.

Want exposure? Buy BCH on MEXC

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

Infrastructure protocols become more valuable as the crypto ecosystem scales and relies on robust middleware. Chainlink provides critical oracle infrastructure where proven utility and deep integrations drive long-term value over retail speculation. Increasing institutional adoption raises demand for professional-grade data delivery and security.

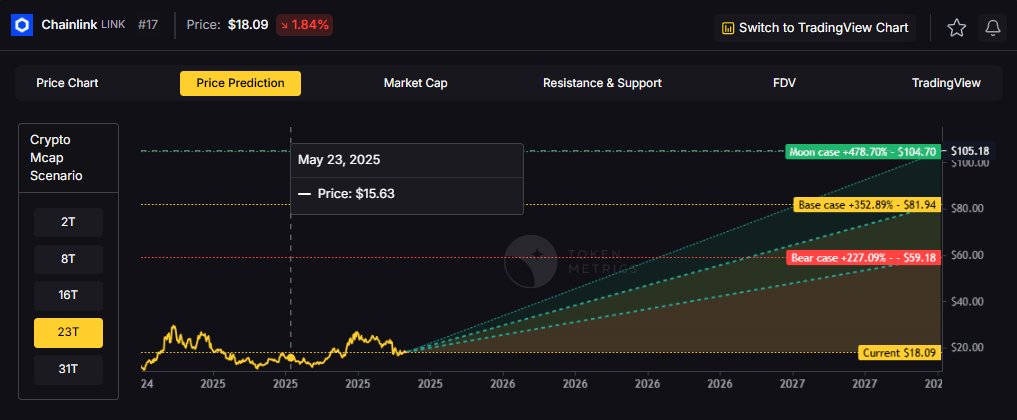

Token Metrics projections for LINK below span multiple total market cap scenarios from conservative to aggressive. Each tier assumes different levels of infrastructure demand as crypto evolves from speculative markets to institutional-grade systems. These bands frame LINK's potential outcomes into 2027.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

TM Agent baseline: Token Metrics lead metric for Chainlink, cashtag $LINK, is a TM Grade of 23.31%, which translates to a Sell, and the trading signal is bearish, indicating short-term downward momentum. This means Token Metrics currently does not endorse $LINK as a long-term buy at current conditions.

Live details: Chainlink Token Details

Affiliate Disclosure: We may earn a commission from qualifying purchases made via this link, at no extra cost to you.

Token Metrics scenarios span four market cap tiers, each representing different levels of crypto market maturity and liquidity:

8T: At an 8 trillion dollar total crypto market cap, LINK projects to $26.10 in the bear case, $30.65 in the base case, and $35.20 in the moon case.

16T: Doubling the market to 16 trillion expands the range to $42.64 (bear), $56.29 (base), and $69.95 (moon).

23T: At 23 trillion, the scenarios show $59.18, $81.94, and $104.70 respectively.

31T: In the maximum liquidity scenario of 31 trillion, LINK could reach $75.71 (bear), $107.58 (base), or $139.44 (moon).
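One way to read the four tiers side by side is to put them in a small table and express each target as a multiple of the current price. The sketch below uses the projections listed above and the $18.09 price quoted later in the FAQ; it treats the 8T "bullish" figure as the moon case, and the helper names are illustrative.

```python
LINK_PRICE = 18.09  # current price quoted in the article's FAQ

# Scenario targets copied from the tiers above (USD per LINK).
SCENARIOS = {
    "8T": {"bear": 26.10, "base": 30.65, "moon": 35.20},
    "16T": {"bear": 42.64, "base": 56.29, "moon": 69.95},
    "23T": {"bear": 59.18, "base": 81.94, "moon": 104.70},
    "31T": {"bear": 75.71, "base": 107.58, "moon": 139.44},
}


def multiples(scenarios: dict, price: float) -> dict:
    """Express every scenario target as a multiple of the given price."""
    return {
        tier: {case: round(target / price, 2) for case, target in cases.items()}
        for tier, cases in scenarios.items()
    }


def cells_at_or_above(scenarios: dict, threshold: float) -> list:
    """List the (tier, case) pairs whose target meets the threshold."""
    return [
        (tier, case)
        for tier, cases in scenarios.items()
        for case, target in cases.items()
        if target >= threshold
    ]


for tier, cases in multiples(SCENARIOS, LINK_PRICE).items():
    print(tier, cases)
print("Cells at or above $100:", cells_at_or_above(SCENARIOS, 100))
```

The threshold helper shows that $100 is first reached in the 23T moon case and is also cleared by the 31T base and moon cases.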

Chainlink represents one opportunity among hundreds in crypto markets. Token Metrics Indices bundle LINK with the top one hundred assets for systematic exposure to the strongest projects. Single tokens face idiosyncratic risks that diversified baskets mitigate.

Historical index performance demonstrates the value of systematic diversification versus concentrated positions.

Chainlink is a decentralized oracle network that connects smart contracts to real-world data and systems. It enables secure retrieval and verification of off-chain information, supports computation, and integrates across multiple blockchains. As adoption grows, Chainlink serves as critical infrastructure for reliable data feeds and automation.

The LINK token is used to pay node operators and secure the network’s services. Common use cases include DeFi price feeds, insurance, and enterprise integrations, with CCIP extending cross-chain messaging and token transfers.

Vision: Chainlink aims to create a decentralized, secure, and reliable network for connecting smart contracts with real-world data and systems. Its vision is to become the standard for how blockchains interact with external environments, enabling trust-minimized automation across industries.

Problem: Smart contracts cannot natively access data outside their blockchain, limiting their functionality. Relying on centralized oracles introduces single points of failure and undermines the security and decentralization of blockchain applications. This creates a critical need for a trustless, tamper-proof way to bring real-world information onto blockchains.

Solution: Chainlink solves this by operating a decentralized network of node operators that fetch, aggregate, and deliver data from off-chain sources to smart contracts. It uses cryptographic proofs, reputation systems, and economic incentives to ensure data integrity. The network supports various data types and computation tasks, allowing developers to build complex, data-driven decentralized applications.

Market Analysis: Chainlink is a market leader in the oracle space and a key infrastructure component in the broader blockchain ecosystem, particularly within Ethereum and other smart contract platforms. It faces competition from emerging oracle networks like Band Protocol and API3, but maintains a strong first-mover advantage and widespread integration across DeFi, NFTs, and enterprise blockchain solutions. Adoption is driven by developer activity, partnerships with major blockchain projects, and demand for secure data feeds. Key risks include technological shifts, regulatory scrutiny on data providers, and execution challenges in scaling decentralized oracle networks. As smart contract usage grows, so does the potential for oracle services, positioning Chainlink at the center of a critical niche, though its success depends on maintaining security and decentralization over time.

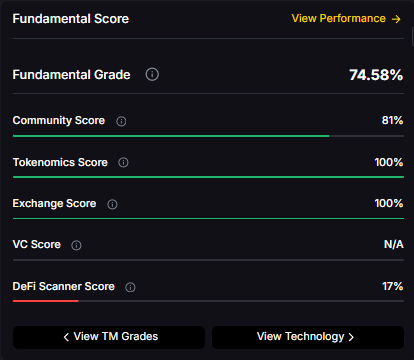

Fundamental Grade: 74.58% (Community 81%, Tokenomics 100%, Exchange 100%, VC —, DeFi Scanner 17%).

Technology Grade: 88.50% (Activity 81%, Repository 72%, Collaboration 100%, Security 86%, DeFi Scanner 17%).

Can LINK reach $100?

Yes. Based on the scenarios, LINK could reach $100+ in the 23T moon case. The 23T tier projects $104.70 in the moon case. Not financial advice.

What price could LINK reach in the moon case?

Moon case projections range from $35.20 at 8T to $139.44 at 31T. These scenarios assume maximum liquidity expansion and strong Chainlink adoption. Not financial advice.

Should I buy LINK now or wait?

Timing depends on risk tolerance and macro outlook. Current price of $18.09 sits below the 8T bear case in the scenarios. Dollar-cost averaging may reduce timing risk. Not financial advice.

Track live grades and signals: Token Details

Want exposure? Buy LINK on MEXC

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

Discover the full potential of your crypto research and portfolio management with Token Metrics. Our ratings combine AI-driven analytics, on-chain data, and decades of investing expertise—giving you the edge to navigate fast-changing markets. Try our platform to access scenario-based price targets, token grades, indices, and more for institutional and individual investors. Token Metrics is your research partner through every crypto market cycle.

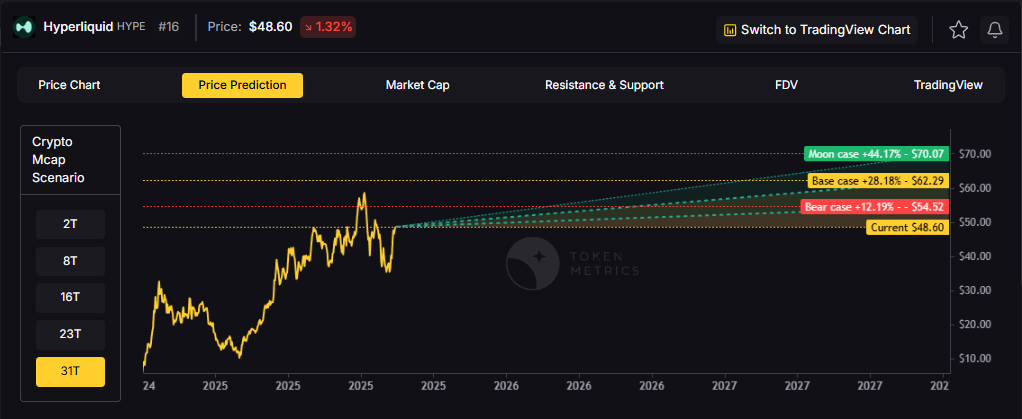

The crypto market is tilting bullish into 2026 as liquidity, infrastructure, and participation improve across the board. Clearer rules and standards are reshaping the classic four-year cycle, flows can arrive earlier, and strength can persist longer than in prior expansions.

Institutional access is widening through ETFs and custody, while L2 scaling and real-world integrations help sustain on‑chain activity. This healthier backdrop frames our scenario work for HYPE. The ranges below reflect different total crypto market sizes and the share Hyperliquid could capture under each regime.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

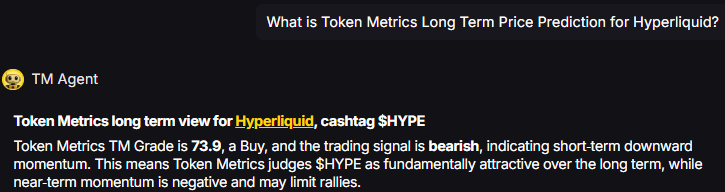

TM Agent baseline: Token Metrics TM Grade is 73.9%, a Buy, and the trading signal is bearish, indicating short-term downward momentum. This means Token Metrics judges HYPE as fundamentally attractive over the long term, while near-term momentum is negative and may limit rallies.

Live details: Hyperliquid Token Details

Affiliate Disclosure: We may earn a commission from qualifying purchases made via this link, at no extra cost to you.

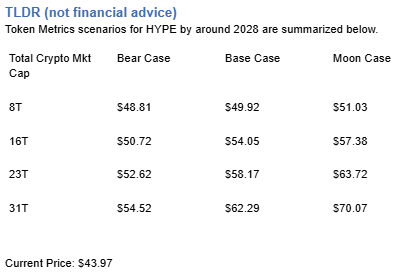

Scenario Analysis

Token Metrics scenarios span four market cap tiers, each representing different levels of crypto market maturity and liquidity:

8T: At an 8 trillion dollar total crypto market cap, HYPE projects to $48.81 in the bear case, $49.92 in the base case, and $51.03 in the moon case.

16T: Doubling the market to 16 trillion expands the range to $50.72 (bear), $54.05 (base), and $57.38 (moon).

23T: At 23 trillion, the scenarios show $52.62, $58.17, and $63.72 respectively.

31T: In the maximum liquidity scenario of 31 trillion, HYPE could reach $54.52 (bear), $62.29 (base), or $70.07 (moon).

Each tier assumes progressively stronger market conditions, with the base case reflecting steady growth and the moon case requiring sustained bull market dynamics.
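The HYPE bands are narrow at 8T and widen as the assumed market grows. A short sketch, using only the figures listed above (with the 8T "bullish" figure treated as the moon case), computes the bear-to-moon spread per tier and checks which cells clear a chosen price level. The function names are illustrative.

```python
# Scenario targets copied from the tiers above (USD per HYPE).
HYPE_SCENARIOS = {
    "8T": {"bear": 48.81, "base": 49.92, "moon": 51.03},
    "16T": {"bear": 50.72, "base": 54.05, "moon": 57.38},
    "23T": {"bear": 52.62, "base": 58.17, "moon": 63.72},
    "31T": {"bear": 54.52, "base": 62.29, "moon": 70.07},
}


def band_spread(scenarios: dict) -> dict:
    """Width of each tier's band: moon target minus bear target."""
    return {
        tier: round(cases["moon"] - cases["bear"], 2)
        for tier, cases in scenarios.items()
    }


def cells_above(scenarios: dict, level: float) -> list:
    """List the (tier, case) pairs whose target is above the level."""
    return [
        (tier, case)
        for tier, cases in scenarios.items()
        for case, target in cases.items()
        if target > level
    ]


print("Spread per tier:", band_spread(HYPE_SCENARIOS))
print("Cells above $60:", cells_above(HYPE_SCENARIOS, 60))
```

With these inputs, only the 23T moon case and the 31T base and moon cases sit above $60; the 23T base case, at $58.17, does not.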

Diversification matters. HYPE is compelling, yet concentrated bets can be volatile. Token Metrics Indices hold HYPE alongside the top one hundred tokens for broad exposure to leaders and emerging winners.

Our backtests indicate that owning the full market with diversified indices has historically outperformed both the total market and Bitcoin in many regimes due to diversification and rotation.

Hyperliquid is a decentralized exchange focused on perpetual futures with a high-performance order book architecture. The project emphasizes low-latency trading, risk controls, and capital efficiency aimed at professional and retail derivatives traders. Its token, HYPE, is used for ecosystem incentives and governance-related utilities.

Can HYPE reach $60?

Yes. The 23T moon case ($63.72) and the 31T base and moon cases ($62.29 and $70.07) sit above $60, while the 23T base case ($58.17) falls just short. Outcomes depend on liquidity and adoption. Not financial advice.

Is HYPE a good long-term investment?

Outcome depends on adoption, liquidity regime, competition, and supply dynamics. Diversify and size positions responsibly.

Disclosure

Educational purposes only, not financial advice. Crypto is volatile, do your own research and manage risk.

Token Metrics delivers AI-based crypto ratings, scenario projections, and portfolio tools so you can make smarter decisions. Discover real-time analytics on Token Metrics.

In the world of blockchain technology, a hard fork refers to a significant and radical change in a network's protocol. This change results in the creation of two separate branches, one following the previous protocol and the other following the new version.

Unlike a soft fork, which is a minor upgrade to the protocol, a hard fork requires all nodes or users to upgrade to the latest version of the protocol software.

Before delving into hard forks, it's important to understand the basics of blockchain technology. A blockchain is a decentralized digital ledger that records transactions and other events in a series of blocks.

Each block contains transaction data, and a set of rules, known as the protocol, dictates how the blockchain network functions. Because a blockchain is decentralized, changes to its protocol take effect only when the network's community of users, node operators, and miners agrees to adopt them.

When developers propose major changes or disagreements arise regarding the development of a blockchain, a hard fork may be initiated to create a new and separate blockchain.

When a hard fork occurs, the new version of the blockchain is no longer compatible with older versions. This creates a permanent divergence from the previous version of the blockchain.

The new rules and protocols implemented through the hard fork create a fork in the blockchain, with one path following the upgraded blockchain and the other path continuing along the old one.

Miners, who play a crucial role in verifying transactions and maintaining the blockchain, must choose which blockchain to continue verifying. Holders of tokens in the original blockchain will also be granted tokens in the new fork.

However, it's important to note that the old version of the blockchain may continue to exist even after the fork, potentially with security or performance flaws that the hard fork aimed to address.

Developers may implement a hard fork for various reasons. One common motivation is to correct significant security risks found in older versions of the software.

Hard forks can also introduce new functionality or reverse transactions, as seen in the case of the Ethereum blockchain's hard fork to address the hack on the Decentralized Autonomous Organization (DAO).

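In 2016, a large majority of the Ethereum community members who voted backed a hard fork to roll back transactions that had resulted in the theft of millions of dollars' worth of digital currency. The decision was not unanimous, and the dissenting minority kept running the original chain.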

The hard fork allowed DAO token holders to retrieve their funds through a newly created smart contract. While the hard fork did not undo the network's transaction history, it enabled the recovery of stolen funds and provided failsafe protection for the organization.

Hard forks have occurred in various blockchain networks, not just in Bitcoin. Bitcoin itself has witnessed several notable hard forks.

In 2014, Bitcoin XT emerged as a hard fork to increase the number of transactions per second that Bitcoin could handle. However, interest in the project faded, and it is no longer in use.

Another significant hard fork in the Bitcoin ecosystem took place in 2017, resulting in the creation of Bitcoin Cash.

The hard fork aimed to increase Bitcoin's block size to improve transaction capacity. Subsequently, in 2018, Bitcoin Cash experienced another hard fork, leading to the emergence of Bitcoin Cash ABC and Bitcoin SV.

Ethereum, another prominent cryptocurrency, also underwent a hard fork in response to the DAO hack mentioned earlier. The fork left two chains: Ethereum Classic, which maintained the original blockchain, and the updated Ethereum network.

Hard forks offer several benefits to blockchain networks. They can address security issues, enhance the performance of a blockchain, and introduce new features or functionalities.

Hard forks also provide an opportunity for participants in a blockchain community to pursue different visions for their projects and potentially resolve disagreements.

However, hard forks also come with disadvantages. They can confuse investors when a new but similar cryptocurrency is created alongside the original.

Furthermore, hard forks may expose blockchain networks to vulnerabilities, such as 51% attacks or replay attacks. Additionally, the old version of the blockchain may survive the hard fork and retain the security or performance flaws that the fork aimed to fix.

While hard forks create two separate blockchains, soft forks result in a single valid blockchain. In a soft fork, the blockchain's existing code is updated, but the old version remains compatible with the new one.

This means that not all nodes or users need to upgrade to the latest version of the protocol software. The decision to implement a hard fork or a soft fork depends on a blockchain network's specific goals and requirements.

Hard forks are often favored when significant changes are necessary, even if a soft fork could potentially achieve the same outcome.
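The compatibility difference can be illustrated with a toy model in which the only consensus rule is a maximum block size. This is a deliberately simplified sketch, not how any real client validates blocks: the `Rules` class, the sample block sizes, and the 0.5 MB tightened limit are hypothetical, while the 1 MB and 32 MB limits echo the Bitcoin Cash example earlier on this page. A change that tightens the rules behaves like a soft fork (old nodes still accept every new-rule block), and a change that loosens them behaves like a hard fork (old nodes reject some new-rule blocks, so the chain splits).

```python
from dataclasses import dataclass

MB = 1_000_000  # decimal megabyte, used for simplicity


@dataclass(frozen=True)
class Rules:
    """Toy consensus rules: a block is valid if it fits the size limit."""
    name: str
    max_block_bytes: int

    def accepts(self, block_bytes: int) -> bool:
        return block_bytes <= self.max_block_bytes


OLD = Rules("old rules", 1 * MB)
SOFT = Rules("tightened rules (soft-fork style)", MB // 2)  # hypothetical limit
HARD = Rules("loosened rules (hard-fork style)", 32 * MB)


def old_nodes_follow(new_rules: Rules, sample_blocks: list) -> bool:
    """True if every sample block valid under new_rules is also valid under OLD."""
    return all(OLD.accepts(b) for b in sample_blocks if new_rules.accepts(b))


# Hypothetical block sizes in bytes.
sample = [200_000, 400_000, 900_000, 8 * MB, 32 * MB]

for rules in (SOFT, HARD):
    verdict = "stay on one chain" if old_nodes_follow(rules, sample) else "split off"
    print(f"{rules.name}: non-upgraded nodes {verdict}")
```

In this model the tightened rules keep non-upgraded nodes on the same chain, while the loosened rules produce blocks (such as the 8 MB one) that old nodes refuse, which is the permanent divergence described above.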

Hard forks play a significant role in the evolution of blockchain technology. They allow for radical changes to a network's protocol, creating new blockchains and potential improvements in security, performance, and functionality.

However, hard forks also come with risks and challenges, such as confusion among investors and possibly exposing blockchain networks to vulnerabilities.

As the blockchain industry continues to evolve, it's essential for investors and stakeholders to stay informed about proposed changes and forks in their cryptocurrency holdings.

Understanding the implications of hard forks and their potential impact on the value of crypto assets is crucial for navigating this rapidly changing landscape.

Remember, investing in cryptocurrency should be approached cautiously, especially by newcomers who are still learning how blockchain works. Stay updated, do thorough research, and seek professional advice before making investment decisions.

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.

In the fast-paced world of cryptocurrency, it's essential to thoroughly evaluate a project before investing your hard-earned money.

With thousands of cryptocurrencies flooding the market, it can be challenging to determine which ones hold promise and which ones are destined to fade away.

This comprehensive guide will walk you through the process of evaluating cryptocurrencies, so you can make informed investment decisions and maximize your chances of success.

A cryptocurrency project worth considering should have a well-designed and informative website. Start your evaluation by visiting the project's website and looking for the following key elements:

The white paper serves as the backbone of a cryptocurrency project. It provides detailed information about the project's vision, utility, and tokenomics.

While some white papers can be technical, understanding the key aspects is essential. Pay attention to these important elements:

A cryptocurrency's social media and news presence can give you valuable insights into its community engagement and overall sentiment.

Consider the following factors when assessing a project's social media and news presence:

Community engagement: Visit the project's social media channels like Twitter, Discord, or Reddit. Look for active community moderators and meaningful interactions among community members. A strong and engaged community is a positive sign.

News mentions: Determine the project's visibility in the news. Positive mentions and coverage can indicate growing interest and potential investment opportunities. However, be cautious of excessive hype without substance.

The success of a cryptocurrency project often hinges on the capabilities and experience of its team members. Assess the project team and any partnerships they have established:

Team expertise: Research the background and qualifications of the team members. Look for relevant experience in the blockchain industry or related fields. A team with a strong track record is more likely to deliver on its promises.

Industry partnerships: Check if the project has established partnerships with reputable brands or organizations. These partnerships can provide valuable support and credibility to the project.

Market metrics provide insights into a cryptocurrency's performance and potential. Consider the following metrics when evaluating a cryptocurrency:

Note - Remember to conduct thorough research, read financial blogs, stay updated with the latest news and developments, and consider your own financial goals and risk tolerance.

Analyzing a cryptocurrency's price history can provide valuable insights into its volatility and overall trajectory.

While past performance does not indicate future results, understanding price trends can help you make more informed investment decisions.

Look for gradual and steady price increases rather than erratic spikes followed by sharp declines, which may indicate pump-and-dump schemes.

Evaluate the cryptocurrency's utility and its potential for widespread adoption. Consider whether the project solves a real-world problem or offers value within the blockchain ecosystem.

Cryptocurrencies with practical use cases and strong adoption potential are more likely to retain their value over time. Look for projects that have partnerships with established businesses or offer unique features that set them apart from competitors.

By following this comprehensive evaluation guide, you can make more informed decisions when investing in cryptocurrencies.

Cryptocurrency investments can be highly rewarding, but they require careful analysis and due diligence to maximize your chances of success.

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.

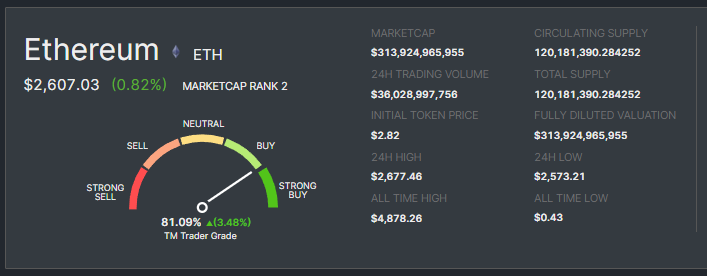

Ethereum, a trailblazer in the blockchain technology space, has established itself as the second-largest cryptocurrency by market capitalization.

However, its journey hasn't been without its fair share of ups and downs. Investors are now looking towards the future, wondering if Ethereum will experience a surge in the next bull run.

This blog post will comprehensively analyze Ethereum's current state, potential driving forces, and what it could mean for its future value.

Launched in 2015 by Vitalik Buterin, Ethereum is a decentralized platform powered by blockchain technology. Its native token, ETH, fuels various activities within the network, such as running decentralized applications (dApps) and executing smart contracts.

Ethereum is significant in the DeFi (decentralized finance) space, enabling various financial instruments like lending, borrowing, and trading without relying on traditional financial institutions.

As of today, the price of Ethereum sits at around $2,600, representing an 86% increase year-to-date. This growth comes after a significant dip, with the price falling from its peak of $4,800 in November 2021 to around $1,200 in October 2022.
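The percentages implied by these round figures can be checked with a short sketch. All inputs are the approximate prices quoted above, so the outputs are approximate too; the variable and function names are illustrative.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100


PEAK = 4800.0       # November 2021 peak quoted above
TROUGH = 1200.0     # October 2022 level quoted above
CURRENT = 2600.0    # price quoted above
YTD_GAIN_PCT = 86   # year-to-date gain quoted above

drawdown = pct_change(PEAK, TROUGH)                  # peak-to-trough decline
implied_start = CURRENT / (1 + YTD_GAIN_PCT / 100)   # price at the start of the year
gain_to_regain_peak = pct_change(CURRENT, PEAK)      # rise still needed to revisit the peak

print(f"Peak-to-trough change: {drawdown:.0f}%")
print(f"Implied start-of-year price: ${implied_start:,.0f}")
print(f"Gain needed to regain the peak: {gain_to_regain_peak:.1f}%")
```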

The recent price increase can be attributed to several factors, including the successful completion of "The Merge" in September 2022, which transitioned Ethereum from a proof-of-work to a proof-of-stake consensus mechanism.

While the current trend shows optimism, it's essential to understand the forces that caused Ethereum's previous decline. The cryptocurrency market, along with other financial markets, faced a downturn in 2022 due to several key factors:

Despite the recent downtrend, several factors suggest that Ethereum could experience significant growth in the next bull run:

While predicting the exact timing of the next bull run is difficult, many experts believe it is on the horizon, and Ethereum is poised to reap substantial benefits.

Here are a few factors that can significantly impact Ethereum.

Direct Price Increase: Historically, bull runs have led to significant price surges across the crypto market, and Ethereum is no exception. The combined effect of increased market demand, investor confidence, and heightened media attention could propel Ethereum's price significantly higher.

Some market experts have set price targets as high as a potential peak of $8,000 by 2026, and even higher in the long run.

Booming DeFi and dApp Ecosystem: The DeFi and dApp ecosystem on Ethereum is already thriving, but a bull run could fuel its exponential growth. This growth would directly translate to increased demand for ETH, further pushing its price upward.

Moreover, new projects and innovative use cases will likely emerge, attracting even more users and capital to the Ethereum network.

Enhanced Liquidity and Trading Volume: Bull runs typically lead to increased trading activity and higher liquidity in the market. This makes it easier for investors to fill buy and sell orders, creating favorable conditions for opportunistic trading and capitalizing on short-term price movements.

Institutional Investment: During bull runs, institutional investors tend to allocate a portion of their portfolios to cryptocurrencies, recognizing their potential for high returns. This influx of institutional capital would provide significant backing to Ethereum, further solidifying its position as a leading cryptocurrency and potentially driving its price higher.

1. Diversification: Consider allocating a portion of your portfolio to Ethereum to capitalize on its potential growth, but remember to diversify your investments to mitigate risk.

2. Dollar-Cost Averaging: Invest gradually over time to smooth out price fluctuations and reduce the risk of buying at a peak.

3. Research and Due Diligence: Conduct your research and due diligence before investing in any cryptocurrency project.

4. Long-Term Perspective: Consider investing for the long term, as the full potential of Ethereum might not be realized in the short term.

5. Stay Informed: Remain informed about key developments in the cryptocurrency market and Ethereum's progress to make informed investment decisions.
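The dollar-cost averaging idea in point 2 can be illustrated with a little arithmetic. The sketch below is a minimal illustration, not a trading tool: the function name `dollar_cost_average` and the example prices are hypothetical and chosen only to show the mechanics.

```python
def dollar_cost_average(prices, amount_per_purchase):
    """Buy a fixed dollar amount at each price.

    Returns (units_bought, average_cost_per_unit).
    """
    # Each purchase buys more units when the price is low, fewer when it is high.
    units = sum(amount_per_purchase / price for price in prices)
    total_invested = amount_per_purchase * len(prices)
    return units, total_invested / units


# Hypothetical monthly ETH prices in USD, for illustration only.
example_prices = [2000.0, 1600.0, 2500.0, 1800.0]
units, avg_cost = dollar_cost_average(example_prices, 250.0)
simple_average = sum(example_prices) / len(example_prices)

print(f"Bought {units:.4f} ETH at an average cost of ${avg_cost:,.2f}")
print(f"Simple average of the prices: ${simple_average:,.2f}")
```

Because equal dollar amounts buy more units at lower prices, the average cost per unit works out to the harmonic mean of the purchase prices, which is never higher than their simple average. It does not guarantee a profit if prices keep falling.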

While it's impossible to predict the future with certainty, several factors suggest that Ethereum could see a significant price recovery in the next bull run. Experts offer varying predictions:

Techopedia: Estimates an average price of $9,800 by the end of 2030, with highs of $12,200 and lows of $7,400.

Changelly: Predicts a potential peak of $7,200 by 2026.

Standard Chartered: Offers the most optimistic outlook, forecasting a possible fivefold increase to $8,000 by the end of 2026, with a long-term target of $26,000-$35,000.


Ethereum is a complex and dynamic ecosystem with numerous factors influencing its price. While the recent downtrend may raise concerns, its strong fundamentals, ongoing development, and potential for future growth suggest that Ethereum is well-positioned to thrive in the next bull run.

Investors should carefully consider their risk tolerance and investment goals before making decisions.

Remember:

1. Investing in cryptocurrencies carries inherent risks.

2. Always conduct your own research and due diligence before investing.

3. Never invest more than you can afford to lose.

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.


The crypto market is a dynamic and interconnected landscape, where one event can trigger a chain reaction of consequences throughout the entire ecosystem. This phenomenon, known as the domino effect, poses risks and opportunities for investors and enthusiasts alike.

Understanding the domino effect is crucial for navigating the complexities of the crypto market and making informed decisions.

This comprehensive post will delve into the intricate workings of this phenomenon, exploring its potential impact and providing you with actionable insights to mitigate risks and maximize opportunities.

Imagine a line of dominoes standing upright. When a single domino falls, it knocks over the next domino, which in turn knocks over another, and so on, creating a chain reaction. This is analogous to the domino effect in crypto.

In the crypto world, a single negative event, such as a major exchange hack or a regulatory crackdown, can trigger a wave of panic and selling pressure.

As investors lose confidence, they sell their crypto holdings, causing prices to plummet. This sell-off can lead to further negative consequences, such as the insolvency of crypto lending platforms or the collapse of poorly-capitalized projects.

Several factors contribute to the domino effect in crypto.

First, market sentiment plays a crucial role. Positive news or a significant development in the crypto industry can create a positive ripple effect, boosting confidence in other cryptocurrencies and lifting their value. Conversely, negative news or market downturns can trigger a panic sell-off, causing a decline in the value of multiple cryptocurrencies.

Second, market liquidity is a contributing factor. When investors try to cash out their holdings in a specific cryptocurrency, it can lead to a chain reaction of sell orders that also affects other cryptocurrencies.

Finally, regulatory actions and government policies can significantly impact the crypto market. If new regulations or bans are imposed on cryptocurrencies in one country, they can create fear and uncertainty, leading to a domino effect across the global crypto market.

Mt. Gox Hack (2014): The hack of the Mt. Gox exchange, which resulted in the loss of over 850,000 bitcoins, triggered a major sell-off that sent the price of Bitcoin plummeting by 50%.

The DAO Hack (2016): A smart contract exploit on The DAO, a decentralized autonomous organization that had raised about $150 million worth of ETH, led to the theft of approximately 3.6 million ETH, worth roughly $50 million to $60 million at the time. This event eroded investor confidence and contributed to a broader market downturn.

TerraUSD Collapse (2022): The collapse of the TerraUSD stablecoin triggered a domino effect that ultimately led to the bankruptcy of crypto hedge fund Three Arrows Capital and the suspension of withdrawals on the Celsius Network.

While the domino effect can be unpredictable and difficult to control, there are steps you can take to protect yourself:

By implementing these strategies, you can protect yourself from the domino effect and minimize the risks associated with cryptocurrency investments.

Expert opinions on the future of the domino effect in crypto vary. Some experts believe that as the cryptocurrency market becomes more mature and diversified, the impact of the domino effect will diminish.

They argue that with the increasing adoption of blockchain technology and the emergence of various use cases, cryptocurrencies will become less correlated, reducing the likelihood of a widespread collapse.

On the other hand, some experts caution that the interconnectedness of cryptocurrencies and the market's overall volatility make it susceptible to a domino effect.

They argue that the lack of regulation and the potential for speculative behavior can exacerbate the impact of a major cryptocurrency's downfall.

Overall, the future of the domino effect in crypto remains uncertain, but it is clear that market dynamics and regulatory measures will play crucial roles in shaping its impact.

The domino effect is a powerful force in the crypto market, and it's crucial to understand its potential impact. By taking the necessary precautions and adopting a prudent approach, you can navigate the complexities of the crypto landscape and maximize your chances of success.



Tokenization is a groundbreaking concept that has gained significant traction in recent years. It has transformed how we perceive ownership, protect valuable assets, and engage in cryptocurrency investments.

In this comprehensive guide, we will delve into the fundamentals of tokenization, explore its inner workings, and unravel its practical applications. So, let's embark on this journey to understand the power of tokenization and its potential to reshape the future.

Tokenization is the process of converting the ownership rights of an asset into unique digital units called tokens. These tokens are digital representations of tangible or intangible assets, ranging from artwork and real estate to company shares and voting rights.

By tokenizing assets, individuals and businesses can unlock new avenues of ownership and transfer, facilitating seamless transactions and enhancing liquidity.

Tokenization originally emerged as a data security technique businesses employ to safeguard sensitive information. It involves replacing the original data with tokens, which do not contain the actual data but share similar characteristics or formatting.

This method ensures that the sensitive information remains protected, as access to the tokens alone is insufficient to decipher the original data.

Tokens essentially serve as substitutes for real assets or information. They hold no inherent value or purpose other than securing data or representing ownership.

Tokens can be created through various techniques, such as reversible cryptographic functions, non-reversible functions, or randomly generated numbers.

These tokens are then linked to transactional data stored on a decentralized ledger known as the blockchain. This integration with blockchain technology ensures the immutability and transparency of asset ownership, as all transactions can be easily verified using blockchain data.

In the context of payment information security, tokenization involves using a payment gateway that automates the token creation process and stores the original data separately.

The token, which can be traced back to the original information stored in the seller's token vault, is then transmitted to a payment processor.

This approach eliminates the need to provide sensitive payment details during transactions, enhancing security and reducing the risk of data breaches.
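The payment flow described above can be sketched in a few lines. This is a minimal in-memory illustration under stated assumptions: the `TokenVault` class and its method names are hypothetical, the tokens are randomly generated digits that keep the card number's length and last four digits, and a real vault would add encryption, access control, and persistent storage.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to card numbers."""

    def __init__(self):
        self._token_to_data = {}
        self._data_to_token = {}

    def tokenize(self, card_number: str) -> str:
        # Reuse the existing token so one card always maps to one token.
        if card_number in self._data_to_token:
            return self._data_to_token[card_number]
        while True:
            # Random digits preserve the format; the last four digits are kept
            # so the token still looks like the card it stands for.
            random_part = "".join(
                secrets.choice("0123456789") for _ in range(len(card_number) - 4)
            )
            token = random_part + card_number[-4:]
            if token != card_number and token not in self._token_to_data:
                break
        self._token_to_data[token] = card_number
        self._data_to_token[card_number] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault holder can map a token back to the original data.
        return self._token_to_data[token]


vault = TokenVault()
card = "4111111111111111"  # well-known test card number, not a real account
token = vault.tokenize(card)
print("Token sent to the processor:", token)
print("Original recovered from the vault:", vault.detokenize(token) == card)
```

The token carries no recoverable information about the first twelve digits, so intercepting it is of little use without access to the vault.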

Tokenization encompasses various forms, with each type serving distinct purposes and applications. Let's explore the different categories of tokenization:

Fungible Tokenization - Fungible tokens are standard blockchain tokens with identical values, making them interchangeable. Think of it as swapping one dollar bill for another dollar bill.

Non-Fungible Tokenization - Non-fungible tokens (NFTs) represent ownership of unique assets, such as digital art pieces or real estate properties. Unlike fungible tokens, NFTs do not have a set value and derive their worth from the underlying asset they represent.

Governance Tokenization - Governance tokens grant voting rights to token holders, enabling them to participate in decision-making processes within a blockchain ecosystem. These tokens are crucial in blockchain systems' governance and collaborative aspects.

Utility Tokenization - Utility tokens serve as access keys to specific products and services within a particular blockchain network. They facilitate actions like paying transaction fees, operating decentralized market systems, or accessing certain functionalities of the blockchain platform.

Vault Tokenization - Vault tokenization is a conventional method to protect payment information. It involves generating tokens that can be used for payment processing without divulging sensitive card numbers or other data. The original data is securely stored in a token vault.

Vaultless Tokenization - Vaultless tokenization is an alternative approach to payment processing that eliminates the need for a token vault. Instead, cryptographic devices and algorithms are utilized to convert data into tokens, ensuring secure transactions without centralized storage.

Natural Language Processing Tokenization - Natural language processing tokenization involves breaking text down into smaller units, enabling computers to better understand and process the data. This technique encompasses word, subword, and character tokenization to enhance computational efficiency.
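The last category is the easiest to show in code. The sketch below illustrates word and character tokenization with Python's standard library only; the function names are hypothetical, and subword tokenization is omitted because it normally relies on a trained vocabulary.

```python
import re


def word_tokenize(text):
    """Split text into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokenize(text):
    """Split text into individual characters, skipping whitespace."""
    return [ch for ch in text if not ch.isspace()]


sentence = "Tokens represent assets."
print(word_tokenize(sentence))
print(char_tokenize("NFT art"))
```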

Tokenization offers many benefits that revolutionize asset ownership, financial transactions, and data security. Let's explore the advantages of tokenization:

Improved Liquidity and Accessibility - Tokenization opens asset ownership to a broader audience, enhancing liquidity and accessibility.

By dividing assets into tokens, investment opportunities become more inclusive, allowing individuals with limited capital to participate in previously exclusive markets.

Moreover, digitizing assets through tokenization eliminates many traditional barriers associated with investing in tangible assets, streamlining the investment process and reducing costs.
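The fractional-ownership arithmetic behind this benefit is simple enough to sketch. All names and figures below are hypothetical and for illustration only; real offerings add fees, minimums, and legal constraints.

```python
def token_price(asset_value_usd, token_count):
    """Price of one token when an asset is split into equal tokens."""
    return asset_value_usd / token_count


def tokens_affordable(budget_usd, asset_value_usd, token_count):
    """Whole tokens an investor can buy with a given budget."""
    return int(budget_usd // token_price(asset_value_usd, token_count))


def ownership_share(tokens_held, token_count):
    """Fraction of the asset represented by the tokens held."""
    return tokens_held / token_count


# Hypothetical example: a $1,000,000 property split into 10,000 tokens.
price = token_price(1_000_000, 10_000)
held = tokens_affordable(250, 1_000_000, 10_000)
share = ownership_share(held, 10_000)
print(f"Token price: ${price:,.2f}")
print(f"A $250 budget buys {held} tokens, or {share:.4%} of the asset")
```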

Faster and Convenient Transactions - Tokenization enables faster and more convenient transactions by eliminating intermediaries and minimizing the complexities of traditional financial processes. Assets can be easily transferred through tokenization, and blockchain data can seamlessly verify ownership.

This streamlined approach significantly reduces transaction times, reduces reliance on intermediaries such as lawyers, banks, and escrow agents, and can cut costs such as brokerage commissions.

Enhanced Security and Transparency - Tokenization leverages blockchain technology to ensure the security and transparency of transactions. Blockchain's decentralized nature and immutability make it an ideal platform for storing transaction data and verifying asset ownership.

The transparency of blockchain transactions allows for increased trust among potential buyers, as the entire transaction history can be audited and verified. Additionally, tokenization reduces the risk of fraudulent activity and helps protect the integrity of asset ownership records.

Tokenization holds immense potential for transforming enterprise systems across various industries. Let's explore how tokenization can benefit businesses:

Streamlined Transactions and Settlements - Tokenization can greatly reduce transaction times between payments and settlements, enabling faster and more efficient financial processes.

By tokenizing intangible assets such as copyrights and patents, businesses can digitize and enhance the value of these assets, facilitating shareholding and improving the overall valuation process.

Additionally, tokenized assets like stablecoins can be utilized for transactions, reducing reliance on traditional banking systems and intermediaries.

Loyalty Programs and Incentives - Tokenization enables businesses to create loyalty-based tokens incentivizing customers to engage with their products and services.

These tokens can be used to reward customer loyalty, facilitate seamless transactions, and even participate in decision-making processes within decentralized autonomous organizations (DAOs).

Loyalty tokens enhance transparency and efficiency in loyalty reward systems, benefiting businesses and customers.

Renewable Energy Projects and Trust Building - Tokenization can play a vital role in financing renewable energy projects. Project developers can expand their investor pool and build trust within the industry by issuing tokens backed by renewable energy assets.

Tokenization allows investors to participate in renewable energy initiatives, contributing to a sustainable future while enjoying the benefits of asset ownership.

While tokenization presents numerous advantages, it also faces challenges that must be addressed for widespread adoption and growth. Let's explore some of these challenges:

Regulatory Considerations - As tokenization gains prominence, regulatory frameworks must evolve to accommodate this emerging technology.

Different countries have varying regulations and policies regarding tokenization, creating a fragmented landscape that hinders seamless transactions and investments. Regulatory clarity is essential to ensure compliance and foster trust within the tokenization ecosystem.

Asset Management and Governance - Managing tokenized assets, especially those backed by physical assets, poses challenges regarding ownership and governance.

For instance, determining the entity responsible for managing the property becomes complex if multiple foreign investors collectively own a tokenized hotel.

Tokenization platforms must establish clear rules and governance structures to address such scenarios and ensure the smooth operation of tokenized assets.

Integration of Real-World Assets with Blockchain - Integrating real-world assets with blockchain technology presents technical and logistical challenges.

For example, ensuring the availability and authenticity of off-chain assets like gold when tokenizing them requires robust mechanisms and trusted external systems.

The overlap between the physical world and the blockchain environment necessitates the establishment of defined rules and protocols to govern the interaction between the two realms.

Despite these challenges, tokenization continues to gain momentum as a powerful financial tool. Increased regulatory clarity, technological advancements, and growing awareness drive the adoption and recognition of tokenization's potential.

As governments and industries embrace tokenization, new investment opportunities and innovative ways of asset ownership will emerge, shaping the future of finance.

Tokenization has emerged as a transformative force in the realm of ownership, asset security, and financial transactions. By converting assets into unique digital tokens, tokenization enables seamless transfers, enhances liquidity, and ensures the integrity of ownership records.

Through blockchain or non-blockchain methods, tokenization provides businesses and individuals unprecedented opportunities to engage in secure transactions, access new investment avenues, and revolutionize traditional systems.

With its potential to unlock value, improve accessibility, and streamline processes, tokenization is poised to shape the future of finance and redefine the concept of ownership. Embrace the power of tokenization and be future-ready in this dynamic landscape of digital assets and decentralized economies.



In the world of cryptocurrencies, Coinbase and Robinhood are two popular platforms that allow users to buy and sell digital assets.

Both exchanges have unique features and advantages, making it important for users to understand the differences before deciding which is better for their investment needs.

This article will compare Coinbase and Robinhood across various aspects such as fees, cryptocurrency selection, security, ease of use, and more. So, let's dive in and find out which crypto exchange comes out on top.

When it comes to fees, Robinhood takes the lead over Coinbase. Robinhood offers commission-free trading, allowing users to buy and sell cryptocurrencies without incurring any transaction fees.

However, it's important to note that Robinhood still makes money through its controversial payment-for-order flow (PFOF) system, which may impact the execution price of trades.

On the other hand, Coinbase has a more complex fee structure. The fees vary depending on factors such as the trade size, payment method used, market conditions, and location.

Coinbase charges a fee of about 0.50% per transaction plus a spread of about 0.5% on cryptocurrency purchases and sales. These costs can add up, especially for frequent traders. However, Coinbase offers reduced fees to traders who use its advanced trading platform, Coinbase Advanced (formerly Coinbase Pro).
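To see how these costs add up, here is a rough estimate using the two rates quoted above. It is a sketch under stated assumptions: the function name is hypothetical, both rates are treated as applying to the full order amount, and actual Coinbase pricing varies with trade size, payment method, and location.

```python
from decimal import Decimal

FEE_RATE = Decimal("0.005")     # 0.50% fee quoted above (assumed to apply to the whole order)
SPREAD_RATE = Decimal("0.005")  # roughly 0.5% spread quoted above


def estimate_trading_cost(order_usd):
    """Return (fee, spread, total_cost) in USD for a single order."""
    order = Decimal(str(order_usd))
    fee = order * FEE_RATE
    spread = order * SPREAD_RATE
    return fee, spread, fee + spread


fee, spread, total = estimate_trading_cost(1000)
print(f"Fee: ${fee:.2f}, spread: ${spread:.2f}, total cost: ${total:.2f}")

# Ten such trades a month would cost roughly ten times as much.
print(f"Ten $1,000 trades: ${total * 10:.2f}")
```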

In terms of fees, Robinhood wins for its commission-free trading. However, it's worth considering the potential impact of Robinhood's PFOF system on trade execution and fill prices.

When it comes to the variety of cryptocurrencies available for trading, Coinbase outshines Robinhood by a significant margin.

Coinbase supports over 250 digital currencies and tokens and regularly adds new ones. Some of the popular cryptocurrencies available on Coinbase include Bitcoin (BTC), Ethereum (ETH), Cardano (ADA), and many more.

On the other hand, Robinhood offers a more limited selection of cryptocurrencies. Currently, Robinhood supports only 18 digital assets, including Bitcoin, Ethereum, Dogecoin, and others.

While Robinhood has been expanding its crypto offerings, it still lags behind Coinbase regarding the number of supported cryptocurrencies. If you're looking for a wide range of cryptocurrency options, Coinbase is the clear winner in this category.

Security is a crucial factor to consider when choosing a crypto exchange. Both Coinbase and Robinhood prioritize the security of user funds, but they have different approaches.

Coinbase implements robust security measures to protect user assets. They store 98% of digital assets in air-gapped cold storage, keeping them offline and away from potential hacks.

Coinbase also offers two-factor authentication (2FA) for added account security. In addition, Coinbase holds an insurance policy to cover losses from breaches affecting cryptocurrencies held in hot wallets.

On the other hand, Robinhood also takes security seriously. They store crypto assets in cold storage, although the exact percentage of assets stored offline is not specified. Robinhood offers two-factor authentication (2FA) for account security as well.

Both exchanges offer some protection for non-crypto balances. Coinbase provides FDIC insurance for USD balances, while Robinhood offers SIPC coverage for ETFs, stocks, and cash funds. Neither FDIC nor SIPC coverage extends to cryptocurrency holdings.

Regarding security, both Coinbase and Robinhood have solid measures in place to protect user funds. However, Coinbase's emphasis on cold storage and insurance coverage gives it an edge in this category.

Both Coinbase and Robinhood have user-friendly interfaces, making them accessible to beginners. The simplicity of their platforms makes it easy for users to navigate and execute trades.

Coinbase offers an intuitive interface, allowing users to sign up easily and connect their bank accounts or credit cards for buying and selling crypto.

The platform provides a straightforward process for completing transactions and tracking activities. Coinbase also offers a mobile app for convenient on-the-go trading.

Similarly, Robinhood provides a user-friendly trading application that supports the purchase of crypto, stocks, options, and ETFs.

The app is designed with simplicity in mind, making it easy for beginners to understand and use. Robinhood also offers a web-based platform for users who prefer trading on their computers.

In terms of ease of use, both Coinbase and Robinhood excel in providing intuitive platforms that are suitable for beginners.

When it comes to advanced capabilities, Coinbase offers more options compared to Robinhood. Coinbase provides features such as staking, where users can earn rewards for holding certain cryptocurrencies.

They also offer an advanced trading platform, Coinbase Advanced (formerly Coinbase Pro), which caters to experienced and professional traders. Additionally, Coinbase allows users to trade cryptocurrencies for one another, providing more flexibility in investment strategies.

On the other hand, Robinhood is primarily focused on providing a simple and accessible trading experience. While they have expanded their crypto offerings, Robinhood does not currently offer advanced features like staking or crypto-to-crypto trading.

If you're an experienced trader or looking for advanced capabilities, Coinbase's additional features make it the preferred choice.

Apart from the key factors discussed above, there are a few additional considerations when choosing between Coinbase and Robinhood.

Firstly, Coinbase has a wider global reach, available in over 100 countries, while Robinhood is limited to the United States.

Secondly, Coinbase offers various payment methods, including bank account transfers, credit/debit cards, and PayPal. On the other hand, Robinhood only supports bank account transfers for cryptocurrency purchases.

Finally, Coinbase has faced occasional technical issues during high trading volumes, which may impact the user experience. Robinhood has also experienced outages in the past, with reported service interruptions.

Considering these additional factors can help you make an informed decision based on your specific needs and preferences.

Both platforms have their strengths and weaknesses. Coinbase offers a wider selection of cryptocurrencies, advanced trading capabilities, and a global presence.

On the other hand, Robinhood provides commission-free trading, a user-friendly interface, and the ability to trade crypto alongside other asset classes.

If you're looking for a wide selection of cryptocurrencies and advanced features, Coinbase is the better choice. However, Robinhood may be more suitable if you prefer commission-free trading and the ability to trade multiple asset classes on a single platform.

Ultimately, the decision between Coinbase and Robinhood depends on your individual investment goals, trading preferences, and the specific features that matter most to you. It is important to weigh these factors and do further research before making your final choice.



In the fast-paced world of cryptocurrencies, Ethereum has always been at the forefront of innovation. While "halving" is commonly associated with Bitcoin, Ethereum has its unique approach to this concept.

The Ethereum halving, often referred to as the "Triple Halving," is a multifaceted process that has profound implications for the future of this popular cryptocurrency.

In this article, we will delve deep into the Ethereum halving phenomenon, exploring its significance in the ever-evolving crypto market.

To understand the Ethereum halving, it's important first to grasp the fundamentals of Ethereum's underlying technology. Ethereum originally operated on a consensus mechanism known as Proof of Work (PoW), similar to Bitcoin.

In this system, miners solved complex mathematical problems to validate transactions and create new blocks. As a reward for their efforts, miners were given newly issued ether (ETH).

However, Ethereum underwent a significant transformation with the Ethereum 2.0 upgrade. This upgrade, completed with the Merge in September 2022, moved the network from the PoW consensus mechanism to a more energy-efficient and scalable model called Proof of Stake (PoS).

Unlike PoW, where miners compete to validate transactions, PoS relies on validators who lock up a certain amount of Ethereum as a stake. These validators are then chosen to create new blocks based on factors such as the amount they stake.

This transition to PoS brings about several significant changes to the Ethereum ecosystem. It eliminates the energy-intensive process of mining and reduces the daily issuance rate of Ethereum tokens.

Additionally, the Ethereum Improvement Proposal (EIP) 1559 introduces a new fee structure that burns a portion of the transaction fees, further reducing the overall supply of Ethereum.

One of the key components of the Ethereum halving is the shift from mining to staking. Under the PoS model, validators are chosen to create new blocks based on the amount of Ethereum they have staked.

This means that the more Ethereum a validator holds, the higher their chances of being selected to validate transactions.
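The idea that more stake means a higher chance of selection can be illustrated with a stake-weighted random draw. This is a simplified sketch, not Ethereum's actual selection algorithm: the validator names and stake amounts are hypothetical, and the real protocol works with fixed-size validator deposits and its own source of randomness.

```python
import random
from collections import Counter


def pick_validator(stakes, rng):
    """Pick one validator with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[name] for name in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


# Hypothetical stakes in ETH: bob has three times alice's stake.
stakes = {"alice": 32.0, "bob": 96.0}
rng = random.Random(42)  # seeded so the simulation is repeatable
counts = Counter(pick_validator(stakes, rng) for _ in range(10_000))

for name, wins in counts.items():
    print(f"{name}: selected {wins} times out of 10,000")
```

Over many draws, bob should be selected roughly three times as often as alice, mirroring the three-to-one stake ratio.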

Staking Ethereum has several advantages over traditional mining. First and foremost, it is more energy-efficient, as it does not require the use of powerful computational hardware.

This shift to a more sustainable consensus mechanism aligns with Ethereum's commitment to environmental sustainability.

Secondly, staking Ethereum helps to reduce the overall supply of Ethereum in circulation. When Ethereum is staked, it is effectively locked up for a certain period of time, making it temporarily unavailable for trading or selling.

This reduction in circulating supply creates scarcity, which can potentially drive up the price of Ethereum over time.

Moreover, staking Ethereum allows validators to earn staking rewards. These rewards are proportional to the amount of Ethereum staked, providing an additional incentive for users to participate in the network and contribute to its security and stability.

Another crucial aspect of the Ethereum halving is the implementation of EIP-1559, which introduces a new fee structure for transactions on the Ethereum network.

Under the previous fee model, users would bid for transaction priority by suggesting a gas fee. This often resulted in bidding wars during periods of network congestion.

EIP-1559 sets a base fee for transactions, which adjusts dynamically based on network demand. Crucially, this base fee is burned, meaning it is permanently removed from circulation rather than being given to miners or validators.

By burning a portion of the transaction fees, Ethereum's overall supply can decrease during times of high network usage. This deflationary pressure can offset the inflationary issuance of new coins, potentially leading to Ethereum becoming a deflationary asset over time.
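The text above does not spell out how the base fee adjusts. The sketch below follows the integer update rule as published in the EIP-1559 specification, where the base fee moves by at most one eighth per block depending on how far gas usage was from the target. The 15 million gas target and the example fee are illustrative assumptions, and the function names are hypothetical.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # base fee changes by at most 1/8 (12.5%) per block


def next_base_fee(parent_base_fee, parent_gas_used, parent_gas_target):
    """Compute the next block's base fee (in wei) from the parent block."""
    if parent_gas_used == parent_gas_target:
        return parent_base_fee
    if parent_gas_used > parent_gas_target:
        # Demand above target: raise the base fee by at least 1 wei.
        gas_delta = parent_gas_used - parent_gas_target
        fee_delta = max(
            parent_base_fee * gas_delta
            // parent_gas_target
            // BASE_FEE_MAX_CHANGE_DENOMINATOR,
            1,
        )
        return parent_base_fee + fee_delta
    # Demand below target: lower the base fee.
    gas_delta = parent_gas_target - parent_gas_used
    fee_delta = (
        parent_base_fee * gas_delta
        // parent_gas_target
        // BASE_FEE_MAX_CHANGE_DENOMINATOR
    )
    return parent_base_fee - fee_delta


def burned_in_block(base_fee, gas_used):
    """Wei burned in a block: the base fee paid on every unit of gas used."""
    return base_fee * gas_used


GWEI = 10**9
target = 15_000_000  # illustrative gas target
fee = 100 * GWEI     # illustrative starting base fee

print(next_base_fee(fee, 30_000_000, target) / GWEI, "gwei after a full block")
print(next_base_fee(fee, 0, target) / GWEI, "gwei after an empty block")
print(burned_in_block(fee, target) / 10**18, "ETH burned in a block at target")
```

A run of full blocks compounds the 12.5% increase, which is how sustained congestion raises fees and the amount burned.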

The introduction of fee burning has several implications for the Ethereum ecosystem. First, it improves the predictability and stability of transaction fees, making it easier for users to estimate the cost of their transactions.

This is particularly beneficial for developers and users of decentralized applications (dApps), as it creates a more user-friendly experience and reduces the barriers to entry.

Second, fee burning helps to align the incentives of miners and validators with the long-term success of the Ethereum network. In the PoW model, miners are primarily motivated by the block rewards they receive for validating transactions.

However, as the issuance of new coins decreases over time, transaction fees become a more significant source of income for those securing the network. By burning some of these fees, Ethereum aims to give them a vested interest in the network's sustainability and efficiency.

In addition to the shift to PoS and the burning of transaction fees, the Ethereum halving also involves a significant reduction in the daily issuance rate of Ethereum tokens.

Under the PoW model, miners were rewarded with newly issued Ethereum tokens for validating transactions and securing the network.

However, with the transition to PoS and the phasing out of traditional mining, the daily issuance rate of Ethereum tokens has been significantly reduced.

This reduction in token issuance limits the supply of new Ethereum entering the market, creating scarcity. When combined with the burning of transaction fees, this reduction in supply can exert upward pressure on the price of Ethereum.
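The interaction between issuance and burning comes down to a subtraction: supply shrinks on any day when more ETH is burned than issued. The sketch below shows that arithmetic with hypothetical daily figures; the function names and all numbers are illustrative assumptions, not measured network data.

```python
def net_supply_change(issued_eth, burned_eth):
    """Net change in supply: positive is inflationary, negative is deflationary."""
    return issued_eth - burned_eth


def annualized_supply_rate(daily_net_change, total_supply):
    """Approximate yearly supply growth rate if every day looked like this one."""
    return daily_net_change * 365 / total_supply


# Hypothetical figures for illustration only.
total_supply = 120_000_000.0
quiet_day = net_supply_change(issued_eth=1_700.0, burned_eth=800.0)
busy_day = net_supply_change(issued_eth=1_700.0, burned_eth=2_500.0)

print(f"Quiet day: {quiet_day:+.0f} ETH ({annualized_supply_rate(quiet_day, total_supply):+.3%} per year)")
print(f"Busy day:  {busy_day:+.0f} ETH ({annualized_supply_rate(busy_day, total_supply):+.3%} per year)")
```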

The reduced token issuance has several implications for Ethereum as an investment. First, it helps to mitigate the potential impact of inflation on the value of Ethereum.

As the issuance of new coins decreases, the inflation rate decreases as well, making Ethereum a more attractive asset for long-term holders.

Second, the reduction in token issuance aligns with the principles of scarcity and supply and demand economics. With a limited supply of new coins entering the market, the value of existing coins can increase, assuming continued demand for Ethereum.

The Ethereum halving, or the "Triple Halving," has profound implications for the Ethereum network, its participants, and the broader crypto ecosystem. Here's an in-depth exploration of its impact across various aspects:

Reward System Changes for Validators

With the transition to PoS, the Ethereum halving represents a shift in the reward system for validators. Instead of relying on mining rewards, validators are rewarded with staking rewards based on the amount of Ethereum they stake.

These rewards are proportional to the stake and other factors, incentivizing validators to actively participate in the network and secure its operations.

Transaction Fees: A More Efficient and Predictable System

The introduction of EIP-1559 and the burning of transaction fees have significant implications for users of the Ethereum network.

Under EIP-1559, every transaction pays a protocol-set base fee that rises or falls with network demand, and that base fee is burned rather than paid to validators; users can add an optional tip to prioritize their transactions. This benefits users by reducing the volatility of transaction fees and creating a more user-friendly experience.
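To make the fee mechanism more concrete, here is a simplified sketch of the base fee adjustment rule from the EIP-1559 specification, plus the split between the burned base fee and the validator tip. The function names are illustrative, values are in wei per unit of gas, and details such as the fork-transition block are left out.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # limits each block's change to 12.5%
ELASTICITY_MULTIPLIER = 2            # gas target is half of the gas limit


def next_base_fee(parent_base_fee: int, parent_gas_used: int, parent_gas_limit: int) -> int:
    """Compute the next block's base fee from the parent block's usage."""
    gas_target = parent_gas_limit // ELASTICITY_MULTIPLIER
    if parent_gas_used == gas_target:
        return parent_base_fee
    if parent_gas_used > gas_target:
        # Block was fuller than the target: raise the base fee (by at least 1).
        gas_delta = parent_gas_used - gas_target
        fee_delta = max(
            parent_base_fee * gas_delta // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR,
            1,
        )
        return parent_base_fee + fee_delta
    # Block was emptier than the target: lower the base fee.
    gas_delta = gas_target - parent_gas_used
    fee_delta = parent_base_fee * gas_delta // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
    return parent_base_fee - fee_delta


def split_fee(gas_used: int, base_fee: int, priority_fee: int) -> tuple[int, int]:
    """Return (amount burned, amount paid to the validator) for one transaction."""
    return gas_used * base_fee, gas_used * priority_fee


print(next_base_fee(100, 30_000_000, 30_000_000))  # full block  -> 112
print(next_base_fee(100, 0, 30_000_000))           # empty block -> 88
print(split_fee(21_000, 10, 2))                    # -> (210000, 42000)
```

Because the base fee can move by at most one eighth per block, wallets can estimate the next block's fee fairly reliably, which is the predictability benefit described above.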

Deflationary Pressure and Scarcity

The Ethereum halving introduces deflationary pressure on the Ethereum supply through a combination of reduced token issuance and the burning of transaction fees. This deflationary nature can create scarcity, potentially leading to upward pressure on the price of Ethereum.

It aligns with supply and demand economics principles, where a limited supply combined with continued demand can drive price appreciation.

Impact on Stakeholder Dynamics

The Ethereum halving has implications for various stakeholders within the Ethereum ecosystem:

Ethereum and Bitcoin, as leading cryptocurrencies, both have mechanisms in place to control inflation and ensure the longevity of their respective networks.

While they share similar goals, the methods and implications of their halving events are distinct. Let's delve deeper into the comparison between the Ethereum halving and the Bitcoin halving:

Definition of Halving

The Ethereum halving, or the "Triple Halving," is a continuous process without a fixed date. It encompasses the shift to PoS, the burning of transaction fees, and the reduction in token issuance.

In contrast, the Bitcoin halving is a predetermined event that occurs every 210,000 blocks, or approximately every four years. During the Bitcoin halving, the block rewards for miners are reduced by 50%, decreasing the rate of new Bitcoin issuance.
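The fixed nature of Bitcoin's schedule can be shown in a few lines. This is a minimal sketch of the block subsidy rule, using the well-known starting subsidy of 50 BTC and the 210,000-block halving interval; the function name is illustrative, and amounts are in satoshis (1 BTC = 100,000,000 satoshis).

```python
HALVING_INTERVAL = 210_000                 # blocks between halvings
INITIAL_SUBSIDY_SATS = 50 * 100_000_000    # 50 BTC expressed in satoshis


def block_subsidy(height: int) -> int:
    """Return the newly issued amount (in satoshis) for a block at this height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        # After 64 halvings the shift would exceed the value's width; subsidy is zero.
        return 0
    # Each halving cuts the subsidy in half, which is a right shift by one bit.
    return INITIAL_SUBSIDY_SATS >> halvings


for height in (0, 210_000, 420_000, 630_000, 840_000):
    print(height, block_subsidy(height) / 100_000_000, "BTC")
```

Unlike Ethereum's issuance, which varies with staking participation and fee burning, this schedule depends only on block height, so future subsidy levels are known in advance.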

Purpose and Impact on Mining

The Ethereum halving aims to transition to a more energy-efficient consensus mechanism (PoS) and potentially make Ethereum deflationary over time. As a result, traditional mining becomes obsolete, and miners must adapt by either transitioning to staking or mining other PoW cryptocurrencies.

On the other hand, the Bitcoin halving aims to control inflation by reducing the rate at which new Bitcoins are introduced into circulation. The reduction in block rewards puts downward pressure on mining profitability, leading to a potential reduction in the number of miners or a consolidation of mining power.

Effect on Supply and Price Implications

The Ethereum halving, through the combination of reduced token issuance and the burning of transaction fees, limits the supply of new Ethereum entering the market. This reduction in supply, coupled with continued demand, can exert upward pressure on Ethereum's price over time.

Historically, the Bitcoin halving has been associated with price surges in the months following the event. However, it's important to note that various factors, including market sentiment, regulatory changes, and macroeconomic conditions, also influence price dynamics.

Network Security and Historical Context

The Ethereum halving introduces PoS as a more energy-efficient and secure consensus mechanism. Validators are incentivized to act honestly, as they have Ethereum at stake. Misbehaving validators risk losing their staked Ethereum, ensuring the security and integrity of the network.

On the other hand, Bitcoin relies on PoW and miners' computational power to validate transactions. As block rewards decrease, transaction fees become a more significant incentive for miners, ensuring continued network security.

In terms of historical context, Ethereum's journey has been marked by continuous evolution and various upgrades, such as the introduction of EIP-1559 and the Ethereum Merge.

These milestones contribute to Ethereum's position as a leading smart contract platform and highlight the importance of innovation and adaptability in the blockchain and crypto space.

The Ethereum halving, or the "Triple Halving," is a testament to Ethereum's adaptability, resilience, and vision for the future. It aims to enhance Ethereum's efficiency, sustainability, and value proposition through the transition to PoS, burning of transaction fees, and reduction in token issuance.

This sets a precedent for other cryptocurrencies, emphasizing scalability, security, and user-centric design. The Ethereum halving creates new opportunities for investors, developers, and users, fostering growth and innovation within the ecosystem.

The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other advice, and you should not treat any of the website's content as such.

Token Metrics does not recommend buying, selling, or holding any cryptocurrency. Conduct your due diligence and consult your financial advisor before making investment decisions.


In the cryptocurrency world, the rise of meme coins has been nothing short of extraordinary. These unique digital assets take inspiration from popular memes and often possess a comedic or entertaining trait.

Meme coins have gained significant attention and popularity thanks to their enthusiastic online communities and viral nature.

In this comprehensive guide, we will explore the world of meme coins, their characteristics, top examples, and the potential risks and benefits of investing in them.

Meme coins are a unique category of cryptocurrencies that draw inspiration from popular memes or possess a comedic trait. These digital assets are designed to capture the online community's attention and go viral.

Meme coins originated with Dogecoin, created in 2013 as a satirical take on the hype surrounding Bitcoin and other mainstream cryptocurrencies.

Dogecoin's creators, Billy Markus and Jackson Palmer, intended it to be a fun and accessible alternative to traditional cryptocurrencies.

Like the memes that inspire them, meme coins aim to create a sense of community and engage with their followers through humor and entertainment. They often have a very large or uncapped supply, making them inflationary.

While some meme coins serve purely as trading instruments, others have started to offer utility within decentralized finance (DeFi) ecosystems or as part of wider crypto projects.

One of the defining characteristics of meme coins is their high volatility. These coins are subject to extreme changes in value over short periods, driven by the current buzz and popularity surrounding the token.

Factors such as celebrity endorsements, social media trends, and online communities can significantly impact the value of meme coins.

For example, when Elon Musk or Mark Cuban promotes a meme coin like Dogecoin, its value often experiences a surge. However, once the hype dies down, the price can plummet just as quickly.

It's important to note that meme coins generally have a higher risk level than traditional cryptocurrencies. Their values are mainly speculative and may not have a clear use case or intrinsic value.

Additionally, meme coins often have a massive or uncapped supply, which can contribute to their fluctuating value. However, despite the risks, meme coins have gained significant market capitalization and continue to attract a passionate community of traders and investors.

Meme coins operate on blockchain technology, similar to other cryptocurrencies. Many are issued as tokens through smart contracts on blockchains like Ethereum or Solana, while others, such as Dogecoin, run on their own blockchain. For token-based meme coins, these smart contracts enable the creation, distribution, and trading of the coins on decentralized exchanges (DEXs) and other platforms.

The process of buying and selling meme coins is similar to that of other cryptocurrencies. Users can access centralized cryptocurrency exchanges such as Coinbase, Binance, or Kraken to purchase meme coins directly with fiat currencies or other cryptocurrencies.

Alternatively, decentralized exchanges like PancakeSwap allow users to trade meme coins directly from their wallets. It's important to note that conducting thorough research and due diligence before investing in any meme coin is crucial to minimize risks.

In the ever-evolving landscape of meme coins, several tokens have emerged as leaders in market capitalization and popularity.

While Dogecoin and Shiba Inu are widely recognized as the pioneers of meme coins, the market now boasts various options. Let's take a closer look at some of the top meme coins:

Dogecoin (DOGE) holds a special place in the history of meme coins. Created in 2013, Dogecoin quickly gained popularity thanks to its iconic Shiba Inu dog logo and its association with the "Doge" meme.

Initially intended as a joke, Dogecoin's lighthearted nature attracted a passionate online community, leading to widespread adoption.

Dogecoin has experienced significant price volatility throughout its existence, often driven by influential figures like Elon Musk.

Despite its meme origins, Dogecoin has managed to maintain a strong following and has even surpassed Bitcoin in terms of daily transaction volume at certain times.


Shiba Inu (SHIB) is another prominent meme coin that has gained traction in recent years. The project takes inspiration from the Dogecoin community and aims to create a decentralized ecosystem built around its own decentralized exchange, ShibaSwap. Shiba Inu gained popularity after being listed on major cryptocurrency exchanges, attracting many traders and investors.

Shiba Inu's developers introduced innovative features such as the "ShibaSwap Bone" (BONE) governance token, allowing community members to participate in decision-making. The project has also implemented burn mechanisms to reduce the supply of SHIB tokens over time.


Pepe (PEPE) is a meme coin that has gained significant popularity in the cryptocurrency market thanks to its association with the iconic Pepe the Frog meme.

The coin has built a dedicated online community that is passionate about its success. Pepe is highly volatile and speculative, and it can be traded with market or limit orders on various cryptocurrency exchanges.

However, it is crucial to prioritize the security of Pepe coins by storing them in a secure wallet, whether a software or hardware wallet, to safeguard against potential security threats.

Floki Inu (FLOKI) is a meme coin that has recently gained significant attention. Named after Elon Musk's pet dog, Floki Inu aims to dethrone Dogecoin as the leading meme coin. The project boasts an ambitious roadmap, including a 3D NFT metaverse, DeFi utilities, a crypto education platform, and a merchandise store.

Floki Inu's community actively engages in charitable initiatives, pledging to build schools as part of their social impact efforts. With its unique features and dedicated community, Floki Inu has the potential to become a significant player in the meme coin space.

When considering investing in meme coins, conducting thorough research and evaluating the project's safety is essential.

While meme coins can offer exciting opportunities for potential gains, they also come with inherent risks. Here are some factors to consider when assessing the safety of meme coins:

By performing due diligence and considering these factors, investors can make more informed decisions when investing in meme coins.

Meme coins have come a long way since their inception, and their future looks promising. While meme coins initially faced skepticism for their lack of clear use cases or benefits beyond entertainment, the landscape is evolving.

New projects that aim to offer utility and create decentralized ecosystems around their meme coins are emerging.

For example, Shiba Inu has developed ShibaSwap, a decentralized exchange where users can swap tokens and participate in governance through the BONE token.

Floki Inu is exploring the potential of NFT gaming and crypto education platforms. These utility-focused meme coins aim to expand meme coin possibilities and value propositions beyond mere speculation.

Additionally, growing acceptance of meme coins by mainstream businesses, and their integration into those businesses' products, could further contribute to the adoption and value of meme coins.

Buying meme coins is relatively straightforward, but selecting reputable platforms and exercising caution is essential. Here are the general steps to follow when purchasing meme coins:

Remember to conduct thorough research and only invest what you can afford to lose when buying meme coins. The volatile nature of these assets means that prices can fluctuate dramatically, and cryptocurrency investments have inherent risks.

Meme coins have undoubtedly made a significant impact on the cryptocurrency market. These unique digital assets, inspired by memes and driven by passionate online communities, have attracted the attention of traders and investors alike.

While meme coins carry risks, including high volatility and uncertain value propositions, they also offer the potential for significant gains and opportunities for community engagement.

Remember, the cryptocurrency market is highly volatile, and investing in meme coins or other digital assets carries inherent risks.

It's essential to stay updated on market trends, perform due diligence, and only invest what you can afford to lose. With the right approach, meme coins can be an exciting addition to your investment portfolio, offering the potential for both entertainment and financial gains.



In the volatile world of cryptocurrency investing, it is crucial for investors to have a solid understanding of risk management strategies.

While many investors focus solely on potential returns, it is equally important to prioritize risk mitigation in order to achieve long-term success.