Top Crypto Trading Platforms in 2025


Big news: We’re cranking up the heat on AI-driven crypto analytics with the launch of the Token Metrics API and our official SDK (Software Development Kit). This isn’t just an upgrade – it's a quantum leap, giving traders, hedge funds, developers, and institutions direct access to cutting-edge market intelligence, trading signals, and predictive analytics.

Crypto markets move fast, and having real-time, AI-powered insights can be the difference between catching the next big trend or getting left behind. Until now, traders and quants have been wrestling with scattered data, delayed reporting, and a lack of truly predictive analytics. Not anymore.

The Token Metrics API delivers 32+ high-performance endpoints packed with powerful AI-driven insights right into your lap, including:

Getting started with the Token Metrics API is simple:

At Token Metrics, we believe data should be decentralized, predictive, and actionable.

The Token Metrics API & SDK bring next-gen AI-powered crypto intelligence to anyone looking to trade smarter, build better, and stay ahead of the curve. With our official SDK, developers can plug these insights into their own trading bots, dashboards, and research tools – no need to reinvent the wheel.


Crypto is transitioning into a broadly bullish regime into 2026 as liquidity improves and adoption deepens.

Regulatory clarity is reshaping the classic four-year cycle: flows can arrive earlier and persist longer as institutions gain confidence.

Access and infrastructure continue to mature with ETFs, qualified custody, and faster L2 scaling that reduce frictions for new capital.

Real‑world integrations expand the surface area for crypto utility, which supports sustained participation across market phases.

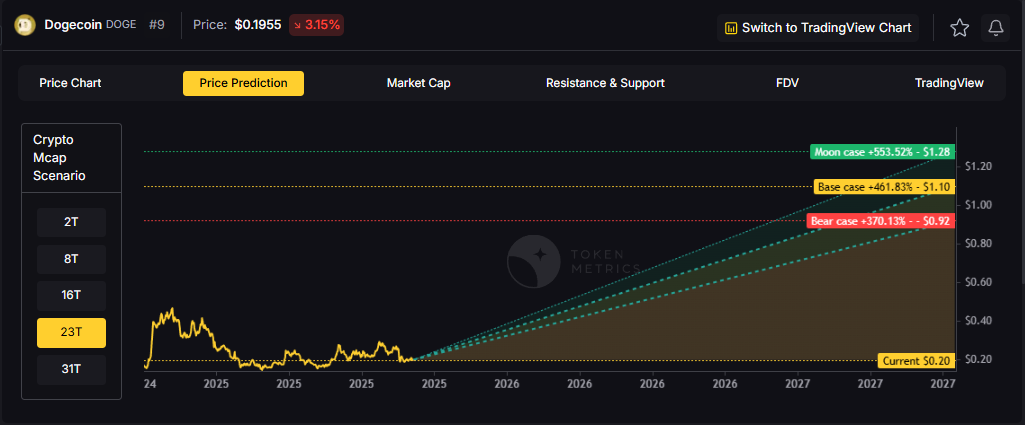

This backdrop frames our scenario work for DOGE. The bands below reflect different total market sizes and DOGE's share dynamics.

Read the TLDR first, then dive into grades, catalysts, and risks.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.
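The market-cap share math behind a band can be sketched as follows. This is a minimal illustration, not Token Metrics' model: the share values and circulating supply are hypothetical placeholders, the function names are invented for this example, and the cycle analogues and TA guardrails mentioned above are not modeled. Only the total market cap tiers (8T to 31T) come from the article.

```python
# Illustrative sketch only. The shares and supply are HYPOTHETICAL placeholders,
# not Token Metrics figures and not a forecast for any token.
TOTAL_CAP_TIERS = {"8T": 8e12, "16T": 16e12, "23T": 23e12, "31T": 31e12}
HYPOTHETICAL_SHARES = {"bear": 0.005, "base": 0.010, "moon": 0.020}
HYPOTHETICAL_SUPPLY = 100e9  # circulating tokens (assumed)


def implied_price(total_market_cap, share, supply):
    """Price implied if a token captures `share` of the total crypto market cap."""
    if supply <= 0:
        raise ValueError("supply must be positive")
    return total_market_cap * share / supply


def scenario_table(tiers=TOTAL_CAP_TIERS, shares=HYPOTHETICAL_SHARES,
                   supply=HYPOTHETICAL_SUPPLY):
    """Map each market cap tier to bear/base/moon implied prices."""
    return {
        tier: {band: implied_price(cap, share, supply)
               for band, share in shares.items()}
        for tier, cap in tiers.items()
    }


if __name__ == "__main__":
    for tier, bands in scenario_table().items():
        print(tier, {band: round(price, 4) for band, price in bands.items()})
```

The point of the sketch is the shape of the calculation: a larger total market or a larger share lifts every band, which is why the scenarios are indexed by total market cap.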

TM Agent baseline: Token Metrics' lead metric, TM Grade, is 22.65 (Sell), and the trading signal is bearish, indicating short-term downward momentum. Price context: $DOGE is trading around $0.193, rank #9, down about 3.1% in 24 hours and roughly 16% over 30 days. Implication: upside likely requires a broader risk-on environment and renewed retail or celebrity-driven interest.

Live details: Dogecoin Token Details → https://app.tokenmetrics.com/en/dogecoin

• Scenario driven: outcomes hinge on total crypto market cap, and higher liquidity and adoption lift the bands.

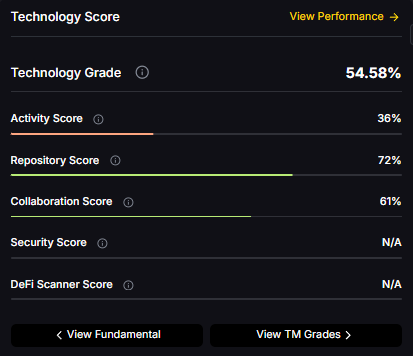

• Technology: Technology Grade 54.58% (Activity 36%, Repository 72%, Collaboration 61%, Security N/A, DeFi Scanner N/A).

• TM Agent gist: cautious long‑term stance until grades and momentum improve.

• Education only, not financial advice.

8T:

16T:

23T:

31T:

Diversification matters. Dogecoin is compelling, yet concentrated bets can be volatile. Token Metrics Indices hold DOGE alongside the top one hundred tokens for broad exposure to leaders and emerging winners.

Our backtests indicate that owning the full market with diversified indices has historically outperformed both the total market and Bitcoin in many regimes due to diversification and rotation.

Dogecoin is a peer-to-peer cryptocurrency that began as a meme but has evolved into a widely recognized digital asset used for tipping, payments, and community-driven initiatives. It runs on its own blockchain with inflationary supply mechanics. The token’s liquidity and brand awareness create periodic speculative cycles, especially during broad risk-on phases.

Technology Snapshot from Token Metrics

Technology Grade: 54.58% (Activity 36%, Repository 72%, Collaboration 61%, Security N/A, DeFi Scanner N/A).

Catalysts That Skew Bullish

• Institutional and retail access expands with ETFs, listings, and integrations.

• Macro tailwinds from lower real rates and improving liquidity.

• Product or roadmap milestones such as upgrades, scaling, or partnerships.

Risks That Skew Bearish

• Macro risk-off from tightening or liquidity shocks.

• Regulatory actions or infrastructure outages.

• Concentration or validator economics and competitive displacement.

Special Offer — Token Metrics Advanced Plan with 20% Off

Unlock platform-wide intelligence on every major crypto asset. Use code ADVANCED20 at checkout for twenty percent off.

• AI-powered ratings on thousands of tokens for traders and investors.

• Interactive TM AI Agent to ask any crypto question.

• Indices explorer to surface promising tokens and diversified baskets.

• Signal dashboards, backtests, and historical performance views.

• Watchlists, alerts, and portfolio tools to track what matters.

• Early feature access and enhanced research coverage.

Can DOGE reach $1.00?

Yes, multiple tiers imply levels above $1.00 by the 2027 horizon, including the 23T Base and all 31T scenarios. Not financial advice.

Is DOGE a good long-term investment?

Outcome depends on adoption, liquidity regime, competition, and supply dynamics. Diversify and size positions responsibly.

• Track live grades and signals: Token Details

• Join Indices Early Access

• Want exposure? Buy DOGE on MEXC

Disclosure

Educational purposes only, not financial advice. Crypto is volatile; do your own research and manage risk.


The crypto market is shifting toward a broadly bullish regime into 2026 as liquidity improves and risk appetite normalizes.

Regulatory clarity across major regions is reshaping the classic four-year cycle: flows can arrive earlier and persist longer.

Institutional access keeps expanding through ETFs and qualified custody, while L2 scaling and real-world integrations broaden utility.

Infrastructure maturity lowers frictions for capital, which supports deeper order books and more persistent participation.

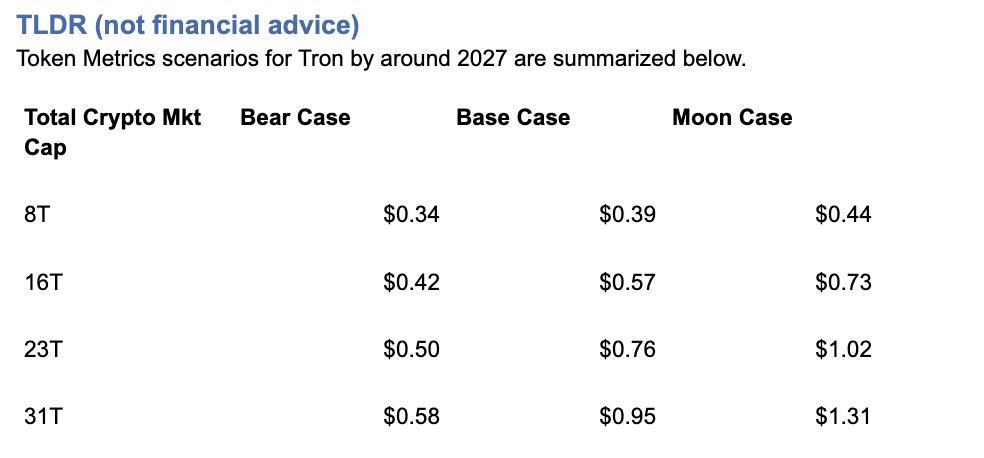

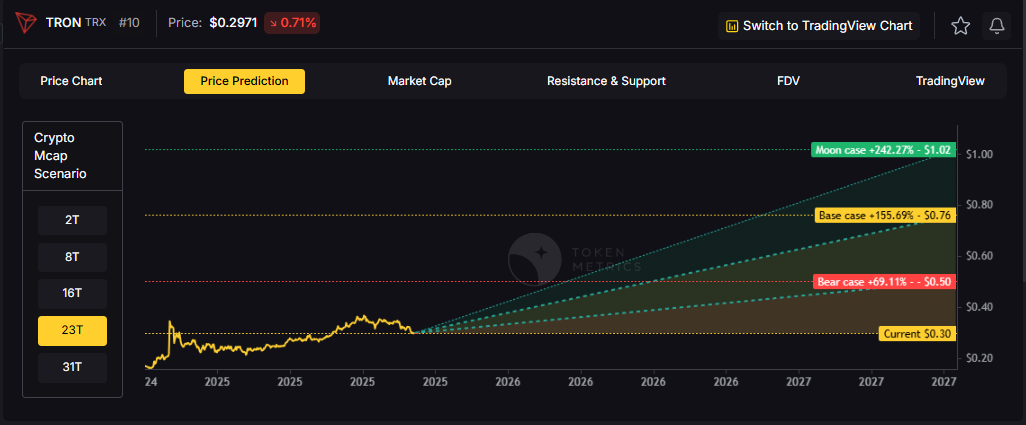

This backdrop frames our scenario work for TRX. The bands below map potential outcomes to different total crypto market sizes.

Use the table as a quick benchmark, then layer in live grades and signals for timing.

Current price: $0.2971.

How to read it: Each band blends cycle analogues and market-cap share math with TA guardrails. Base assumes steady adoption and neutral or positive macro. Moon layers in a liquidity boom. Bear assumes muted flows and tighter liquidity.

TM Agent baseline: Token Metrics TM Grade for $TRX is 19.06, which translates to a Strong Sell, and the trading signal is bearish, indicating short-term downward momentum. Price context: $TRX is trading around $0.297, market cap rank #10, and is down about 11% over 30 days while up about 80% year-over-year. It has returned roughly 963% since the last trading signal flip.

Live details: Tron Token Details → https://app.tokenmetrics.com/en/tron

Buy TRX: https://www.mexc.com/acquisition/custom-sign-up?shareCode=mexc-2djd4

• Scenario driven: outcomes hinge on total crypto market cap, and higher liquidity and adoption lift the bands.

• TM Agent gist: bearish near term, upside depends on a sustained risk-on regime and improvements in TM Grade and the trading signal.

• Education only, not financial advice.

8T:

16T:

23T:

Diversification matters. Tron is compelling, yet concentrated bets can be volatile. Token Metrics Indices hold TRX alongside the top one hundred tokens for broad exposure to leaders and emerging winners.

Our backtests indicate that owning the full market with diversified indices has historically outperformed both the total market and Bitcoin in many regimes due to diversification and rotation.

Get early access: https://docs.google.com/forms/d/1AnJr8hn51ita6654sRGiiW1K6sE10F1JX-plqTUssXk/preview


Tron is a smart-contract blockchain focused on low-cost, high-throughput transactions and cross-border settlement.

The network supports token issuance and a broad set of dApps, with an emphasis on stablecoin transfer volume and payments.

TRX is the native asset that powers fees and staking for validators and delegators within the network.

Developers and enterprises use the chain for predictable costs and fast finality, which supports consumer-facing use cases.

Catalysts That Skew Bullish

• Institutional and retail access expands with ETFs, listings, and integrations.

• Macro tailwinds from lower real rates and improving liquidity.

• Product or roadmap milestones such as upgrades, scaling, or partnerships.

Risks That Skew Bearish

• Macro risk-off from tightening or liquidity shocks.

• Regulatory actions or infrastructure outages.

• Concentration or validator economics and competitive displacement.

Unlock platform-wide intelligence on every major crypto asset. Use code ADVANCED20 at checkout for twenty percent off.

• AI-powered ratings on thousands of tokens for traders and investors.

• Interactive TM AI Agent to ask any crypto question.

• Indices explorer to surface promising tokens and diversified baskets.

• Signal dashboards, backtests, and historical performance views.

• Watchlists, alerts, and portfolio tools to track what matters.

• Early feature access and enhanced research coverage.

Start with Advanced today → https://www.tokenmetrics.com/token-metrics-pricing

Can TRX reach $1?

Yes, the 23T moon case shows $1.02 and the 31T moon case shows $1.31, which imply a path to $1 in higher-liquidity regimes. Not financial advice.

Is TRX a good long-term investment?

Outcome depends on adoption, liquidity regime, competition, and supply dynamics. Diversify and size positions responsibly.

Track live grades and signals: Token Details → https://app.tokenmetrics.com/en/tron

Join Indices Early Access: https://docs.google.com/forms/d/1AnJr8hn51ita6654sRGiiW1K6sE10F1JX-plqTUssXk/preview

Want exposure? Buy TRX on MEXC → https://www.mexc.com/acquisition/custom-sign-up?shareCode=mexc-2djd4

Disclosure

Educational purposes only, not financial advice. Crypto is volatile; do your own research and manage risk.


The cryptocurrency market presents unprecedented wealth-building opportunities, but it also poses significant challenges.

With thousands of tokens competing for investor attention and market volatility that can erase gains overnight, success in crypto investing requires more than luck—it demands a strategic, data-driven approach.

Token Metrics AI Indices have emerged as a game-changing solution for investors seeking to capitalize on crypto's growth potential while managing risk effectively.

This comprehensive guide explores how to leverage these powerful tools to build, manage, and optimize your cryptocurrency portfolio for maximum returns in 2025 and beyond.

The traditional approach to crypto investing involves countless hours of research, technical analysis, and constant market monitoring.

For most investors, this proves unsustainable.

Token Metrics solves this challenge by offering professionally managed, AI-driven index portfolios that automatically identify winning opportunities and rebalance based on real-time market conditions.

What makes Token Metrics indices unique is their foundation in machine learning technology.

The platform analyzes over 6,000 cryptocurrencies daily, processing more than 80 data points per asset including technical indicators, fundamental metrics, on-chain analytics, sentiment data, and exchange information.

This comprehensive evaluation far exceeds what individual investors can accomplish manually.

The indices employ sophisticated AI models including gradient boosting decision trees, recurrent neural networks, random forests, natural language processing algorithms, and anomaly detection frameworks.

These systems continuously learn from market patterns, adapt to changing conditions, and optimize portfolio allocations to maximize risk-adjusted returns.

Token Metrics offers a diverse range of indices designed to serve different investment objectives, risk tolerances, and market outlooks.

Understanding these options is crucial for building an effective crypto portfolio.

Conservative Indices: Stability and Long-Term Growth

For investors prioritizing capital preservation and steady appreciation, conservative indices focus on established, fundamentally sound cryptocurrencies with proven track records.

These indices typically allocate heavily to Bitcoin and Ethereum while including select large-cap altcoins with strong fundamentals.

The Investor Grade Index exemplifies this approach, emphasizing projects with solid development teams, active communities, real-world adoption, and sustainable tokenomics.

This index is ideal for retirement accounts, long-term wealth building, and risk-averse investors seeking exposure to crypto without excessive volatility.

Balanced Indices: Growth with Measured Risk

Balanced indices strike a middle ground between stability and growth potential.

These portfolios combine major cryptocurrencies with promising mid-cap projects that demonstrate strong technical momentum and fundamental strength.

The platform's AI identifies tokens showing positive divergence across multiple indicators—rising trading volume, improving developer activity, growing social sentiment, and strengthening technical patterns.

Balanced indices typically rebalance weekly or bi-weekly, capturing emerging trends while maintaining core positions in established assets.

Aggressive Growth Indices: Maximum Upside Potential

For investors comfortable with higher volatility in pursuit of substantial returns, aggressive growth indices target smaller-cap tokens with explosive potential.

These portfolios leverage Token Metrics' Trader Grade system to identify assets with strong short-term momentum and technical breakout patterns.

Aggressive indices may include DeFi protocols gaining traction, Layer-1 blockchains with innovative technology, AI tokens benefiting from market narratives, and memecoins showing viral adoption patterns.

While risk is higher, the potential for 10x, 50x, or even 100x returns makes these indices attractive for portfolio allocation strategies that embrace calculated risk.

Sector-Specific Indices: Thematic Investing

Token Metrics offers specialized indices targeting specific cryptocurrency sectors, allowing investors to align portfolios with their market convictions and thematic beliefs.

• DeFi Index: Focuses on decentralized finance protocols including lending platforms, decentralized exchanges, yield aggregators, and synthetic asset platforms.

• Layer-1 Index: Concentrates on base-layer blockchains competing with Ethereum, including Solana, Avalanche, Cardano, Polkadot, and emerging ecosystems.

• AI and Machine Learning Index: Targets tokens at the intersection of artificial intelligence and blockchain technology.

• Memecoin Index: Contrary to traditional wisdom dismissing memecoins as purely speculative, Token Metrics recognizes that community-driven tokens can generate extraordinary returns. This index uses AI to identify memecoins with genuine viral potential, active communities, and sustainable momentum before they become mainstream.

Success with Token Metrics indices requires more than simply choosing an index—it demands a comprehensive portfolio strategy tailored to your financial situation, goals, and risk tolerance.

Step 1: Assess Your Financial Profile

Begin by honestly evaluating your investment capacity, time horizon, and risk tolerance.

Ask yourself critical questions: How much capital can I allocate to crypto without compromising financial security? What is my investment timeline—months, years, or decades? How would I react emotionally to a 30% portfolio drawdown? What returns do I need to achieve my financial goals?

Your answers shape your portfolio construction.

Conservative investors with shorter timelines should emphasize stable indices, while younger investors with longer horizons can embrace more aggressive strategies.

Step 2: Determine Optimal Allocation Percentages

Financial advisors increasingly recommend including cryptocurrency in diversified portfolios, but the appropriate allocation varies significantly based on individual circumstances.

• Conservative Allocation (5-10% of portfolio): Suitable for investors approaching retirement or with low risk tolerance. Focus 80% on conservative indices, 15% on balanced indices, and 5% on sector-specific themes you understand deeply.

• Moderate Allocation (10-20% of portfolio): Appropriate for mid-career professionals building wealth. Allocate 50% to conservative indices, 30% to balanced indices, and 20% to aggressive growth or sector-specific indices.

• Aggressive Allocation (20-30%+ of portfolio): Reserved for younger investors with high risk tolerance and long time horizons. Consider 30% conservative indices for stability, 30% balanced indices for steady growth, and 40% split between aggressive growth and thematic sector indices.
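The three allocation tiers above translate into dollar amounts with simple arithmetic. The sketch below assumes a hypothetical helper, `split_crypto_allocation`, and made-up bucket labels; the mix percentages are the ones listed above, and the example portfolio size is arbitrary.

```python
# Mix percentages are taken from the three tiers above; bucket labels and the
# helper name are assumptions made for this illustration.
MIXES = {
    "conservative": {"conservative": 0.80, "balanced": 0.15, "sector": 0.05},
    "moderate": {"conservative": 0.50, "balanced": 0.30, "aggressive_or_sector": 0.20},
    "aggressive": {"conservative": 0.30, "balanced": 0.30, "aggressive_and_sector": 0.40},
}


def split_crypto_allocation(portfolio_value, crypto_fraction, mix):
    """Return dollars per index bucket for a given crypto share of the portfolio."""
    if not 0 <= crypto_fraction <= 1:
        raise ValueError("crypto_fraction must be between 0 and 1")
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix weights must sum to 1")
    crypto_budget = portfolio_value * crypto_fraction
    return {bucket: round(crypto_budget * weight, 2) for bucket, weight in mix.items()}


if __name__ == "__main__":
    # Arbitrary example: $100,000 portfolio, 10% in crypto, conservative mix.
    print(split_crypto_allocation(100_000, 0.10, MIXES["conservative"]))
```

For a $100,000 portfolio with 10% in crypto, the conservative mix works out to $8,000, $1,500, and $500 across the three buckets.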

Step 3: Implement Dollar-Cost Averaging

Rather than investing your entire allocation at once, implement a dollar-cost averaging strategy over 3-6 months.

This approach reduces timing risk and smooths out entry prices across market cycles.

For example, if allocating $10,000 to Token Metrics indices, invest $2,000 monthly over five months.

This strategy proves particularly valuable in volatile crypto markets where timing the perfect entry proves nearly impossible.
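As a rough sketch, the $10,000 example can be expressed as a schedule plus an average-cost calculation. The function names and the price series are hypothetical and exist only to show the arithmetic.

```python
def dca_schedule(total, months):
    """Split `total` into equal monthly installments; the last one absorbs rounding."""
    if months <= 0:
        raise ValueError("months must be positive")
    base = round(total / months, 2)
    schedule = [base] * (months - 1)
    schedule.append(round(total - base * (months - 1), 2))
    return schedule


def average_cost(installments, prices):
    """Average price paid per unit when each installment buys at that month's price."""
    if len(installments) != len(prices):
        raise ValueError("need one price per installment")
    units = sum(amount / price for amount, price in zip(installments, prices))
    return sum(installments) / units


if __name__ == "__main__":
    schedule = dca_schedule(10_000, 5)           # five installments of 2000.0
    hypothetical_prices = [100, 80, 125, 100, 100]  # made-up index unit prices
    print(schedule, round(average_cost(schedule, hypothetical_prices), 2))
```

Because fixed dollar amounts buy more units when prices are low, the average cost per unit comes out at or below the simple average of the purchase prices. That is the smoothing effect described above.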

Step 4: Set Up Automated Rebalancing

Token Metrics indices automatically rebalance based on AI analysis, but you should also establish personal portfolio rebalancing rules.

Review your overall allocation quarterly and rebalance if any index deviates more than 10 percentage points from your target allocation.

If aggressive growth indices perform exceptionally well and grow from 20% to 35% of your crypto portfolio, take profits and rebalance back to your target allocation.

This disciplined approach ensures you systematically lock in gains and maintain appropriate risk levels.
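A personal rebalancing rule like this can be sketched in a few lines. The helper name, the bucket labels, and the holdings are hypothetical. The 10% threshold is interpreted here as percentage points of portfolio weight, which is an assumption consistent with the 20% to 35% example.

```python
def rebalance_trades(holdings, targets, threshold=0.10):
    """Return dollar trades (+buy / -sell) that restore target weights.

    Returns an empty dict when no bucket has drifted more than `threshold`
    (in weight points, an assumed reading of the 10% rule) from its target.
    """
    total = sum(holdings.values())
    if total <= 0:
        raise ValueError("portfolio value must be positive")
    drift = {name: holdings[name] / total - targets[name] for name in targets}
    if all(abs(d) <= threshold for d in drift.values()):
        return {}
    return {name: round(targets[name] * total - holdings[name], 2) for name in targets}


if __name__ == "__main__":
    targets = {"conservative": 0.50, "balanced": 0.30, "aggressive": 0.20}
    # Hypothetical holdings in which the aggressive bucket has grown to 35%.
    holdings = {"conservative": 4200, "balanced": 2300, "aggressive": 3500}
    print(rebalance_trades(holdings, targets))
```

In this made-up case the aggressive bucket sits 15 points above target, so the rule triggers: sell $1,500 of the aggressive index and add $800 and $700 to the other two.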

Step 5: Monitor Performance and Adjust Strategy

While Token Metrics indices handle day-to-day portfolio management, you should conduct quarterly reviews assessing overall performance, comparing returns to benchmarks like Bitcoin and Ethereum, evaluating whether your risk tolerance has changed, and considering whether emerging market trends warrant allocation adjustments.

Use Token Metrics' comprehensive analytics to track performance metrics including total return, volatility, Sharpe ratio, maximum drawdown, and correlation to major cryptocurrencies.

These insights inform strategic decisions about continuing, increasing, or decreasing exposure to specific indices.
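For readers who want to check these numbers themselves, here is a minimal sketch of four of the metrics, computed from a series of portfolio values. It is not Token Metrics' implementation. The function names are illustrative, and the 365 periods per year (daily data, since crypto trades every day) and the zero risk-free rate are assumptions.

```python
import math
import statistics


def total_return(values):
    """Fractional gain from the first to the last portfolio value."""
    return values[-1] / values[0] - 1


def period_returns(values):
    """Simple return between each pair of consecutive values."""
    return [later / earlier - 1 for earlier, later in zip(values, values[1:])]


def annualized_volatility(returns, periods_per_year=365):
    """Sample standard deviation of returns, scaled to a yearly figure."""
    return statistics.stdev(returns) * math.sqrt(periods_per_year)


def sharpe_ratio(returns, risk_free_per_period=0.0, periods_per_year=365):
    """Mean excess return per unit of volatility, annualized."""
    excess = [r - risk_free_per_period for r in returns]
    spread = statistics.stdev(excess)
    if spread == 0:
        return float("nan")
    return statistics.mean(excess) / spread * math.sqrt(periods_per_year)


def max_drawdown(values):
    """Worst peak-to-trough decline, as a negative fraction."""
    peak, worst = values[0], 0.0
    for value in values:
        peak = max(peak, value)
        worst = min(worst, value / peak - 1)
    return worst


if __name__ == "__main__":
    hypothetical_values = [100, 110, 99, 120, 90, 105]  # made-up daily values
    print(total_return(hypothetical_values), max_drawdown(hypothetical_values))
```

In the made-up series, the total return is 5% even though the portfolio fell 25% from its peak of 120 to 90 along the way, which is why drawdown is worth tracking alongside return.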

Once comfortable with basic index investing, consider implementing advanced strategies to enhance returns and manage risk more effectively.

Tactical Overweighting

While maintaining core index allocations, temporarily overweight specific sectors experiencing favorable market conditions.

During periods of heightened interest in AI, increase allocation to the AI and Machine Learning Index by 5-10% at the expense of other sector indices.

Return to strategic allocation once the catalyst dissipates.

Combining Indices with Individual Tokens

Use Token Metrics indices for 70-80% of your crypto allocation while dedicating 20-30% to individual tokens identified through the platform's Moonshots feature.

This hybrid approach provides professional management while allowing you to pursue high-conviction opportunities.

Market Cycle Positioning

Adjust index allocations based on broader market cycles.

During bull markets, increase exposure to aggressive growth indices.

As conditions turn bearish, shift toward conservative indices with strong fundamentals.

Token Metrics' AI Indicator provides valuable signals for market positioning.

Even with sophisticated AI-driven indices, cryptocurrency investing carries substantial risks.

Implement robust risk management practices to protect your wealth.

Diversification Beyond Crypto

Never allocate so much to cryptocurrency that a market crash would devastate your financial position.

Most financial advisors recommend limiting crypto exposure to 5-30% of investment portfolios depending on age and risk tolerance.

Maintain substantial allocations to traditional assets—stocks, bonds, real estate—that provide diversification and stability.

Position Sizing and Security

Consider implementing portfolio-level stop-losses if your crypto allocation declines significantly from its peak.

Use hardware wallets or secure custody solutions for significant holdings.

Implement strong security practices including two-factor authentication and unique passwords.

Tax Optimization

Cryptocurrency taxation typically involves capital gains taxes on profits.

Consult tax professionals to optimize your strategy through tax-loss harvesting and strategic rebalancing timing.

Token Metrics' transaction tracking helps maintain accurate records for tax reporting.

Several factors distinguish Token Metrics indices from alternatives and explain their consistent outperformance.

Token Metrics indices respond to market changes in real-time rather than waiting for scheduled monthly or quarterly rebalancing.

This responsiveness proves crucial in crypto markets where opportunities can appear and disappear rapidly.

The platform's AI evaluates dozens of factors simultaneously—technical patterns, fundamental strength, on-chain metrics, sentiment analysis, and exchange dynamics.

This comprehensive approach identifies tokens that traditional indices would miss.

The AI continuously learns from outcomes, improving predictive accuracy over time.

Models that underperform receive reduced weighting while successful approaches gain influence, creating an evolving system that adapts to changing market dynamics.

Token Metrics' extensive coverage of 6,000+ tokens provides exposure to emerging projects before they gain mainstream attention, positioning investors for maximum appreciation potential.

To illustrate practical application, consider several investor profiles and optimal index strategies.

Profile 1: Conservative 55-Year-Old Preparing for Retirement

Total portfolio: $500,000

Crypto allocation: $25,000 (5%)

Strategy: $20,000 in Investor Grade Index (80%), $4,000 in Balanced Index (16%), $1,000 in DeFi Index (4%)

This conservative approach provides crypto exposure with minimal volatility, focusing on established assets likely to appreciate steadily without risking retirement security.

Profile 2: Moderate 35-Year-Old Building Wealth

Total portfolio: $150,000

Crypto allocation: $30,000 (20%)

Strategy: $12,000 in Investor Grade Index (40%), $9,000 in Balanced Index (30%), $6,000 in Layer-1 Index (20%), $3,000 in Aggressive Growth Index (10%)

This balanced approach captures crypto growth potential while maintaining stability through substantial conservative and balanced allocations.

Profile 3: Aggressive 25-Year-Old Maximizing Returns

Total portfolio: $50,000

Crypto allocation: $15,000 (30%)

Strategy: $4,500 in Investor Grade Index (30%), $3,000 in Balanced Index (20%), $4,500 in Aggressive Growth Index (30%), $3,000 in Memecoin Index (20%)

This aggressive strategy embraces volatility and maximum growth potential, appropriate for younger investors with decades to recover from potential downturns.

Ready to begin building wealth with Token Metrics indices?

Follow this action plan:

• Week 1-2: Sign up for Token Metrics' 7-day free trial and explore available indices, historical performance, and educational resources. Define your investment goals, risk tolerance, and allocation strategy using the frameworks outlined in this guide.

• Week 3-4: Open necessary exchange accounts and wallets. Fund accounts and begin implementing your strategy through dollar-cost averaging. Set up tracking systems and calendar reminders for quarterly reviews.

• Ongoing: Follow Token Metrics' index recommendations, execute rebalancing transactions as suggested, monitor performance quarterly, and adjust strategy as your financial situation evolves.

Cryptocurrency represents one of the most significant wealth-building opportunities in modern financial history, but capturing this potential requires sophisticated approaches that most individual investors cannot implement alone.

Token Metrics AI Indices democratize access to professional-grade investment strategies, leveraging cutting-edge machine learning, comprehensive market analysis, and real-time responsiveness to build winning portfolios.

Whether you're a conservative investor seeking measured exposure or an aggressive trader pursuing maximum returns, Token Metrics provides indices tailored to your specific needs.

The choice between random coin picking and strategic, AI-driven index investing is clear.

One approach relies on luck and guesswork; the other harnesses data, technology, and proven methodologies to systematically build wealth while managing risk.

Your journey to crypto investment success begins with a single decision: commit to a professional, strategic approach rather than speculative gambling.

Token Metrics provides the tools, insights, and management to transform crypto investing from a game of chance into a calculated path toward financial freedom.

Start your 7-day free trial today and discover how AI-powered indices can accelerate your wealth-building journey.

The future of finance is decentralized, intelligent, and accessible—make sure you're positioned to benefit.

Token Metrics stands out as a leader in AI-driven crypto index solutions.

With over 6,000 tokens analyzed daily and indices tailored to every risk profile, the platform provides unparalleled analytics, real-time rebalancing, and comprehensive investor education.

Its commitment to innovation and transparency makes it a trusted partner for building your crypto investment strategy in today's fast-evolving landscape.

Token Metrics indices use advanced AI models to analyze technical, fundamental, on-chain, and sentiment data across thousands of cryptocurrencies.

They construct balanced portfolios that are automatically rebalanced in real-time to adapt to evolving market conditions and trends.

There are conservative, balanced, aggressive growth, and sector-specific indices including DeFi, Layer-1, AI, and memecoins.

Each index is designed for a different investment objective, risk tolerance, and market outlook.

No mandatory minimum is outlined for using Token Metrics indices recommendations.

You can adapt your allocation based on your personal investment strategy, capacity, and goals.

Token Metrics indices are rebalanced automatically based on dynamic AI analysis, but it is recommended to review your overall crypto allocation at least quarterly to ensure alignment with your targets.

Token Metrics provides analytics and index recommendations; investors maintain custody of their funds and should implement robust security practices such as hardware wallets and two-factor authentication.

No investing approach, including AI-driven indices, can guarantee profits.

The goal is to maximize risk-adjusted returns through advanced analytics and professional portfolio management, but losses remain possible due to the volatile nature of crypto markets.

This article is for educational and informational purposes only.

It does not constitute financial, investment, or tax advice.

Cryptocurrency investing carries risk, and past performance does not guarantee future results. Always consult your own advisor before making investment decisions.


In today’s digital world, our identities define how we interact online, from accessing services to proving who we are. However, traditional identity management systems often place control of your personal information in the hands of centralized authorities, such as governments, corporations, or social media platforms. This centralized control exposes users to risks like data breaches, identity theft, and loss of privacy. Enter Self-Sovereign Identity (SSI), a revolutionary digital identity model aligned with the core principles of Web3: decentralization, user empowerment, and true digital ownership. Understanding what self-sovereign identity means in Web3 is essential in 2025 for anyone who wants to take full control of their digital identity and navigate the decentralized future safely and securely.

At its core, self-sovereign identity is a digital identity model that enables individuals to own, manage, and control their identity data without relying on any central authority. Unlike traditional identity systems, where identity data is stored and controlled by centralized servers or platforms such as social media companies or government databases, SSI empowers users to become the sole custodians of their digital identity.

The self-sovereign identity model allows users to securely store their identity information, including identity documents like a driver’s license or bank account details, in a personal digital wallet app. This wallet acts as a self-sovereign identity wallet, enabling users to selectively share parts of their identity information with others through verifiable credentials. These credentials are cryptographically signed by trusted issuers, making them tamper-evident and instantly verifiable by any verifier without needing to contact the issuer directly.

This approach means users have full control over their identity information, deciding exactly what data to share, with whom, and for how long. By allowing users to manage their digital identities independently, SSI eliminates the need for centralized authorities and reduces the risk of data breaches and unauthorized access to sensitive information.

The emergence of Web3—a decentralized internet powered by blockchain and peer-to-peer networks—has brought new challenges and opportunities for digital identity management. Traditional login methods relying on centralized platforms like Google or Facebook often result in users surrendering control over their personal data, which is stored on centralized servers vulnerable to hacks and misuse.

In contrast, Web3 promotes decentralized identity, where users own and control their digital credentials without intermediaries. The question of what self-sovereign identity is in Web3 becomes especially relevant because SSI is the key to realizing this vision of a user-centric, privacy-respecting digital identity model.

By 2025, businesses and developers are urged to adopt self-sovereign identity systems to thrive in the Web3 ecosystem. These systems leverage blockchain technology and decentralized networks to create a secure, transparent, and user-controlled identity infrastructure, fundamentally different from centralized identity systems and traditional identity management systems.

SSI’s robust framework is built on three essential components that work together to create a secure and decentralized identity ecosystem:

Blockchain serves as a distributed database or ledger that records information in a peer-to-peer network without relying on a central database or centralized servers. This decentralized nature makes blockchain an ideal backbone for SSI, as it ensures data security, immutability, and transparency.

By storing digital identifiers and proofs on a blockchain, SSI systems can verify identity data without exposing the actual data or compromising user privacy. This eliminates the vulnerabilities associated with centralized platforms and frequent data breaches seen in traditional identity systems.

A Decentralized Identifier (DID) is a new kind of globally unique digital identifier that users fully control. Unlike traditional identifiers such as usernames or email addresses, which depend on centralized authorities, DIDs are registered on decentralized networks like blockchains.

DIDs give users control over their identity by letting them create and manage identifiers without relying on a central authority. This means users can establish secure connections and authenticate themselves directly, enhancing data privacy and reducing reliance on centralized identity providers.
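To make the identifier format concrete, here is a minimal sketch of splitting a DID string into its parts. It assumes the generic `did:<method>:<method-specific-id>` shape; the regular expression is a simplification for illustration rather than the full W3C grammar, and `parse_did` and `ParsedDid` are hypothetical names.

```python
import re
from typing import NamedTuple

# Simplified pattern for the generic shape "did:<method>:<method-specific-id>".
# Assumption: this covers common cases only, not the complete DID grammar.
DID_PATTERN = re.compile(r"^did:([a-z0-9]+):([A-Za-z0-9._:%-]+)$")


class ParsedDid(NamedTuple):
    method: str       # the DID method, which tells a resolver where to look
    identifier: str   # the method-specific identifier


def parse_did(did: str) -> ParsedDid:
    """Split a DID string into its method and method-specific identifier."""
    match = DID_PATTERN.match(did)
    if match is None:
        raise ValueError(f"not a well-formed DID: {did!r}")
    return ParsedDid(method=match.group(1), identifier=match.group(2))


parsed = parse_did("did:example:123456789abcdefghi")
print(parsed.method, parsed.identifier)
```

Unlike an email address, nothing in the string names a provider that owns the account; the method only indicates how the identifier is resolved.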

Verifiable Credentials are cryptographically secure digital documents that prove certain attributes about an individual, organization, or asset. Issued by trusted parties, these credentials can represent anything from a university diploma to a government-issued driver’s license.

VCs are designed to be tamper-proof and easily verifiable without contacting the issuer, thanks to blockchain and cryptographic signatures. This ensures enhanced security and trustworthiness in digital identity verification processes, while allowing users to share only the necessary information through selective disclosure.

The operation of SSI revolves around a trust triangle involving three key participants:

When a verifier requests identity information, the holder uses their self-sovereign identity wallet to decide which credentials to share, ensuring full control and privacy. This interaction eliminates the need for centralized intermediaries and reduces the risk of identity theft.

As SSI platforms gain traction, understanding their underlying token economies and security is critical for investors and developers. Token Metrics is a leading analytics platform that provides deep insights into identity-focused projects within the Web3 ecosystem.

By analyzing identity tokens used for governance and utility in SSI systems, Token Metrics helps users evaluate project sustainability, security, and adoption potential. This is crucial given the rapid growth of the digital identity market, projected to reach over $30 billion by 2025.

Token Metrics offers comprehensive evaluations, risk assessments, and performance tracking, empowering stakeholders to make informed decisions in the evolving landscape of self-sovereign identity blockchain projects.

SSI streamlines Know Your Customer (KYC) processes by enabling users to reuse verifiable credentials issued by one institution across multiple services. This reduces redundancy and accelerates onboarding, while significantly lowering identity fraud, which currently costs billions annually.

SSI enhances the authenticity and privacy of medical records, educational certificates, and professional licenses. Universities can issue digital diplomas as VCs, simplifying verification and reducing fraud.

By assigning DIDs to products and issuing VCs, SSI improves product provenance and combats counterfeiting. Consumers gain verifiable assurance of ethical sourcing and authenticity.

SSI allows users to prove ownership of NFTs and other digital assets without exposing their entire wallet, adding a layer of privacy and security to digital asset management.

SSI enables users to share only specific attributes of their credentials. For example, proving age without revealing a full birthdate helps protect sensitive personal information during verification.
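One way to picture selective disclosure is with salted hashes: the issuer commits to every claim separately, and the holder later reveals only the claims (and salts) they choose. The sketch below is an illustration under that assumption, not the mechanism of any particular SSI product. The function names are hypothetical, and the issuer's signature over the digest set is deliberately omitted.

```python
import hashlib
import json
import secrets


def commit(name: str, value, salt: str) -> str:
    """Salted SHA-256 digest of a single claim."""
    payload = json.dumps([salt, name, value], separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def issue(claims: dict) -> tuple[dict, set]:
    """Issuer side: give each claim a random salt and publish only the digests.

    A real credential would also carry the issuer's signature over the
    digest set; signing is left out of this sketch.
    """
    salts = {name: secrets.token_hex(16) for name in claims}
    digests = {commit(name, value, salts[name]) for name, value in claims.items()}
    return salts, digests


def disclose(claims: dict, salts: dict, names: list) -> list:
    """Holder side: reveal only the chosen claims together with their salts."""
    return [(name, claims[name], salts[name]) for name in names]


def verify(disclosed: list, digests: set) -> bool:
    """Verifier side: each revealed claim must hash to a published digest."""
    return all(commit(name, value, salt) in digests for name, value, salt in disclosed)


claims = {"name": "Alice Example", "birthdate": "1990-04-12", "over_18": True}
salts, digests = issue(claims)

shared = disclose(claims, salts, ["over_18"])  # name and birthdate stay private
print(verify(shared, digests))
```

The random salts matter: without them, a verifier could guess low-entropy values such as a birthdate and confirm the guess against the published digests.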

Zero-knowledge proofs (ZKPs) allow users to prove statements about their identity without revealing the underlying data. For instance, a user can prove they are over 18 without sharing their exact birthdate, enhancing privacy and security in digital interactions.

Several initiatives showcase the practical adoption of SSI:

These projects highlight SSI’s potential to transform identity management globally.

Managing private keys is critical; losing a private key can mean losing access to one’s identity. Solutions like multi-signature wallets and biometric authentication are being developed to address this.

Global regulations, including the General Data Protection Regulation (GDPR) and emerging frameworks like Europe’s eIDAS 2.0, are shaping SSI adoption. Ensuring compliance while maintaining decentralization is a key challenge.

Despite the promise, some critics argue the term "self-sovereign" is misleading because issuers and infrastructure still play roles. Improving user experience and educating the public are essential for widespread adoption.

By 2025, self-sovereign identity systems will be vital for secure, private, and user-centric digital interactions. Key trends shaping SSI’s future include:

Businesses aiming to adopt SSI should:

Self-Sovereign Identity is more than a technological innovation; it represents a fundamental shift towards digital sovereignty—where individuals truly own and control their online identities. As Web3 reshapes the internet, SSI offers a secure, private, and user-centric alternative to centralized identity systems that have long dominated the digital world.

For professionals, investors, and developers, understanding what self-sovereign identity is in Web3 and leveraging platforms like Token Metrics is crucial to navigating this transformative landscape. The journey toward a decentralized, privacy-respecting digital identity model has begun, and those who embrace SSI today will lead the way in tomorrow’s equitable digital world.


In the rapidly evolving cryptocurrency landscape, one concept has emerged as the critical differentiator between project success and failure: tokenomics. Far more than a trendy buzzword, tokenomics represents the economic backbone that determines whether a crypto project will thrive or collapse. As we navigate through 2025, understanding tokenomics has become essential for investors, developers, and anyone serious about participating in the digital asset ecosystem. This article explores what tokenomics is and how it impacts crypto projects, providing a comprehensive guide to its key components, mechanisms, and real-world implications.

Tokenomics is a fusion of “token” and “economics,” referring to the economic principles and mechanisms that govern a digital token or cryptocurrency within a blockchain project. Its key elements (supply models, distribution mechanisms, utility, and governance) all influence the value and stability of a cryptocurrency. Simply put, tokenomics is the study of the economic design of blockchain projects, focusing on how digital tokens operate within an ecosystem.

A well-crafted tokenomics model is crucial for any crypto project because it drives user adoption, incentivizes desired behavior, and fosters a sustainable and thriving ecosystem. By defining the token supply, token utility, governance rights, and economic incentives, tokenomics shapes how a digital asset interacts with its community, influences user behavior, and ultimately impacts the token’s value and longevity.

The tokenomics landscape in 2025 has matured significantly compared to earlier years. Initially, tokenomics was often limited to simple concepts like fixed maximum supply or token burns. Today, it represents a sophisticated economic architecture that governs value flow, community interaction, and project sustainability.

Several key developments characterize tokenomics in 2025:

This evolution means that understanding what tokenomics is and how it impacts crypto projects now requires a nuanced grasp of multiple economic mechanisms, community dynamics, and regulatory considerations.

A fundamental aspect of tokenomics is the token supply, which directly influences scarcity and price dynamics. Two metrics matter most: total supply, the number of tokens that exist (including those locked, reserved, or not yet circulating), and circulating supply, the number of tokens currently available in the market and held by the public.

The relationship between these supplies affects the token price and market capitalization. For example, a large difference between the token's total supply and circulating supply might indicate tokens locked for future use or held by insiders.
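The arithmetic behind this relationship is simple enough to sketch. The figures below are made up for illustration, and valuing the token at its total supply is one common convention (some trackers use maximum supply instead).

```python
def market_cap(price: float, circulating_supply: float) -> float:
    """Market capitalization: price times the tokens actually in circulation."""
    return price * circulating_supply


def valuation_at_total_supply(price: float, total_supply: float) -> float:
    """What the project would be worth if every existing token traded at this price."""
    return price * total_supply


def circulating_ratio(circulating_supply: float, total_supply: float) -> float:
    """Share of existing tokens that are already in the market."""
    return circulating_supply / total_supply


# Hypothetical token: $2.00 price, 250M circulating, 1B total.
price = 2.0
circulating = 250_000_000
total = 1_000_000_000

print(f"market cap:             ${market_cap(price, circulating):,.0f}")
print(f"valued at total supply: ${valuation_at_total_supply(price, total):,.0f}")
print(f"circulating ratio:      {circulating_ratio(circulating, total):.0%}")
```

A low circulating ratio means most tokens have yet to reach the market, so it is worth checking who holds them and when they unlock.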

Token supply models generally fall into three categories:

How tokens are distributed among stakeholders significantly impacts project fairness and community trust. Token distribution involves allocating tokens to the team, early investors, advisors, the community, and reserves. A transparent and equitable distribution encourages community engagement, prevents disproportionate control by a few entities, and incentivizes broad network participation.

Typical token allocation structures include:

Ensuring a fair distribution mitigates risks of price manipulation and aligns incentives between token holders and project success. A transparent issuance process is also crucial for building trust and ensuring the long-term sustainability of the project.

The utility of a token is a core driver of its demand, market value, and long-term sustainability, which makes it essential for both investors and project success. Tokens with clear, real-world use cases tend to sustain long-term interest and adoption. Common types of token utility include:

Tokens that enable holders to pay transaction fees, participate in governance, or earn staking rewards incentivize active involvement and network security. Additionally, the blockchain or environment in which a token operates can significantly influence its adoption and overall utility.

In the diverse world of cryptocurrency, not all tokens are created equal. Understanding the different types of tokens is a key component of tokenomics and can help investors and users navigate the rapidly evolving digital asset landscape. Each token type serves a distinct purpose within its ecosystem, shaping how value is transferred, how decisions are made, and how users interact with decentralized platforms.

By understanding the roles of utility tokens, security tokens, governance tokens, and non-fungible tokens, participants can better assess a crypto project’s tokenomics and its potential for long-term success in the digital economy.

The economic model underlying a token’s supply and distribution is a key factor in determining its value, price stability, and long-term viability. Tokenomics models are designed to manage how many tokens exist, how they are distributed, and how their supply changes over time. The three primary approaches—inflationary, deflationary, and hybrid—each have unique implications for token price, token value, and market dynamics.

Choosing the right tokenomics model is crucial for any crypto project, as it directly impacts token distribution, market cap, and the ability to create scarcity or manage inflationary pressures. A well-designed model aligns incentives, supports healthy supply and demand dynamics, and fosters long-term success.
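A toy projection shows how the three approaches diverge over time. This is a sketch under simplifying assumptions: a constant annual issuance rate and a constant annual burn rate, both hypothetical, whereas real protocols usually tie issuance and burns to network activity.

```python
def project_supply(initial: float, years: int,
                   issuance_rate: float = 0.0, burn_rate: float = 0.0) -> list[float]:
    """Year-end supply when a fixed share is minted and/or burned each year."""
    supply = [initial]
    for _ in range(years):
        current = supply[-1]
        minted = current * issuance_rate   # new tokens issued this year
        burned = current * burn_rate       # tokens destroyed this year
        supply.append(current + minted - burned)
    return supply


start = 1_000_000_000.0
inflationary = project_supply(start, 5, issuance_rate=0.05)
deflationary = project_supply(start, 5, burn_rate=0.02)
hybrid = project_supply(start, 5, issuance_rate=0.05, burn_rate=0.02)

for label, path in [("inflationary", inflationary),
                    ("deflationary", deflationary),
                    ("hybrid", hybrid)]:
    print(f"{label:>12}: {path[-1]:,.0f} tokens after 5 years")
```

The hybrid path sits between the other two, which is the point of the design: issuance funds incentives while burns offset part of the dilution.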

Given the complexity of crypto tokenomics, making informed decisions requires sophisticated analytical tools. Token Metrics is a leading platform that offers in-depth insights into tokenomics fundamentals for over 6,000 cryptocurrencies. It helps users understand the factors influencing token demand and market performance.

Token Metrics provides comprehensive analysis of token supply dynamics, distribution patterns, and utility mechanisms. Its AI-powered system distinguishes between robust economic models and those prone to failure, helping investors avoid pitfalls like pump-and-dump schemes or poorly designed tokens.

Real-time performance tracking links tokenomics features to market outcomes, offering both short-term Trader Grades and long-term Investor Grades. By integrating technical, on-chain, fundamental, social, and exchange data, Token Metrics delivers a holistic view of how tokenomics influence a token’s market performance. Additionally, Token Metrics provides insights into how tokenomics features impact the token's price over time, helping users understand the relationship between economic design and valuation.

Using Token Metrics, users can identify projects with sustainable tokenomics, assess risks such as excessive team allocations or unsustainable inflation, and make investment decisions grounded in economic fundamentals rather than hype. This platform is invaluable for navigating the intricate interplay of supply and demand characteristics, governance structures, and token incentives.

Token burns involve permanently removing tokens from circulation by sending them to an inaccessible address. This deflationary tactic makes the remaining tokens scarcer, potentially increasing a token’s value. Modern burn mechanisms include:

By reducing supply through burns, the deflationary effect can positively impact the token's value by making each remaining token more desirable to users and investors.

Examples include Binance Coin’s quarterly burns and Ethereum’s EIP-1559, which burns the base-fee portion of every transaction fee, so more ETH is removed from supply during periods of high network activity.
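As a rough sketch of the EIP-1559 fee split: the base fee paid on each unit of gas is burned, while the priority fee (tip) goes to the block producer. The gas and fee numbers below are hypothetical, and the function takes the effective tip per gas as an input rather than deriving it from a transaction's fee caps.

```python
GWEI = 10**9     # wei per gwei
ETHER = 10**18   # wei per ether


def split_fee(gas_used: int, base_fee_gwei: int, priority_fee_gwei: int) -> tuple[int, int]:
    """Return (burned_wei, tip_wei) for one transaction.

    Integer math in wei avoids floating-point rounding.
    """
    burned = gas_used * base_fee_gwei * GWEI    # destroyed, leaves the supply
    tip = gas_used * priority_fee_gwei * GWEI   # paid to the block producer
    return burned, tip


# Hypothetical simple transfer: 21,000 gas, 30 gwei base fee, 2 gwei tip.
burned, tip = split_fee(21_000, 30, 2)
print(f"burned: {burned} wei ({burned / ETHER} ETH)")
print(f"tip:    {tip} wei ({tip / ETHER} ETH)")
```

Because the base fee rises when blocks are full, the amount burned per transaction grows with network demand.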

Staking is a powerful tool for enhancing network security and incentivizing user participation. When tokens are staked, they are locked, reducing the supply that is freely tradable and potentially supporting price appreciation and network stability. In proof-of-stake networks, staked tokens are used to validate transactions, helping to maintain the integrity and security of the blockchain. They may also confer governance rights, empowering committed token holders to influence the project.
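The two effects described here, less liquid supply and rewards for stakers, can be sketched in a few lines. The balances and reward pool are invented, and pro-rata distribution is just one simple reward rule; actual protocols differ in how they calculate and pay rewards.

```python
def liquid_supply(circulating: int, staked: int) -> int:
    """Tokens still free to trade once staked tokens are locked."""
    return circulating - staked


def distribute_rewards(stakes: dict[str, int], reward_pool: int) -> dict[str, int]:
    """Split a reward pool among stakers in proportion to their stake.

    Integer division rounds each share down, so a few units may stay undistributed.
    """
    total_staked = sum(stakes.values())
    if total_staked == 0:
        return {name: 0 for name in stakes}
    return {name: reward_pool * amount // total_staked
            for name, amount in stakes.items()}


# Hypothetical stakers and a 50-token reward pool for one period.
stakes = {"alice": 600, "bob": 300, "carol": 100}
rewards = distribute_rewards(stakes, reward_pool=50)

print(liquid_supply(circulating=10_000, staked=sum(stakes.values())))
print(rewards)
```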

Yield farming is another DeFi strategy that incentivizes users to provide liquidity and earn rewards by moving tokens between protocols with the highest APY, supporting network liquidity and resilience.

Innovations in staking for 2025 include:

These models align economic incentives with network health and user engagement.

Decentralized Autonomous Organizations (DAOs) rely on governance tokens to distribute decision-making power among community members. Token holders can vote on protocol upgrades, treasury spending, and other key issues, ensuring projects remain adaptable and community-driven.
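A token-weighted vote can be sketched as follows. The 20% quorum, the simple-majority rule, and the balances are all assumptions chosen for illustration; each DAO defines its own thresholds and voting mechanics.

```python
def tally(balances: dict[str, int], votes: dict[str, bool],
          total_supply: int, quorum: float = 0.20) -> str:
    """Count a yes/no proposal where each token is one vote.

    Returns "no quorum" when turnout is below the quorum share of total
    supply, otherwise "passed" or "rejected" (ties are rejected).
    """
    yes = sum(balances[voter] for voter, choice in votes.items() if choice)
    no = sum(balances[voter] for voter, choice in votes.items() if not choice)
    turnout = (yes + no) / total_supply
    if turnout < quorum:
        return "no quorum"
    return "passed" if yes > no else "rejected"


# Hypothetical governance-token balances.
balances = {"alice": 500, "bob": 300, "carol": 200}

print(tally(balances, {"alice": True, "bob": False}, total_supply=1_000))
print(tally(balances, {"carol": True}, total_supply=10_000))
```

Weighting by balance makes the link to distribution explicit: if a few wallets hold most tokens, they also hold most of the votes.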

Effective governance structures promote transparency, decentralization, and alignment of incentives, which are critical for long-term success in decentralized finance (DeFi) and beyond.

The integrity of a crypto project’s tokenomics relies heavily on robust network security and the effective use of smart contracts. These elements are foundational to protecting the key components of tokenomics, including token supply, token utility, governance tokens, and token distribution.

Smart contracts are self-executing agreements coded directly onto the blockchain, automating critical processes such as token issuance, token allocations, and token burns. By removing the need for intermediaries, smart contracts ensure that tokenomics mechanisms—like distributing staking rewards or executing governance decisions—are transparent, reliable, and tamper-proof.

Network security is equally vital, as it safeguards the blockchain against attacks and ensures the validity of transactions. Secure consensus mechanisms, such as proof of stake or proof of work, play a key role in validating transactions and maintaining the network’s security. This, in turn, protects the token’s supply and the value of digital assets within the ecosystem.

By combining strong network security with well-audited smart contracts, projects can protect their tokenomics from vulnerabilities and malicious actors. This not only preserves the integrity of key components like token burns, token allocations, and token price, but also builds trust among token holders and supports the project’s long-term success.

In summary, understanding how network security and smart contracts underpin the key components of tokenomics is essential for anyone evaluating a crypto project’s potential. These safeguards ensure that the economic model operates as intended, supporting sustainable growth and resilience in the ever-changing world of digital assets.

These cases underscore the importance of sound tokenomics for project viability.

In 2025, regulatory compliance is a core consideration in tokenomics design. Projects that demonstrate transparent, community-governed models gain legal clarity and market trust.

Innovative projects increasingly combine multiple tokenomic mechanisms—such as burning part of transaction fees, staking for rewards, and soft rebasing—to maintain balance and incentivize participation.

Tokenomics now extends to the tokenization of physical assets, creating new economic models that blend traditional finance with blockchain technology, expanding the utility and reach of digital tokens.

When assessing a project’s tokenomics, consider these key questions:

Avoid red flags such as excessive team allocations without vesting, tokens lacking utility, unsustainable economic models, or poor transparency.
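Vesting is what separates a locked team allocation from one that can be sold on day one. Below is a minimal sketch of a linear schedule with a cliff; the 12-month cliff and 48-month duration are hypothetical defaults, not a standard.

```python
def vested_amount(total_allocation: int, months_elapsed: int,
                  cliff_months: int = 12, vesting_months: int = 48) -> int:
    """Tokens unlocked so far under linear vesting with a cliff.

    Nothing unlocks before the cliff; at the cliff the accrued share unlocks
    at once, then the rest unlocks linearly until the schedule ends.
    """
    if months_elapsed < cliff_months:
        return 0
    if months_elapsed >= vesting_months:
        return total_allocation
    return total_allocation * months_elapsed // vesting_months


team_allocation = 4_800_000  # hypothetical team allocation
for month in (6, 12, 24, 48):
    print(f"month {month:>2}: {vested_amount(team_allocation, month):,} tokens unlocked")
```

An allocation with no schedule behaves like `cliff_months=0, vesting_months=0`: everything is liquid immediately, which is the red flag described above.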

Understanding tokenomics requires more than reading whitepapers; it demands sophisticated analysis of the economic incentives, game theory, and supply and demand dynamics that govern a cryptocurrency token. Platforms like Token Metrics leverage AI to detect patterns and provide insights that individual investors might overlook, making them essential tools for navigating the complex world of crypto tokenomics.

In 2025, tokenomics has evolved from a peripheral consideration to the strategic foundation upon which successful crypto projects are built. Good tokenomics fosters trust, encourages adoption, and sustains value by aligning incentives, creating scarcity, and enabling governance. Conversely, flawed tokenomics can lead to inflation, centralization, and project failure.

For investors, developers, and enthusiasts, understanding what tokenomics is and how it impacts crypto projects is no longer optional; it is essential. The projects that thrive will be those that thoughtfully design their economic models to balance supply and demand, incentivize user behavior, and adapt to regulatory and market changes.

As the crypto ecosystem continues to mature, tokenomics will remain the key factor determining which projects create lasting value and which fade into obscurity. By leveraging professional tools and adopting best practices, participants can better navigate this dynamic landscape and contribute to the future of decentralized finance and digital assets.


The question “Is Web3 just a buzzword or is it real?” reverberates across tech conferences and social media, not least in the Twitter bios of those who want to signal they are 'in the know' about the future of the internet and decentralized platforms. As we navigate through 2025, the debate about whether Web3 represents a true revolution in the internet or merely another marketing buzzword has intensified. Advocates tout it as the next internet built on decentralization and user empowerment, while skeptics dismiss it as a vapid marketing campaign fueled by hype and venture capitalists. The truth, as with many technological paradigm shifts, lies somewhere between these extremes.

Web3, also known as Web 3.0, is envisioned as the next generation of the internet, built on blockchain technology and decentralized protocols. The early web (Web1) was characterized by static pages and read-only content, and Web 2.0 was dominated by interactive platforms controlled by big tech companies; Web3 promises a new paradigm where users can read, write, and own their digital interactions. Web1 was primarily about connecting people through basic online platforms, while Web 2.0 expanded on this by enabling greater collaboration and interaction among individuals. Web3 represents a fundamental shift from centralized servers and platforms toward a user-controlled internet. The current internet faces challenges such as centralization and data privacy concerns, which Web3 aims to address through decentralization and user empowerment.

The term “web3” was first coined by Gavin Wood, co-founder of Ethereum and founder of Polkadot, in 2014 to describe a decentralized online ecosystem based on blockchain technology. Interest in Web3 surged toward the end of 2021, driven largely by crypto enthusiasts, venture capital types, and companies eager to pioneer token-based economics and decentralized applications. At its core, Web3 challenges the legacy tech company hegemony by redistributing power from centralized intermediaries to users collectively, promising digital ownership and governance rights through decentralized autonomous organizations (DAOs) and smart contracts.

Despite the public’s negative associations with hype and marketing buzzwords, Web3 has demonstrated real value in several key areas by 2025.

Advocates of Web3 often refer to it as the 'promised future internet,' envisioning a revolutionary shift that addresses issues like centralization and privacy.

As the next phase of the internet's evolution, Web3 is beginning to show tangible impact beyond its initial hype.

Decentralized finance (DeFi) stands out as one of the most mature and actively implemented sectors proving that Web3 is more than just a buzzword. DeFi platforms enable users worldwide to lend, borrow, trade, and invest without relying on centralized intermediaries like banks. These platforms operate 24/7, breaking down barriers imposed by geography and time zones. DeFi empowers users to control their own money, eliminating the need for traditional banks and giving individuals direct access to their digital assets.

Millions of users now engage with DeFi protocols daily, and traditional financial institutions have begun adopting tokenized assets, bridging the gap between legacy finance and decentralized finance. By participating in these systems, users can accrue real value and tangible benefits, earning rewards and profits through blockchain-based activities. This integration signals a shift towards a more inclusive financial system, powered by blockchain technology and crypto assets.

Web3’s impact extends beyond Bitcoin, other cryptocurrencies, and JPEG non-fungible tokens (NFTs) to a wide range of tokenized assets. Real-world asset tokenization is redefining how we perceive ownership and liquidity. Assets such as real estate, carbon credits, and even U.S. Treasury bonds are being digitized and traded on blockchain platforms, enhancing transparency and accessibility.

For instance, Ondo Finance tokenizes U.S. government bonds, while Mattereum offers asset-backed tokens with legal contracts, ensuring enforceable ownership rights. Agricultural tracking systems in Abu Dhabi collaborate with nearly 1,000 farmers to tokenize produce and supply chain data, illustrating practical applications of tokenization in diverse industries.

The Web3 ecosystem has experienced unprecedented growth, with over 3,200 startups and 17,000 companies actively operating in the space as of 2025. This rapid expansion, supported by more than 2,300 investors and nearly 9,800 successful funding rounds, reflects a robust market eager to explore blockchain’s potential. The underlying infrastructure of blockchain technology is fundamental to this growth, enabling decentralization, enhanced security, and privacy across the internet.

Major industries—including finance, healthcare, supply chain, and entertainment—are integrating blockchain technology to enhance security, transparency, and efficiency. Enterprises are deploying decentralized applications and smart contracts to manage digital assets, identity verification, and transactional data, moving beyond speculative use cases to practical, scalable solutions. Web3 aims to deliver improved, interoperable service experiences across digital platforms, creating seamless and user-centric online services.

A core promise of Web3 is empowering users with control over their data and digital assets. Decentralized platforms host data across distributed networks, allowing users to maintain greater control and privacy over their information. Unlike Web2 platforms that monetize user information through centralized servers and walled gardens, decentralized social networks and user-controlled internet services give individuals ownership and governance over their data. This shift addresses growing concerns about privacy, censorship, and data exploitation, enabling users to monetize their digital presence directly.

While Web3 has made impressive strides, it is not without significant challenges that temper the hype. Some critics argue that Web3 is a false narrative designed to reframe public perception without delivering real benefits.

Scalability issues remain a critical hurdle. Ethereum, the most widely used Web3 platform, continues to face slow transaction speeds and prohibitively high gas fees during peak demand, sometimes exceeding $20 per transaction. This inefficiency limits the average person's ability to engage seamlessly with decentralized applications.
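To see how a fee climbs past $20, it helps to write out the conversion from gas to dollars. The gas amount, gas price, and ETH price below are hypothetical inputs chosen for illustration, not market data.

```python
def fee_usd(gas_used: int, gas_price_gwei: float, eth_price_usd: float) -> float:
    """Transaction cost in USD: gas used x price per gas (gwei), converted to ETH, then USD."""
    fee_eth = gas_used * gas_price_gwei / 1e9   # 1 ETH = 1e9 gwei
    return fee_eth * eth_price_usd


# Hypothetical contract interaction using 150,000 gas with ETH at $3,000.
for gas_price in (5, 20, 50):
    cost = fee_usd(150_000, gas_price, 3_000)
    print(f"{gas_price:>3} gwei -> ${cost:.2f}")
```

The gas a transaction needs is roughly fixed by what it does, so the swing in cost comes almost entirely from the gas price, which rises when many users compete for block space.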

Many base-layer blockchain networks process fewer than 100 transactions per second, in stark contrast to legacy systems like Visa, which are built to handle tens of thousands. Although layer-2 solutions such as Arbitrum and zk-Rollups are addressing these scalability issues, broad adoption and full integration are still works in progress.

The complexity of Web3 applications poses a significant barrier to mass adoption. Managing wallets, private keys, gas fees, and bridging assets between chains can be intimidating even for tech-savvy users. For Web3 to become mainstream, platforms must prioritize intuitive interfaces and seamless user experiences, a challenge that the ecosystem continues to grapple with.

Governments worldwide are still defining regulatory frameworks for decentralized technologies. The fragmented and evolving legal landscape creates uncertainty for innovators and investors alike. Without clear guidelines, companies may hesitate to launch new services, and users may remain wary of engaging with decentralized platforms.

Blockchain technologies, especially those relying on proof-of-work consensus, have drawn criticism for their substantial energy consumption. This environmental impact conflicts with global sustainability goals, prompting debates about the ecological viability of a blockchain-based internet. Transitioning to more energy-efficient consensus mechanisms remains a priority for the community.

In this complex and rapidly evolving landscape, distinguishing genuine innovation from hype is crucial. Token Metrics offers a powerful AI-driven platform that analyzes over 6,000 crypto tokens daily, providing comprehensive market intelligence to evaluate which Web3 projects deliver real value.

Unlike traditional online platforms dominated by a small group of companies, Token Metrics empowers users with decentralized insights, reducing reliance on centralized authorities and supporting a more user-driven ecosystem.

By leveraging technical analysis, on-chain data, fundamental metrics, sentiment analysis, and social data, Token Metrics helps users identify projects with sustainable tokenomics and governance structures. Its dual scoring system—Trader Grade for short-term potential and Investor Grade for long-term viability—enables investors, developers, and business leaders to make informed decisions grounded in data rather than speculation.

Token Metrics tracks the maturity of various Web3 sectors, from DeFi protocols to enterprise blockchain solutions, helping users separate signal from noise in an ecosystem often clouded by hype and false narratives.

In 2025, the question “Is Web3 just a buzzword or is it real?” defies a simple yes-or-no answer. Web3 is neither a complete failure nor a fully realized vision; it is an evolving ecosystem showing clear progress alongside persistent challenges.

Web3 has been touted as the solution to all the things people dislike about the current internet, but the reality is more nuanced.

Web3 is not dead; it is maturing and shedding its earlier excesses of hype and get-rich-quick schemes. The vision of a fully decentralized internet remains a north star, but the community increasingly embraces pragmatic approaches.

Communities play a crucial role in Web3 by driving decentralized governance, fostering innovation, and enabling user participation through collective decision-making and user-created groups.

Rather than demanding all-or-nothing decentralization, most successful projects pursue “progressive decentralization,” balancing user control with practical considerations. This approach acknowledges that decentralization is a feature to be integrated thoughtfully—not an ideological mandate.

The debate over whether Web3 is real or just a buzzword presents a false dichotomy. In 2025, Web3 is both a real technological shift with tangible applications and an ecosystem still grappling with hype and speculation. James Grimmelmann, a Cornell University law and technology professor, has expressed skepticism about Web3's decentralization claims, highlighting ongoing concerns about centralization and data privacy.

We are witnessing Web3’s transition from a speculative fairy story to a building phase, where decentralized social networks, token-based economics, and user-generated content platforms are already reshaping digital interactions. The key lies in focusing on the fundamental value these technologies bring—digital ownership, security, and user empowerment—rather than being distracted by marketing buzzwords. The public's negative associations with Web3, including concerns about scams, gambling, and marketing gimmicks, continue to fuel skepticism and distrust regarding its true value and decentralization.

For businesses, developers, and individuals navigating this landscape, platforms like Token Metrics offer essential tools to separate genuine innovation from hype. The future of the internet will not be determined by maximalist visions or outright dismissal but by practical implementations that solve real problems.

The builders focused on identity, ownership, censorship resistance, and coordination are laying the foundation for a decentralized internet that benefits users collectively. Just as the internet evolved through cycles of boom and bust, so too will Web3. The critical question is not whether Web3 is real or hype, but how swiftly we can move beyond speculation toward sustainable value creation.


The blockchain revolution has evolved from a niche curiosity into a foundational element of modern digital infrastructure. As we move through 2025, the demand for skilled blockchain developers is skyrocketing, driven by the rapid expansion of blockchain technology across various sectors. Experts predict that the global blockchain technology market will reach an astounding USD 1,879.30 billion by 2034. Whether you are an aspiring blockchain developer or an experienced software engineer looking to transition into this dynamic field, understanding the core skills needed for blockchain development is essential to thrive in today’s competitive landscape.

Blockchain development involves creating and maintaining decentralized applications (DApps), blockchain protocols, and innovative blockchain solutions. This technology is revolutionizing industries ranging from finance and healthcare to supply chain management by offering secure, transparent, and immutable systems. In 2025, blockchain developers typically fall into two main categories:

Blockchain Core Developers focus on the foundational layers of blockchain technology. They design and build blockchain architecture, including consensus algorithms such as Proof of Work (PoW), Proof of Stake (PoS), and Proof of History (PoH). Their work ensures the security, scalability, and resilience of blockchain networks by managing blockchain nodes and maintaining network security. These core developers possess a thorough knowledge of distributed ledger technology, cryptographic principles, and network architecture.
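
To make the idea of a consensus algorithm more concrete, here is a minimal Proof of Work sketch in Python. It is a toy under stated assumptions, not how any production network mines blocks: the block is a plain string, the difficulty is a count of leading zero hex digits, and the names `mine` and `verify` are hypothetical helpers chosen for illustration.

```python
import hashlib


def digest_for(block_data: str, nonce: int) -> str:
    """Hash the block data together with a candidate nonce."""
    return hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Try nonces 0, 1, 2, ... until the digest has `difficulty` leading zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = digest_for(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a solution takes one hash, while finding it takes many."""
    return digest_for(block_data, nonce).startswith("0" * difficulty)


# Illustrative data only; real networks hash binary block headers.
nonce, digest = mine("alice pays bob 5", difficulty=3)
print(nonce, digest)
print(verify("alice pays bob 5", nonce, 3))
```

The asymmetry is the point: mining needs many hash attempts on average, while any node can confirm the result with a single hash. Real networks also adjust difficulty over time, which this sketch leaves out.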

On the other hand, Blockchain Software Developers leverage existing blockchain platforms and protocols to build decentralized applications and smart contracts. They specialize in smart contract development, integrating frontend web development with blockchain backends, and creating user-friendly decentralized apps (DApps). These developers work on blockchain applications that interact with blockchain transactions, digital assets, and decentralized exchanges, often utilizing APIs to connect blockchain services with traditional business processes.

Understanding this distinction is critical for identifying the core blockchain developer skills each role requires and for tailoring your learning path accordingly.

Mastering specific programming languages is a cornerstone of blockchain developer technical skills. Here are the top languages that every blockchain professional should consider:

Solidity reigns supreme in the blockchain space, especially for Ethereum-based development. Proposed by Gavin Wood in 2014, Solidity is a statically typed language specifically designed for writing smart contracts. Its syntax draws on C++, JavaScript, and Python, making it accessible for many software developers.

Solidity’s strong community support, extensive documentation, and widespread adoption make it the backbone of approximately 90% of smart contracts deployed today. Major decentralized finance (DeFi) platforms like Compound, Uniswap, and MakerDAO rely heavily on Solidity for their smart contract infrastructure. For any developer aiming to become a blockchain developer, proficiency in Solidity and smart contract logic is indispensable.

Rust is gaining significant traction in blockchain development due to its focus on memory safety and high performance. Rather than relying on garbage collection, Rust’s ownership model enforces memory safety at compile time without sacrificing speed, ruling out common programming pitfalls such as data races and memory corruption in safe code.

Rust is extensively used in cutting-edge blockchain platforms like Solana, NEAR, and Polkadot. Developers familiar with C++ will find Rust’s syntax approachable, while appreciating its enhanced security features. For blockchain core developers working on blockchain systems that demand speed and reliability, Rust is a top blockchain developer skill.

JavaScript remains a versatile and essential language, especially for integrating blockchain applications with traditional web interfaces. Its extensive ecosystem, including frameworks like Node.js, React.js, Angular, and Vue.js, enables developers to build responsive frontends and backend services that interact seamlessly with blockchain networks.

Libraries such as Web3.js and Ethers.js facilitate blockchain integration, allowing developers to manage blockchain transactions, interact with smart contracts, and maintain decentralized applications. JavaScript skills are vital for blockchain software developers aiming to create intuitive decentralized apps and blockchain services.

Python’s simplicity and versatility make it a popular choice for blockchain development, particularly for scripting, backend integration, and prototyping. Python is also the foundation for Vyper, a smart contract language designed to complement Solidity with a focus on security and simplicity.

Python’s easy-to-learn syntax and broad application in data science and machine learning make it a valuable skill for blockchain developers interested in emerging technologies and AI integration within blockchain solutions.

Go (Golang) is renowned for its user-friendliness, scalability, and speed, making it well suited to blockchain development. It powers prominent projects such as Go-Ethereum (Geth, one of the original and most widely used Ethereum clients), Hyperledger Fabric, and various DeFi protocols.

For blockchain developers focusing on enterprise blockchain applications and network architecture, Go offers the tools necessary to build efficient distributed systems and maintain decentralized networks.

While programming languages form the foundation, several other technical competencies are essential to excel in blockchain development.

Smart contracts are self-executing agreements coded directly into blockchain networks. Proficiency in creating smart contracts is central to blockchain development, which involves building automated, transparent, and secure agreements without intermediaries. Developers must master gas optimization to reduce transaction fees, apply security best practices to prevent vulnerabilities, and adopt rigorous testing and deployment strategies to ensure contract reliability.

A deep understanding of blockchain architecture is vital. This includes knowledge of consensus mechanisms such as PoW, PoS, and emerging alternatives, which govern how blockchain networks agree on the validity of transactions. Familiarity with cryptographic principles, including cryptographic hash functions, digital signatures, and public key cryptography, is necessary to secure blockchain transactions and digital assets.

Moreover, blockchain developers should understand how blockchain nodes communicate within distributed networks, the structure of data blocks, and how blockchain consensus ensures data integrity across decentralized systems.
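
The link between block structure and data integrity can be sketched in a few lines of Python. This is a simplified illustration, assuming a block holds only an index, the previous block's hash, and a list of transaction strings; the `Block`, `build_chain`, and `is_valid` names are hypothetical, and real blocks carry more fields (timestamps, Merkle roots, consensus data).

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    prev_hash: str
    transactions: list

    def hash(self) -> str:
        # Serialize deterministically so the same content gives the same hash.
        payload = json.dumps(
            {
                "index": self.index,
                "prev_hash": self.prev_hash,
                "transactions": self.transactions,
            },
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def build_chain(batches: list) -> list:
    """Create one block per batch, each pointing at the previous block's hash."""
    chain = []
    prev_hash = "0" * 64  # placeholder parent for the first block
    for index, transactions in enumerate(batches):
        block = Block(index, prev_hash, list(transactions))
        chain.append(block)
        prev_hash = block.hash()
    return chain


def is_valid(chain: list) -> bool:
    """Every block must reference the current hash of its predecessor."""
    return all(
        chain[i].prev_hash == chain[i - 1].hash() for i in range(1, len(chain))
    )


chain = build_chain([["genesis"], ["alice->bob:5"], ["bob->carol:2"]])
print(is_valid(chain))  # True

chain[1].transactions[0] = "alice->bob:500"  # tamper with history
print(is_valid(chain))  # False
```

Editing an earlier block changes its hash, so the next block's stored `prev_hash` no longer matches. Note that this check alone cannot detect tampering with the newest block; in practice nodes also compare their chain tip with their peers under the consensus rules.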

Modern blockchain applications require seamless integration between traditional web technologies and blockchain backends. Developers use libraries like Web3.js, Ethers.js, and Web3.py to connect decentralized apps with user-friendly interfaces. Skills in frontend frameworks (React.js, Angular, Vue.js), backend development (Node.js, Python), and API development are essential to build responsive and scalable blockchain applications that cater to diverse user needs.
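
Libraries like Web3.py wrap a node's JSON-RPC interface. As a rough sketch of what happens underneath, the Python below builds an `eth_getBalance` request and decodes the reply using only the standard library. The helper names and the sample response are assumptions for illustration, and no network call is made; in a real application you would send the payload to a node endpoint or simply use one of the libraries mentioned above.

```python
import json
from decimal import Decimal

WEI_PER_ETHER = 10**18


def build_get_balance_request(address: str, request_id: int = 1) -> str:
    """Build a JSON-RPC 2.0 payload asking for an account's latest balance."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "method": "eth_getBalance",
            "params": [address, "latest"],
            "id": request_id,
        }
    )


def parse_balance_response(raw: str) -> Decimal:
    """Decode the hex-encoded wei quantity in the response into ether."""
    body = json.loads(raw)
    if "error" in body:
        raise RuntimeError(body["error"].get("message", "unknown RPC error"))
    wei = int(body["result"], 16)
    return Decimal(wei) / Decimal(WEI_PER_ETHER)


# Placeholder address (all zeros) and a hand-written sample response.
request = build_get_balance_request("0x" + "00" * 20)
sample_response = '{"jsonrpc": "2.0", "id": 1, "result": "0xde0b6b3a7640000"}'

print(request)
print(parse_balance_response(sample_response))  # 1 ether, i.e. 10**18 wei
```

Keeping amounts as integers in wei and converting to ether only for display avoids floating-point rounding, which is why the sketch uses `int` and `Decimal` rather than `float`.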

Frameworks such as Hardhat and Truffle simplify blockchain development by providing comprehensive environments for compiling, testing, and deploying smart contracts. Hardhat is a flexible JavaScript-based framework favored for its powerful debugging capabilities, while Truffle offers an end-to-end development suite for scalable blockchain applications. For beginners, Remix IDE presents a browser-based environment ideal for learning and experimenting with smart contracts.

Security is paramount in blockchain development. Developers must rigorously test smart contracts using tools like Remix, Hardhat, and Truffle to simulate various scenarios and identify vulnerabilities. Adhering to security best practices, such as leveraging established libraries like OpenZeppelin, conducting professional security audits, and following industry-standard coding conventions, ensures that blockchain applications remain secure against attacks like reentrancy or denial-of-service.
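
A reentrancy attack is easier to reason about with a small model. The Python simulation below is not Solidity and not a real contract; it is a hedged sketch in which the hypothetical `VulnerableVault` pays out before updating its ledger, while `SafeVault` updates state first (the "checks-effects-interactions" ordering). All class names and amounts are invented for illustration.

```python
class VulnerableVault:
    """Pays the caller BEFORE zeroing their balance."""

    def __init__(self):
        self.balances = {}
        self.funds = 0

    def deposit(self, name: str, amount: int) -> None:
        self.balances[name] = self.balances.get(name, 0) + amount
        self.funds += amount

    def withdraw(self, account) -> None:
        amount = self.balances.get(account.name, 0)
        if amount == 0 or self.funds < amount:
            return
        self.funds -= amount
        account.receive(self, amount)    # external call happens first
        self.balances[account.name] = 0  # ledger update arrives too late


class SafeVault(VulnerableVault):
    """Updates the ledger BEFORE making the external call."""

    def withdraw(self, account) -> None:
        amount = self.balances.get(account.name, 0)
        if amount == 0 or self.funds < amount:
            return
        self.balances[account.name] = 0  # effect first
        self.funds -= amount
        account.receive(self, amount)    # interaction last


class Attacker:
    name = "attacker"

    def __init__(self):
        self.received = 0

    def receive(self, vault, amount: int) -> None:
        self.received += amount
        vault.withdraw(self)  # re-enter while the first withdraw is still running


def simulate(vault) -> tuple[int, int]:
    """Return (amount the attacker received, funds left in the vault)."""
    vault.deposit("honest_user", 90)
    attacker = Attacker()
    vault.deposit(attacker.name, 10)
    vault.withdraw(attacker)
    return attacker.received, vault.funds


print(simulate(VulnerableVault()))  # (100, 0): a 10-unit deposit drains everything
print(simulate(SafeVault()))        # (10, 90): only the attacker's own deposit leaves
```

In Solidity the same ordering rule applies, and audited building blocks such as OpenZeppelin's reentrancy guard add a second layer of defense.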

Proficiency across different blockchain platforms is crucial. Ethereum remains the most popular blockchain development platform, supported by a vast ecosystem and extensive developer resources. Layer 2 solutions such as Polygon, Arbitrum, and Optimism address Ethereum’s scalability challenges, while alternative blockchains like Solana, BNB Smart Chain (formerly Binance Smart Chain), and Avalanche cater to specific use cases with unique performance attributes.

Enterprise-focused platforms like Hyperledger Fabric offer permissioned blockchain networks tailored for business applications, emphasizing privacy and compliance. Understanding these platforms enables developers to select the appropriate blockchain ecosystem for their projects.

Beyond technical skills, understanding token economics and market dynamics is increasingly important for blockchain developers. Token Metrics is an invaluable platform offering AI-powered analytics that help developers navigate the cryptocurrency market and make informed technical decisions.

Token Metrics equips developers with market intelligence by analyzing over 6,000 tokens daily, providing insights into which blockchain platforms and projects are gaining traction. This knowledge aids developers in selecting technologies and designing blockchain solutions aligned with market trends.

The platform’s comprehensive analysis of token economics supports developers working on DeFi protocols, decentralized finance applications, and tokenized ecosystems. Real-time performance tracking and sentiment analysis help developers evaluate project viability, guiding architecture choices and consensus mechanism implementations.

By using Token Metrics, blockchain professionals can build expertise that combines technical proficiency with market awareness—an increasingly sought-after combination in the blockchain industry. The platform’s scoring systems teach developers to evaluate projects systematically, a skill crucial for leadership roles.

Staying current with emerging trends through Token Metrics’ real-time alerts helps developers remain at the forefront of the field and build solutions that meet evolving market demands.

The blockchain space is rapidly evolving, with new languages like Move, Cadence, Cairo, and Ligo addressing challenges related to scalability, security, and usability. Integration with AI and machine learning technologies is becoming more prevalent, enhancing blockchain applications’ capabilities.

Cross-chain development skills, including knowledge of interoperability protocols and bridges, are essential as decentralized networks become more interconnected. Privacy-preserving technologies like zero-knowledge proofs (zk-SNARKs and zk-STARKs) are critical for developing secure, confidential blockchain applications.

Efficient transaction processing and scalability are vital for blockchain usability. Developers must master gas optimization techniques to minimize transaction fees and implement scalability solutions such as Layer 2 protocols and sidechains. Load testing ensures that blockchain applications can handle high volumes of transactions without compromising performance.
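
To see why gas optimization matters, it helps to work the fee arithmetic. The sketch below assumes Ethereum's post-EIP-1559 model, where the fee paid is the gas used multiplied by the sum of the base fee and the priority fee. The 21,000 gas figure is the standard cost of a plain ETH transfer; the fee levels and the 120,000 versus 80,000 gas contract call are hypothetical numbers picked for illustration.

```python
from decimal import Decimal

WEI_PER_GWEI = 10**9
WEI_PER_ETHER = 10**18


def transaction_fee_wei(gas_used: int, base_fee_gwei: int, priority_fee_gwei: int) -> int:
    """Fee = gas used * (base fee + priority fee), computed in integer wei."""
    return gas_used * (base_fee_gwei + priority_fee_gwei) * WEI_PER_GWEI


def wei_to_ether(wei: int) -> Decimal:
    """Convert for display only; keep calculations in integer wei."""
    return Decimal(wei) / Decimal(WEI_PER_ETHER)


BASE_FEE_GWEI = 20     # hypothetical network conditions
PRIORITY_FEE_GWEI = 2  # hypothetical tip

# A plain ETH transfer costs 21,000 gas.
transfer_fee = transaction_fee_wei(21_000, BASE_FEE_GWEI, PRIORITY_FEE_GWEI)
print(wei_to_ether(transfer_fee))  # 0.000462

# Hypothetical contract call before and after optimization.
before = transaction_fee_wei(120_000, BASE_FEE_GWEI, PRIORITY_FEE_GWEI)
after = transaction_fee_wei(80_000, BASE_FEE_GWEI, PRIORITY_FEE_GWEI)
print(wei_to_ether(before - after))  # 0.00088 saved per call
```

The saving looks small per call, but it is paid by every user on every transaction, so it scales with usage. The same arithmetic explains the appeal of Layer 2 protocols, which spread the cost of posting data to the main chain across many transactions.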

Security remains a top priority. Developers should acquire skills in code review methodologies, vulnerability assessments, penetration testing, and formal verification to audit smart contracts thoroughly. Choosing programming languages with strong typing and memory safety features helps prevent common security threats, reinforcing blockchain security.

To become a proficient blockchain developer, a structured learning path is beneficial:

Phase 1: Foundation (2-3 months)

Start by mastering a core programming language such as Python or JavaScript. Build a solid understanding of blockchain fundamentals and cryptographic concepts. Utilize platforms like Token Metrics to gain insights into market dynamics.

Phase 2: Specialization (4-6 months)

Learn Solidity and focus on smart contract development. Gain hands-on experience with development frameworks like Hardhat or Truffle. Build and deploy simple decentralized applications on testnets to apply your knowledge practically.

Phase 3: Advanced Development (6-12 months)

Delve into advanced topics such as Layer 2 solutions, cross-chain interoperability, and blockchain consensus algorithms. Contribute to open-source blockchain projects and develop expertise in specific blockchain ecosystems to build a robust portfolio.

A strong portfolio is essential for showcasing your blockchain developer skills. Include smart contracts with clean, audited code, full-stack DApps featuring intuitive user interfaces, and contributions to open-source blockchain projects. Demonstrate your understanding of token economics and market dynamics to highlight your comprehensive blockchain expertise.

The blockchain development landscape in 2025 offers unprecedented opportunities fueled by exponential market growth. The expanding blockchain industry creates demand across multiple sectors.

As blockchain technology continues to integrate with emerging technologies, blockchain professionals with a blend of technical and soft skills will be pivotal in driving the future of decentralized solutions.

In 2025, the core skills needed for blockchain development extend beyond programming. They encompass a thorough understanding of blockchain architecture, cryptographic principles, smart contract development, and seamless web integration. Equally important are market awareness, token economics knowledge, and the ability to evaluate projects critically.

Platforms like Token Metrics provide indispensable market intelligence that complements technical skills, empowering developers to make informed decisions and create innovative blockchain applications. The blockchain revolution is still unfolding, and developers who combine technical proficiency with market insight will lead the next wave of innovation.

Start your journey today by mastering the fundamentals, leveraging professional tools, and building projects that demonstrate both your technical competence and market understanding. The future of decentralized technology depends on blockchain developers equipped with the right skills to build secure, scalable, and transformative blockchain systems.
